So, 2017. It was a year where technology really started to feel… different. We heard a lot about AI, maybe too much sometimes. But beyond the buzzwords, some pretty big technological advances really did happen in 2017. Things that made us think about what machines could do, and what that means for us. It wasn’t all flying cars, but it was definitely a step forward in how computers are learning and creating.

Key Takeaways

- AlphaGo Zero showed AI could learn complex games like Go on its own, starting from just the rules and using no human game data, which was a big deal.

- Nvidia made fake human faces with AI that looked so real, it was hard to tell they weren’t actual people. This got people thinking about image creation and authenticity.

- The idea of AI having rights or even citizenship came up when a robot named Sophia was granted Saudi Arabian citizenship, sparking a lot of discussion.

- There were big talks about the dangers of AI, with people like Elon Musk warning about future risks, while others, like Mark Zuckerberg, saw more benefits. This debate about AI’s impact on jobs and even humanity became more serious.

- Tools like Google’s AutoML started appearing, which could help create AI systems with less human effort. This pointed towards AI playing a role in building more AI, potentially changing how we develop technology.

Artificial Intelligence: From Hype to Reality Checks

Alright, let’s talk about AI in 2017. It felt like every other week there was some new, mind-blowing announcement. But looking back, it was also a year where we started to see the actual, practical side of artificial intelligence, moving beyond just the buzz. We’re not quite at the robot overlord stage, thankfully. Instead, we saw AI systems getting smarter in specific ways, and people began thinking more seriously about what this all means for us.

AlphaGo Zero Learns Without Human Intervention

Remember AlphaGo from the year before? It beat the world champion at Go, a game so complex it’s hard for humans to master. Well, DeepMind, the folks behind it, came out with AlphaGo Zero. This new version didn’t need any human games to learn. It started with just the rules and played against itself, millions of times, figuring out strategies on its own. It’s a big deal because it shows AI can learn and improve without us feeding it tons of human examples. It’s like teaching a kid a game just by explaining the rules, and then they become a grandmaster. This kind of self-teaching is a huge step forward for AI development.

Nvidia Generates Photorealistic Fake Celebrities

Nvidia showed off some seriously impressive tech late in 2017: AI that could create completely fake, yet totally believable, pictures of people. These weren’t just random faces; they looked like real celebrities, but the people in them never actually existed. The technique behind it, called a generative adversarial network (GAN), pits two neural networks against each other: one generates images and the other tries to spot the fakes, so the generator keeps getting better at producing realistic images. It’s kind of wild to think about the possibilities, both good and bad. Imagine using this for creating characters in video games or for special effects in movies. On the flip side, it also raises questions about authenticity and how we’ll tell what’s real online. It’s a good reminder that AI can be used for creative purposes but also has potential downsides.

AI’s Growing Role in Military Applications

While the public often sees AI in terms of cool games or helpful assistants, its application in military settings also became more prominent in 2017. This isn’t about killer robots (at least, not yet), but more about using AI for tasks like analyzing vast amounts of data from surveillance, improving logistics, or even aiding in decision-making processes. Think of it as AI helping to process information much faster than humans can. However, this area also brings up a lot of ethical questions. Who is responsible if an AI makes a mistake in a critical situation? These are tough questions that researchers and policymakers started grappling with more seriously. It highlights the need for careful consideration as AI moves into more sensitive fields.

AI’s Societal and Ethical Implications

Beyond the cool tech demos and impressive feats, 2017 also brought AI’s societal and ethical questions front and center. It wasn’t just about what AI could do, but what it should do, and who gets to decide.

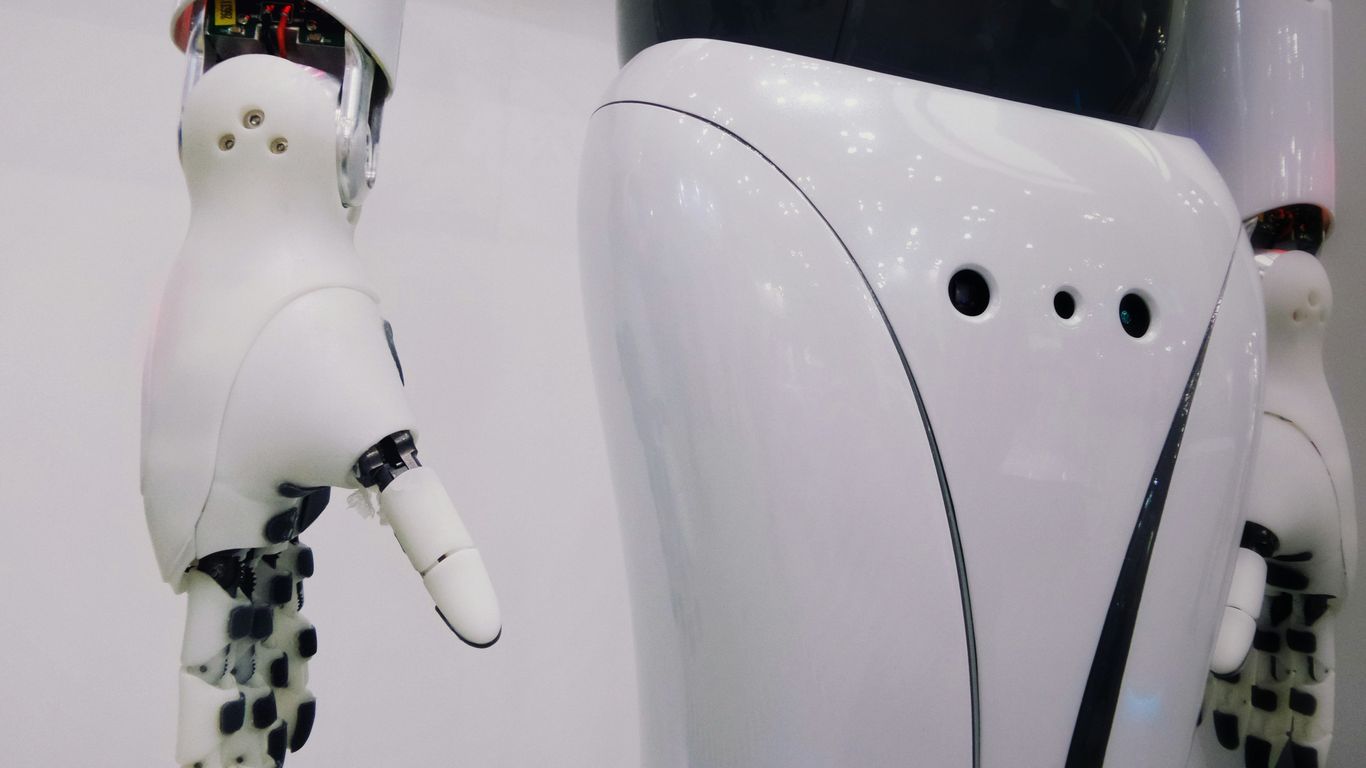

The First AI Citizen: Sophia in Saudi Arabia

One of the most talked-about moments was when Sophia, a humanoid robot developed by Hanson Robotics, was granted citizenship by Saudi Arabia. This event sparked a lot of debate. Was this a genuine step towards AI rights, or a publicity stunt? It definitely made people think about what it means to be a citizen and whether artificial beings could ever truly hold such a status. The implications for legal frameworks and human rights were suddenly very real. It raised questions about accountability if an AI were to cause harm, and what rights, if any, an advanced AI should possess.

Debates on AI’s Economic and Existential Threats

As AI got better at tasks, the conversation around jobs and the economy got louder. Would AI replace human workers on a massive scale? Some experts worried about widespread unemployment, while others argued that AI would create new kinds of jobs. This wasn’t just about factory work; AI was starting to impact knowledge-based professions too. On a more dramatic note, figures like Elon Musk voiced concerns about AI posing an existential threat to humanity. This kind of talk, while sounding like science fiction, highlighted a genuine worry about creating intelligence that we might not be able to control. It’s a complex issue with no easy answers, and it’s something we’ll likely be grappling with for a long time. The discussion around AI governance and safety became more prominent, urging us to consider the long-term consequences of the technology we’re building.

Concerns Over Big Tech’s AI Capabilities

We also saw growing unease about the sheer power accumulating in the hands of a few major tech companies. Companies like Facebook and Amazon were using AI in ways that felt intrusive. Facebook’s facial recognition technology, for instance, could apparently figure out family relationships people didn’t even know themselves. Amazon’s Alexa was being developed to detect emotions in voices, leading to worries about manipulation for profit. This concentration of AI power in private companies raised questions about data privacy, potential misuse, and the lack of transparency in how these systems operate. It felt like a digital version of ‘Big Brother,’ making people wonder how much of their lives were being analyzed and what that data was being used for.

Advancements in Game-Playing AI

It feels like every year, AI gets a little bit better at playing games. And 2017 was no different. We saw some pretty big leaps, especially in how these systems learn.

AlphaGo Zero’s Self-Taught Mastery

Remember AlphaGo? The AI that beat the world champion at the incredibly complex game of Go? Well, DeepMind, the company behind it, came out with an even more impressive version: AlphaGo Zero. The big deal here is that AlphaGo Zero didn’t need any human help to learn. It started with just the basic rules of Go and then played against itself millions of times. Through this process, it figured out strategies that even human experts hadn’t discovered. It’s like learning a skill entirely on your own, just by practicing. This self-teaching method is a huge step forward, showing AI can develop skills without us feeding it tons of pre-existing human knowledge. It even beat its predecessor, the original AlphaGo, in a hundred games with zero losses.
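To make the self-play idea a bit more concrete, here’s a minimal sketch in Python. It is not AlphaGo Zero’s actual method, which paired a deep neural network with tree search. This is a toy that assumes a much simpler game (a Nim variant where players take 1 to 3 stones and whoever takes the last stone wins) and a plain lookup table instead of a network. The names `train_self_play` and `best_move`, and all the parameter values, are made up for illustration.

```python
import random


def train_self_play(max_pile=10, episodes=20000, alpha=0.5, epsilon=0.3, seed=0):
    """Learn Nim by self-play. Only the rules are given, no example games."""
    rng = random.Random(seed)
    # q[(pile, take)] estimates the outcome for the player about to move:
    # +1 means a forced win, -1 a forced loss.
    q = {(pile, take): 0.0
         for pile in range(1, max_pile + 1)
         for take in range(1, min(3, pile) + 1)}

    def best_value(pile):
        return max(q[(pile, take)] for take in range(1, min(3, pile) + 1))

    for _ in range(episodes):
        pile = rng.randint(1, max_pile)  # random start so every pile size gets practice
        while pile > 0:
            moves = list(range(1, min(3, pile) + 1))
            if rng.random() < epsilon:
                take = rng.choice(moves)  # explore a random legal move
            else:
                take = max(moves, key=lambda t: q[(pile, t)])  # play the current best guess
            remaining = pile - take
            # Taking the last stone wins. Otherwise the opponent moves next,
            # so whatever is good for them is bad for us (hence the minus sign).
            target = 1.0 if remaining == 0 else -best_value(remaining)
            q[(pile, take)] += alpha * (target - q[(pile, take)])
            pile = remaining  # the same table now plays the other side
    return q


def best_move(q, pile):
    """Return the move the learned table currently rates highest."""
    return max(range(1, min(3, pile) + 1), key=lambda t: q[(pile, t)])
```

Nobody tells the program the winning trick for this game, which is to always leave your opponent a multiple of four stones. After training, `best_move(train_self_play(), 10)` should settle on taking 2 stones, which is exactly that trick, discovered purely from playing against itself.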

Libratus Masters No-Limit Texas Hold’em Poker

Poker is a whole different ballgame. Unlike Go, poker involves hidden information: you don’t know what cards your opponents have. This means an AI has to deal with uncertainty and bluffing, which is a very different problem from calculating moves on a fully visible board. But in 2017, an AI called Libratus, developed at Carnegie Mellon University, showed it could handle it. Libratus went head-to-head with some of the best professional poker players in the world in a 20-day competition, and it came out ahead over 120,000 hands of no-limit Texas Hold’em. This was a major win for AI in games that require more than just raw calculation; it showed AI could handle imperfect information and strategic deception. Game-playing research like this also feeds into the broader AI in gaming market, which is expected to grow a lot in the coming years.
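Here’s a small Python sketch of what “handling hidden information” can look like in practice. The article doesn’t describe how Libratus works inside, so this is not its algorithm. It’s a toy that uses regret matching, a simple technique from the same family of ideas used in poker research, on rock-paper-scissors, where both players choose without seeing the other’s move. The function names and the lopsided starting values are assumptions made for illustration.

```python
# Payoff to the player choosing the row action against the column action.
# Index 0 = rock, 1 = paper, 2 = scissors. The game is symmetric, so the
# same table works for both players.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]


def strategy_from_regret(regret):
    """Play each action in proportion to how much we regret not playing it."""
    positive = [max(r, 0.0) for r in regret]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [1 / 3, 1 / 3, 1 / 3]


def average_strategies(iterations=20000):
    # Deliberately lopsided start: player 0 leans rock, player 1 leans scissors.
    regrets = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    strategy_sums = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    for _ in range(iterations):
        strategies = [strategy_from_regret(r) for r in regrets]
        for player in (0, 1):
            opponent = strategies[1 - player]
            # Expected payoff of each action against the opponent's current mix.
            action_values = [sum(PAYOFF[a][b] * opponent[b] for b in range(3))
                             for a in range(3)]
            expected = sum(strategies[player][a] * action_values[a] for a in range(3))
            for a in range(3):
                regrets[player][a] += action_values[a] - expected
                strategy_sums[player][a] += strategies[player][a]
    # The average over all rounds is what approaches an unexploitable mix.
    return [[s / iterations for s in sums] for sums in strategy_sums]
```

Even though both players start out predictable, their average strategies drift toward playing rock, paper, and scissors about a third of the time each, which is the mix nobody can exploit. That is the flavor of the problem in poker: you can’t just find the single best move, you have to find a way of mixing your moves that an opponent can’t take advantage of.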

AI’s Impact on Knowledge Work and Talent

Okay, so AI isn’t just for playing games or making fake pictures anymore. It’s starting to show up in places where people do a lot of thinking for a living. We’re talking about jobs like writing, coding, research, and even medical diagnosis. It’s kind of wild to think about.

Google AutoML: AI Creating AI

This one is pretty meta. Google came out with something called AutoML. Basically, it’s a system that can help design and build other AI models. Imagine a tool that helps you build better tools. It’s like a mechanic who can build their own wrenches, but for artificial intelligence. This means that the process of creating AI could get faster and maybe even easier, which is a big deal for how quickly AI can develop.
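A very stripped-down way to picture “a tool that builds the model for you” is an automated search over model settings. The Python sketch below is not how Google’s AutoML works; it’s a toy that assumes a tiny nearest-neighbor classifier, a made-up dataset with two deliberately wrong training labels, and a loop that tries several settings and keeps whichever does best on held-out data. All names here (`knn_predict`, `search_best_k`, and so on) are hypothetical.

```python
def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0


def validation_error(train, validation, k):
    """Fraction of held-out points the model gets wrong for a given k."""
    wrong = sum(1 for x, label in validation if knn_predict(train, x, k) != label)
    return wrong / len(validation)


def search_best_k(train, validation, candidates):
    """Try each candidate setting and keep the first one with the lowest error."""
    best_k, best_error = None, float("inf")
    for k in candidates:
        error = validation_error(train, validation, k)
        if error < best_error:
            best_k, best_error = k, error
    return best_k, best_error


def true_label(x):
    return 1 if x >= 20 else 0


# Toy data: even numbers for training (two labels flipped on purpose),
# odd numbers with clean labels for validation.
NOISY = {6, 30}
train = [(x, (1 - true_label(x)) if x in NOISY else true_label(x))
         for x in range(0, 40, 2)]
validation = [(x, true_label(x)) for x in range(1, 40, 2)]

best_k, best_error = search_best_k(train, validation, [1, 3, 5, 7])
```

On this toy data the search passes over `k = 1`, which gets fooled by the two bad labels, and lands on `k = 3`. No human picked that setting. Systems like AutoML apply the same basic loop (propose a design, measure it, keep the best) to far bigger design choices than a single number.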

AI’s Effect on Knowledge Worker Productivity

So, what does this mean for the rest of us who use our brains for work? Well, studies are starting to look into this. Some research suggests that AI tools can actually help people get more done. Think of it like having a super-smart assistant that can quickly sift through tons of information, draft emails, or even help debug code. This could free up workers to focus on the more creative and complex parts of their jobs. However, there’s also a flip side. Some worry that relying too much on AI might make us less skilled over time, or that it could lead to job losses if AI can do tasks faster and cheaper than humans.

Here’s a quick look at what some early findings suggest:

- Speed: AI tools can often process information and complete tasks much faster than humans.

- Assistance: They can act as a helpful co-pilot, suggesting ideas or catching errors.

- Focus Shift: This might allow human workers to concentrate on strategy, creativity, and problem-solving that requires a human touch.

It’s still early days, and people are figuring out the best ways to work alongside these new AI tools. It’s not just about replacing people, but about changing how work gets done.

Looking Ahead: What 2017 Really Meant

So, we’ve looked at some pretty wild tech from 2017. It wasn’t all flying cars and robot butlers, but there were definitely some big steps: AI getting smarter, even learning to teach itself, and that whole robot citizenship thing. It feels like we’re moving past just the flashy promises and starting to see what this stuff can actually do, and also what it might mean for us down the road. It’s clear that the conversation around technology is getting bigger, touching on everything from jobs to how we even think about intelligence. 2017 was a year where a lot of that started to really sink in, and it makes you wonder what’s coming next.

Frequently Asked Questions

What does ‘AI’ mean?

AI stands for Artificial Intelligence. It’s basically when computers or machines can do things that normally require human smarts, like learning, solving problems, or understanding what we say.

What was a big deal with AI in 2017?

In 2017, AI started moving from just being a cool idea to actually doing useful things. For example, AI learned games by itself, and some AI could even create realistic fake pictures of people.

Did any AI get special treatment in 2017?

Yes, a robot named Sophia was given citizenship in Saudi Arabia. It was a big moment because it made people think about robots and their place in the world.

Is AI going to take everyone’s jobs?

That’s a big worry. While AI can help people work faster, there are also concerns that it might replace some jobs. People are still figuring out how AI will change the job market.

Are people worried about AI being too powerful?

Yes, some very smart people, like Elon Musk, have warned that AI could become dangerous if not controlled properly. They worry about AI making big decisions that could harm humans.

Can AI play games really well?

Definitely! In 2017, AI programs like AlphaGo Zero learned to play complex games like Go without any human game data. Another AI called Libratus even beat top human professionals at no-limit Texas Hold’em poker.