Navigating the Hype of Simulation 2024

Alright, let’s talk about Simulation 2024. It feels like everywhere you turn, there’s talk of AI changing the game. And sure, it can do some pretty amazing things. But it’s easy to get caught up in the excitement without thinking things through, and to buy into the ‘AI is the answer to everything’ narrative. That’s rarely the full picture.

Understanding the Core of AI

At its heart, AI, especially the generative kind we’re hearing so much about, is a tool. It’s really good at processing information, spotting patterns, and creating new content based on what it’s learned. Think of it like a super-fast assistant that can churn out drafts, summarize long documents, or even create realistic-sounding text and images. It’s fantastic for tasks that are repetitive or require sifting through massive amounts of data. But it doesn’t ‘understand’ in the way a human does. It doesn’t have intuition, personal experience, or the ability to truly grasp complex social dynamics.

Distinguishing Hype from Reality

So, how do we tell what’s real from what’s just noise? A lot of the buzz around AI in simulations is about its potential to speed things up. One example is creating realistic scenarios for training exercises: instead of spending days writing fake emails or social media posts for a cyber-attack drill, you can have AI generate them in minutes. That’s a huge time saver. AI can also help analyze threat intelligence reports, pulling out the key points so your team can focus on strategy. But here’s the thing: AI can’t replicate the messy, unpredictable nature of real-world human interaction under pressure. It can’t simulate the friction between different teams, the non-verbal cues in a tense meeting, or the gut feelings that experienced professionals rely on. Those are human skills, built through practice.

Strategic AI Adoption Frameworks

Instead of just jumping on the AI bandwagon, we need a plan. It’s about being smart with where and why we use AI. Think of it as a ‘force multiplier’ – something that makes your existing efforts stronger, not a replacement for everything. A good framework looks at:

- Where AI excels: Automating content creation for exercises, summarizing threat data, drafting reports, scripting basic scenarios.

- Where humans are irreplaceable: Simulating complex team dynamics, developing critical judgment, building muscle memory through realistic drills, making decisions when plans fall apart.

- How to measure success: Moving beyond just checking boxes to actually measuring performance and proving capability, using AI to help analyze that data.

We need to be selective. Use AI where it genuinely saves time and resources, freeing up people to focus on the human elements that AI just can’t touch. It’s about building a program that uses AI smartly, not just because it’s the latest trend.

AI as a Force Multiplier in Training

It’s easy to get caught up in the buzz around AI, but when it comes to training, it’s not about replacing people; it’s about making them better, faster, and more efficient. Think of AI not as a magic wand, but as a really smart assistant that can handle the grunt work, freeing up your human experts for the stuff that really matters. AI can significantly speed up the creation of training materials and streamline complex analysis tasks.

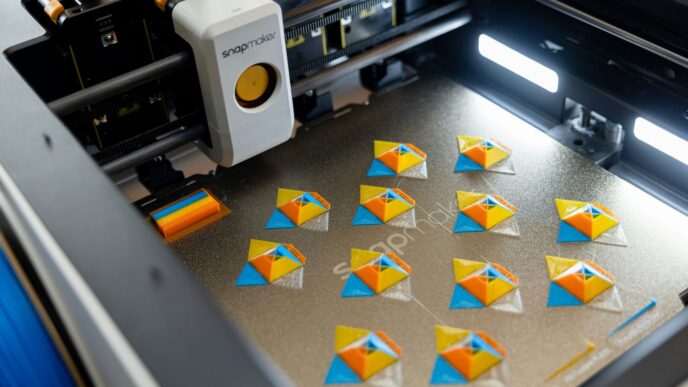

Accelerating Content Generation for Exercises

Remember spending ages crafting realistic phishing emails or fake social media profiles for a simulation? AI can whip those up in a fraction of the time. This isn’t just about saving a few hours; it’s about allowing your training developers to focus on the scenario design and learning objectives, rather than getting bogged down in the details of content creation. It means more varied and up-to-date exercises, without a proportional increase in workload.

Streamlining Threat Intelligence Analysis

Digesting mountains of threat intelligence reports can be a real headache. AI can sift through this data, summarize key findings, and highlight relevant threats that should inform your training scenarios. This helps ensure your training is always aligned with the latest real-world risks, making it more relevant and impactful. Instead of just reading reports, your team can quickly grasp the actionable intelligence needed to build effective training.
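Full summarization needs a language model, but a simpler first pass is often useful on its own: pulling the structured identifiers out of report text so the scenario team can see at a glance which vulnerabilities and techniques keep coming up. This sketch swaps in plain regular expressions rather than AI, and it assumes the reports cite CVE IDs (like `CVE-2023-23397`) and MITRE ATT&CK technique IDs (like `T1566.001`) in their standard formats. The sample report text is made up.

```python
import re
from collections import Counter

# CVE IDs: "CVE-", a 4-digit year, then 4 or more digits.
CVE_RE = re.compile(r"\bCVE-\d{4}-\d{4,}\b")
# ATT&CK technique IDs: "T" + 4 digits, optionally ".NNN" for a sub-technique.
ATTACK_RE = re.compile(r"\bT\d{4}(?:\.\d{3})?\b")


def extract_indicators(report: str) -> dict[str, Counter]:
    """Count how often each CVE and ATT&CK technique ID appears in a report."""
    return {
        "cves": Counter(CVE_RE.findall(report)),
        "techniques": Counter(ATTACK_RE.findall(report)),
    }


report = (
    "The actor gained access via spearphishing attachments (T1566.001), "
    "then exploited CVE-2023-23397 for privilege escalation. "
    "Later stages again relied on T1566.001 and on valid accounts (T1078)."
)
print(extract_indicators(report))
```

Techniques that show up across many reports are natural candidates for the next round of exercise injects.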

Automating Report Generation and Scripting

After a training exercise, the last thing anyone wants to do is write a lengthy report. AI can automate much of this process, drafting initial reports based on exercise data. It can also help script dynamic, branching scenarios for tabletop exercises, making them more engaging and responsive to participant actions. This automation means quicker feedback loops and more time for debriefs and actual learning.
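A branching tabletop scenario is, at bottom, a small directed graph: each inject presents a situation, and the participants’ choice selects the next inject. The sketch below assumes a hypothetical scenario format (the `Inject` class, the inject IDs, and the choice labels are illustrative, not taken from any particular tool). Whether a person or an AI drafts the text of each inject, the branching structure looks the same.

```python
from dataclasses import dataclass, field


@dataclass
class Inject:
    """One step of a hypothetical tabletop scenario."""
    text: str
    # Maps a participant choice label to the ID of the next inject.
    options: dict[str, str] = field(default_factory=dict)


SCENARIO: dict[str, Inject] = {
    "start": Inject(
        "SOC reports unusual outbound traffic from a finance server.",
        {"isolate": "isolated", "monitor": "exfil"},
    ),
    "isolated": Inject("Server isolated. Finance escalates: the payroll run is blocked."),
    "exfil": Inject("Data appears on a leak site. Legal and comms need a statement."),
}


def run_path(scenario: dict[str, Inject], choices: list[str], start: str = "start") -> list[str]:
    """Walk the scenario with a fixed list of choices; return the inject IDs visited."""
    current = start
    visited = [current]
    for choice in choices:
        options = scenario[current].options
        if choice not in options:
            raise ValueError(f"{choice!r} is not an option at inject {current!r}")
        current = options[choice]
        visited.append(current)
    return visited


if __name__ == "__main__":
    for inject_id in run_path(SCENARIO, ["monitor"]):
        print(inject_id, "-", SCENARIO[inject_id].text)
```

Keeping the structure explicit like this also makes it easy to check, before the exercise, that every choice leads somewhere.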

The Irreplaceable Human Element in Readiness

Look, AI is pretty neat for a lot of things, and we’ll talk more about that. But when it comes to actual readiness, especially in high-stakes situations like cybersecurity, we can’t forget about the people involved. AI can’t replicate the messy, unpredictable, and intensely human experience of a real crisis.

Simulating Complex Human Interactions

Think about a major incident. It’s not just about following a checklist. You’ve got different teams – legal, IT, communications – all with their own priorities and pressures. There’s friction, there’s miscommunication, there are subtle cues in a room that tell you if people are losing faith. Can an AI really simulate that? Can it capture the look on someone’s face when they’re stressed, or the way a team leader tries to keep everyone calm under fire? Probably not. These are the kinds of interactions that build real teamwork and resilience, and they happen between people.

Developing Critical Judgment Under Pressure

When things go sideways, and they will go sideways, plans often fall apart. That’s when people have to think on their feet. They need to make tough calls with incomplete information, often when everyone is watching and the clock is ticking. AI can present scenarios, sure, but it can’t teach that gut feeling, that ability to weigh risks and make a judgment when the textbook answer isn’t clear. That kind of decision-making is honed through practice, through making mistakes in a safe environment and learning from them.

Building Muscle Memory Through Realistic Drills

This is where the rubber meets the road. You can read all you want about how to fight a fire, but until you’re actually holding the hose, feeling the heat, and working with a team, you don’t truly know what to do. Realistic drills, the kind that mimic the chaos and pressure of a real event, are how teams build that automatic response – that muscle memory. It’s about getting so familiar with the procedures and the team dynamics that you can act effectively even when your brain is overloaded. AI can help set up these drills, but it can’t replace the experience of going through them.

Measuring True Readiness with Data

Look, we all hear about how AI is going to change everything, and yes, it can do some useful things for training, like whipping up practice scenarios or summarizing threat reports far faster than a person could. But when it comes to knowing whether your team is actually ready for a real-world mess, tracking how many training modules people clicked through isn’t enough. We need to get smarter about how we measure what matters.

Shifting from Completion Rates to Performance Metrics

Think about it. Did someone finish a "cyber incident response" course? Great. But did they actually perform well when put on the spot? That’s the real question. We’re talking about moving beyond just ticking boxes to seeing how people perform under pressure. It’s about what they can do, not just what they’ve seen or read.

Leveraging AI for Evidence-Based Measurement

This is where AI can actually help us measure the human stuff. Instead of only using AI to create training, we can use it to analyze how people perform in realistic drills. It can crunch the numbers from those high-stress exercises, looking at things like communication breakdowns, decision-making speed, and how well teams work together. That gives us concrete evidence of capability, not just a feeling that we’re prepared.

Here’s a quick look at what we should be tracking:

- Decision Speed: How quickly do individuals and teams make critical choices during simulated crises?

- Communication Effectiveness: Are messages clear, timely, and understood by the right people?

- Adaptability: How well do teams adjust their plans when unexpected events occur?

- Task Execution Accuracy: Are critical tasks performed correctly under duress?
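To make two of these metrics concrete, here is a minimal sketch that computes decision speed and task execution accuracy from a drill’s event log. The log format (a `DrillEvent` with a timestamp in seconds, a kind of `inject`, `decision`, or `task`, and a pass/fail flag on tasks) is an assumption for illustration; real exercise platforms record different fields. Communication effectiveness and adaptability are harder to reduce to a single number and usually need observer ratings alongside the logs.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class DrillEvent:
    t: float                        # seconds since the exercise started
    kind: str                       # "inject", "decision", or "task" (assumed vocabulary)
    inject_id: str                  # which scenario inject the event relates to
    correct: Optional[bool] = None  # set only on graded "task" events


def decision_latencies(events: list[DrillEvent]) -> list[float]:
    """Seconds from each inject to the first decision recorded against it."""
    inject_times: dict[str, float] = {}
    latencies: list[float] = []
    for ev in sorted(events, key=lambda x: x.t):
        if ev.kind == "inject":
            inject_times[ev.inject_id] = ev.t
        elif ev.kind == "decision" and ev.inject_id in inject_times:
            # pop() so that only the first decision per inject is counted
            latencies.append(ev.t - inject_times.pop(ev.inject_id))
    return latencies


def task_accuracy(events: list[DrillEvent]) -> float:
    """Fraction of graded task events that were performed correctly."""
    graded = [ev.correct for ev in events if ev.kind == "task" and ev.correct is not None]
    return sum(graded) / len(graded) if graded else float("nan")


# Made-up log from a single drill.
log = [
    DrillEvent(0, "inject", "I1"),
    DrillEvent(240, "decision", "I1"),
    DrillEvent(300, "inject", "I2"),
    DrillEvent(420, "decision", "I2"),
    DrillEvent(450, "task", "I2", correct=True),
    DrillEvent(500, "task", "I2", correct=False),
]
print("median decision time (s):", median(decision_latencies(log)))  # 180.0
print("task accuracy:", task_accuracy(log))                          # 0.5
```

Tracked across drills over time, numbers like these are what an AI-assisted analysis would summarize and look for patterns in.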

Presenting Defensible, Data-Driven Readiness Stories

Imagine walking into a board meeting and not just saying, "We’re ready." Instead, you can show them charts and graphs. You can say, "Our team’s response time to simulated phishing attacks has improved by 15% in the last quarter, based on AI analysis of our drills." That’s a story backed by facts. It makes your readiness efforts look solid and justifies the resources you’re using. It’s about turning subjective confidence into objective, measurable results that leadership can actually understand and trust.
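The arithmetic behind a claim like “improved by 15%” is worth making explicit, because an improvement in response time means the number went down. A minimal sketch, using made-up median response times rather than real data:

```python
def percent_improvement(before: float, after: float) -> float:
    """Percentage reduction in a 'lower is better' metric such as response time."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) * 100 / before


# Hypothetical median minutes to contain a simulated phishing compromise.
last_quarter = 40.0
this_quarter = 34.0
print(f"{percent_improvement(last_quarter, this_quarter):.1f}% faster")  # 15.0% faster
```

Showing the baseline and the new value next to the percentage lets leadership check the claim for themselves.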

Calculating the Return on Investment for AI

So, you’re thinking about bringing AI into your simulation training, huh? That’s a big step, and honestly, it’s smart to ask about the payoff. It’s not just about having the latest tech; it’s about seeing real results. Figuring out the return on investment (ROI) for AI isn’t just about crunching numbers; it’s about understanding the whole picture.

Tangible Benefits: Cost Savings and Productivity

Let’s talk about the stuff you can actually measure. AI can seriously cut down on costs and make things run smoother. Think about how much time your team spends creating training scenarios or sifting through after-action reports. AI can automate a lot of that. For instance, AI tools can help generate realistic exercise content much faster than a human can, freeing up your instructors to focus on teaching and feedback. It can also speed up the analysis of threat intelligence, giving you more up-to-date information for your simulations without a huge increase in manpower.

Here’s a quick look at where you might see those savings:

- Reduced Content Creation Time: AI can draft exercise scripts, populate scenarios with data, and even generate virtual characters, cutting down development hours significantly.

- Faster Data Analysis: Processing large volumes of performance data or threat intel can be done in a fraction of the time, leading to quicker insights and adjustments.

- Lower Operational Costs: Automating repetitive tasks means fewer people needed for those jobs, or your existing staff can handle more with the same resources.

Intangible Gains: Agility and Employee Satisfaction

Beyond the hard numbers, there are other big wins. AI can make your training programs more adaptable. If a new threat emerges or a training objective needs a quick tweak, AI can help you pivot faster. This agility is hard to put a price on, but it means your readiness stays current. Plus, when AI takes over the tedious parts of a job, your people can focus on more interesting and challenging work. That usually leads to happier employees, and happy employees tend to stick around and do better work.

Consider these less obvious, but still important, advantages:

- Increased Adaptability: Quickly update training scenarios based on real-world events or new intelligence.

- Improved Morale: Employees spend less time on grunt work and more time on meaningful tasks.

- Enhanced Decision-Making: AI can spot patterns in performance data that humans might miss, leading to better training strategies.

Justifying AI Investments Through Cost-Benefit Analysis

So, how do you actually show this all adds up? You need a solid cost-benefit analysis. Start by listing all the costs: the software, the hardware, the training for your team, and any ongoing maintenance. Then, list out all the benefits, both the tangible ones (like saved hours and reduced material costs) and the intangible ones (like improved agility and employee retention). By comparing these, you can build a strong case for why investing in AI for your simulation training makes good business sense. It’s about showing that the value you get back outweighs the money and effort you put in, making your training more effective and your organization better prepared.
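That comparison boils down to the usual ROI formula: ROI = (total benefits - total costs) / total costs. The sketch below uses entirely hypothetical line items, hours, and an assumed hourly rate; it prices only the tangible benefits, since intangibles like agility and retention are better argued in words than guessed at in dollars.

```python
# All figures are hypothetical annual amounts in dollars, for illustration only.
costs = {
    "software licences": 30_000,
    "hardware / compute": 10_000,
    "staff training": 8_000,
    "ongoing maintenance": 12_000,
}

HOURLY_RATE = 75  # assumed loaded cost of one staff hour
benefits = {
    "content creation hours saved": 600 * HOURLY_RATE,
    "analysis hours saved": 300 * HOURLY_RATE,
    "reduced material costs": 5_000,
}


def roi(total_benefits: float, total_costs: float) -> float:
    """Return on investment as a fraction: (benefits - costs) / costs."""
    return (total_benefits - total_costs) / total_costs


total_cost = sum(costs.values())
total_benefit = sum(benefits.values())
print(f"costs:    ${total_cost:,}")                          # $60,000
print(f"benefits: ${total_benefit:,}")                       # $72,500
print(f"ROI:      {roi(total_benefit, total_cost):.0%}")     # 21%
```

If the tangible benefits alone don’t cover the costs, the case rests on the intangibles, and it is better to say that openly than to inflate the hours saved.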

Responsible AI Implementation

So, we’ve talked a lot about what AI can do for simulation, especially in 2024. It’s exciting stuff, right? But before we go all-in, we really need to pump the brakes and think about how we’re actually going to use this technology. It’s not just about getting the coolest new tools; it’s about using them the right way.

Ethical Considerations and Data Privacy

This is a big one. When we’re feeding AI systems all sorts of data, especially sensitive information for training simulations, we have to be super careful. Where does that data come from? Who owns it? And most importantly, how are we protecting it? We need clear rules about data privacy, making sure we’re not accidentally exposing personal or classified information. Think about it: if a simulation uses real-world data, even anonymized, there’s always a chance someone could piece things back together. We need to be upfront with everyone involved about how their data is being used and stored.
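One common safeguard before feeding exercise or incident data to an AI tool is to replace direct identifiers with keyed pseudonyms. Here is a minimal sketch using Python’s standard `hmac` module; the record fields, the set of identifying fields, and the hard-coded key are assumptions for illustration. Note that this is pseudonymization, not anonymization: as the paragraph above warns, the remaining fields may still let someone piece identities back together, so it reduces exposure rather than eliminating it.

```python
import hashlib
import hmac

# Assumption: a real key would come from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-store"

# Hypothetical set of fields treated as direct identifiers.
IDENTIFYING_FIELDS = {"name", "email", "username"}


def pseudonym(value: str, key: bytes = SECRET_KEY) -> str:
    """Stable keyed pseudonym: the same input and key always give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "anon_" + digest[:12]


def pseudonymize(record: dict[str, str]) -> dict[str, str]:
    """Return a copy of the record with identifying fields replaced by pseudonyms."""
    return {
        k: pseudonym(v) if k in IDENTIFYING_FIELDS else v
        for k, v in record.items()
    }


record = {"name": "Jane Doe", "email": "jane@example.com", "action": "clicked phishing link"}
print(pseudonymize(record))
```

Using a keyed HMAC rather than a plain hash matters here: without the key, someone can’t simply hash a list of known names and match them against the tokens.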

Ensuring Transparency and Accountability

When an AI makes a decision or generates content for a simulation, we need to know why. If an AI flags a certain behavior as a threat, or if it creates a specific scenario, we should be able to trace that back. This isn’t just about debugging when something goes wrong; it’s about building trust. If people don’t understand how the AI works, they’re less likely to rely on it, or worse, they might distrust the entire simulation process. We need systems where we can see the logic, or at least the inputs and outputs, so we know who or what is responsible when something goes wrong.
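Even when a model’s internal logic is opaque, recording its inputs and outputs gives you a trail to follow. Below is a minimal sketch of an append-only JSON Lines audit log; the `log_generation` helper, its field names, the model name, and the file name are all hypothetical, and a real deployment would also need access controls and retention rules.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def log_generation(log_path: Path, model: str, prompt: str, output: str, requested_by: str) -> dict:
    """Append one record of an AI generation to a JSON Lines audit log."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,                # which model/version produced the content
        "requested_by": requested_by,  # the person accountable for the request
        "prompt": prompt,
        "output": output,
        # Hash of the output so copies found later can be matched to this record.
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


if __name__ == "__main__":
    log_generation(
        Path("ai_audit.jsonl"),
        model="example-model-v1",
        prompt="Draft a phishing email for the Q3 drill.",
        output="Subject: Action required: confirm your payroll details",
        requested_by="exercise.designer@example.com",
    )
```

Recording who requested each generation, not just what the model produced, is what ties the output back to an accountable person.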

Mitigating Bias in AI Algorithms

This is something that trips up a lot of AI projects. AI learns from the data we give it. If that data has existing biases – and let’s be honest, most real-world data does – the AI will learn and repeat those biases. For example, if an AI is trained on historical data that shows certain groups being disproportionately flagged in security scenarios, it might continue to do that, even if it’s not accurate. We need to actively look for these biases in our data and in the AI’s outputs. This means:

- Auditing training data: Regularly checking the data used to train AI models for skewed representation or prejudiced information.

- Testing AI outputs: Running simulations specifically designed to see if the AI behaves differently or unfairly towards different groups.

- Implementing fairness metrics: Using quantitative measures to check whether the AI’s decisions are equitable across different demographic groups.

It’s an ongoing process, not a one-time fix. Getting this right means our simulations are fairer and more effective for everyone involved.
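As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate at which an AI component flags members of different groups. The group labels and flag data are made up, and demographic parity is only one of several fairness definitions; a large gap is a prompt to investigate, not proof of bias on its own.

```python
from collections import defaultdict


def flag_rates(groups: list[str], flagged: list[bool]) -> dict[str, float]:
    """Share of each group's records that the model flagged."""
    if len(groups) != len(flagged):
        raise ValueError("groups and flagged must have the same length")
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for g, f in zip(groups, flagged):
        totals[g] += 1
        hits[g] += f  # True counts as 1, False as 0
    return {g: hits[g] / totals[g] for g in totals}


def demographic_parity_difference(groups: list[str], flagged: list[bool]) -> float:
    """Highest minus lowest flag rate across groups (0.0 means equal rates)."""
    rates = flag_rates(groups, flagged).values()
    return max(rates) - min(rates)


groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
flagged = [True, False, False, False, True, True, True, False]
print(flag_rates(groups, flagged))                     # {'A': 0.25, 'B': 0.75}
print(demographic_parity_difference(groups, flagged))  # 0.5
```

Running a check like this on every model update, not just once, is what keeps it an ongoing process.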

So, What’s the Takeaway?

Look, AI is definitely here to stay, and it’s changing how we do things, no doubt about it. But as we’ve seen, it’s not some magic wand that fixes everything. For simulation and training, especially in tough spots like cybersecurity, AI can be a huge help with the grunt work – like creating realistic scenarios or summarizing info fast. That frees up people to do the really important stuff, like thinking critically and working together under pressure. That’s the human part, the stuff AI just can’t fake. So, the real trick for 2024 and beyond isn’t just jumping on the AI bandwagon. It’s about being smart: using AI where it makes sense as a tool to boost what humans do best, and never forgetting that real readiness comes from people practicing and learning together. It’s about finding that balance.