Moving Beyond AI Hype: A Purpose-Driven Approach

It feels like everywhere you turn, there’s talk about AI. Companies are rushing to get on board, asking about AI strategies and what their chatbot roadmap should look like. There’s this pressure to show you’re adopting the latest tech, and vendors are promising the moon. Meanwhile, teams are scrambling to find problems that AI can fix, which is kind of backward, right? This is what happens when we chase AI for the sake of hype – we end up implementing technology because it’s trendy, not because it solves a real issue. We start measuring success by how many tools we’ve deployed instead of the actual problems we’ve solved. It’s all about looking innovative, not about creating real value.

Research suggests that most AI projects don’t fail because the technology isn’t good enough. Nope, the most common reason is a misunderstanding of which problem the project is supposed to solve. When organizations focus on the technology itself rather than on the persistent business problems they face, they often end up in the large group of projects that never deliver.

Identifying Genuine Business Problems

This is where it all starts. Forget asking, "What can we do with this new AI model?" Instead, ask questions like: "Our customer service team is struggling to find product information quickly. Can AI help them get that info faster?" Or, "Our sales reps can’t seem to find the right case studies when a potential client asks a specific question. How can we fix that?" When the problems define what we’re doing, the technology becomes a tool to solve those specific issues, not the main event.

- Start with the pain point: Who is experiencing difficulty? What specific task is taking too long or causing errors?

- Quantify the impact: How does this problem affect the business? Does it cost money, lose customers, or slow down operations?

- Define success clearly: How will we know if we’ve actually solved this problem? What will be different?
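To make these three questions concrete, here is a minimal Python sketch of a problem statement that does not count as "defined" until both the impact and the success criteria are filled in. The `ProblemStatement` class, its fields, and every number in the example are hypothetical, chosen only to show the arithmetic of putting a price on a pain point.

```python
from dataclasses import dataclass, field


@dataclass
class ProblemStatement:
    """Hypothetical template for framing a problem before picking any tool."""
    who: str                      # who experiences the difficulty
    task: str                     # the specific task that is slow or error-prone
    minutes_lost_per_task: float  # estimated delay each time the task happens
    tasks_per_week: int           # how often the task occurs across the team
    cost_per_hour: float          # assumed loaded labor cost per hour
    success_criteria: list[str] = field(default_factory=list)

    def annual_cost(self, working_weeks: int = 48) -> float:
        """Rough yearly cost: hours lost per week x hourly cost x working weeks."""
        hours_per_week = self.minutes_lost_per_task * self.tasks_per_week / 60
        return hours_per_week * self.cost_per_hour * working_weeks

    def is_well_defined(self) -> bool:
        """Actionable only if the impact is quantified and success is spelled out."""
        return self.annual_cost() > 0 and len(self.success_criteria) > 0


# Illustrative example with made-up numbers.
support_lookup = ProblemStatement(
    who="customer service agents",
    task="finding product information during a call",
    minutes_lost_per_task=4,
    tasks_per_week=1500,
    cost_per_hour=40.0,
    success_criteria=["median lookup time under 1 minute"],
)
print(f"Estimated annual cost: {support_lookup.annual_cost():,.0f}")
```

The design choice is deliberate: a problem with no success criteria fails `is_well_defined()`, no matter how large its estimated cost.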

Evaluating AI’s True Value Proposition

Not every problem needs AI. Seriously. Sometimes a simpler fix, like improving a process, offering better training, or just communicating more clearly, can do the job much better. We need to be honest about whether AI’s specific abilities actually match what the problem needs. AI is great at spotting patterns in large amounts of data, working at a scale humans can’t, and delivering consistency and speed. But it struggles with genuinely novel situations, tasks that need real creativity, and cases where you must be able to explain exactly how a decision was made.

| Problem Type | AI Suitability | Alternative Solutions |
|---|---|---|
| Repetitive Data Entry | High | Process automation, better forms |
| Complex Decision Making | Medium | Expert systems, workflow redesign, human oversight |
| Creative Content Generation | Low | Human creativity, AI as brainstorming partner |
| Customer Service FAQs | High | Chatbots, knowledge base improvements |

Prioritizing Human-Centric Design

Getting the technology to work is only half the battle. For AI to really stick, people have to trust it and use it. If you build AI systems without asking the people who will use them what they need, you might end up solving a problem nobody has, or creating new headaches that outweigh any benefits. Designing with users from the start is key to making sure AI actually helps people and doesn’t just get in the way. This means thinking about how the AI fits into their daily work, making sure it’s easy to use, and building in ways for people to oversee what it’s doing. It’s about making AI a helpful partner, not a confusing replacement.

The Infrastructure Demands of Advanced Simulation

So, we’ve talked a lot about AI hype and what’s real. But there’s a whole other side to this that often gets overlooked: the sheer amount of computing power and data storage needed to make advanced simulations actually work. It’s not just about having a fancy algorithm; it’s about having the pipes and plumbing to feed it and let it run.

Think about it. To really simulate the world, or even a complex part of it, you need to process mountains of information, potentially far more than today’s text-based AI training consumes. This isn’t just about crunching numbers; it’s about understanding physical reality, not just patterns in text.

Massive Datasets and Distributed Storage

Building these ‘world models’ means feeding AI with sensory data, especially video. Imagine trying to store and process petabytes of video footage from countless sources. This isn’t something your average server farm can handle. We’re looking at a need for massive, distributed storage solutions that can handle constant influxes of data without breaking a sweat. It’s like trying to build a library where every book is a high-definition movie, and you need to find specific scenes instantly.
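A quick back-of-envelope calculation shows how fast video reaches petabyte scale. The sketch below assumes a constant bitrate per source and decimal units (1 PB = 10^15 bytes); the 1,000 sources at 5 Mbit/s and the 3x replication factor are illustrative assumptions, not figures from any real deployment.

```python
def video_storage_bytes(sources: int, bitrate_mbps: float, hours_per_day: float,
                        days: int, replication: int = 1) -> float:
    """Raw bytes needed to keep `days` of video from `sources` streams.

    Assumes a constant bitrate in megabits per second and ignores container
    overhead and compression differences between sources.
    """
    bytes_per_second = bitrate_mbps * 1_000_000 / 8   # megabits -> bytes
    seconds = hours_per_day * 3600 * days
    return bytes_per_second * seconds * sources * replication


PB = 10**15  # decimal petabyte

one_year = video_storage_bytes(sources=1000, bitrate_mbps=5, hours_per_day=24, days=365)
replicated = video_storage_bytes(1000, 5, 24, 365, replication=3)
print(f"One year, single copy: {one_year / PB:.2f} PB")
print(f"One year, 3 replicas:  {replicated / PB:.2f} PB")
```

Under these assumptions a single year comes to roughly 20 PB for one copy and about 59 PB once replicated, before any model has processed a single frame.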

Pushing Cloud Compute Boundaries

Training AI to understand physics, causality, and real-world interactions requires immense computational power. Current cloud infrastructure, while impressive, is being pushed to its limits. We’re talking about simulations that need to run complex physics engines, model intricate systems, and iterate through scenarios at speeds that demand more than just incremental upgrades. It’s about rethinking how we use cloud resources, perhaps with specialized hardware or entirely new architectures, to handle these heavy workloads.

Real-Time Sensor Integration

For simulations to be truly useful, they need to connect with the real world. This means integrating data from millions of sensors in real time. Think about self-driving cars, smart cities, or industrial IoT devices. All these generate a constant stream of data that needs to be fed into simulation models to keep them accurate and relevant. This requires robust networks, efficient data pipelines, and systems that can handle the latency and volume of real-time data streams without missing a beat. It’s a complex dance between the digital simulation and the physical world it’s trying to represent.
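Two small pieces of that puzzle can be sketched in Python: estimating the aggregate ingest rate, and keeping a simulation’s view of the world fresh when readings arrive late or out of order. The `Reading` type, the sensor counts, and the two-second staleness budget are hypothetical; a production pipeline would typically sit on a streaming platform rather than an in-memory dictionary.

```python
from dataclasses import dataclass


def ingest_rate_bytes_per_s(sensors: int, hz: float, bytes_per_msg: int) -> float:
    """Aggregate data rate if every sensor reports `hz` times per second."""
    return sensors * hz * bytes_per_msg


@dataclass(frozen=True)
class Reading:
    sensor_id: str
    timestamp: float  # seconds; assumes sensor clocks are synchronized
    value: float


def latest_fresh_state(readings, now: float, max_age_s: float) -> dict:
    """Newest reading per sensor, ignoring anything older than `max_age_s`.

    Readings may arrive out of order, so we compare timestamps instead of
    trusting arrival order.
    """
    state = {}
    for r in readings:
        if now - r.timestamp > max_age_s:
            continue  # too stale to keep the simulation accurate
        current = state.get(r.sensor_id)
        if current is None or r.timestamp > current.timestamp:
            state[r.sensor_id] = r
    return state


# One million sensors at 10 Hz with 200-byte messages (illustrative numbers).
rate = ingest_rate_bytes_per_s(1_000_000, 10, 200)
print(f"{rate / 1e9:.1f} GB/s")

batch = [
    Reading("truck-17", 99.5, 42.0),
    Reading("truck-17", 99.0, 41.0),  # arrives late and is older than the one above
    Reading("dock-3", 90.0, 7.0),     # 10 s old: dropped with a 2 s budget
]
print(latest_fresh_state(batch, now=100.0, max_age_s=2.0))
```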

World Models: Simulating Reality for Enhanced AI

We’ve gotten pretty good at making AI that can talk, write, and even create art. It’s impressive, sure, but it’s also a bit like having a super-smart parrot. It can mimic, but does it really get what it’s saying? Most current AI systems, especially large language models, are trained on text. They learn to predict the next word, which is great for writing emails or summarizing articles. But they don’t actually understand the physical world. They can tell you that a dropped glass will likely break or that water flows downhill, but only because they’ve read it, not because they’ve learned how objects actually behave. This is where world models come in, aiming to give AI a grasp of how reality actually works.

Grounding AI in Physical Reality

Instead of just feeding AI billions of words, the idea behind world models is to train them on sensory data, especially video. Think of it like showing a child how the world works through observation, not just by reading books about it. These models would build internal simulations of how objects interact, how physics applies, and how cause and effect play out. This means AI could start to understand concepts like gravity, momentum, and object permanence – things a toddler figures out pretty quickly.

Understanding Causality Over Correlation

Right now, many AIs are great at spotting patterns, but they often confuse correlation with causation. Just because two things happen together doesn’t mean one caused the other. World models aim to change this. By simulating physical interactions, AI can learn that pushing a block causes it to move, rather than just noticing that blocks often move after something is near them. This shift from pattern matching to understanding cause and effect is a huge step towards more robust and reliable AI.
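A toy simulation makes the distinction concrete. In the sketch below, a hidden common cause `z` drives both `x` and `y`, so they correlate strongly when we merely observe them. When we intervene and set `x` ourselves (the "push the block" experiment), the correlation disappears unless `x` really affects `y`. This is a textbook structural-causal-model illustration with made-up coefficients, not a description of how any particular world model is trained.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate(n: int, intervene: bool, effect: float, seed: int = 0) -> float:
    """Correlation between x and y under observation or intervention.

    z is a hidden common cause; `effect` is the true causal effect of x on y.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)
        if intervene:
            x = rng.gauss(0, 1)        # we set x ourselves, cutting the z -> x link
        else:
            x = z + rng.gauss(0, 0.3)  # observed x just tracks the hidden cause
        y = effect * x + z + rng.gauss(0, 0.3)
        xs.append(x)
        ys.append(y)
    return pearson(xs, ys)


n = 10_000
print("observed, no real effect:  ", round(simulate(n, intervene=False, effect=0.0), 2))
print("intervened, no real effect:", round(simulate(n, intervene=True, effect=0.0), 2))
print("intervened, real effect:   ", round(simulate(n, intervene=True, effect=1.0), 2))
```

Only the interventional runs separate a real cause from a coincidence: observation alone reports a strong relationship in both worlds.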

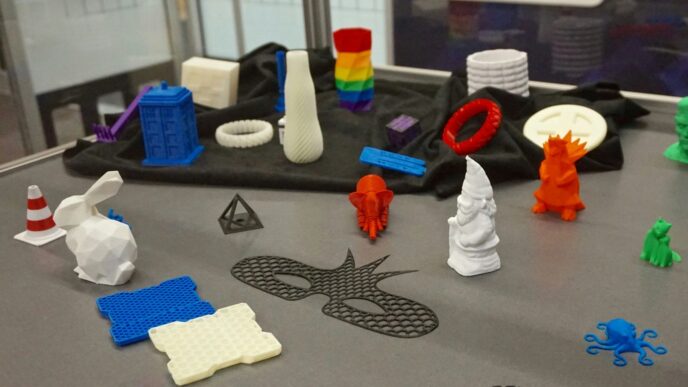

Applications in Robotics and Logistics

So, what does this mean in practice? Imagine robots that can actually handle unpredictable situations. Instead of just picking up pre-placed boxes, they could navigate a cluttered warehouse, adapt to spills, or even perform delicate tasks in a chaotic environment. In logistics, AI could predict when a truck might skid on a wet road, not just calculate the fastest route. This kind of simulation allows AI to anticipate problems and react intelligently to real-world complexity, moving beyond simple automation to genuine problem-solving.

The Real Fear: AI’s Impact on Human Agency

Look, everyone’s talking about AI taking jobs, and sure, that’s a worry. My hairdresser Alan hears it from his clients all the time. But the real fear, the one that keeps some people up at night, goes a bit deeper than just unemployment. It’s about losing control over our own lives.

Beyond Job Displacement: Loss of Control

Think about it. We’re building these incredibly powerful tools, but are we using them to help us make better choices, or are they starting to make choices for us? It feels like we’re heading towards a future where algorithms subtly steer our decisions, from what we buy to how we spend our free time. It’s like having a super-smart assistant who’s also a bit of a control freak.

Algorithmic Lifestyles and Reduced Choice

This isn’t just about efficiency. When AI systems are designed to optimize everything, they can smooth out the messy, unpredictable parts of life. You know, those random encounters or unexpected detours that often lead to the most interesting experiences? AI might see those as bugs to be fixed. The worry is that we’ll end up living lives that are perfectly optimized but also a bit bland, with fewer genuine surprises and less room for spontaneous decisions. It’s a trade-off: more convenience in exchange for less of the unexpected.

Augmenting Human Capability, Not Replacing Judgment

So, what’s the alternative? Instead of AI that just replaces human tasks, we need AI that works alongside us. Imagine systems that give us better information, help us see patterns we might miss, or handle the tedious parts of a job, freeing us up to focus on the creative and strategic thinking. The goal should be to build AI that amplifies our own abilities, not one that makes our own judgment seem unnecessary. This means designing AI with a clear purpose: to support human decision-making, not to take over.

Here’s a quick look at how we might want to think about this:

- Focus on Augmentation: Prioritize AI tools that provide insights and support, rather than those that fully automate complex decision-making.

- Preserve Choice: Design systems that present options and recommendations, but always leave the final decision with the human user.

- Value Human Input: Ensure that AI systems are trained and evaluated with human feedback, recognizing that human experience and intuition are still vital.

- Ensure Transparency: Make it clear how AI recommendations are generated, so people can critically assess them rather than accept them blindly.
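The "preserve choice" and transparency principles translate into a simple interface pattern: the system ranks options and explains each one, but nothing happens until a person picks. The sketch below is hypothetical; the `Option` type, the scores, and the override rule are illustrative assumptions rather than any standard API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Option:
    label: str
    score: float      # model's estimated value (assumed 0-1 scale)
    rationale: str    # plain-language reason shown to the user


def recommend(options, top_k: int = 3):
    """Return the top options with their rationales; never acts on them."""
    return sorted(options, key=lambda o: o.score, reverse=True)[:top_k]


def finalize(recommended, human_choice: Optional[str], all_options):
    """The final decision always comes from a person.

    The human may accept a recommendation or override it with any known
    option; with no explicit choice, nothing is decided.
    """
    if human_choice is None:
        raise ValueError("No decision without an explicit human choice")
    by_label = {o.label: o for o in all_options}
    if human_choice not in by_label:
        raise ValueError(f"Unknown option: {human_choice}")
    overridden = human_choice not in {o.label for o in recommended}
    return by_label[human_choice], overridden


options = [
    Option("Reorder from supplier A", 0.82, "Lowest lead time in the last 90 days"),
    Option("Reorder from supplier B", 0.74, "Cheaper, but two late deliveries"),
    Option("Wait one week", 0.40, "Stock covers about 9 days at current demand"),
    Option("Split order A/B", 0.35, "Hedges supplier risk"),
]
shortlist = recommend(options, top_k=2)
for o in shortlist:
    print(f"{o.label}: {o.score:.2f} ({o.rationale})")

choice, overridden = finalize(shortlist, "Split order A/B", options)
print(choice.label, "override" if overridden else "accepted")
```

Recording whether the person overrode the shortlist also yields the kind of human feedback signal the "Value Human Input" principle calls for.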

Measuring Success: Outcomes Over Tools Deployed

It’s easy to get caught up in the shiny new tools when talking about simulation and AI. Everyone wants to show off the latest model or the most complex system they’ve put into play. But honestly, that’s not really the point, is it? The real win comes from solving actual problems and making things better, not just from having the fanciest tech.

Think about it. If you spend a fortune on a super-advanced simulation tool, but it doesn’t actually help your team work faster, make fewer mistakes, or come up with better ideas, what have you really gained? It’s like buying a top-of-the-line chef’s knife and then only using it to open mail. It’s a waste, plain and simple.

Focusing on Tangible Business Value

So, how do we shift our focus? We need to look at what truly matters to the business. This means asking hard questions before we even start building or buying anything. What specific pain point are we trying to fix? What does success look like in concrete terms? We’re talking about things you can actually measure, not just vague feelings of progress.

Here are some examples of what that looks like:

- Reduced Cycle Time: How much faster can we complete a process from start to finish?

- Improved Quality Metrics: Are we seeing fewer defects, errors, or customer complaints?

- Increased Throughput: Can we produce more, serve more customers, or handle more data in the same amount of time?

- Cost Savings: Are we spending less on materials, labor, or rework?

These aren’t just numbers; they represent real improvements that impact the bottom line and make people’s jobs easier.
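Each of these can be tracked as a before-and-after comparison against a baseline measured before the rollout. The sketch below normalizes direction, so a positive number always means "better" whether the metric should go up (throughput) or down (cycle time, defects, cost). The metric names and values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeMetric:
    name: str
    baseline: float        # measured before the change
    current: float         # measured after the change
    higher_is_better: bool

    def improvement_pct(self) -> float:
        """Percent improvement; positive means better regardless of direction."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: a baseline of zero cannot be compared")
        change = (self.current - self.baseline) / self.baseline * 100
        return change if self.higher_is_better else -change


metrics = [
    OutcomeMetric("cycle time (days)", 10, 7, higher_is_better=False),
    OutcomeMetric("defects per 1,000 units", 12, 9, higher_is_better=False),
    OutcomeMetric("orders handled per day", 200, 240, higher_is_better=True),
    OutcomeMetric("monthly rework cost", 50_000, 46_000, higher_is_better=False),
]
for m in metrics:
    print(f"{m.name:<26} {m.improvement_pct():+.1f}%")
```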

Avoiding Vanity Metrics in AI Adoption

Vanity metrics are those impressive-sounding numbers that don’t actually mean much in the grand scheme of things. Things like "number of AI models deployed" or "lines of code written" fall into this category. They make it look like you’re doing a lot, but they don’t tell you if you’re actually achieving anything useful.

We need to be honest with ourselves. If a simulation project uses a cutting-edge AI algorithm but doesn’t lead to a measurable improvement in, say, product design or supply chain efficiency, then it’s just a technical exercise. It might be interesting, but it’s not adding real business value. We have to resist the urge to chase after impressive-sounding but ultimately hollow achievements.

Establishing Clear Accountability for Results

Who is responsible when things don’t go as planned? If we’re only focused on deploying tools, it’s easy for responsibility to get spread thin. But when we focus on outcomes, accountability becomes much clearer. Someone needs to own the problem we’re trying to solve and be responsible for whether or not we actually solve it.

This means setting clear goals upfront and assigning ownership for achieving those goals. It’s not just about the technical team; it involves business leaders, project managers, and end-users. When everyone understands what success looks like and who is responsible for getting there, the chances of actually achieving meaningful results go way up. It’s about making sure that the technology serves a purpose, and that purpose is tied to tangible, positive change.

The Value Bridge: Leadership in AI Transformation

It’s easy to get caught up in the latest AI tech. Everyone’s talking about new models and fancy features. But here’s the thing: technology vendors can build amazing tools, and data scientists can create complex models. That’s all great. What really makes a difference, though, is having leaders who can connect that technical potential to actual, real-world results. These leaders act as a bridge.

Translating Technical Potential to Impact

Think of it like this: a brilliant engineer might invent a super-efficient engine, but it’s the product manager who figures out how to put that engine into a car that people actually want to buy and use. Leaders in AI transformation do something similar. They don’t necessarily need to know how to write the code themselves, but they need to understand enough about what AI can do to ask the right questions. They need to look at a new AI capability and ask, "How does this solve a real problem for our customers or our business?" rather than just asking, "How cool is this technology?" It’s about focusing on the problems worth solving, not just the impressive demos. Sometimes, a simpler solution works just fine, and leaders need to recognize when AI’s complexity is truly necessary and when it’s just overkill.

Informed Questioning Without Deep Technical Expertise

Leaders don’t need to be AI experts to ask smart questions. They should be asking things like:

- What specific business challenge are we trying to address with this AI?

- How will we measure success, and what does that look like in concrete terms?

- What are the potential downsides or unintended consequences we need to watch out for?

- Does this AI tool actually make our processes better, or just more complicated?

It’s about having a clear-eyed view of what the technology can realistically achieve and whether it aligns with the company’s goals. It means not getting swayed by the hype but insisting on clear, measurable outcomes.

Navigating Human Dimensions of AI Adoption

Perhaps the most important part of this bridging role is understanding the human side of AI. AI isn’t just about technology; it’s about people. Leaders need to figure out which teams will be most affected, what kind of support they’ll need, and how to address any resistance. Is the pushback coming from a genuine concern about how the AI will impact their work, or is it just fear of the unknown? The goal should always be to use AI to make people better at their jobs, to augment their capabilities, not to replace their judgment or make them feel obsolete. True AI transformation happens when technology amplifies human potential, not when it diminishes it.

Responsible AI: Governance and Unintended Consequences

Ensuring Ethical AI Development

Look, building AI is getting easier, but building it right is still a huge challenge. We’re talking about systems that can make decisions, and sometimes those decisions have real-world impacts. It’s not enough to just get the tech working; we have to think about what’s fair and what’s not. This means looking at the data we feed these systems. If the data is skewed, the AI will be too. We need to actively check for biases, whether it’s about race, gender, or anything else. It’s like baking a cake – if you mess up the ingredients, the whole thing tastes bad.
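One common, simple check is to compare selection rates across groups in a system’s decisions, for example in a hiring screen. The sketch below computes per-group rates and the ratio of the lowest to the highest rate; a ratio below 0.8 corresponds to the "four-fifths" rule of thumb from US employee-selection guidelines. The group labels and counts are invented, and passing this single check does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {g: chosen[g] / totals[g] for g in totals}


def impact_ratio(rates: dict) -> float:
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical screening outcomes: 50/100 of group A advance, 30/100 of group B.
decisions = ([("A", True)] * 50 + [("A", False)] * 50
             + [("B", True)] * 30 + [("B", False)] * 70)
rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}", "FLAG" if ratio < 0.8 else "ok")
```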

Monitoring for Bias and System Failures

Once an AI is out there doing its thing, the job isn’t over. Things change, and AI systems can start acting in ways we didn’t expect. Maybe a system designed to help with hiring starts unfairly filtering out certain candidates, not because it was programmed to, but because the data it learned from had hidden biases. Or perhaps a self-driving car encounters a situation it was never trained for, leading to a breakdown. We need constant checks. Think of it like a car needing regular maintenance. You don’t just buy it and forget about it; you get oil changes and tire rotations. AI needs similar oversight to catch problems before they become big issues.

Here’s a quick look at what we need to watch for:

- Data Drift: When the real-world data the AI sees starts to differ significantly from the data it was trained on.

- Performance Degradation: The AI’s accuracy or effectiveness slowly getting worse over time.

- Unexpected Outputs: The AI generating results that are nonsensical, harmful, or simply not what was intended.

- Edge Cases: Rare situations that the AI hasn’t encountered before and doesn’t know how to handle properly.
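Data drift, the first item, is often tracked with the Population Stability Index (PSI), which compares how live inputs fall into bins defined on the training data. A minimal sketch follows; the bin edges, the `eps` smoothing for empty bins, and the common rule of thumb (below 0.1 stable, above 0.25 significant drift) are conventions, not universal thresholds.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Share of values falling in each bin; len(edges) + 1 bins in total."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index of `actual` (live) against `expected` (training)."""
    total = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


training = list(range(0, 100))        # feature values seen during training
live_same = list(range(0, 100))       # live data with the same distribution
live_shifted = list(range(50, 150))   # live data that has drifted upward
edges = [25, 50, 75, 100]

for name, live in [("same", live_same), ("shifted", live_shifted)]:
    score = psi(training, live, edges)
    status = "stable" if score < 0.1 else "investigate" if score < 0.25 else "drift"
    print(f"{name}: PSI={score:.3f} -> {status}")
```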

Adapting Governance Frameworks to Rapid Advancement

The pace of AI development is just wild. What was cutting-edge last year might be old news now. This means the rules and guidelines we set up for AI – the governance – can’t stay static. They need to be flexible. If we create a rigid set of rules today, they’ll be outdated by the time the AI is actually deployed. We need processes that allow us to update our policies as the technology evolves and as we learn more about its effects. This constant adaptation is key to making sure AI benefits us without causing too much trouble. It’s a moving target, for sure, but ignoring it is a recipe for disaster.

So, What’s Next?

Look, the whole AI thing can feel like a whirlwind, right? One minute it’s all over the news, the next everyone’s trying to figure out what it actually means for them. We’ve talked a lot about the hype versus the real deal, and it seems pretty clear that just jumping on the bandwagon because it’s popular isn’t the way to go. It’s more about figuring out what problems we actually need to solve and then seeing if AI is the right tool for the job. We need to build things that help people, not just replace them or make them feel less in control. The real progress will come when we focus on making AI work with us, amplifying what we can do, rather than just automating everything in sight. It’s a big shift, and it’s going to take some careful thought, but the payoff could be huge if we get it right.