Understanding The Core Components Of Robots

When you think about robots, it’s easy to picture something that looks and acts like a person, like the sci-fi characters we see in movies. But robots are a much broader category than that. At their heart, robots are machines designed to carry out tasks automatically. By that loose definition, a 3D printer, or even a regular office printer, could technically be called a robot. Some don’t even have a physical body, like the software bots you interact with online for customer service. The idea of a robot often gets tied to human-like forms because we build robots to take over jobs humans used to do, moving that work from manual to automated.

Robotics is a field that pulls from many different areas of study. While it’s a complex and ever-changing landscape, most robots today are built on a foundation of three main disciplines. Understanding these core components is key to grasping how robots work and what they can do.

Mechanical Engineering: The Foundation Of Robotic Structure

This is all about the physical build of a robot. Think of it as the bones and muscles. Mechanical engineers figure out how to design and construct the robot’s body, its joints, and how it will move. They deal with materials, forces, and how to make sure the robot’s structure can handle the tasks it’s meant for without falling apart. It’s about making things that are strong and reliable.

- Structural Integrity: Making sure the robot’s frame can support its own weight and any external loads.

- Kinematics and Dynamics: Understanding how the robot’s parts move and the forces involved in that movement.

- Material Selection: Choosing the right materials for durability, weight, and cost.
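To make the kinematics item a bit more concrete, here is a minimal sketch of forward kinematics for a two-link arm moving in a flat plane. The arm, the function name `forward_kinematics`, and the default link lengths are all assumptions made up for illustration; a real robot has more joints and moves in three dimensions.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) tip position of a two-link planar arm.

    theta1 is the shoulder angle and theta2 the elbow angle, both in radians.
    l1 and l2 are the link lengths (illustrative defaults, in metres).
    """
    # The first link ends at the elbow; the second link's direction is the sum of both angles.
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    # Arm stretched straight out along the x axis.
    print(forward_kinematics(0.0, 0.0))
    # Shoulder raised 90 degrees, elbow straight: the tip points straight up.
    print(forward_kinematics(math.pi / 2, 0.0))
```

Going the other way, from a desired tip position back to joint angles, is called inverse kinematics and is generally the harder problem.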

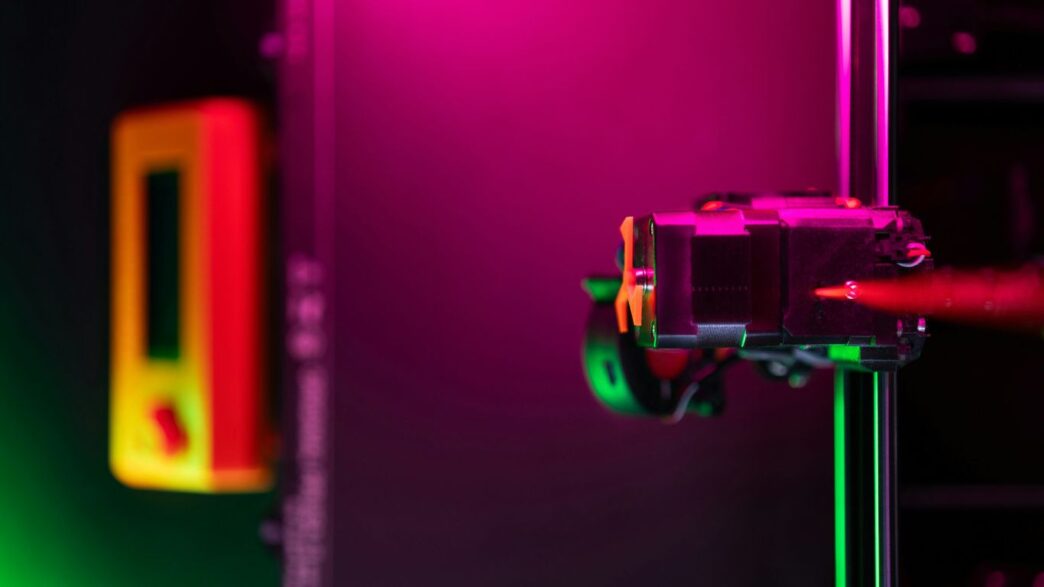

Electrical Engineering: Powering And Controlling Robotic Systems

Electrical engineers are the ones who bring the robot to life with power and control. They design the circuits, manage the power supply, and figure out how to send signals throughout the robot. This includes everything from the batteries that power the robot to the wires that act like its nervous system, connecting sensors to the main processing unit and sending commands to motors.

- Power Systems: Designing efficient ways to power the robot, whether through batteries or external sources.

- Circuit Design: Creating the electronic pathways that allow different components to communicate.

- Sensor Integration: Connecting and interpreting signals from various sensors.

Computer Science: The Brains Behind Robotic Operations

If mechanical engineering builds the body and electrical engineering provides the power and the nervous system, then computer science is the brain. This is where the robot’s intelligence comes from. Computer scientists write the code that tells the robot what to do, how to process information from its sensors, and how to make decisions. They develop the algorithms that guide the robot’s actions, making it capable of performing complex tasks.

- Software Development: Writing the code that controls the robot’s behavior.

- Algorithm Design: Creating the step-by-step instructions for tasks and decision-making.

- Data Processing: Handling and interpreting the information gathered by the robot’s sensors.

Beyond Traditional Disciplines: Expanding The Robotic Palette

When we think about robots, it’s easy to get stuck on gears and circuits, the usual mechanical and electrical stuff. But the field is getting much bigger than those core engineering disciplines. The more of these machines we build, the more we realize we need people from all sorts of backgrounds to make them actually useful and, well, not scary.

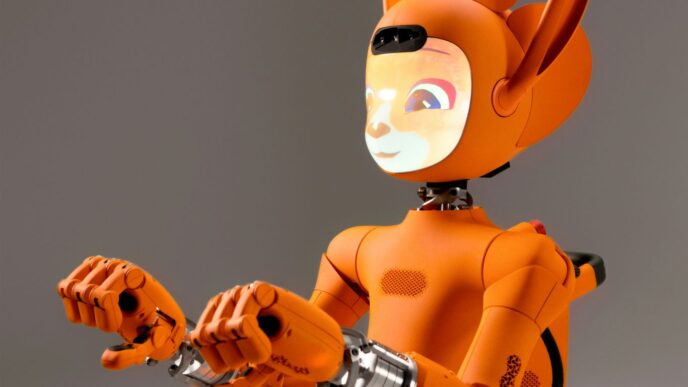

Human-Computer Interaction: Designing For Seamless Integration

This is all about making robots that people can actually work with without pulling their hair out. Think about it: if a robot is going to help out in a factory or even in your home, it needs to be easy to understand and operate. It’s not just about making a robot that can do a job, but one that can do it with people. This means thinking about how a robot looks, how it moves, and how it communicates its intentions. Is it going to be a friendly-looking helper or a more utilitarian machine? How does it signal that it’s about to move, or that it needs something? Getting this right means fewer accidents and a lot less frustration for everyone involved.

Natural Language Processing: Enabling Robot-Human Communication

This is where robots start to talk, or at least understand us when we talk to them. For a long time, robots were programmed with very specific commands. But what if you could just tell a robot what you need? That’s where Natural Language Processing (NLP) comes in. It’s the tech that lets computers understand human language, whether it’s spoken or written. Imagine telling your robot vacuum, "Hey, can you clean up that mess in the kitchen?" instead of having to manually direct it. Or a robot assistant in a hospital understanding a doctor’s verbal instructions. It’s a huge step towards making robots more intuitive assistants.

Machine Learning: Teaching Robots To Learn And Adapt

This is probably the most talked-about area right now. Machine learning is basically how we teach robots to learn from experience, kind of like how we do. Instead of programming every single possible scenario, we can feed a robot a ton of data and let it figure things out. For example, a robot learning to pick up different objects doesn’t need to be programmed for the exact shape and weight of every single item. It can learn to adjust its grip based on what it senses. This ability to adapt is what makes robots more versatile and capable of handling the messy, unpredictable real world. It’s how we get robots that can get better at tasks over time, without needing constant human reprogramming.

The Physicality Of Robots: Hardware And Its Limitations

When we think about robots, we often picture them moving and interacting with the world. But getting that physical interaction right is a huge hurdle. It’s not just about making things look cool; it’s about making them work reliably in the real, messy world.

The Hardware Bottleneck: Challenges In Robotic Construction

Building robots that can do complex tasks is tough, and a lot of that difficulty comes down to the physical parts. Think about a robot’s hand. We might imagine something with five fingers, just like ours, capable of all sorts of delicate movements. But creating such a hand is incredibly complicated. It needs many joints, and controlling each one precisely is a big challenge. Often, these complex hands are driven by cables, which can stretch over time and throw off accuracy. They also get heavy, and every bit of weight in the hand is weight the arm can no longer spend on lifting something. And the cost? Astronomical. Some advanced robot hands can run into the hundreds of thousands of dollars.

This is why simpler tools are often more effective. Many surgical robots use basic grippers, and surgeons manage fine procedures with them. Even chopsticks, a simple tool used by billions daily, show how much can be achieved with basic designs. The real trick isn’t always the complexity of the gripper itself, but how well it’s controlled.

Sensors And Actuators: The Robot’s Senses And Muscles

Robots need ways to sense their surroundings and ways to move. Sensors are like their eyes, ears, and touch. They help the robot understand where things are and what they’re like. Actuators are the robot’s muscles, providing the force to move its parts. Getting these right is tricky.

- Precision Issues: Motors, which are common actuators, aren’t perfect. Even when commanded to move to a specific spot, they might end up slightly off due to tiny errors in gears or friction. This might not matter for some tasks, but for others, like picking up a delicate glass, a small error could mean disaster.

- Perception Challenges: Cameras, even advanced ones, struggle to create a perfect 3D map of the world. Humans do this naturally, using intuition and past experience. Robots have to build this understanding from scratch, which is hard. Knowing exactly where an object is and how it will behave is key for manipulation.

- Deformable Objects: Handling rigid objects is one thing, but dealing with soft, bendy things like fabric, cables, or even a human body is much harder. These materials change shape in unpredictable ways, making them difficult to model, control, and even see accurately.
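To put a rough number on the precision issue above, here is a small sketch of how a tiny joint angle error grows into a position error at the end of an arm. It assumes a single rigid link rotating about one joint, which is a big simplification; the function name `tip_error` and the 0.5 m arm length are illustrative choices, not values from any particular robot.

```python
import math


def tip_error(arm_length_m, angle_error_deg):
    """Distance (in metres) between where the tip should be and where it ends up.

    Assumes one rigid link of length arm_length_m whose joint stops
    angle_error_deg away from the commanded angle.
    """
    # The intended and actual tip positions lie on a circle of radius arm_length_m,
    # so the gap between them is the chord spanning the angle error.
    half_angle = math.radians(abs(angle_error_deg)) / 2.0
    return 2.0 * arm_length_m * math.sin(half_angle)


if __name__ == "__main__":
    for error_deg in (0.1, 0.5, 1.0):
        millimetres = tip_error(0.5, error_deg) * 1000
        print(f"{error_deg:>4} deg off -> {millimetres:.1f} mm at the tip")
```

With these assumed numbers, a one degree error at the joint puts the tip of a half-metre arm just under 9 mm away from its target, which is plenty to knock over a glass instead of grasping it.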

Soft Robotics: Exploring Flexible And Adaptable Designs

Because traditional rigid robots face so many limitations, researchers are looking at "soft robotics." Instead of hard metal and plastic, these robots use flexible materials like silicone. The idea is to create robots that can move more like living things, adapting to their environment and handling delicate objects more safely.

- Mimicking Nature: Soft robots can squeeze through tight spaces or gently grasp fragile items, much like an octopus or a worm.

- Safety: Their flexible nature can make them safer to work alongside humans, reducing the risk of injury.

- New Possibilities: This approach opens doors for robots in areas where traditional robots just wouldn’t work, like in healthcare for internal procedures or in agriculture for handling delicate produce.

The Software Dimension: Enabling Robotic Intelligence

So, we’ve talked about the nuts and bolts, the physical stuff. But what makes a robot actually do things? That’s where the software comes in. It’s the brain, the nervous system, the whole shebang that tells the hardware what to do and how to react. Without good software, even the most advanced robot is just a fancy paperweight.

Algorithms and Control Systems: Directing Robotic Actions

Think of algorithms as the step-by-step instructions for the robot. If you want a robot arm to pick up a box, you need an algorithm that tells it how to move, how much force to apply, and when to stop. Control systems are the feedback loops that keep the robot following those instructions: they repeatedly measure what the robot is actually doing, compare it with what was commanded, and adjust the motors to close the gap. It’s like a chef following a recipe, but with way more math and a lot less tasting.
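The checking and adjusting can be sketched with about the simplest control loop there is, a proportional controller. This is a toy under stated assumptions: the function name `run_p_controller`, the gain of 0.5, and the idealized actuator that moves exactly as far as it is told are all made up for illustration. Real controllers have to deal with inertia, delays, and noise.

```python
def run_p_controller(target, position=0.0, gain=0.5, steps=20):
    """Drive position toward target and return every position visited.

    Each step measures the remaining error and commands a move
    proportional to it (the 'P' in a PID controller).
    """
    history = [position]
    for _ in range(steps):
        error = target - position   # how far off are we right now?
        position += gain * error    # idealized actuator: moves exactly as commanded
        history.append(position)
    return history


if __name__ == "__main__":
    for step, value in enumerate(run_p_controller(target=10.0, steps=8)):
        print(f"step {step}: position = {value:.3f}")
```

With a gain of 0.5 the remaining error halves on every step, so the position creeps up on the target without overshooting. In this idealized model, a gain above 1 would overshoot and a gain above 2 would make the error grow, which is why tuning matters.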

Here’s a simplified look at how a basic pick-and-place algorithm might work:

| Step | Action |
|---|---|
| 1 | Move gripper to above the object. |
| 2 | Lower gripper to grasp the object. |
| 3 | Close gripper to secure the object. |
| 4 | Lift the object. |
| 5 | Move object to the target location. |
| 6 | Open gripper to release the object. |
| 7 | Return gripper to a neutral position. |
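The seven steps above can be sketched as code. The `SimulatedArm` class here is a hypothetical stand-in invented for this example, not a real robot driver: it only records what it was asked to do. The `clearance` height and the coordinates are likewise arbitrary.

```python
class SimulatedArm:
    """Hypothetical arm that just logs commands instead of moving hardware."""

    def __init__(self, home=(0.0, 0.0, 0.5)):
        self.home = home
        self.position = home
        self.gripper_closed = False
        self.log = []

    def move_to(self, position):
        self.position = position
        self.log.append(("move", position))

    def close_gripper(self):
        self.gripper_closed = True
        self.log.append(("close",))

    def open_gripper(self):
        self.gripper_closed = False
        self.log.append(("open",))


def pick_and_place(arm, pick, place, clearance=0.2):
    """Run the seven table steps; pick and place are (x, y, z) tuples."""
    x, y, z = pick
    arm.move_to((x, y, z + clearance))  # 1. move above the object
    arm.move_to(pick)                   # 2. lower to the object
    arm.close_gripper()                 # 3. close to secure it
    arm.move_to((x, y, z + clearance))  # 4. lift
    arm.move_to(place)                  # 5. carry to the target location
    arm.open_gripper()                  # 6. release
    arm.move_to(arm.home)               # 7. return to a neutral position


if __name__ == "__main__":
    arm = SimulatedArm()
    pick_and_place(arm, pick=(0.3, 0.1, 0.0), place=(0.1, -0.4, 0.0))
    for entry in arm.log:
        print(entry)
```

A real version would also check after each step that the move actually succeeded, for example that the gripper really is holding something before lifting.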

Perception and Scene Understanding: How Robots ‘See’

Robots don’t have eyes like we do, but they need to understand their surroundings. This is where perception software comes in. It takes data from sensors – like cameras, lidar, or touch sensors – and turns it into something the robot can use. This could be identifying objects, mapping out a room, or detecting obstacles. It’s about giving the robot a digital understanding of the physical world. This isn’t just about seeing; it’s about interpreting what’s seen. A robot needs to know that a red, round object is a ball, not just a collection of pixels.

Key aspects of perception include:

- Object Recognition: Identifying specific items in the environment.

- Localization: Figuring out where the robot is within its space.

- Mapping: Creating a digital representation of the surroundings.

- Obstacle Detection: Spotting things the robot needs to avoid.
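As a small taste of the last item, here is a sketch of obstacle detection on a single sweep of range readings, the kind of data a planar lidar might produce. The function name `detect_obstacles`, the scan layout (a start angle plus a fixed step in degrees), and the one metre threshold are assumptions for illustration and do not follow any specific sensor’s format.

```python
import math


def detect_obstacles(ranges, angle_min_deg, angle_step_deg, max_distance=1.0):
    """Return (angle_deg, distance) pairs for readings closer than max_distance.

    ranges holds one distance per beam, in metres. Non-finite or non-positive
    values are treated as 'no return' and skipped.
    """
    hits = []
    for index, distance in enumerate(ranges):
        if not math.isfinite(distance) or distance <= 0.0:
            continue  # the beam saw nothing usable
        if distance < max_distance:
            angle = angle_min_deg + index * angle_step_deg
            hits.append((angle, distance))
    return hits


if __name__ == "__main__":
    # Five beams from -90 to +90 degrees; the values are made up.
    scan = [2.0, 0.8, float("inf"), 0.5, 3.0]
    for angle, distance in detect_obstacles(scan, angle_min_deg=-90, angle_step_deg=45):
        print(f"obstacle {distance} m away at {angle} degrees")
```

This only answers "is something close?" Recognizing what the something is, and where the robot sits on a map, takes far more machinery.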

Fault Tolerance and Reliability: Building Robust Robots

Things go wrong. That’s just a fact of life, and it’s true for robots too. Software needs to be built with fault tolerance in mind. This means the robot can keep working, or at least fail gracefully, even if something unexpected happens. Maybe a sensor gives a bad reading, or a command gets garbled. Robust software can detect these issues and compensate, preventing a minor glitch from turning into a major breakdown. It’s the difference between a robot that stops dead and one that can reroute or ask for help.
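Here is one way the "bad sensor reading" case might be handled, as a minimal sketch. The `GuardedSensor` class, its status strings, and the three-strikes limit are all invented for this example. The idea is to reject implausible readings, coast briefly on the last good value, and ask for a stop if the bad readings keep coming.

```python
import math


class GuardedSensor:
    """Wraps raw readings with a plausibility check and a fallback."""

    def __init__(self, low, high, max_bad_readings=3):
        self.low = low
        self.high = high
        self.max_bad_readings = max_bad_readings
        self.last_good = None
        self.bad_count = 0

    def update(self, raw):
        """Return (value, status) where status is 'ok', 'degraded', or 'stop'."""
        valid = raw is not None and math.isfinite(raw) and self.low <= raw <= self.high
        if valid:
            self.last_good = raw
            self.bad_count = 0
            return raw, "ok"
        self.bad_count += 1
        # Nothing to fall back on, or too many bad readings in a row: fail safely.
        if self.last_good is None or self.bad_count >= self.max_bad_readings:
            return None, "stop"
        # Otherwise keep going on the last trustworthy value.
        return self.last_good, "degraded"


if __name__ == "__main__":
    sensor = GuardedSensor(low=0.0, high=5.0)
    for raw in (1.2, float("nan"), 99.0, -1.0, 2.0):
        print(raw, "->", sensor.update(raw))
```

The caller decides what each status means in practice: "degraded" might slow the robot down, while "stop" might halt it and ask a human for help.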

The Physics Of Motion: Degrees Of Freedom And Dexterity

When we talk about robots moving, it’s not just about getting from point A to point B. It’s about how they move, how precisely they can position themselves, and how they interact with the world around them. This is where the physics of motion really comes into play, especially when we look at things like degrees of freedom and dexterity.

Navigating Three-Dimensional Space: Position And Orientation

Think about any object, like your keys on a table. They have a specific spot, right? That’s their position. But they also sit at a certain angle: they could be flat, or tilted. That’s their orientation. A robot, especially one trying to grab something or move around, needs to know both its position and its orientation in space. This is often described using "degrees of freedom" (DOF), the number of independent ways something can move. A simple robot arm might have a few joints, each allowing movement in a certain way. To reach any point in 3D space and face any direction there, a robot typically needs at least six degrees of freedom: three to set its position (along x, y, and z) and three to set its orientation (rotation about each of those axes).
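Six numbers are enough to pin down a pose, and a short sketch shows how they get used. This example stores position as x, y, z and orientation as roll, pitch, and yaw, then converts a point from the tool’s own frame into world coordinates. The `Pose` class, the function name `tool_to_world`, and the rotation order (yaw, then pitch, then roll, a common convention but not the only one) are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Six degrees of freedom: three for position, three for orientation."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    roll: float = 0.0   # rotation about the x axis, radians
    pitch: float = 0.0  # rotation about the y axis, radians
    yaw: float = 0.0    # rotation about the z axis, radians


def tool_to_world(pose, point):
    """Map a point given in the tool's frame into world coordinates.

    Uses the rotation R = Rz(yaw) * Ry(pitch) * Rx(roll), then adds the position.
    """
    cr, sr = math.cos(pose.roll), math.sin(pose.roll)
    cp, sp = math.cos(pose.pitch), math.sin(pose.pitch)
    cy, sy = math.cos(pose.yaw), math.sin(pose.yaw)
    px, py, pz = point
    wx = pose.x + cy * cp * px + (cy * sp * sr - sy * cr) * py + (cy * sp * cr + sy * sr) * pz
    wy = pose.y + sy * cp * px + (sy * sp * sr + cy * cr) * py + (sy * sp * cr - cy * sr) * pz
    wz = pose.z - sp * px + cp * sr * py + cp * cr * pz
    return wx, wy, wz


if __name__ == "__main__":
    # A tool at (1, 2, 3), turned 90 degrees about the vertical axis.
    pose = Pose(x=1.0, y=2.0, z=3.0, yaw=math.pi / 2)
    print(tool_to_world(pose, (1.0, 0.0, 0.0)))
```

Take away any one of the six values and there is some position or facing direction the tool can no longer reach, which is where the "at least six" figure comes from.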

Complex Manipulations: The Challenge Of Multiple Degrees Of Freedom

Now, imagine trying to pick up a pen. You don’t just move your whole arm; your fingers do all sorts of intricate movements. This is where things get complicated. A human hand, for example, has dozens of degrees of freedom. Trying to replicate this in a robot means a lot of joints, a lot of motors, and a lot of complex calculations to make them all work together. Building a robot hand that can do everything a human hand can is incredibly hard. The more joints you add, the more ways things can go wrong, and the harder it is to control precisely. This is why simple grippers, like pincers or even chopsticks, are often used. They might not look fancy, but they can do a lot with fewer degrees of freedom.

Intuitive Physics: Bridging The Gap Between Human And Robot Understanding

We humans are pretty good at figuring out how things will move, even if we can’t explain exactly why. Push a pen across a table, and it might spin a bit. We don’t usually calculate the exact friction or the microscopic bumps on the table; we just adjust our push or use our fingers to "cage" the object, guiding it where we want it to go. This intuitive understanding of physics is something robots struggle with. They often need precise models and calculations. However, researchers are finding ways around this, both by helping robots learn from experience and by using techniques like "caging" or "funneling." These techniques use the physical properties of objects and environments to help robots achieve tasks, even without perfect knowledge of every detail. It’s about setting up the situation so that physics does some of the work for the robot.
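A toy version of funneling fits in a few lines. Picture an object sitting somewhere unknown along a one-dimensional track. One pusher sweeps in from the left and stops, then another sweeps in from the right and stops. Wherever the object started, it ends up between the two stopping points. Everything here is an assumption for illustration: the function name `squeeze`, the track, and the starting positions are made up, and the model ignores the object’s width, friction, and rotation.

```python
def squeeze(start, left_stop, right_stop):
    """Final position on a 1D track after two pushes.

    A pusher coming from the left stops at left_stop, then a pusher
    coming from the right stops at right_stop.
    """
    if left_stop > right_stop:
        raise ValueError("left_stop must not be greater than right_stop")
    position = max(start, left_stop)       # anything left of the first pusher gets shoved along
    position = min(position, right_stop)   # anything right of the second pusher gets shoved back
    return position


if __name__ == "__main__":
    # Arbitrary starting positions the robot does not know in advance.
    starts = [0.05, 0.31, 0.50, 0.77, 0.98]
    finals = [squeeze(s, left_stop=0.5, right_stop=0.5) for s in starts]
    print(finals)
```

When both pushers stop at the same spot, every starting position collapses to that one known location, and the robot never had to sense the object at all.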

The Evolving Landscape Of Robotic Applications

Robots aren’t just in factories anymore. They’re popping up everywhere, changing how we get things done and how we live. It’s pretty wild to think about how fast this field is moving. What seemed like science fiction a few years ago is now becoming a regular part of our lives.

Logistics and Automation: Transforming Supply Chains

Remember when getting a package took ages? Well, robots are speeding things up big time. In warehouses, they’re sorting, packing, and moving goods around like nobody’s business. This isn’t just about making things faster; it’s also about making the whole process safer and more efficient. Think about those massive online shopping orders – robots are the ones making sure your stuff gets to you without a hitch. Even delivery drones are becoming a real thing, potentially cutting down on delivery times and being kinder to the environment than a fleet of trucks.

Here’s a quick look at how robots are shaking up logistics:

- Warehouse Operations: Robots handle tasks like stocking shelves, picking items, and packing orders, reducing human error and increasing speed.

- Package Sorting: Automated systems sort millions of packages daily, directing them to the right destinations.

- Delivery: From autonomous vehicles on the road to drones in the air, robots are changing how goods reach our doorsteps.

The goal is to make the entire journey of a product, from creation to your hands, as smooth and quick as possible.

Home Care and Assistance: Robots in Everyday Life

This is where things get really personal. Robots are starting to show up in our homes, not just for cleaning (though robot vacuums are pretty common now!), but to help people. For folks who need a bit of extra support, like the elderly or those with disabilities, robots can be a real game-changer. They can help with daily tasks, provide companionship, and even remind people to take their medication. It’s all about giving people more independence and a better quality of life. We’re still figuring out the best ways to make these robots friendly and easy to use, but the potential is huge.

Healthcare and Medicine: Precision and Innovation

In hospitals and operating rooms, robots are becoming indispensable tools. Surgical robots, for instance, allow doctors to perform incredibly delicate procedures with more precision than ever before. This can mean smaller incisions, less pain for the patient, and faster recovery times. Beyond surgery, robots are also being used for tasks like dispensing medication, sterilizing rooms, and even assisting with physical therapy. The precision and tireless nature of robots are pushing the boundaries of what’s possible in medical care. It’s a field where the stakes are high, and the need for reliable, advanced technology is constant.

So, What’s Next?

We’ve looked at what goes into making robots, from the nuts and bolts to the smart software. It’s a mix of old-school engineering and brand-new ideas, all working together. While we’re not quite at the point where robots can do everything around the house or perfectly mimic human tasks, things are moving fast. The progress we’re seeing, especially in areas like moving packages around, shows that robots are becoming more capable. It’s a complex field, with challenges in hardware, software, and understanding the physical world, but the drive to create more useful machines continues. Keep an eye on this space, because the future of what robots are made of, and what they can do, is still being written.