AI’s Physical Manifestation: The Rise of Intelligent Robots

It feels like just yesterday AI was mostly about chatbots and making cool pictures. But at CES 2025, it was clear that AI is stepping out of the screen and into the real world. We’re talking about robots that can actually do things, not just sit in a lab. This isn’t science fiction anymore; it’s becoming a part of how things get made, moved around, and even how we drive.

Nvidia’s Vision for Physical AI and Robotics

Nvidia, a company you probably know for computer graphics cards, is really pushing the idea of ‘physical AI.’ Their CEO, Jensen Huang, talked a lot about how their new chips and platforms are designed to give robots the brains they need to interact with the world. Think of it like giving a robot eyes, ears, and the ability to figure things out on its own. They showed off new tools and systems, like the Cosmos platform, which generates realistic video that robots and self-driving cars can learn from. It’s like they’re building the engine for all these new physical AI applications.

Transformative Force in Manufacturing and Logistics

This is where things get really interesting for businesses. Robots are no longer just for simple, repetitive tasks on an assembly line. With AI, they can handle more complex jobs. Companies are looking at robots to help with things like moving heavy stuff in warehouses or doing tasks that are too dangerous for people. We saw demonstrations of humanoid robots, like Agility Robotics’ Digit, which is already being tested in places like Amazon warehouses. The idea is that these robots can fill gaps where there aren’t enough workers, especially with more companies bringing manufacturing back home.

Advancements in Autonomous Driving Capabilities

Self-driving cars have been a hot topic for years, and CES 2025 showed they’re getting much closer to being a common sight. Companies like Hyundai and BMW showed off vehicles with much better self-driving features. It’s not just about cruise control anymore; it’s about cars that can handle more complex driving situations safely. Nvidia is also working with car companies to develop the systems needed for these advanced driver-assistance features, aiming for a future where driving is safer and maybe even a bit more hands-off.

Humanoid Robots: Bridging the Gap Between Science Fiction and Reality

Remember when robots that looked and acted like us were just stuff from movies? Well, CES 2025 showed us that’s not the case anymore. These human-like machines are moving out of the labs and into real-world jobs. It’s pretty wild to see.

The Commercialization of Humanoid Robotics

Not long ago, AI-powered robots that could hold down a real job were a far-off idea. Now, companies are actually selling them. This isn’t just about cool tech demos; it’s about robots that can do actual work. Think about factories and warehouses, places with lots of repetitive or tough jobs. Humanoids are starting to fill those roles. This shift is creating a whole new market, not just for the robots themselves, but for all the parts that make them tick, like the AI brains, the sensors that let them see, and the software that controls them.

Agility Robotics’ Digit Leading the Way

One robot that’s really making waves is Digit from Agility Robotics. It’s designed for warehouse work, and it’s already being tested by big names like Amazon. Digit is one of the first humanoids you can actually buy for these kinds of jobs. The demand is so high that Agility is building a whole new factory just to make more of them, aiming for 10,000 units a year. That tells you something about how much businesses want this kind of help.

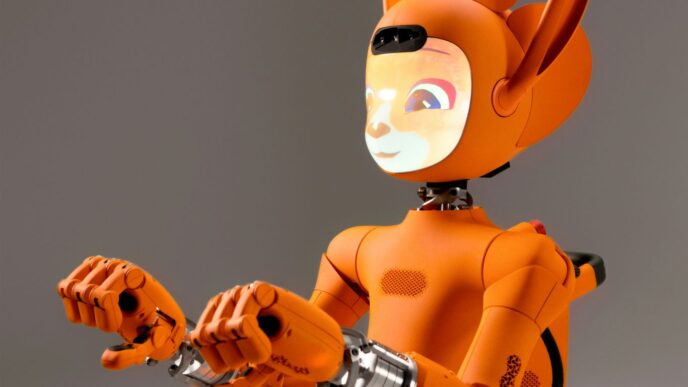

Realbotix Melody: Enhancing Human-Like Interaction

Then there’s Melody from Realbotix. This robot is built to interact more like a person. It can make eye contact, talk, and move in ways that seem pretty natural. Its eyes have cameras that help it follow movement and recognize things. While Digit is focused on physical tasks, Melody seems to be pushing the boundaries of how robots can communicate and connect with us, making them feel less like machines and more like… well, something else entirely.

Hyundai Motor Group’s Ambitious AI Robotics Strategy

Hyundai Motor Group is really pushing forward with its plans for robots, and they’re calling it the ‘Partnering Human Progress’ strategy. It sounds like they want robots to work alongside people, not just replace them. They’re planning to show off a lot of this at CES 2026. It’s not just about building robots; it’s about how these robots fit into everything the group does, from making cars to moving things around.

Unveiling the ‘Partnering Human Progress’ Theme

This theme is all about how robots can help people do their jobs better and make life easier. It’s not just about automation for automation’s sake. Hyundai wants robots to be helpful partners. They’re talking about using their whole network of businesses – like car manufacturing, construction, and logistics – to make this happen. The idea is to create a system where robots can learn and improve, and where humans and robots can collaborate effectively.

Boston Dynamics’ New Atlas Robot Debuts

One of the big reveals will be the new Atlas robot from Boston Dynamics, which is part of Hyundai. This isn’t just a concept anymore; they’re bringing it out for everyone to see. This next-generation Atlas is a big step towards making humanoid robots something we actually see in factories and other workplaces. It’s designed to be adaptable and safe, which is pretty important if you’re going to have robots working around people.

Leveraging the Group Value Network for AI Robotics Expansion

Hyundai Motor Group has a plan to use what they call their ‘Group Value Network’ to speed up how they develop and use robots. This means they’re looking at the whole process, from designing the robots to how they’re made, trained, and used. They’re also talking about a ‘Software-Defined Factory’ approach, which sounds like they’re using smart software and data to make their factories more flexible and efficient. They even have a goal to produce 30,000 humanoid robots annually by 2028, which is a pretty huge number. This integrated approach is how they plan to grow their AI robotics business across all their different companies.

Revolutionizing Industries with Autonomous Machinery

It’s pretty wild how much automation is changing the way big industries work. This year at CES, it felt like every other booth was showing off some kind of machine that could do a job all by itself. We’re talking about farms, construction sites, and even warehouses getting a serious tech upgrade.

John Deere and Caterpillar Drive Automation in Agriculture and Construction

Companies like John Deere and Caterpillar are really pushing the envelope here. They’re not just talking about making machines smarter; they’re actually putting them to work. Think tractors that can plant seeds with pinpoint accuracy, or excavators that can dig trenches without a human operator needing to be right there. This isn’t just about making things faster; it’s about doing jobs that are often dangerous or just plain tedious, with a lot more precision. They’re using AI to help these machines understand their surroundings, avoid obstacles, and complete tasks more efficiently than ever before. It’s a big step towards making these heavy industries more productive and, honestly, a bit safer.

Electrification and Sustainability in Heavy Equipment

Beyond just being autonomous, a lot of this new machinery is also going electric. It makes sense, right? If you’re building a smarter machine, you might as well make it cleaner too. We saw a lot of focus on battery-powered equipment, which cuts down on emissions and noise pollution. This is a pretty big deal, especially for industries like construction and mining that have a large environmental footprint. The goal seems to be creating machines that are not only self-sufficient in their operation but also kinder to the planet.

Addressing Workforce Shortages with Intelligent Machines

Let’s be real, finding people to do certain jobs, especially in agriculture and construction, has been tough. That’s where these autonomous machines really shine. They can fill in the gaps and keep projects moving even when there aren’t enough workers. It’s not about replacing people entirely, but more about giving businesses the tools they need to operate effectively. This technology can handle the repetitive or physically demanding tasks, freeing up human workers for more complex or supervisory roles. It’s a practical solution to a growing problem, and it’s exciting to see how it’s unfolding.

The Evolving Landscape of Autonomous Transportation

This year’s CES really hammered home how close we are to seeing self-driving cars and trucks everywhere. It wasn’t just talk; companies showed off real progress, especially with Level 4 autonomy. That’s the level where the vehicle handles all of the driving on its own within a defined set of conditions, such as a mapped service area or a highway route, without a human needing to jump in. We’re moving beyond the current Level 2 systems, which can steer and control speed but still need the driver’s constant attention.

Progress Towards Level 4 Autonomy in Vehicles

Several companies are pushing hard for Level 4. Think long-haul trucking – companies like Kodiak have already logged tens of thousands of miles with their autonomous trucks. They’re working with big names like Continental and Nvidia to get these trucks on the road, with mass production of some systems planned for around 2027. It’s not just trucks, either. The robotaxi world is heating up. Zeekr, a brand from Geely, showed off their RT robotaxi, which is being developed with Waymo for ride-hailing services. They’re even sending it over to the US for testing soon.

Innovations in LiDAR and Sensor Technology

What makes all this possible? Better sensors, especially LiDAR. CES had a bunch of new LiDAR tech that’s way more powerful and, importantly, cheaper. Some new systems boast resolutions 30 times better than what’s common now, and they might cost less than $200. This means we could see these advanced sensors showing up in more affordable cars, not just luxury models. Plus, there are smart software solutions, like those from Helm.AI, that can generate tons of training data for these self-driving systems much faster than before. Instead of thousands of hours of real-world driving, they can create massive datasets from just a few hours of data, which really speeds things up.

The Future of Robotaxis and Long-Haul Trucking

So, what does this all mean for us? Well, expect to see more robotaxis popping up in cities for ride-sharing. They’re getting closer to being a regular sight. And for shipping and logistics, autonomous trucks could change how goods are moved across the country. The combination of advanced AI, better sensors, and more powerful computing is making autonomous transportation a reality, not just a futuristic dream. It’s going to take time, and there are still hurdles, but the direction is clear: our roads are going to look very different in the coming years.

Enabling the Future: AI Computing and Chipset Innovations

CES 2025 really hammered home how much AI is moving beyond just software and into the physical world. A big part of that is the hardware – the chips and computing platforms that make all this intelligence possible. It’s not just about faster processors anymore; it’s about specialized silicon designed to handle complex AI tasks.

Nvidia’s Blackwell and Cosmos Platforms

Nvidia was definitely a major player here. They showed off their Blackwell architecture, which is powering new gaming chips like the RTX 50 series, aiming for graphics that look almost like movies. But what’s really interesting for robotics and AI is their Cosmos platform. Think of it as a way to generate realistic video that robots and self-driving cars can learn from. Instead of collecting tons of real-world data, which takes ages and costs a fortune, Cosmos creates synthetic training data. This should speed up how quickly robots can understand what’s around them, kind of like how large language models help chatbots talk. They also talked about Project DIGITS, which is basically a personal AI supercomputer that fits on your desk. It’s meant to give regular folks, like students or professionals, access to serious AI power without needing a giant data center.

AI PC Chipsets from Intel, AMD, and Qualcomm

It wasn’t just Nvidia, though. The whole AI PC thing is really taking off. AMD showed off their Ryzen AI chips, including the AI 300 series, which are for running AI applications on laptops for both regular people and businesses. Intel had their new Core Ultra 200V chips, aimed at business computers. And Qualcomm introduced their Snapdragon X chip for Windows laptops. The idea is to have AI capabilities built right into the computer, making everyday tasks smarter and faster.

Edge Computing and IoT with Generative AI Chips

Beyond the big computers and laptops, there’s a whole world of smaller devices and specialized applications. Companies like Ambarella are making chips for edge computing and the Internet of Things (IoT). Their new generative AI chips are pretty neat – they can handle decoding 12 video streams at once, process that video, and run multiple AI models all at the same time. This kind of power on smaller devices is what will make smart cameras, industrial sensors, and other connected gadgets much more capable. It means more AI processing can happen right where the data is created, instead of sending everything to the cloud.

The Road Ahead

Put it all together, and CES 2025 really showed that robots aren’t just for factories anymore. They’re showing up everywhere, from helping out on farms and construction sites to maybe even driving our cars someday. Companies like Hyundai are talking about making tens of thousands of humanoid robots a year, and you’ve got machines like Boston Dynamics’ Atlas getting ready for real work. It feels like we’re moving past just talking about the future and actually building it. Things are getting smarter, more automated, and honestly, pretty exciting. It’s going to be interesting to see how quickly all these new ideas actually make their way into our everyday lives.