So, the EU has adopted a big new rule for AI, called the AI Act, which the European Commission first proposed in 2021. It’s a major move to get a handle on how artificial intelligence is developed and used across the EU. Think of it like a rulebook designed to make sure AI is safe and fair, while still letting companies innovate. It’s a complex topic, and this article aims to break down what it all means, especially for digital medical products, and how Europe stacks up against other places in the world.

Key Takeaways

- The EU AI Act sorts AI systems by how risky they are, with stricter rules for high-risk ones like those in medical devices.

- Providers of AI systems have the main responsibilities, but users of high-risk AI also have duties.

- There are specific rules for general-purpose AI models, especially the really powerful ones.

- The AI Act sets out a timeline for when different parts of the rules will start applying, with high-risk systems getting more time.

- This new AI regulation from the European Commission aims to balance safety and innovation, impacting everything from medical tech to global competition in AI.

Understanding the European Commission’s AI Regulation

At its core, the AI Act is a set of rules for artificial intelligence, aiming to make sure AI is developed and used in a way that’s good for everyone. Think of it as a framework to guide how this technology grows and is put to work.

Foundational Principles and Objectives of the AI Act

The AI Act is built on a few core principles. First off, AI systems should be safe and respect people’s fundamental rights. That’s a big one. The Act also wants to give a boost to AI innovation within the EU, so companies can create and use this tech without too much red tape. Legal clarity is another goal: making sure businesses know what’s expected so they can invest with confidence. And finally, the Act is designed to tackle the risks that come with certain AI uses, especially where they could affect health, safety, or fundamental rights.

A Comprehensive and General Approach to AI Governance

Unlike some laws that focus on just one type of product, the AI Act takes a broader view. It’s structured in a way that covers AI generally, much like how the GDPR handles data protection. This means it’s not just about specific AI applications but about the technology itself and how it’s governed across the board. The goal is to create a consistent set of rules that apply widely.

Complementing Existing Data Protection Frameworks

This new AI regulation doesn’t exist in a vacuum. It works alongside existing laws, like the GDPR. While GDPR looks after personal data, the AI Act zeroes in on the unique challenges AI presents, including how AI systems might process data in new ways. It’s about adding another layer of protection and guidance where needed, making sure the digital landscape is well-covered.

The Risk-Based Classification of AI Systems

So, the EU’s AI Act doesn’t just treat all AI the same. That wouldn’t make much sense, right? Instead, they’ve come up with a system that sorts AI based on how much risk it might pose. Think of it like a tiered approach, where the more potential for harm, the stricter the rules.

Categorizing AI by Risk Level

The core idea here is that not all AI is created equal when it comes to potential problems. The Act breaks AI down into different categories, and the level of scrutiny depends entirely on where an AI system lands. It’s a way to focus the heavy lifting on the AI that really needs it.

Here’s a general breakdown of how they’re thinking about it:

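- Unacceptable Risk: These are AI systems that are just not allowed. Things like social scoring (whether by public authorities or private companies) or AI that uses manipulative or deceptive techniques to push people into decisions that cause them significant harm are banned outright. There are only a few narrowly defined exceptions, mainly around law-enforcement use of real-time remote biometric identification in public spaces.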

- High-Risk: This is where a lot of the detailed rules come into play. These are AI systems that could impact safety or fundamental rights. We’ll get into this more, but think medical devices or AI used in critical infrastructure.

- Limited Risk: For AI in this category, the main requirement is transparency. People need to know when they’re interacting with AI, especially if it’s generating content.

- Minimal Risk: Most AI applications out there fall into this bucket. The Act doesn’t impose many new obligations here, acknowledging that these systems generally don’t pose a significant threat.

Focus on High-Risk AI Systems and Medical Devices

When we talk about ‘high-risk’ AI, it’s a pretty broad category, but it’s definitely where the AI Act puts a lot of its energy. These are systems that, if they go wrong, could cause real damage. This includes AI used as a safety component of a product (or AI that is itself the product) covered by existing EU safety laws. So, if an AI is part of a toy, a car, or a medical device, and that product already has to go through a third-party conformity assessment, the AI itself is considered high-risk.

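Beyond products, the Act lists specific areas (in its Annex III) that are treated as high-risk. The exception is a system that doesn’t pose a significant risk in practice, for example because it only performs a narrow procedural task. The list covers things like: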

- AI used in managing critical infrastructure (like power grids).

- AI in education and job training.

- AI that affects employment and how workers are managed.

- AI that decides access to essential public and private services, such as benefits or credit.

- AI in law enforcement.

- AI for managing migration and border control.

- AI that helps judges and courts research, interpret, or apply the law.

For these high-risk systems, providers have to do a lot of homework before they can even think about putting them on the market. This includes setting up risk management systems, making sure their data is good quality, and creating detailed technical documentation so authorities can check their work. It’s a big responsibility.
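To make the tiers a bit more concrete, here’s a minimal Python sketch of a first-pass triage. It assumes a compliance team has already answered a handful of yes/no questions about a system.

The `AISystemFacts` fields and the `classify` function are hypothetical names invented for illustration, and the logic is a heavy simplification. It covers two routes to high-risk:

- **Product route:** AI in (or as) a product that needs a third-party conformity assessment.
- **Use-case route:** the Annex III list, including its carve-out for systems that don’t pose a significant risk. That carve-out never applies to systems that profile people.

Real classification turns on the detailed legal definitions, and a high-risk system can carry transparency duties as well.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "high-risk"
    LIMITED = "transparency duties"
    MINIMAL = "no new obligations"


@dataclass
class AISystemFacts:
    """Hypothetical yes/no answers about one AI system (illustrative only)."""
    uses_prohibited_practice: bool = False       # e.g. social scoring
    product_or_safety_component: bool = False    # covered by EU product-safety law
    needs_third_party_assessment: bool = False   # e.g. notified-body review
    annex_iii_use_case: bool = False             # e.g. hiring, credit scoring
    poses_significant_risk: bool = True          # Annex III carve-out test
    profiles_people: bool = False                # profiling blocks the carve-out
    talks_to_people_or_generates_content: bool = False


def classify(facts: AISystemFacts) -> RiskTier:
    """Rough first-pass triage; not a substitute for legal analysis."""
    if facts.uses_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    # Product route: AI in (or as) a regulated product needing third-party review.
    if facts.product_or_safety_component and facts.needs_third_party_assessment:
        return RiskTier.HIGH
    # Use-case route: a listed area, unless the narrow carve-out applies.
    if facts.annex_iii_use_case and (facts.poses_significant_risk or facts.profiles_people):
        return RiskTier.HIGH
    if facts.talks_to_people_or_generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(classify(AISystemFacts(annex_iii_use_case=True)))  # RiskTier.HIGH
    print(classify(AISystemFacts(talks_to_people_or_generates_content=True)))  # RiskTier.LIMITED
    print(classify(AISystemFacts()))  # RiskTier.MINIMAL
```

The checks run from most to least severe, so a system lands in the strictest tier it qualifies for.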

Transparency Obligations for Limited Risk AI

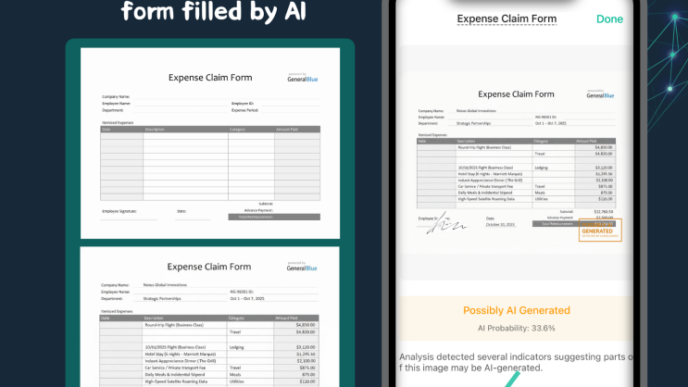

Now, not all AI is high-risk, but that doesn’t mean it gets a free pass. For AI systems in the ‘limited risk’ category, the main focus is on making sure people know what’s going on. A big example here is generative AI, like the large language models that can create text or images. These aren’t automatically classified as high-risk, but they do come with specific transparency duties. The providers of the general-purpose models underneath them also have some extra obligations of their own.

What does this mean in practice?

- Disclosure: People should be told when they’re interacting with an AI system, and AI-generated content, including deepfakes, should be marked or labeled as such. It’s about being upfront.

- Preventing Illegal Content: The AI models need to be designed so they don’t churn out illegal material.

- Copyright: Providers of general-purpose AI models need a policy for complying with EU copyright law, and they must publish a sufficiently detailed summary of the content used to train the model. This is to respect intellectual property rights.

It’s a way to keep things fair and honest, even for AI that isn’t directly impacting safety or fundamental rights in the same way as the high-risk systems.

Key Obligations Under the AI Act

So, what does this all mean for folks actually building or using AI? The AI Act lays out some pretty clear rules, and they mostly fall on the shoulders of those creating high-risk AI systems. Think of it like this: if you’re developing something that could potentially cause harm, you’ve got more hoops to jump through.

Responsibilities for Providers of High-Risk AI

If you’re placing a high-risk AI system on the EU market or putting it into service here, you’ve got a list of duties. This applies whether your company is based in Europe or somewhere else. It can even reach providers outside the EU when the AI’s output is used here. You’ll need to make sure your system is built with safety and accuracy in mind from the get-go. This includes things like:

- Having a solid plan for how you’ll manage risks. You need to know what could go wrong and have steps in place to stop it.

- Keeping good records. This means logging data and system performance so you can look back if something happens.

- Making sure your AI is accurate, robust, and secure against tampering. No one wants a system that’s all over the place.

- Having human oversight. There needs to be a way for people to check and, if needed, step in.

- Being clear about what your AI can and can’t do. Don’t oversell it.

Obligations for Users and Deployers of AI

If you use a high-risk AI system in a professional capacity (the Act calls you a ‘deployer’), rather than for purely personal, non-professional purposes, you’ve got some responsibilities too. These are generally lighter than what the providers have to do, but they’re still important. You need to:

- Use the AI system according to its instructions and for its intended purpose.

- Keep an eye on how the AI is performing and report any issues you notice.

- Make sure that any data you feed into the system is appropriate and handled correctly.

- Be aware of the AI’s limitations and potential risks.

Requirements for General-Purpose AI Models

Then there are the big players: general-purpose AI (GPAI) models. These are the foundational models that can be adapted for many different tasks. All GPAI providers have baseline duties, and the finer details are being fleshed out through Commission guidelines and a Code of Practice. Models considered to pose "systemic risk" get extra obligations on top. This involves things like:

- Evaluating the model and assessing and mitigating systemic risks (for the most powerful models).

- Providing documentation about the model’s capabilities and limitations.

- Cooperating with the EU AI Office.
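How does a model end up with "systemic risk"? The Act presumes it when the cumulative compute used to train the model exceeds 10^25 floating-point operations (FLOPs). The Commission can also designate models on other criteria and can update the threshold.

As a rough sketch, you can estimate where a model lands using a common rule of thumb for dense transformer models: about 6 FLOPs per parameter per training token. That is an engineering heuristic, not something the Act defines. The parameter and token counts below are made-up examples, and `estimate_training_flops` and `presumed_systemic_risk` are hypothetical helpers.

```python
# Article 51(2): a GPAI model is presumed to have high-impact capabilities
# (and thus systemic risk) when cumulative training compute exceeds 10^25 FLOPs.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rule-of-thumb estimate for dense transformers: ~6 FLOPs per parameter
    per training token. This heuristic is an assumption, not part of the Act."""
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    # The Act says "greater than", so exactly 1e25 FLOPs does not trigger it.
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical models: (parameters, training tokens)
    for params, tokens in [(70e9, 15e12), (400e9, 15e12)]:
        flops = estimate_training_flops(params, tokens)
        print(f"{flops:.1e} FLOPs -> presumed systemic risk: {presumed_systemic_risk(flops)}")
```

Under this heuristic, the smaller example comes in below the line and the larger one above it. A real determination would rely on the provider’s actual compute accounting rather than an estimate.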

It’s a lot to keep track of, but the goal is to make sure AI develops in a way that benefits everyone without causing undue harm.

Implementation and Enforcement of the AI Act

So, how does this whole AI Act thing actually get put into practice? It’s not just a document that magically appears and everyone follows it. There’s a whole system being set up to make sure it works.

The Role of the EU AI Office

Think of the EU AI Office as the main referee. This office, which is part of the European Commission, keeps an eye on how things are going, especially with the big general-purpose AI models, and checks whether companies are playing by the rules. The office can step in if a company that makes a general-purpose AI model messes up, or if there’s a big problem that could affect a lot of people. It can ask for information, investigate, and even evaluate a model if there are doubts about its compliance. Companies that build on a general-purpose AI model (downstream providers) can also complain to the AI Office if they think the model’s provider (the upstream provider) isn’t following the rules. Beyond enforcement, the office helps put the Act into practice, for example by preparing guidelines and facilitating the codes of practice.

Timelines for AI Act Compliance

When does all this start? Well, it’s not all at once. The AI Act has different deadlines for different parts of the rules. It’s a bit like a staggered rollout.

- Prohibited AI systems: These are the ones that are just not allowed. The ban on these started applying on 2 February 2025, six months after the Act entered into force on 1 August 2024.

- Codes of Practice: These are guidelines that help providers of general-purpose AI models figure out how to follow the rules. They were due by 2 May 2025, about nine months after entry into force.

- General-purpose AI (GPAI) models: The rules for these, including transparency requirements, kicked in on 2 August 2025, 12 months after entry into force.

- High-risk AI systems: These get more time. Obligations for the high-risk systems listed in Annex III apply from 2 August 2026, 24 months after entry into force. High-risk AI that is a safety component of a regulated product (such as a medical device) has until 2 August 2027, 36 months after entry into force.
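Because every deadline is counted from the same starting point, the staggered rollout is easy to track in code. Here’s a minimal Python sketch based on the Act’s entry into force on 1 August 2024 and the application dates listed above. `MILESTONES`, `months_after_entry`, and `in_effect` are hypothetical names for illustration, and the labels are simplified summaries, not legal text.

```python
from datetime import date

ENTRY_INTO_FORCE = date(2024, 8, 1)

# Application dates per Articles 56(9) and 113 of Regulation (EU) 2024/1689
# (labels are simplified summaries).
MILESTONES = [
    (date(2025, 2, 2), "Prohibited AI practices banned"),
    (date(2025, 5, 2), "Codes of practice for general-purpose AI due"),
    (date(2025, 8, 2), "General-purpose AI model obligations apply"),
    (date(2026, 8, 2), "Annex III high-risk obligations apply"),
    (date(2027, 8, 2), "High-risk obligations for AI in regulated products apply"),
]


def months_after_entry(d: date) -> int:
    """Whole calendar months between entry into force and a milestone."""
    return (d.year - ENTRY_INTO_FORCE.year) * 12 + (d.month - ENTRY_INTO_FORCE.month)


def in_effect(on: date) -> list[str]:
    """Milestones that have been reached on a given date."""
    return [label for when, label in MILESTONES if when <= on]


if __name__ == "__main__":
    for when, label in MILESTONES:
        print(f"{when.isoformat()} (+{months_after_entry(when)} months): {label}")
    print(in_effect(date(2025, 9, 1)))
```

Each milestone falls on the 2nd of the month because the Act’s dates land one day after the plain month count from 1 August 2024.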

National Implementation Plans and Member State Responsibilities

While the EU AI Office is the central body, each EU country also has a part to play. Member states have to designate national authorities, including market surveillance authorities, to supervise and enforce the rules within their borders. These authorities work with the EU AI Office, but they’re the ones on the ground, making sure things happen locally. Each country also has to set up at least one AI regulatory sandbox. This is a controlled environment where companies can develop and test AI under the supervision of regulators, and it’s meant to help smaller companies and startups compete.

Impact on Regulated Digital Medical Products

AI in Medical Devices Under the AI Act

The EU AI Act is a big deal for digital medical products, especially those using AI and machine learning. Think about AI-powered diagnostic tools or software that helps doctors make decisions. These aren’t just regular apps; they’re often regulated medical devices already, meaning they have to follow strict rules like the EU’s Medical Device Regulation (MDR) or the In Vitro Diagnostic Regulation (IVDR). Now, with the AI Act, they’re also being looked at through the lens of AI risk.

Most AI systems found in medical devices are automatically put into the "high-risk" category under the AI Act. This isn’t a surprise, given the potential impact on patient health and safety. Being classified as high-risk means a whole set of new requirements kick in.

Here’s a quick rundown of what that means:

- Risk Management: You’ll need a solid plan to identify, assess, and reduce any risks associated with the AI. This ties into the existing risk management processes for medical devices, but with an added AI focus.

- Data Governance: How you handle data is super important. This includes making sure the data used to train and test the AI is good quality, suitable, and doesn’t have biases that could harm people. It also means being mindful of the specific situations where the AI will be used.

- Transparency: The system has to come with clear instructions for use, covering its capabilities, limitations, and level of accuracy, and people should be told when they’re interacting directly with an AI system.

- Human Oversight: There needs to be a way for humans to oversee the AI’s operation, especially in critical situations.

Providers of these AI medical devices, whether they’re based in the EU or elsewhere, will need to make sure their products meet these new AI Act standards on top of the existing medical device regulations. This could mean updating technical documentation, quality management systems, and post-market surveillance procedures.
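Why do most AI medical devices end up high-risk? Under the product route, the trigger is whether the device has to be reviewed by a notified body, the third-party assessor used under the MDR and IVDR. Under MDR Rule 11, software that provides information used for diagnostic or therapeutic decisions is at least class IIa, which already requires a notified body.

Here’s a minimal Python sketch of that link, assuming a simplified mapping from device class to notified-body involvement. The tables and the `high_risk_via_product_route` function are hypothetical. They ignore special cases and cover only the product-based route.

```python
# Simplified: does a device class normally require a notified body?
# Omits special cases such as MDR class I sterile/measuring/reusable devices
# and IVDR class A sterile devices, which involve a notified body in part.
MDR_NEEDS_NOTIFIED_BODY = {"I": False, "IIa": True, "IIb": True, "III": True}
IVDR_NEEDS_NOTIFIED_BODY = {"A": False, "B": True, "C": True, "D": True}


def high_risk_via_product_route(regulation: str, device_class: str) -> bool:
    """True if an AI medical device (or AI safety component) would fall under
    the AI Act's product-based high-risk rule, in this simplified model."""
    tables = {"MDR": MDR_NEEDS_NOTIFIED_BODY, "IVDR": IVDR_NEEDS_NOTIFIED_BODY}
    if regulation not in tables:
        raise ValueError(f"unknown regulation: {regulation!r}")
    return tables[regulation][device_class]


if __name__ == "__main__":
    # Diagnostic decision-support software is typically at least class IIa
    # under MDR Rule 11, so it usually lands in the high-risk tier.
    print(high_risk_via_product_route("MDR", "IIa"))  # True
    print(high_risk_via_product_route("MDR", "I"))    # False
```

The sketch only answers the classification question. The actual requirements still come from the AI Act and the MDR or IVDR together.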

Balancing Regulation with Innovation in Healthcare AI

It’s a tricky balance, right? On one hand, we want to make sure AI in healthcare is safe and reliable. Nobody wants a faulty AI misdiagnosing a serious condition. On the other hand, we don’t want to stifle innovation. The EU has a lot of smart people working on AI for medicine, and the goal is to encourage that, not shut it down.

The AI Act tries to do this by focusing on the risks. If an AI system is low-risk, the rules are much lighter. But for high-risk stuff, like AI that directly influences medical treatment, the rules are tougher. This approach aims to let good ideas flourish while putting guardrails around the ones that could cause harm.

However, there are concerns, especially for smaller companies. The medical device world is already complex, and adding the AI Act’s requirements on top of things like the MDR can be a lot. It might mean more paperwork, higher costs, and longer development times. The hope is that the regulations are clear enough to guide companies without becoming an impossible hurdle.

Addressing Global Health Disparities with AI Diagnostics

AI has the potential to really help bridge gaps in healthcare, especially in areas where access to medical expertise is limited. Think about remote regions or developing countries. AI-powered diagnostic tools could bring advanced medical insights to places that currently lack them.

However, for this to work, these AI tools need to be developed and deployed responsibly. The AI Act’s focus on data quality and bias is particularly relevant here. If AI is trained only on data from one specific population group, it might not work well for others, potentially worsening existing health disparities.

So, while the AI Act is primarily an EU regulation, its principles about fair data and robust testing could have a ripple effect globally. It pushes for AI that is not only effective but also equitable. This means developers need to think about diverse populations and contexts from the very beginning of the design process. Ultimately, the goal is to ensure that AI in healthcare benefits everyone, not just a select few.

Navigating the Global AI Landscape

So, the EU AI Act is a big deal, right? But what happens when you zoom out and look at the rest of the world? It’s not just Europe making rules for AI. Other countries are doing their own thing, and honestly, it gets pretty complicated.

Geopolitical Considerations and Dual-Use AI Technologies

Think about it: AI isn’t just for making apps or helping doctors. Some AI tech can serve both civilian and military purposes, including some not-so-great ones. This is what people mean by "dual-use" technologies. When countries are already not seeing eye-to-eye, like, say, the US and China, these AI advancements can become a real sticking point. Imagine one country restricting access to certain AI tools because of trade disputes. This can really mess things up for everyone else, especially when it comes to important areas like healthcare. It’s like a global game of chess, but with really high stakes.

Comparing EU AI Regulation with Other Jurisdictions

Europe’s approach with the AI Act is pretty thorough, focusing on risk levels. But other places are taking different paths. Some might be more focused on encouraging rapid development, maybe with fewer rules upfront. Others might have specific rules for certain industries. It’s a bit like looking at different roadmaps to get to the same destination.

Here’s a quick look at how things might stack up:

- EU AI Act: Risk-based, with strict rules for high-risk AI.

- United States: More sector-specific, with a focus on innovation and voluntary frameworks.

- China: Emphasis on state control and national security alongside AI development.

- Canada: Developing a framework that balances innovation with safety and ethics.

It’s a real balancing act. Europe wants to be a leader in trustworthy AI, but they also don’t want to get left behind in the global race.

Promoting Innovation and Competition in the EU AI Market

This is the million-dollar question, isn’t it? How does the EU AI Act, with all its rules, actually help European companies compete? On one hand, clear rules can build trust, which is good for business. People are more likely to use AI they feel is safe and ethical. On the other hand, if the regulations are too heavy, it could slow down smaller companies or startups that don’t have the resources to deal with all the compliance. The goal is to create an environment where AI can grow responsibly, without stifling the very innovation it’s trying to encourage. It’s a tough line to walk, and everyone will be watching to see how it plays out.

Wrapping Up the AI Act

So, the EU AI Act is here, and it’s a pretty big deal for how artificial intelligence will work in Europe. It’s not just about banning the really bad stuff; it’s also about setting rules for AI that could be risky, like in healthcare or critical infrastructure. They’ve put a whole system in place that classifies AI by how much risk it poses. At one end, systems are banned outright; in the middle are strict requirements; at the other end are light transparency duties or none at all. It’s going to take some time for everything to really kick in, with different rules applying at different points over the next few years. Companies developing or using AI, especially the high-risk kinds, will need to pay close attention to these new requirements. It’s a complex piece of law, and figuring out exactly how it all plays out will be an ongoing process, but the EU is definitely trying to lead the way in AI governance.

Frequently Asked Questions

What is the main goal of the EU AI Act?

The main goal is to make sure AI systems used in Europe are safe, respect people’s fundamental rights, and are trustworthy. It also aims to help businesses in Europe develop and use AI responsibly while staying competitive with the rest of the world.

How does the EU AI Act sort different AI systems?

The Act sorts AI systems by how much risk they might cause. There’s ‘unacceptable risk’ (which is banned), ‘high-risk’ (which has strict rules), ‘limited risk’ (which needs transparency), and ‘minimal risk’ (which has few rules).

What are ‘high-risk’ AI systems?

These are AI systems that could potentially harm people’s safety, fundamental rights, or health. Examples include AI used in critical infrastructure, medical devices, or for important decisions like job applications or loan approvals.

Who has to follow the rules in the AI Act?

The rules mostly apply to people and companies who create (providers) or use (deployers) AI systems. If you make an AI system or use one for your job, you likely have responsibilities, especially if it’s a high-risk system.

When do these AI rules start applying?

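The rules are being put into place over time. Some parts, like the ban on unacceptable AI, started applying in February 2025, and the rules for general-purpose AI models followed in August 2025. Rules for most high-risk AI systems apply from August 2026, two years after the Act entered into force. High-risk AI built into regulated products like medical devices has until August 2027, giving companies time to get ready.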

How does the AI Act affect AI in healthcare, like medical devices?

AI used in medical devices is usually considered ‘high-risk’ because it can directly impact patient health. This means these AI systems must meet strict safety and performance standards, and companies need to follow specific rules for developing and selling them.