OpenAI robotics is changing fast, and it’s not just for tech experts anymore. The way robots learn and work is shifting, with OpenAI leading the charge. What started as a small research group is now shaping how machines help in everyday life and business. There’s a lot of talk about big ideas like machines that think more like us, smarter factories, and the risks we all need to think about. In this article, we’ll look at where OpenAI robotics is heading in 2026, why it matters, and what it could mean for people, jobs, and the world.

Key Takeaways

- OpenAI robotics is moving from research to real-world use, changing industries and daily life.

- Efforts are focused on making robots smarter and more adaptable, aiming for machines that can think and learn more like humans.

- Manufacturing is set to benefit from AI that can quickly adjust to changes, making factories more flexible and efficient.

- Working with others through partnerships and open-source projects helps OpenAI robotics grow and stay up to date.

- Concerns about data, privacy, and trust are being addressed as robots become a bigger part of society.

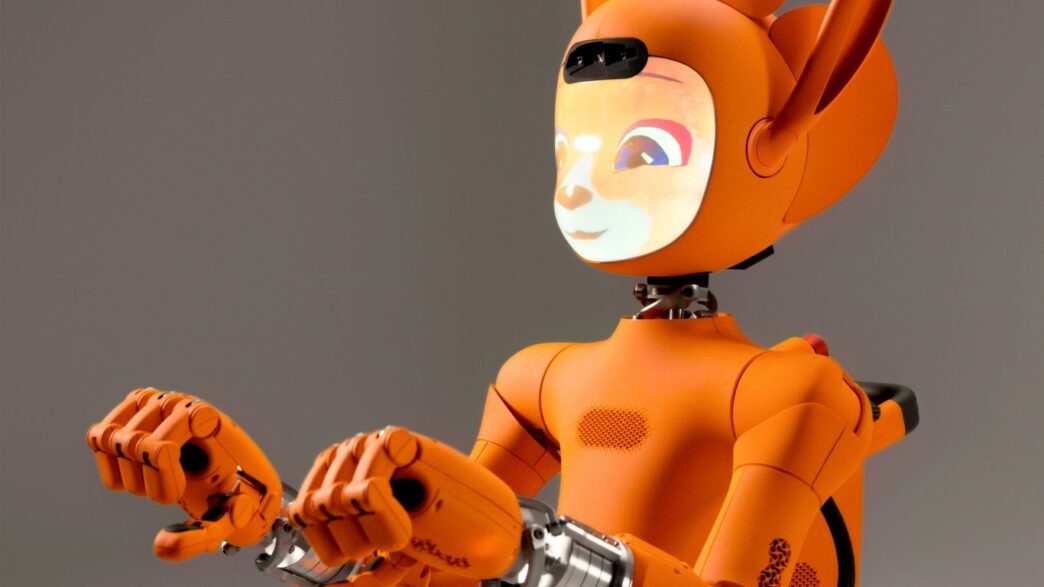

The Evolving Landscape of OpenAI Robotics

It feels like just yesterday that OpenAI was primarily known as a research lab, quietly working on big ideas. Now, though? They’re right in the thick of it, shaping how we think about and use artificial intelligence. It’s a pretty big shift, moving from the academic side of things to being a real industry player. This change isn’t just about them; it’s about how AI itself is developing and becoming a part of our everyday lives, and frankly, our work lives too.

OpenAI’s Journey: From Research Lab to Industry Leader

OpenAI started with a clear goal: to make sure artificial intelligence, or AI, helps everyone. They weren’t just tinkering; they were building towards something significant. Over the years, they’ve gone from publishing papers to releasing tools that people and businesses actually use. Think about their work on large language models and image generation – these aren’t just theoretical concepts anymore. They’re being applied in real ways, changing how we create content, write code, and even interact with information. This transition from a research-focused group to a company with tangible products has been quite something to watch.

Why OpenAI Matters: Shaping the Future of Artificial Intelligence

So, why all the fuss about OpenAI? Well, they’re not just another tech company. Their commitment to developing AI that’s safe and benefits humanity sets them apart. They’re not just chasing the next big technological leap; they’re trying to do it responsibly. This approach is important because AI is becoming so powerful. It’s influencing everything from how we get our news to how businesses operate. By focusing on ethical development and trying to be open about their work, OpenAI is playing a big part in deciding what the future of AI looks like. It’s a future where AI could help solve some pretty big global problems, but it needs to be done right.

The rapid progress in AI means we need to be thoughtful about its development. OpenAI’s focus on safety and broad benefit is a key part of this ongoing conversation.

Here’s a look at some key areas of their development:

- Model Development: Creating increasingly capable AI models.

- Safety Research: Investigating and mitigating potential risks associated with advanced AI.

- Accessibility: Finding ways to make AI tools available to a wider audience.

- Ethical Guidelines: Working to establish principles for responsible AI deployment.

Pioneering General AI: Moving Closer to Human-Like Intelligence

The Vision Behind OpenAI: Revolutionising Technology for Humanity

When OpenAI first came onto the scene, it promised something different. The team wanted to build AI that helps people, not just companies or governments. That meant making technology that anyone could use, regardless of their background. Over time, this idea has grown—now OpenAI is aiming for general AI that starts to look and act more like a human. Their end goal is to create systems that understand, reason, and adapt the way a person can, but without risking anyone’s safety or privacy.

Some main priorities have shaped OpenAI’s push for general intelligence:

- Making AI that works outside of a controlled lab—so it understands people’s messy, unpredictable lives.

- Focusing on beneficial results, not just technical progress.

- Involving a wide mix of testers and users, so the technology is useful for everyone, not just a select few.

Sometimes it can seem like moving from clever chatbots to general intelligence is just hype, but the stuff happening in OpenAI’s back rooms suggests real change is closer than most people think.

Achieving AGI: The Culmination of Dedicated Research

Getting from ‘smart tools’ to true artificial general intelligence (AGI) has needed years of careful work. Teams have tackled all sorts of problems: getting algorithms to learn on their own, letting systems make mistakes without causing harm, and spotting the difference between what’s technically possible and what’s truly responsible.

Key milestones on this journey include:

- Training AI to solve hard problems using limited information—like humans often do.

- Building models that can switch from talking to writing to ‘seeing’ visual data and back again.

- Setting strict steps to test, double-check, and fix AI before it’s widely shared.

Here’s a plain look at progress made so far:

| Year | Major Step in AGI Progress | New Ability |
|---|---|---|
| 2022 | Multimodal models released | Processing images, text |
| 2024 | Self-improving small models | More efficient, less costly |
| 2025 | Reliable human-like reasoning gains | Context-aware decision making |
| 2026 | Real-world tests in varied workplaces | Adapts to new situations |

This kind of growth hasn’t been all smooth sailing. Safety, fairness, and real-world impacts have stayed at the top of the list for everyone involved. As 2026 rolls on, OpenAI’s next hurdle is showing that these systems can be trusted, learning from actual use, and holding to the original vision—technology for everyone, not just the privileged few.

Bridging the Sim-to-Real Gap in Manufacturing

It feels like just yesterday that factory simulations were a bit of a joke, right? You’d run a test, and the slightest change in lighting or how a part was shaped would completely break the whole thing. But now, with these massive AI models trained on tons of real-world data from cameras and sensors, that problem is starting to fade away. This means factories can move beyond just being automated to becoming truly autonomous. Imagine machines that just know what they need next, materials flowing without a hitch, and production lines that can instantly adjust if demand shifts or something unexpected happens. It’s a big change from the old days.

AI Models Solving Simulation Breakdowns

These new AI models are a game-changer for simulations. They’ve learned from so much data that they can handle variations in things like lighting and object shapes much better than before. This makes the virtual testing grounds far more reliable. We’re seeing this with systems like NVIDIA’s Isaac Sim, which is being used to test robots like LG’s CLOiD in virtual environments. It’s all about making sure what works in the computer actually works in the real world, saving a lot of time and money.
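
One common way to check whether something that works in simulation will survive real-world variation is domain randomization: run the same behaviour across many simulated scenes with different lighting, object sizes, and camera angles. The article does not name this technique, so treat the following as a minimal sketch under assumptions. The `SceneParams` fields, the value ranges, and the `policy` callable are all hypothetical, and nothing here uses the Isaac Sim API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical knobs a simulator might expose for one test scene."""
    light_intensity: float    # relative brightness
    object_scale: float       # size relative to the nominal part
    camera_jitter_deg: float  # camera tilt away from its nominal pose


def sample_scene(rng):
    """Draw one randomized scene; the ranges are illustrative only."""
    return SceneParams(
        light_intensity=rng.uniform(0.3, 1.5),
        object_scale=rng.uniform(0.9, 1.1),
        camera_jitter_deg=rng.uniform(-5.0, 5.0),
    )


def success_rate(policy, n_scenes=100, seed=0):
    """Fraction of randomized scenes in which `policy` reports success.

    `policy` is any callable that takes SceneParams and returns True or False.
    """
    rng = random.Random(seed)
    scenes = [sample_scene(rng) for _ in range(n_scenes)]
    return sum(1 for scene in scenes if policy(scene)) / n_scenes
```

A behaviour that only succeeds in a narrow band of lighting will show a low success rate here long before it reaches a real factory floor, which is the whole point of testing virtually first.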

Autonomous Factories: Anticipating Needs and Adapting Production

The idea of a factory that can think for itself is pretty wild. Think about it: machines that can predict when they’ll need maintenance or more materials, and then act on it. Production lines that can reconfigure themselves on the fly if a rush order comes in or if a supplier is delayed. This isn’t science fiction anymore; it’s becoming a reality thanks to AI. Companies are looking at things like agentic AI, which can plan out workflows and even work alongside human staff to make better decisions. It’s about making factories smarter and more responsive.
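
To make the ‘predict when they’ll need maintenance’ idea a little more concrete, here is a deliberately simple sketch. It fits a straight line to recent wear readings and estimates how many more cycles remain before a limit is crossed. The function name, the readings, and the threshold are assumptions for illustration; real predictive maintenance systems use far richer models and sensor data.

```python
def cycles_until_threshold(readings, threshold):
    """Estimate cycles left before a wear reading crosses `threshold`.

    Fits a least-squares line through readings taken once per cycle.
    Returns 0.0 if the limit is already reached, or None if the
    readings show no upward trend.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    if readings[-1] >= threshold:
        return 0.0

    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None  # wear is flat or improving; nothing to schedule

    intercept = mean_y - slope * mean_x
    crossing_cycle = (threshold - intercept) / slope
    return max(crossing_cycle - (n - 1), 0.0)
```

With readings of `[1.0, 2.0, 3.0, 4.0]` and a threshold of `10.0`, the fitted line rises by one unit per cycle, so the estimate is 6 more cycles. A factory scheduler could use a number like that to book maintenance before the line stops.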

Distributed Manufacturing: The Rise of Smaller, Agile Factories

With robots becoming more versatile and able to handle a wider range of tasks, the traditional long assembly line might become a thing of the past. This, combined with potentially lower hardware costs, could lead to a shift towards smaller, more distributed factories. Instead of one giant plant, you might have a network of smaller ones. This has a few perks: being closer to cities could make it easier to find and keep workers, being closer to customers can help with things like tariffs, and if one factory has a problem, it doesn’t shut down the whole operation. It’s a more flexible and resilient way to build things.

The shift towards more autonomous and distributed manufacturing isn’t just about new technology; it’s about rethinking how we design, build, and manage production facilities. It requires a blend of advanced AI, adaptable robotics, and a workforce trained to collaborate with these new systems.

Navigating Market Demands and Staying Relevant

Adapting Offerings Based on User Feedback and Industry Trends

It’s a bit like trying to keep up with the latest phone model, isn’t it? The tech world moves at a breakneck pace, and OpenAI is no different. They’re not just building things and hoping for the best; they’re actively listening. Think about it: if a company isn’t paying attention to what people actually want and what’s happening in the wider industry, they’re going to get left behind pretty quickly. OpenAI seems to get this. They’re constantly tweaking their models and services based on what users are saying and what trends are popping up. It’s about making sure what they offer actually solves real problems for people and businesses today, not just what they thought people needed last year.

Ensuring Relevance in a Rapidly Evolving Field

Staying relevant isn’t just about having the newest gadget; it’s about being useful and reliable. In the robotics and AI space, this means being agile. If a competitor suddenly releases something that blows everyone else out of the water, you can’t just sit there. OpenAI has to be ready to pivot, to adapt its own work, and to keep pushing forward. This involves a lot of behind-the-scenes work, looking at the State of Robotics Industry Report 2026 and other analyses to see where things are heading. It’s a constant balancing act between developing groundbreaking new ideas and making sure the current tools are still top-notch.

Active Engagement in Forum Discussions on Emerging Issues

Sometimes, the best way to know what’s coming next is to be right in the thick of the conversations. OpenAI is known to participate in online forums and discussions where new ideas and potential problems in AI and robotics are thrashed out. It’s not just about putting their own work out there; it’s about hearing from developers, researchers, and even critics. This kind of interaction helps them spot potential issues before they become big problems and also gives them a heads-up on where the field might be heading. It’s a smart way to stay ahead of the curve and make sure their future plans align with the real needs and challenges of the community.

The pace of change means that what works today might be outdated tomorrow. A company needs to be built for adaptation, not just for initial success. This means having systems in place to gather feedback, analyse market shifts, and be willing to change direction when necessary. It’s less about having all the answers and more about having the right process for finding them as the world evolves.

Here’s a look at how they might be adapting:

- User Feedback Loops: Implementing systems to collect and analyse user comments, bug reports, and feature requests from various platforms.

- Trend Analysis: Regularly reviewing industry reports, academic papers, and competitor activities to identify emerging technologies and market needs.

- Iterative Development: Adopting a development cycle that allows for frequent updates and improvements based on real-world performance and user input.

- Community Engagement: Actively participating in developer communities and industry events to gather insights and build relationships.

The Role of Partnerships and Open-Source Contributions

Working together has always been a big part of OpenAI’s progress, but in the last year or so it’s become even more important. The pace of robotics is so fast right now that no single company can keep up with change alone. OpenAI’s partnerships and open-source work make it possible to spread good ideas quickly and put new tools in more people’s hands.

Enhancing OpenAI’s Capabilities Through Collaboration

These days, cutting-edge robotics doesn’t happen in a vacuum. OpenAI teams up with universities, specialist labs, and even smaller startups. When researchers from different backgrounds have a reason to work together, projects move ahead faster and tough problems get solved sooner.

- Joint research with manufacturing companies helps OpenAI spot real-world limitations in current AI models.

- Academic partners often try out risky ideas or new algorithms in controlled settings.

- Some partners share data—or even robots—opening up testing no single team could do alone.

Honest teamwork makes robotics feel less like a race and more like a group project where everyone wins if they’re open about what works and what does not.

Open-Source Contributions: Empowering Global Developers

Making robotics tools free and open is one way OpenAI keeps knowledge moving forward. Coders in far-away places now use these releases as the foundation for their projects, and sometimes they find bugs, add missing features, or suggest improvements.

- Code that anyone can look at or change means bugs don’t last long.

- Developers can spot important gaps for smaller industries or regions.

- Many online guides and modules make it easier to get started with robotics, even in schools.

Here’s a quick summary of recent open-source activity:

| Year | Community Pull Requests | External Contributors | Projects Launched |
|---|---|---|---|
| 2023 | 220 | 80 | 12 |
| 2024 | 340 | 130 | 18 |
| 2025 | 400 | 165 | 21 |

The Role of Partnerships in Shaping AI’s Future

Partnering with others isn’t only about technology. It helps build trust and set clear norms for robot use (like in health care, factories, or homes). Some non-profits are now drafting safety-net policies for AI, and OpenAI is at those tables.

- Regulations move faster when experts from both AI and industry get a say from day one.

- OpenAI’s partners help keep things transparent, since more eyes are on each new tool.

- Standards agreed upon in partnership projects often spread into law or practice before issues can grow.

All of this cooperation is starting to show in the real world. Robots today are cheaper to train, safer to use, and less likely to go wrong on the job—and these gains aren’t coming from one lab alone. Instead, they’re what happens when people choose to share instead of hoard.

Addressing the ‘2026 Problem’ and Future Learning Methods

So, we’ve all heard about the ‘2026 Problem’, right? It’s the idea that by the end of 2026, the supply of data available for training generative AI might hit a wall. It sounds a bit dramatic, but it’s a real concern for folks working with these massive models. Basically, we’ve become good at building AI that can churn out text, images, and code, but we might run out of truly novel, high-quality data to feed it. This isn’t just about having more data, but about having better data that helps AI learn more nuanced and accurate things.

The Generative AI Data Limit by End of 2026

Think about it: a lot of the AI we use today learned from vast swathes of the internet. But what happens when the internet’s most interesting bits have already been processed? We’re seeing models start to regurgitate information or produce outputs that feel a bit… samey. This is where the ‘2026 Problem’ really bites. It means we need to get smarter about how we train AI, not just bigger.

- Data Saturation: The readily available, diverse internet data is becoming less plentiful for training.

- Model Collapse: AI models might start to learn from data that’s already been generated by other AIs, leading to a degradation of quality and originality.

- Bias Amplification: If training data becomes too homogenous or repetitive, existing biases can become even more pronounced in AI outputs.

The challenge isn’t just about quantity; it’s about the quality and diversity of the information AI learns from. We need to find new ways to generate or source data that keeps AI learning and improving, rather than just repeating itself.
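
The model collapse idea can be shown with a toy experiment. The sketch below is an illustration under simplifying assumptions, not a description of how any real model is trained: the ‘model’ is just a bell curve, and each generation is fitted only to samples produced by the previous one. The function names and defaults are made up for this example.

```python
import random
import statistics


def simulate_collapse(generations, sample_size, seed=0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation after each generation,
    starting from the original distribution N(0, 1).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the original data
    history = [std]
    for _ in range(generations):
        # Generate a training set from the current model...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...then fit the next model to that generated data only.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


def expected_variance(generations, sample_size, start_variance=1.0):
    """Average fitted variance after repeated refitting.

    Each maximum-likelihood refit shrinks the variance by a factor of
    (n - 1) / n on average, so the expected spread decays geometrically.
    """
    shrink = (sample_size - 1) / sample_size
    return start_variance * shrink ** generations
```

Individual runs of `simulate_collapse` wobble up and down, but `expected_variance` shows the underlying drift: with only 10 samples per generation, the average spread falls below 1% of the original after 50 generations. That steady loss of variety is the ‘samey’ effect in miniature.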

The Importance of Synthetic Data and Reinforcement Learning

So, what’s the solution? Well, two big areas are getting a lot of attention: synthetic data and reinforcement learning. Synthetic data is basically data that’s artificially created, often by other AI models. It’s like generating new practice problems for AI instead of just using old exam papers. Unlike the accidental AI-on-AI training behind model collapse, synthetic data is produced on purpose for a particular task and can be checked and filtered before a model ever learns from it. That makes it possible to create large amounts of specific, high-quality data with fewer of the privacy and copyright concerns that come with real-world data. It’s a really promising way to keep AI training going strong.

Reinforcement learning, on the other hand, is about teaching AI through trial and error, rewarding it for good actions and penalising it for bad ones. It’s more like how humans learn – by doing and experiencing. This method is particularly useful for complex tasks where defining ‘correct’ data is difficult. By allowing AI agents to interact with environments (real or simulated) and learn from the outcomes, we can develop more adaptable and intelligent systems. This approach is key to moving beyond simple pattern recognition towards more sophisticated problem-solving capabilities. It’s a bit like teaching a robot to walk by letting it try, fall, and learn how to balance itself. This is a big step towards more capable AI systems that can handle unforeseen situations.
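
Here is what that trial-and-error loop can look like at its very smallest. This is a sketch of tabular Q-learning, one classic reinforcement learning method, on a made-up five-position corridor where the only reward sits at the far end. The environment, constants, and function names are all assumptions chosen for illustration; real robots learn in far larger state spaces.

```python
import random

N_STATES = 5        # positions 0..4 along a corridor; position 4 is the goal
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, epsilon, rng):
    """Mostly pick the best-known action, sometimes explore at random."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q_row)
    return rng.choice([i for i, q in enumerate(q_row) if q == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by acting, observing rewards, and updating."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap the length of each attempt
            a = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, ACTIONS[a])
            future = 0.0 if done else gamma * max(q[next_state])
            # Nudge the estimate toward reward plus discounted future value.
            q[state][a] += alpha * (reward + future - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Preferred direction in each non-goal position."""
    return ["right" if row[1] > row[0] else "left" for row in q[:-1]]
```

Calling `greedy_policy(train())` shows which direction the agent has come to prefer in each position. Nobody told it that ‘right’ is correct; it worked that out from rewards alone, which is the same principle behind a robot learning to balance by falling over a few times first.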

Tackling Public Concerns: Privacy, Security, and Accountability

AI isn’t just for tech experts or engineers – these days, it’s creeping into every corner of life, and everyone’s got something to say about how their information is being used. Right at the heart of all this excitement is a real sense of worry: people want to know if AI is safe, secure, and acting in their best interest. If companies don’t handle privacy and security properly, there’s a good chance trust will tank, and progress could slow down. Here’s how these concerns are being addressed in real and practical ways.

Implementing Robust Security Measures

Keeping AI safe isn’t as easy as just locking the door at the end of the day – it means building safeguards at every step.

- Regular audits spot weak points in AI systems before attackers do.

- Encryption protects data both in storage and in transit.

- Automated alerts catch accidents or breaches early.

- Access limits make sure only the people who need information can get it.

- ‘Red team’ drills find what could go wrong before it actually does.

| Security Step | Purpose |
|---|---|
| Regular audits | Find vulnerabilities and fix them |
| Encryption | Protect data from unauthorised access |
| Access control | Restrict system entry |
| Red team exercises | Test security response in real-time |
| Automated monitoring | Rapid breach detection and response |
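
The access control row is the easiest one to picture in code. The sketch below shows a deny-by-default permission check. The role names and actions are hypothetical, invented for this example, and a production system would sit behind proper authentication rather than a plain dictionary.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "operator": frozenset({"view_status", "start_job"}),
    "auditor": frozenset({"view_status", "read_logs"}),
    "admin": frozenset({"view_status", "start_job", "read_logs", "update_model"}),
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions get no access."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())
```

The design choice that matters is the default. Anything not explicitly granted is refused, so a new role or a mistyped action fails closed instead of quietly opening a door.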

With so much riding on AI systems, companies must treat digital locks and alarms as seriously as they do their own front door.

Ensuring Accountability and Transparency in AI Outputs

People want clear answers when algorithms make decisions that affect their lives. Transparency means giving users ways to ask, "How did you come to this answer?" Accountability means stepping up if things go wrong. Some ways to make sure companies stay honest include:

- Explaining in plain English how and why AI reached its output.

- Logging decisions so users and auditors have a record to follow up on.

- Initiating external reviews by experts to challenge and verify claims.
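
Decision logging is only useful to auditors if the record can’t be quietly edited afterwards. One way to get there, sketched below under assumptions, is to chain each log entry to the previous one with a hash so that any later change breaks the chain. The field names and helper functions are hypothetical; this is not a description of any particular company’s logging system.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a stable JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_decision(log, model, inputs, output, explanation):
    """Add one decision record, linked to the entry before it."""
    record = {
        "model": model,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    record["hash"] = _digest(record)  # computed before "hash" is added
    log.append(record)
    return record


def verify_log(log):
    """Return True only if every entry is intact and correctly linked."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True
```

An auditor can run `verify_log` on a copy of the log at any time. If someone has altered an output or an explanation after the fact, the check returns `False` and the follow-up questions can start.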

It’s not just about ticking boxes: being open about mistakes and how they’re fixed can go a long way toward restoring confidence, as discussed in the capabilities and risks report.

Building Public Trust for Widespread Adoption

No matter how clever a robot is, if people don’t trust it, they won’t use it. Trust comes from being upfront and responsible.

- Give people a way to report errors or issues directly.

- Share updates about what’s changing in AI systems and why.

- Involve communities in discussions about how new technology will be used.

Everyday people want more than grand promises – they look for clear signals that their privacy is respected and their voices matter.

A future where AI plays a bigger role doesn’t have to be scary. But it does demand strong habits around security and accountability. The companies that remember this are the ones people will keep turning to.

Conclusion

Looking at where OpenAI robotics is heading in 2026, it’s clear we’re in for some big changes. The tech is moving fast, and every year brings something new—sometimes it’s exciting, sometimes it’s a bit worrying. What stands out is how OpenAI keeps trying to balance pushing the limits with making sure things stay safe and fair for everyone. There are still plenty of hurdles, like making robots understand us better or making sure they don’t pick up bad habits from the data they learn from. But the goal is always to build tools that help people, not replace them. As robots and AI become more common in daily life, it’s going to be important for everyone—developers, businesses, and regular folks—to keep talking about what’s working and what isn’t. The choices made now will shape how we live and work for years to come. So, while the future isn’t set in stone, one thing’s for sure: OpenAI’s journey is just getting started, and we’ll all be along for the ride.

Frequently Asked Questions

What is OpenAI’s main goal with robotics?

OpenAI wants to make robots that can do many different tasks, almost like humans. They aim to create robots that can learn and adapt to new situations, helping us with all sorts of jobs in the future.

How will AI help factories in 2026?

By 2026, AI will help factories become ‘smart’. Robots will be able to predict what’s needed, move things around by themselves, and change how they work instantly if something changes, like running out of parts or if lots of people want a product.

What is the ‘2026 Problem’ for AI?

Some people think that by the end of 2026, AI might not have enough new, good information to learn from. This could slow down how much better AI gets. So, new ways of teaching AI, like using artificially generated (synthetic) data or learning from trying things out, will become really important.

Why is it hard to get robots to work in the real world from simulations?

It’s tricky because a simulation is a tidier, simpler version of the real world. When robots try to do the same thing outside it, things like different lighting or slightly changed objects can confuse them. Big AI models are helping to fix this so robots work better outside of simulations.

What are the biggest worries people have about AI?

People worry about whether their private information is safe, whether AI systems are secure from hackers, and whether we can trust the answers AI gives. It’s important for companies like OpenAI to be open about how AI works and keep things safe so everyone can trust it.

Will robots take all the jobs?

It’s true that some jobs might change or go away because of robots and AI. But AI is also seen as a tool to help people do their jobs better and create new kinds of work. It’s important to train people for these new roles.