So, Google’s AI Overviews. They’re everywhere now, right? Popping up at the top of search results, promising quick answers. But how accurate are they, really? We’ve all seen those weird results, the ones that make you scratch your head. This article is going to take a look at how accurate Google AI is, especially with these AI Overviews in 2025. We’ll check out what makes them right or wrong, how Google says it’s trying to fix things, and what people are actually experiencing. It’s a big change to how we search, and understanding its reliability is pretty important.

Key Takeaways

- Google AI Overviews aim to give fast answers but can sometimes be inaccurate, with reliability depending on the source data and question complexity.

- Google uses a mix of prioritizing good data, advanced language models, and human checks to try and make AI Overviews more reliable.

- Evaluating AI Overview accuracy involves both human experts reviewing content and automated tools checking facts against databases.

- User feedback plays a role in improving AI Overviews, creating a cycle of learning and updates for better performance.

- While Google states confidence in AI Overviews, concerns remain from publishers about traffic loss and experts about the potential for errors and hallucinations.

Assessing The Accuracy Of Google AI Overviews

So, how good are these AI Overviews that Google is putting right at the top of search results? It’s a question on a lot of people’s minds, especially with how fast this tech is changing.

Understanding The Role Of AI Overviews

Basically, AI Overviews are Google’s attempt to give you a quick answer without you having to click through a bunch of links. Think of it like a super-fast summary pulled from various websites. The idea is to save you time. Google says people are happier with these summaries and even ask more questions because of them. But, and this is a big but, they haven’t really shown proof for that claim. They’re meant to be helpful, a shortcut to information, but the accuracy is where things get tricky.

User Trust And Click-Through Rates

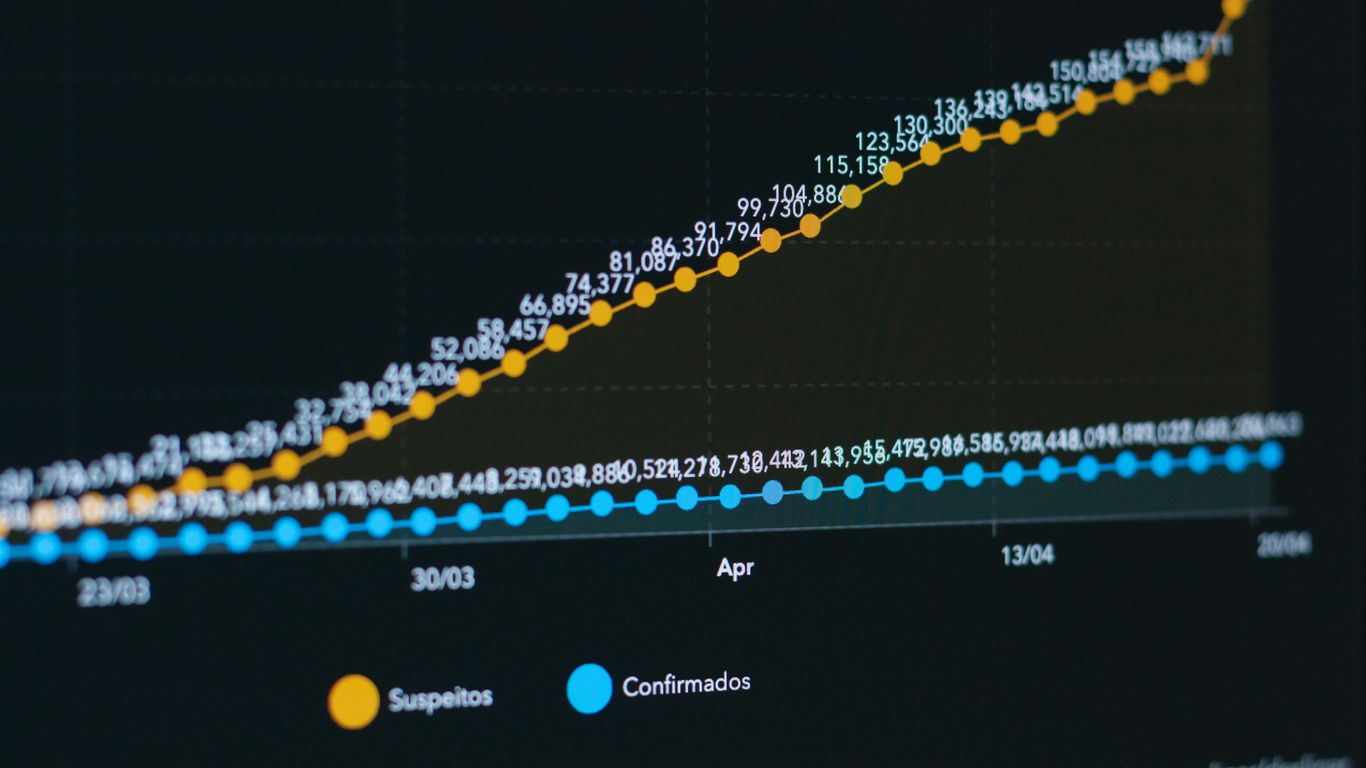

Here’s something interesting: one study found that people are less likely to click on regular links when an AI Overview is present, with clicks dropping by 50%. Publishers are pretty worried, and some are calling it a "traffic apocalypse." It makes you wonder if users trust the AI summary more than the original articles, even if those articles come from experts. It’s a strange trade-off: you get an answer faster but potentially miss out on the deeper context or the original source’s credibility.

The Challenge Of Hallucinations In AI

One of the biggest headaches with AI right now is something called "hallucinations." This is when the AI just makes stuff up. It sounds wild, but it happens. Some of the newest AI models get things wrong up to 40% of the time. When it comes to AI Overviews, this means the summary you see might contain incorrect information, even if it sounds confident. We’ve seen instances where asking a question on Google leads to an AI Overview that wildly overestimates its own error rate, or just gets the facts wrong. It’s a constant battle to make sure these summaries are factual and not just confident-sounding fiction.

| Accuracy Factor | Impact on AI Overviews |
|---|---|
| Source Data Quality | High-quality, trusted sources generally improve accuracy. Poor or outdated sources lead to errors. |
| Data Freshness | Recent information is key. Old data can miss important updates, making summaries less reliable. |
| Query Complexity | Simple factual questions are easier to summarize accurately than complex, nuanced topics. |
| NLP Advancements | Better understanding of language, context, and slang improves accuracy, but misinterpretations can still occur. |

Factors Influencing Google AI Accuracy

So, what makes a Google AI Overview hit the mark or miss it entirely? It’s not just one thing; it’s a mix of elements that all play a part. Think of it like baking a cake – you need the right ingredients, the right temperature, and a good recipe to get it right.

Quality and Freshness of Source Data

The biggest factor, honestly, is where Google gets its info. If the websites and articles it pulls from are old, biased, or just plain wrong, the AI overview is going to reflect that. Google’s algorithms are constantly scanning the web, but not all sources are created equal. Reliable, up-to-date information from trusted sites generally leads to more accurate summaries. For instance, if you’re asking about a breaking news story, an overview pulling from early, unconfirmed reports might be less accurate than one that waits for more established news outlets to weigh in.

Complexity of User Queries

Not all questions are created equal, right? Asking "What’s the weather like today?" is pretty straightforward. But asking "What are the long-term economic impacts of renewable energy policies?" is a whole different ballgame. The more complex a question, the harder it is for the AI to give a simple, accurate summary. These kinds of questions often need context, interpretation, or even an understanding of human nuances that AI can struggle with. When the AI tries to boil down complicated topics into a few sentences, it can sometimes oversimplify or miss important details, especially when the answer isn’t a simple yes or no.

Advancements in Natural Language Processing

This is all about how well the AI can actually understand what we’re asking. Natural Language Processing, or NLP, is the tech that helps computers get human language. Google has put a ton of work into making its NLP smarter. The better it gets at understanding slang, context, and even sarcasm, the more accurate the overviews become. However, NLP is still a work in progress. Sometimes, the AI might misunderstand a phrase or miss the subtle meaning behind a question. As NLP gets better, so does the accuracy of AI overviews, but there’s always room for error.

Here’s a quick look at how these factors can stack up:

- Simple factual questions: Usually high accuracy, drawing from established knowledge bases.

- Developing news or niche topics: Accuracy can vary, depending on the availability and quality of recent sources.

- Complex or subjective questions: Higher chance of oversimplification or missing nuance, so users need to think critically about the answer.

- Outdated information: Overviews may not reflect current changes or developments.

It’s a constant balancing act for Google, trying to make these summaries helpful without sacrificing accuracy.

Google’s Approach To Ensuring Reliability

So, how does Google try to make sure those AI Overviews you see at the top of search results are actually, you know, right? It’s not just a shot in the dark. They’ve got a whole system in place, a mix of tech and human smarts, to keep things accurate. It’s a pretty involved process, honestly.

Data Source Prioritization and Filtering

First off, Google doesn’t just grab information from anywhere. The algorithms are designed to sniff out the good stuff. They look for high-quality, authoritative websites – the kind you’d generally trust. Think established news outlets, academic sites, and official sources. Stuff that’s less reliable or looks like spam? That gets filtered out. It’s like having a bouncer at the door for information. They also use structured data, which is basically organized information on the web, to cross-check facts. This helps build a solid foundation for the summaries. The quality of the source data is really the bedrock of a reliable overview.
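
To make the "bouncer at the door" idea concrete, here is a minimal sketch of source filtering. It is not Google’s actual pipeline: the `Source` class, the `trust_score` rating, the `is_spam` flag, and the `0.7` threshold are all hypothetical names and values made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Source:
    url: str
    trust_score: float  # hypothetical rating from 0.0 (untrusted) to 1.0 (trusted)
    is_spam: bool       # hypothetical flag set by some upstream spam check


def filter_sources(sources, min_trust=0.7):
    """Drop spam and low-trust sources, then rank the rest, most trusted first."""
    kept = [s for s in sources if not s.is_spam and s.trust_score >= min_trust]
    return sorted(kept, key=lambda s: s.trust_score, reverse=True)


# Made-up candidates; the URLs are placeholders.
candidates = [
    Source("https://example.org/official-report", 0.95, False),
    Source("https://example.com/news-story", 0.80, False),
    Source("https://example.net/clickbait", 0.90, True),
    Source("https://example.net/old-forum-post", 0.40, False),
]

for source in filter_sources(candidates):
    print(source.url, source.trust_score)
```

Only the first two candidates get through here. The spam page is dropped even though its score is high, which is the point of filtering on more than one signal.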

Machine Learning and Natural Language Processing Models

Once they’ve got the data, the AI models get to work. Google has been pouring a lot of effort into making its machine learning and natural language processing (NLP) smarter. NLP is what helps computers understand human language – the slang, the context, even the occasional sarcasm. The better the AI gets at this, the more accurate it can be in summarizing complex topics. They’re constantly updating these models with new information and feedback, which is how they learn and improve over time. It’s a bit like how the Gemini 3 model is getting better at understanding and reasoning across different types of information.

Human Review and Quality Assurance Processes

Even with super-smart AI, humans are still in the loop. Google employs teams of people whose job it is to review these AI Overviews. They check for correctness, clarity, and any potential bias. These reviewers follow strict guidelines and test the summaries in different situations. If something looks off, it gets flagged. This human oversight acts as a crucial safety net, catching errors that the algorithms might miss. It’s a way to add that extra layer of trust before the information gets to you.

Methods For Evaluating AI Overview Performance

So, how do we actually check if these AI summaries Google is showing us are any good? It’s not like we can just ask the AI if it’s telling the truth, right? Turns out, there are a few ways Google and others try to figure this out. It’s a mix of people looking at things and computers doing their thing.

Human Evaluation And Expert Review

This is probably the most straightforward method. Real people, sometimes folks who really know their stuff about a topic, read through the AI-generated overviews. They compare what the AI says to what reliable sources say. They’re looking for things like:

- Is the information factually correct? Did the AI get the dates, names, and key details right?

- Is it easy to understand? Does the summary make sense, or is it confusing?

- Does it miss anything important? Did it leave out crucial context that changes the meaning?

Sometimes, these reviewers don’t even know if they’re looking at an AI summary or one written by a person. This helps make sure they’re judging it fairly. It’s good because humans can spot subtle errors or weird phrasing that a computer might miss. The downside? It takes a lot of time, and different people might have slightly different opinions.

Automated Fact-Checking And Data Validation

To speed things up and check a massive number of overviews, automated tools come into play. These systems are designed to scan the AI’s text and cross-reference claims with big databases of known facts, news archives, and scientific papers. If an AI overview says, "The event happened on Tuesday," the tool checks if that matches what trusted sources report. This is super useful for catching obvious mistakes, like outdated information or claims that just aren’t backed up anywhere. It’s fast and can handle a huge volume of checks. However, these tools can sometimes get tripped up by complicated topics, very recent news, or situations where experts don’t all agree. They’re great for catching clear errors, but they aren’t perfect.
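
The cross-referencing step can be sketched in a few lines. This is a toy illustration under big assumptions, not how any real fact-checker works: claims are assumed to arrive already split into subject, attribute, and value, and `KNOWN_FACTS` is a made-up stand-in for a trusted database.

```python
# Hypothetical reference store: (subject, attribute) -> trusted value.
KNOWN_FACTS = {
    ("example event", "day"): "Tuesday",
    ("example product", "release year"): "2024",
}


def check_claim(subject, attribute, value, facts=KNOWN_FACTS):
    """Classify one claim as supported, contradicted, or unverifiable."""
    key = (subject.lower(), attribute.lower())
    if key not in facts:
        # Nothing on record, e.g. very recent news the database has not seen.
        return "unverifiable"
    if facts[key].lower() == value.lower():
        return "supported"
    return "contradicted"


claims = [
    ("Example Event", "day", "Tuesday"),
    ("Example Event", "day", "Friday"),
    ("Breaking Story", "day", "Monday"),
]

for claim in claims:
    print(claim, "->", check_claim(*claim))
```

The third claim comes back as "unverifiable" rather than wrong. That mirrors the weakness described above: a tool like this can only judge what its reference data already covers.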

User Feedback Loops For Continuous Improvement

Once the AI overviews are out there for everyone to see, the feedback from actual users becomes really important. If you see an AI overview that’s just plain wrong or confusing, you can often flag it. Google collects all this feedback, looking for patterns to see where the AI is messing up repeatedly. They also watch how people interact with the overviews. If lots of users click away quickly or immediately search for more information after seeing an overview, that’s a signal that the summary might not have been helpful or accurate. This real-world feedback is gold because it shows how the AI is performing for everyday people, highlighting issues that might not pop up in controlled tests.
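
Spotting patterns in feedback could look something like the sketch below. The event format, the `find_problem_topics` function, and both thresholds are assumptions for illustration; the article does not describe how Google actually aggregates flags.

```python
from collections import Counter


def find_problem_topics(events, min_views=3, max_flag_rate=0.25):
    """Return topics whose share of flagged overviews exceeds max_flag_rate.

    events is a list of (topic, was_flagged) pairs, one per overview shown.
    Topics with fewer than min_views events are skipped as too noisy to judge.
    """
    views = Counter()
    flags = Counter()
    for topic, was_flagged in events:
        views[topic] += 1
        if was_flagged:
            flags[topic] += 1
    return sorted(
        topic
        for topic, count in views.items()
        if count >= min_views and flags[topic] / count > max_flag_rate
    )


# Made-up feedback log: health is flagged half the time, recipes never,
# and news has only a single data point.
events = (
    [("health", True)] * 2
    + [("health", False)] * 2
    + [("recipes", False)] * 4
    + [("news", True)]
)

print(find_problem_topics(events))
```

With these sample events only "health" is reported. The single flagged "news" overview is ignored because one data point is not a pattern, which is why the sketch requires a minimum number of views before judging a topic.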

Real-World Performance And User Perception

So, how is Google’s AI actually doing out there in the wild? It’s a mixed bag, honestly. While Google itself says people are happier with the results and are asking more questions, the evidence isn’t always so clear-cut. Some users report that the AI overviews are pretty useful, offering neat tips like how to fix small car dents or confirming basic facts. But when things go wrong, they can go really wrong.

Anecdotal Evidence Of Accuracy

On the positive side, many AI overviews do a decent job summarizing information. For instance, when I asked about vegan pesto, the AI correctly explained how to make it by swapping out parmesan. It’s these kinds of helpful, everyday answers that make you think, "Okay, this could be useful." However, there are also plenty of stories floating around about AI overviews getting things spectacularly wrong. Sometimes they just miss the mark, and other times they seem to invent information entirely. It feels like a bit of a gamble sometimes, doesn’t it?

Publisher Concerns Over Traffic Impact

This is a big one for websites. Many online publishers are pretty worried because AI overviews can significantly cut down on the number of people clicking through to their actual articles. If the AI gives you the answer right there on the search page, why bother clicking a link? This drop in traffic is being called a "traffic apocalypse" by some in the industry. It’s a serious issue because publishers rely on those clicks for revenue. The Tow Center for Digital Journalism even found that AI search tools can fabricate citations and reduce traffic to original sources, which is bad news for journalism. It’s a tricky balance between giving users quick answers and supporting the creators of that information. The 2025 SEO Mega-Report also touches on how user experience metrics like Core Web Vitals are changing in this new search environment.

Expert Opinions On AI Overview Reliability

Experts are also weighing in, and not always with glowing reviews. Some researchers have pointed out that AI search tools can project an illusion of trustworthiness even when their answers are inaccurate. One AI accountability advisor stated plainly that "Google’s AI overviews hallucinate too much to be reliable." They also questioned whether users even want these AI overviews in the first place. While Google claims its AI is highly factual and improving, independent tests and user feedback sometimes tell a different story. It seems like there’s a gap between Google’s confidence in its AI and the reality users are experiencing. The technology is still pretty new, and while it’s getting smarter, it’s definitely not perfect yet.

The Evolving Landscape Of AI Search

Google’s Stated Confidence In AI Overviews

Google has been pretty upfront about its belief in AI Overviews. They’ve said they’re confident these AI summaries are a big step forward for search, making it faster and easier to get answers. They keep talking about how these overviews are getting better all the time, thanks to constant updates and more data. It’s like they see it as the future of how we find stuff online. They’ve even put them on a good chunk of searches, showing they’re really pushing this new feature. It’s a big bet, for sure.

Comparison To Other Search Features

AI Overviews aren’t the only way Google puts answers right on the results page. Think about those "People Also Ask" boxes or the featured snippets that just pull a bit of text from a page. AI Overviews are kind of like a super-powered version of those. Instead of just a snippet, you get a whole summary written by AI. That’s different from clicking through to a website, which is what search engines used to be all about. Now, it feels like Google wants you to get your answer right there, without leaving their page. This is a pretty big shift from how search used to work.

The Future Of AI Integration In Search

So, what’s next? It’s pretty clear AI is here to stay in search. Google’s not going to back down from this. We’re probably going to see AI get even more involved in how we search. Maybe it’ll get better at understanding what we really mean when we ask something, or perhaps it’ll start pulling information from even more places. But there are still big questions. Will AI summaries always give credit where it’s due? Will they get better at not making stuff up? It’s a wild west right now, and how it all shakes out will change how we use the internet. It’s going to be interesting, and maybe a little scary, to see where it all leads.

So, What’s the Verdict on Google’s AI Overviews?

After looking into it, it seems like Google’s AI Overviews are a bit of a mixed bag. Sometimes they give you a pretty good summary, especially for simple questions. But other times, they can get things wrong, sometimes in pretty strange ways. It’s like they’re still figuring things out. Google says they’re working on making them better, and they do have some checks in place, but it’s clear they aren’t perfect. So, while they can be a quick way to get an answer, it’s probably best not to trust them completely, especially for important stuff. Always good to double-check what they say, just in case.

Frequently Asked Questions

What exactly are Google AI Overviews?

Think of Google AI Overviews as quick summaries that sometimes appear at the very top of your search results. They’re meant to give you a fast answer without you having to click on a bunch of different websites. It’s like Google trying to give you the main point right away.

Are these AI Overviews always correct?

Not always. Sometimes they get things right and give you helpful info from good sources. But other times, the information can be a bit off or even lead you the wrong way. It’s best to use them as a starting point and check other sources if you need to be really sure, especially for important stuff.

Why do AI Overviews sometimes get things wrong?

It happens for a few reasons. The AI looks at a lot of information from the internet, and sometimes the sources it uses might be old or not totally accurate. Also, if your question is really complicated or tricky, it’s harder for the AI to give a perfect summary. It’s still learning how to understand everything perfectly.

How does Google try to make sure the Overviews are good?

Google uses smart computer programs to pick the best information from trusted websites. They also have people who review the summaries to catch mistakes. Plus, they use feedback from people like you to help make the AI better over time. It’s a mix of technology and human checks.

Should I trust AI Overviews more than regular search results?

It’s probably safer not to trust them more. While they can be handy for quick facts, articles from experts and trusted websites often have more detail and are more reliable for important topics. It’s always a good idea to look at a few different sources.

Will AI Overviews change how I use Google?

Yes, they are changing things! Some people are clicking on fewer links because they get their answers from the Overviews. This is making some website owners worried about losing visitors. Google believes people like them, but it’s still a big change in how we find information online.