Air compressors are essential devices across many industries, powering a range of applications from industrial manufacturing and automotive repair to HVAC systems and environmental controls. At CFM Air Equipment, we provide cutting-edge air compressor solutions designed to deliver reliability, energy efficiency, and performance in even the most demanding environments. With a focus on innovation and quality, our compressors are engineered to enhance productivity while reducing operating costs.

Diverse Types of Air Compressors

CFM Air Equipment offers a comprehensive selection of air compressors, each tailored to meet specific process requirements. Understanding the differences among these types is crucial for selecting the ideal solution for your operations.

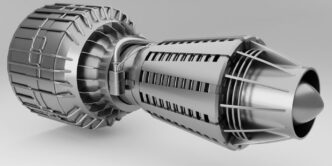

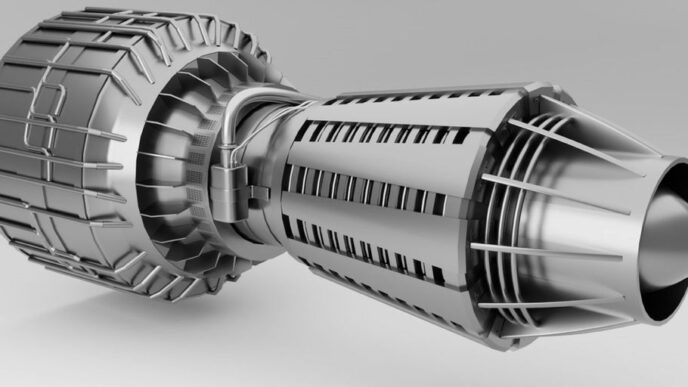

Rotary Screw Air Compressors

Rotary Screw Air Compressors are renowned for their continuous duty operation and high energy efficiency. These compressors use two intermeshing helical rotors to compress air, producing a smooth, steady airflow with minimal vibration. Their robust design suits applications that demand long operating hours and minimal downtime. Many industries, including automotive, manufacturing, and process engineering, favor Rotary Screw Air Compressors for their reliability and their balance of capacity and efficiency.

Reciprocating Air Compressors

Reciprocating Air Compressors use a piston moving back and forth inside a cylinder to compress air. This design suits intermittent use and applications that need high-pressure air in bursts. Reciprocating Air Compressors are particularly valued in small to medium-sized facilities where duty cycles are lower. Their relatively simple construction means lower initial investment and easier maintenance, making them an attractive choice for a range of industrial and commercial settings.

Oil-Free Air Compressors

Oil-Free Air Compressors are designed to deliver air free of oil contamination, which is critical for industries such as food and beverage processing, pharmaceuticals, and electronics manufacturing. By eliminating lubricating oil from the compression process, these compressors prevent oil carryover into the compressed air stream. This makes Oil-Free Air Compressors an essential component in applications where air quality cannot be compromised. Their design provides high efficiency while meeting stringent industry standards for cleanliness.

Portable Air Compressors

Portable Air Compressors offer mobility and ease of deployment, making them ideal for applications that require flexibility and an on-site compressed air supply. Whether used in field service, on construction sites, or as emergency backup, Portable Air Compressors deliver reliable performance in a compact, transportable format. Their ease of setup allows rapid deployment and efficient operation in remote or temporary locations. Businesses often choose Portable Air Compressors when mobility and versatility are paramount.
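To make the distinctions above concrete, here is a minimal Python sketch of how the four types could be shortlisted from a few yes/no requirements. The `Requirements` class, its field names, and `suggest_compressor_types` are hypothetical names used only for illustration; this is a simplification of the descriptions above, not a sizing or selection tool.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical summary of a site's compressed air needs."""
    continuous_duty: bool      # long operating hours with little downtime
    needs_oil_free_air: bool   # e.g. food, pharmaceutical, electronics work
    needs_mobility: bool       # e.g. field service or temporary sites


def suggest_compressor_types(req: Requirements) -> list[str]:
    """Return candidate compressor types based on the descriptions above."""
    candidates = []
    if req.needs_mobility:
        candidates.append("Portable")
    if req.needs_oil_free_air:
        candidates.append("Oil-Free")
    # Continuous duty points to rotary screw; intermittent use to reciprocating.
    candidates.append("Rotary Screw" if req.continuous_duty else "Reciprocating")
    return candidates


if __name__ == "__main__":
    workshop = Requirements(continuous_duty=False,
                            needs_oil_free_air=False,
                            needs_mobility=False)
    print(suggest_compressor_types(workshop))
```

In practice a shortlist like this is only a starting point; required flow, pressure, and site conditions still determine the final choice.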

Key Features and Benefits

CFM Air Equipment’s air compressors incorporate several features that enhance operational efficiency and reliability:

- Energy Efficiency: Our compressors are engineered to optimize energy consumption. Technologies such as variable speed drives and advanced control systems help reduce power usage and lower overall operating costs.

- Durability and Longevity: Built with high-quality components and robust materials, our compressors are designed to withstand harsh conditions and continuous operation, ensuring long service life.

- Precision Control: Modern control systems allow for precise regulation of pressure and airflow, improving process consistency and reducing the risk of equipment damage.

- Low Maintenance Requirements: With streamlined designs and quality manufacturing, our compressors are easy to maintain, minimizing downtime and extending the intervals between routine service.

- Versatility: Whether you need a solution for high-pressure bursts, continuous operation, or contamination-sensitive applications, our range—including Rotary Screw Air Compressors, Reciprocating Air Compressors, Oil-Free Air Compressors, and Portable Air Compressors—ensures that you have the right tool for the job.

These advantages translate into improved productivity and lower operational costs, making our air compressors a smart investment for businesses across various sectors.
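As a rough illustration of why variable speed drives can lower energy use, the Python sketch below compares a fixed-speed compressor running load/unload control with a variable speed unit over the same demand profile. Every number and name here is an assumption made for the example: the 30% unloaded power draw, the linear relation between speed and power, the minimum speed, and the demand profile are illustrative placeholders, not measured data for any CFM Air Equipment product.

```python
def fixed_speed_energy_kwh(rated_kw, demand_fractions, hours_per_step,
                           unloaded_fraction=0.3):
    """Load/unload control: full power while loaded, reduced power unloaded.

    unloaded_fraction is an assumed share of rated power drawn while unloaded.
    """
    total = 0.0
    for demand in demand_fractions:
        # The compressor is loaded for a share of time equal to the demand.
        avg_kw = rated_kw * (demand + (1 - demand) * unloaded_fraction)
        total += avg_kw * hours_per_step
    return total


def vsd_energy_kwh(rated_kw, demand_fractions, hours_per_step, min_speed=0.2):
    """Variable speed: power assumed proportional to speed, with a floor."""
    total = 0.0
    for demand in demand_fractions:
        speed = max(demand, min_speed)
        total += rated_kw * speed * hours_per_step
    return total


if __name__ == "__main__":
    profile = [1.0, 0.6, 0.4]   # assumed demand as a fraction of capacity
    fixed = fixed_speed_energy_kwh(75.0, profile, hours_per_step=8)
    vsd = vsd_energy_kwh(75.0, profile, hours_per_step=8)
    print(f"fixed speed: {fixed:.0f} kWh, variable speed: {vsd:.0f} kWh")
```

Under these assumptions the two approaches match at full demand, and the gap widens as demand falls. Real savings depend on the actual demand profile and the machine's measured performance.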

Applications Across Industries

The applications for air compressors are as diverse as the industries they serve. Some common uses include:

- Manufacturing and Process Industries: Air compressors are critical in production lines, providing the necessary force for pneumatic tools, material handling, and process automation.

- Automotive and Repair Workshops: In these environments, air compressors power tools for repairs, painting, and maintenance, ensuring efficient and reliable service.

- Food, Beverage, and Pharmaceutical Industries: Oil-Free Air Compressors are vital in these sectors where air purity is essential to prevent contamination and ensure product quality.

- Construction and Field Operations: Portable Air Compressors offer the mobility and convenience needed for on-site operations, from drilling and demolition to emergency backup air supply.

- HVAC and Environmental Controls: Compressed air drives pneumatic controls, such as actuators, dampers, and valves, in systems that regulate temperature and humidity, contributing to energy-efficient building management.

By providing tailored air compressor solutions, CFM Air Equipment helps organizations achieve higher efficiency, better product quality, and reduced energy costs.

Integration with Modern Technology

Modern air compressor systems are increasingly integrated with advanced digital controls and monitoring systems. These smart technologies provide real-time feedback on operational performance, enabling predictive maintenance and minimizing unplanned downtime. For example, monitoring systems can track parameters such as pressure, temperature, and energy consumption, allowing operators to adjust settings for optimal performance.
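As a simple illustration of this kind of monitoring, the Python sketch below checks one set of readings against operating limits and reports anything out of range. The `Reading` fields, the `LIMITS` values, and the `check_reading` function are hypothetical and chosen only for the example; they do not describe a specific controller or CFM Air Equipment product, and real limits come from the equipment's documentation.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One snapshot of the monitored parameters (hypothetical units)."""
    pressure_bar: float
    temperature_c: float
    power_kw: float


# Assumed operating limits, for illustration only: (low, high) per parameter.
LIMITS = {
    "pressure_bar": (6.0, 8.5),
    "temperature_c": (5.0, 95.0),
    "power_kw": (0.0, 80.0),
}


def check_reading(reading: Reading, limits=LIMITS) -> list[str]:
    """Return one alert message per parameter outside its limits."""
    alerts = []
    for name, (low, high) in limits.items():
        value = getattr(reading, name)
        if not low <= value <= high:
            alerts.append(f"{name}={value} outside [{low}, {high}]")
    return alerts


if __name__ == "__main__":
    for alert in check_reading(Reading(9.0, 80.0, 60.0)):
        print(alert)
```

A predictive maintenance setup would typically go further and look at trends over time rather than single readings, but a range check like this is a common first step.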

At CFM Air Equipment, we embrace digital integration to enhance the functionality of our compressors. This approach not only improves operational efficiency but also extends the lifespan of the equipment by enabling proactive service measures.

Sustainable and Energy-Efficient Operations

Sustainability is a key driver in today’s industrial landscape. Energy-efficient air compressors help reduce carbon footprints and support environmental compliance. At CFM Air Equipment, we design our compressors to meet modern energy standards, incorporating features that reduce power consumption and minimize waste.

The use of advanced materials and smart technology in our compressors ensures that they deliver high performance with a reduced environmental impact. Whether you are considering Rotary Screw Air Compressors for continuous operation or Oil-Free Air Compressors for contamination-sensitive applications, our products are engineered for efficiency and sustainability.

Commitment to Quality and Expertise

CFM Air Equipment has built a reputation for quality, innovation, and customer satisfaction. Our commitment to excellence is evident in every stage of product development—from design and engineering to manufacturing and service. We work closely with our clients to understand their unique requirements and provide customized air compressor solutions that meet their operational needs.

For more detailed information about our company and our approach to quality and innovation, please visit our About Us page. Our expertise and dedication ensure that you receive products that are not only reliable and efficient but also backed by comprehensive support and service.

Why Choose CFM Air Equipment

Choosing the right air compressor is critical to ensuring smooth and efficient operations. Here are several reasons why CFM Air Equipment is the preferred choice for air compressor solutions:

- Innovative Technology: We continually invest in research and development to bring the latest technological advancements to our air compressor systems.

- Customized Solutions: Our diverse range includes Rotary Screw Air Compressors, Reciprocating Air Compressors, Oil-Free Air Compressors, and Portable Air Compressors, ensuring a perfect match for every application.

- Quality Assurance: Each compressor undergoes rigorous testing and quality checks to ensure reliable performance and durability under demanding conditions.

- Comprehensive Support: From initial consultation and installation to ongoing maintenance and technical support, our team is committed to ensuring your success.

- Sustainable Practices: Our products are designed for energy efficiency and minimal environmental impact, aligning with modern sustainability goals.

To explore our full range of air compressor solutions, please visit our Compressors page. You can also learn more about our capabilities and company values on our homepage.

The Future of Air Compressors

The future of air compressor technology lies in continuous innovation and integration with smart systems. Emerging trends include the adoption of IoT-enabled monitoring, improved energy management through digital controls, and the development of even more efficient and compact designs. As industries evolve and demand higher efficiency and reliability, air compressors will continue to play a pivotal role in industrial operations.

CFM Air Equipment is at the forefront of this transformation, committed to developing next-generation air compressor solutions that meet the challenges of tomorrow while delivering exceptional performance today.

FAQ

- What are the main types of air compressors offered by CFM Air Equipment?

CFM Air Equipment offers a diverse range of air compressors, including Rotary Screw Air Compressors, Reciprocating Air Compressors, Oil-Free Air Compressors, and Portable Air Compressors. Each type is designed to meet specific operational requirements across various industries.

- How do Rotary Screw Air Compressors differ from Reciprocating Air Compressors?

Rotary Screw Air Compressors provide continuous duty operation and high energy efficiency through a smooth, steady airflow, making them ideal for applications with constant use. In contrast, Reciprocating Air Compressors are typically used in applications requiring intermittent operation and high-pressure bursts, offering a cost-effective solution for smaller operations.

- What benefits do Oil-Free Air Compressors offer?

Oil-Free Air Compressors ensure the delivery of contaminant-free air, which is crucial for industries such as food processing, pharmaceuticals, and electronics manufacturing. They eliminate the risk of oil carryover, thereby maintaining high air quality and protecting sensitive processes.

- Why are Portable Air Compressors important in certain applications?

Portable Air Compressors are designed for mobility and flexibility, making them ideal for field service, construction sites, and emergency backup applications. Their compact design and ease of transport allow for rapid deployment in various on-site situations, ensuring reliable performance wherever needed.

- How does CFM Air Equipment ensure the quality of its air compressors?

CFM Air Equipment is committed to quality through rigorous engineering, advanced technology, and comprehensive testing of all products. Our focus on energy efficiency, durability, and innovative design ensures that our compressors deliver reliable performance and long-term value to our customers. For more information about our commitment to excellence, please visit our About Us page.

CFM Air Equipment continues to lead the way in air compressor technology by providing innovative, energy-efficient, and reliable solutions tailored to meet the evolving needs of modern industry. Explore our products and discover how our expertise can help optimize your operations today.