Thinking about getting into generative AI training? It can seem like a lot, especially with all the new stuff coming out. This guide breaks down the basics and some of the more involved parts of generative AI training. We’ll look at what you need to get started, how to make your training work better, and what to do after you’ve trained a model. It’s all about making sure your generative AI training efforts pay off.

Key Takeaways

- Get the right tools for your generative AI training. Picking the correct software and frameworks makes a big difference when you’re training models.

- Data is super important for good generative AI training. Make sure your data is clean and fits what you’re trying to teach the AI.

- Training models needs to be done carefully. Using things like regularization and adjusting the learning rate helps the AI learn correctly and perform better.

- After training, you have to check how well the model is doing. Fine-tuning it and keeping an eye on it over time helps keep it working well.

- Think about how your trained AI will fit into other systems. Making it easy to connect and making sure it’s fast enough for real-time use are key steps.

Foundational Elements Of Generative AI Training

Getting started with generative AI training can feel like a big undertaking, but breaking it down into a few core areas makes it much more manageable. Think of it like building anything substantial – you need a solid base before you can add the fancy bits.

Understanding The Core Concepts Of Generative AI

At its heart, generative AI is about teaching computers to create new things. This isn’t just about recognizing patterns, but about producing them. Whether it’s text, images, music, or code, the goal is to generate outputs that are novel yet plausible. This is typically achieved through complex neural networks, often trained on massive amounts of existing data. The model learns the underlying structure and characteristics of that data, then uses that knowledge to make something new. It’s a bit like an artist studying thousands of paintings to learn different styles before creating their own original piece.

Choosing The Right Toolkit For Your Generative AI Training

Picking the right tools is pretty important. You wouldn’t try to build a house with just a hammer, right? For generative AI, this means selecting the right programming languages, libraries, and frameworks. Python is a common choice because it has a rich ecosystem of AI and machine learning libraries like TensorFlow and PyTorch. These frameworks provide pre-built components and tools that make building and training complex models much easier. You’ll also need to consider your hardware – training these models can be very computationally intensive, so access to powerful GPUs is often a necessity.

Here are some common tools you’ll encounter:

- Programming Languages: Python is the go-to.

- Deep Learning Frameworks: TensorFlow, PyTorch.

- Libraries: NumPy for numerical operations, Pandas for data handling, Scikit-learn for general machine learning tasks.

- Hardware: GPUs (Graphics Processing Units) are almost always required for efficient training.

The Role Of Data In Effective Generative AI Training

Data is the fuel for generative AI. Without good data, your model won’t learn properly, no matter how fancy your tools are. The quality and quantity of your training data directly impact the performance and capabilities of your generative AI model. This means you need data that is not only abundant but also clean, relevant, and representative of what you want your AI to create. If you’re training an AI to write poetry, you need a lot of good poetry, not just random sentences. Cleaning and preparing this data – removing errors, standardizing formats, and ensuring it’s free from unwanted biases – is a significant part of the training process. It’s often said that data preparation can take up the majority of the time in an AI project, and for good reason.

Advanced Techniques For Generative AI Training

Mastering Repeatable Training Processes

Training a generative AI model isn’t a one-and-done kind of deal. Think of it like teaching a student: they need consistent practice to really get it. Making sure your training process is repeatable is super important. It makes improvements easier to measure, and it makes weird issues easier to track down, like the model getting stuck on one type of output (that’s called mode collapse, by the way).

Here’s a quick rundown of why this matters:

- Consistency: Repeatable training means you get similar results each time you run it, making it easier to track progress.

- Debugging: When something goes wrong, a repeatable process lets you isolate the problem more easily.

- Scalability: If you need to train more models or larger ones, having a solid, repeatable method makes it much simpler.
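One small piece of repeatability is controlling randomness. The sketch below is a framework-free illustration, not a real training loop: `train_toy_model` is a hypothetical stand-in that fakes a loss curve, and the only point is that fixing the seed makes two runs match exactly.

```python
import random


def train_toy_model(seed, steps=5):
    """Hypothetical stand-in for a training run: returns a fake loss curve.

    A private Random instance keeps the run independent of any other
    code that touches the global random state.
    """
    rng = random.Random(seed)
    loss = 1.0
    history = []
    for _ in range(steps):
        # Simulated noisy improvement; a real loop would update weights here.
        loss *= 0.8 + 0.1 * rng.random()
        history.append(round(loss, 6))
    return history


run_a = train_toy_model(seed=42)
run_b = train_toy_model(seed=42)
run_c = train_toy_model(seed=7)
print(run_a == run_b)  # same seed, same curve
print(run_a == run_c)  # different seed, different curve
```

In a real project you would also seed your framework (for example with `torch.manual_seed` in PyTorch) and record the seed alongside the code version, the data version, and the hyperparameters.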

Implementing Regularization For Optimal Performance

Regularization is like giving your model a nudge to not just memorize the training material but actually learn from it. Without it, models can become too specialized in the data they’ve seen and then fail when they encounter new, slightly different information. Techniques like Dropout, where you randomly switch off a fraction of the network’s units at each training step, or L2 Regularization, which adds a penalty for large weights to the loss, help keep the model more general and useful.
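To make those two techniques concrete, here is a minimal sketch in plain Python. It assumes the common "inverted dropout" variant (survivors are rescaled so the expected activation stays the same), and the function names are illustrative, not taken from any library. In practice you would use your framework’s built-in layers instead.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, weight_decay):
    """L2 term added to the loss: weight_decay * sum of squared weights."""
    return weight_decay * sum(w * w for w in weights)


rng = random.Random(0)
hidden = [0.5, 1.2, -0.3, 0.8]
print(dropout(hidden, p=0.5, rng=rng))                  # training: some zeros, rest scaled up
print(dropout(hidden, p=0.5, rng=rng, training=False))  # inference: unchanged
print(l2_penalty([3.0, -4.0], weight_decay=0.01))
```

Note that dropout is only active during training. At inference time the activations pass through untouched.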

Leveraging Learning Rate Scheduling For Better Convergence

Getting the learning rate right is a bit of an art. Too high, and you might overshoot the best solution. Too low, and training can take forever or get stuck in a less-than-ideal spot. Learning rate scheduling means you change the learning rate over time. Often, you start with a higher rate to make quick progress and then gradually decrease it. This helps the model settle into a good solution without getting stuck. It’s a smart way to help your model find its footing and converge on a solid answer.
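As a rough illustration of "start high, then gradually decrease", here is a sketch of cosine decay, one of several common schedules. The function name and the example values (`base_lr=1e-3`, 1000 steps) are assumptions made for the demo.

```python
import math


def cosine_lr(step, total_steps, base_lr, min_lr=0.0):
    """Cosine decay from base_lr at step 0 down to min_lr at total_steps."""
    progress = min(1.0, step / max(1, total_steps))
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 250, 500, 750, 1000):
    print(step, cosine_lr(step, total_steps=1000, base_lr=1e-3))
```

The rate drops slowly at first, fastest in the middle, and slowly again near the end, which gives the model time to settle into a solution.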

Model Selection And Preparation For Generative AI Training

Alright, so you’ve got a handle on the basics and you’re ready to pick the right brain for your generative AI project. This part is pretty important, honestly. It’s like choosing the right ingredients before you start cooking – get it wrong, and your whole dish can be off.

Defining Clear Objectives For Generative AI Implementation

Before you even look at a model, you gotta know what you want this AI to do. Seriously, what problem are you trying to fix? Are you trying to make cool art, write better emails, or maybe generate code? Be super specific. If you want to generate images, say "generate photorealistic images of cats in space." Don’t just say "make pictures." Having clear goals helps you figure out what kind of model you need and what data will actually be useful. It’s the first step, and if you skip it, you’ll probably end up wasting a lot of time and resources.

Selecting The Appropriate Model For Your Task

Now for the fun part: picking the actual AI model. There are tons of them out there, and they’re good at different things. For text stuff, you might look at models like those from the GPT family. If you’re into images, think about models that are known for visual generation. It really depends on what you’re trying to achieve. You can use models that someone else has already trained (pre-trained models) or build one from scratch, which is a whole other ballgame. Consider factors like how fast the model works, how accurate it is, and if it can even handle the kind of data you have. Sometimes, trying out a few different models on a small sample of your data is the best way to see which one fits best.

Ensuring High-Quality Data For Generative AI Training

This is where a lot of people stumble. Your AI is only as smart as the data you feed it. If your data is messy, biased, or just plain wrong, your AI will be too. So, you need to clean up your data. Make sure it’s in the right format, get rid of duplicates, and check for any weird errors. You’ll also want to split your data into different sets: one for training, one for checking how well it’s learning (validation), and one for a final test. If you don’t have enough data, you can sometimes use techniques to create more variations of your existing data (known as data augmentation), which can help the AI learn better. Think of it like giving your AI a really good textbook instead of a bunch of scribbled notes.
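Here is a minimal sketch of the cleanup-and-split step for text data. It only handles whitespace, empty entries, and exact duplicates, and the 80/10/10 split is an assumed default, so treat it as a starting point and not a full pipeline.

```python
import random


def clean_and_split(records, val_frac=0.1, test_frac=0.1, seed=0):
    """Normalize whitespace, drop empties and exact duplicates, then split."""
    seen = set()
    cleaned = []
    for text in records:
        normalized = " ".join(text.split())
        if normalized and normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)

    # Shuffle with a fixed seed so the split is the same on every run.
    random.Random(seed).shuffle(cleaned)
    n_val = int(len(cleaned) * val_frac)
    n_test = int(len(cleaned) * test_frac)
    val = cleaned[:n_val]
    test = cleaned[n_val:n_val + n_test]
    train = cleaned[n_val + n_test:]
    return train, val, test


raw = [f"sample  poem {i}" for i in range(20)] + ["sample poem 3", "   ", ""]
train, val, test = clean_and_split(raw)
print(len(train), len(val), len(test))
```

Deduplicating before splitting matters: otherwise the same example can land in both the training and test sets and make the model look better than it is.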

Evaluating And Optimizing Generative AI Models

So, you’ve put in the work, trained your generative AI model, and now it’s time to see how it actually performs. This isn’t just about checking a box; it’s about making sure your model is actually doing what you want it to do, and doing it well. Think of it like tasting your cooking before you serve it – you want to make sure it’s not too salty or bland, right?

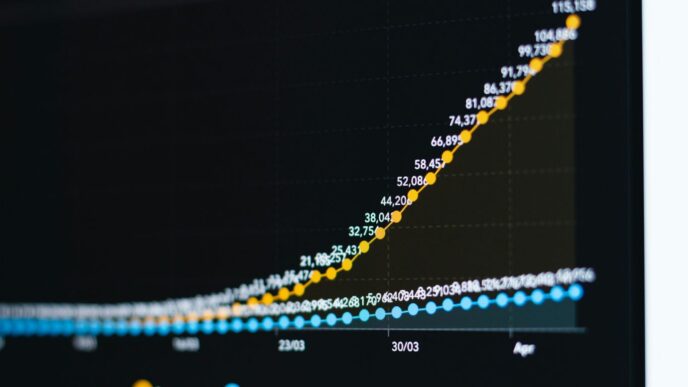

Rigorous Training And Evaluation Of Generative AI Models

Training isn’t a one-and-done deal. You need to really push your model and then check its homework. For generative models, this can be a bit trickier than with regular machine learning. We’re not just looking at simple accuracy scores. For image models, metrics like Fréchet Inception Distance (FID) give a quantitative idea of how close the distribution of generated images is to that of real ones, and a lower FID score is better. But honestly, sometimes you just need to look at the output yourself or get a group of people to check it out. Human judgment is still pretty important here.
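To get a feel for what FID measures, here is a deliberately simplified one-dimensional sketch. Real FID fits multivariate Gaussians to features extracted by an Inception network and needs a matrix square root. This toy version fits a single Gaussian to plain numbers, where the formula reduces to the squared difference of means plus the squared difference of standard deviations. The sample data is synthetic and made up for the demo.

```python
import random
import statistics


def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between 1-D Gaussians fitted to two samples.

    d^2 = (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2
    """
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(2000)]
close = [rng.gauss(0.1, 1.0) for _ in range(2000)]     # similar distribution
collapsed = [rng.gauss(2.0, 0.3) for _ in range(2000)]  # shifted and too narrow

print("close:    ", frechet_distance_1d(real, close))
print("collapsed:", frechet_distance_1d(real, collapsed))
```

The "collapsed" sample scores much worse because both its mean and its spread differ from the real data, which is the same intuition behind a high FID for a model that produces low-variety output.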

Here are a few things to keep in mind during this phase:

- Check for biases: Does your model produce unfair or skewed results? This often comes from the data it learned from.

- Assess output quality: Are the generated images clear? Is the text coherent and relevant?

- Measure performance: How fast is it? How much computing power does it need?

Fine-Tuning Parameters For Peak Performance

Once you have a handle on how your model is doing, it’s time to tweak things. This is where you adjust the settings (the hyperparameters) to get the best results. It’s like tuning a guitar; you adjust the strings until it sounds just right. You might play around with the learning rate, which controls how big a step the model’s weights take on each update, or the batch size, which is how many data samples it processes in a single update. Making these small adjustments can make a big difference in how accurate and capable your model becomes. It can also save you time and resources down the line.
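The simplest way to explore these settings is a grid search: try every combination and keep the best one. In the sketch below, `validation_loss` is a hypothetical stand-in for "train briefly, then measure validation loss", with a made-up formula so the example runs instantly. The candidate values are assumptions too.

```python
import itertools


def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for a short training run plus evaluation."""
    return (learning_rate - 3e-4) ** 2 * 1e6 + abs(batch_size - 64) / 64


search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [16, 64, 256],
}

results = []
for lr, bs in itertools.product(search_space["learning_rate"],
                                search_space["batch_size"]):
    results.append((validation_loss(lr, bs), lr, bs))

# Tuples compare by their first element, so min() picks the lowest loss.
best_loss, best_lr, best_bs = min(results)
print(f"best: lr={best_lr}, batch_size={best_bs}, loss={best_loss:.4f}")
```

Grid search gets expensive quickly as you add hyperparameters, so random search or a dedicated tuning library is often a better fit for larger searches.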

Continuous Monitoring And Retraining Strategies

Your model isn’t static. The world changes, and so does the data. So, you can’t just train it and forget about it. You need to keep an eye on how it’s performing over time. Are the results still good? Are users happy? You’ll want to track key performance indicators (KPIs) – things like how accurate it is, how fast it responds, and if it’s still producing good quality output. If you start noticing a dip in performance or if new data becomes available, it’s time to retrain. This keeps your model relevant and effective. Think of it as giving your model a refresher course every now and then.
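One lightweight way to spot "a dip in performance" is a rolling average compared against a baseline. The class below is a sketch under assumed names and thresholds (`QualityMonitor`, a 0.05 tolerance). The score itself could be any KPI you already track, such as a human rating or an automated quality metric.

```python
from collections import deque


class QualityMonitor:
    """Tracks a rolling mean of a quality score and flags sustained drops."""

    def __init__(self, baseline, tolerance=0.05, window=50):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)

    def record(self, score):
        self.scores.append(score)

    def needs_retraining(self):
        # Wait for a full window so a few bad samples do not trigger retraining.
        if len(self.scores) < self.scores.maxlen:
            return False
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.tolerance


monitor = QualityMonitor(baseline=0.90, window=5)
for score in [0.91, 0.90, 0.89, 0.92, 0.90]:
    monitor.record(score)
print(monitor.needs_retraining())  # healthy scores

for score in [0.70] * 5:
    monitor.record(score)
print(monitor.needs_retraining())  # sustained drop
```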

Specialized Generative AI Training Approaches

Alright, so we’ve talked about the basics and some advanced stuff, but what about when you need to get really specific with your generative AI training? Sometimes, the general methods just don’t cut it, especially with certain types of models or data. This is where specialized techniques come into play. Think of it like having a toolbox with regular wrenches, but then you need a special socket set for a really tricky bolt. That’s what these approaches are for.

GAN-Specific Training Strategies

Generative Adversarial Networks, or GANs, are a bit unique. They have two networks, a generator and a discriminator, that battle it out. This adversarial process is powerful, but it can lead to problems. One common issue is the discriminator getting too good too fast, basically shutting down the generator’s learning. Label smoothing is a neat trick to help with this. Instead of telling the discriminator "this is definitely a real image" (a hard label of 1) or "this is definitely fake" (a hard label of 0), you soften it up. So, real images might get a label like 0.9, and fake ones get 0.1. (A common variant, one-sided label smoothing, softens only the real labels and leaves the fake ones at 0.) This makes the discriminator a bit less confident and gives the generator more room to learn.
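Here is a small sketch of what label smoothing does to the discriminator’s loss, using the 0.9 and 0.1 targets from the paragraph above. The function names are illustrative, and the loss is ordinary binary cross-entropy written out by hand. For the one-sided variant you would pass `fake_label=0.0`.

```python
import math


def smoothed_target(is_real, real_label=0.9, fake_label=0.1):
    """Soft target for the discriminator instead of a hard 1 or 0."""
    return real_label if is_real else fake_label


def binary_cross_entropy(prediction, target, eps=1e-7):
    """BCE for a single prediction in (0, 1); clamped to avoid log(0)."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


target = smoothed_target(is_real=True)
for prediction in (0.90, 0.999):
    hard = binary_cross_entropy(prediction, 1.0)
    soft = binary_cross_entropy(prediction, target)
    print(f"D(x)={prediction}: hard-label loss={hard:.4f}, smoothed loss={soft:.4f}")
```

With hard labels, the loss keeps rewarding the discriminator for pushing its output toward 1. With the smoothed target, an overconfident prediction of 0.999 is penalized more than a prediction of 0.9, which is what keeps the discriminator from running away.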

Progressive Growing For Complex Data

When you’re dealing with really complex data, like high-resolution images, training a GAN from scratch can be a nightmare. Progressive growing tackles this by starting small and building up. You train the model on very low-resolution images first. Once it’s doing well with those, you gradually increase the resolution, adding layers to both the generator and discriminator as you go. This means the model learns the basic structures and patterns first, then adds finer details later. It’s like learning to draw a stick figure before attempting a photorealistic portrait. This approach makes training more stable and can lead to much better results with intricate datasets.
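The "start small and build up" idea boils down to a schedule. The sketch below only plans the stages and does not build or train any network. The helper names, the doubling rule, and the step counts are assumptions for illustration. The `fade_in_alpha` helper reflects the common practice of blending a newly added layer in gradually so the jump in resolution does not destabilize training.

```python
def resolution_schedule(start_res, final_res, steps_per_stage):
    """Plan training stages, doubling the image resolution at each stage."""
    if start_res < 1 or final_res < start_res or steps_per_stage < 1:
        raise ValueError("expected 1 <= start_res <= final_res and steps_per_stage >= 1")
    stages, res, step = [], start_res, 0
    while res <= final_res:
        stages.append({"resolution": res,
                       "start_step": step,
                       "end_step": step + steps_per_stage})
        step += steps_per_stage
        res *= 2
    return stages


def fade_in_alpha(step_in_stage, fade_steps):
    """Blend weight for a newly added layer: ramps linearly from 0 to 1."""
    return min(1.0, step_in_stage / fade_steps)


for stage in resolution_schedule(start_res=4, final_res=64, steps_per_stage=1000):
    print(stage)
print(fade_in_alpha(250, fade_steps=500))
```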

Label Smoothing To Prevent Discriminator Overpowering

We touched on this with GANs, but it’s worth repeating because it’s so important for that specific architecture. The core idea is to make the training targets less extreme. For the discriminator, instead of aiming for a perfect 0 or 1, we aim for slightly softer values, like 0.1 or 0.9. This prevents the discriminator from becoming overly confident and keeps a useful gradient flowing to the generator. It’s a subtle change that can make a big difference in preventing training from stalling or collapsing. It helps maintain a healthy competition between the two networks, which is key to generating high-quality outputs.

Integrating Generative AI Training Into Workflows

So, you’ve gone through the whole process – picked your model, prepped your data, and trained it up. Now what? The real magic happens when you actually get this AI working with the stuff you already do. It’s not just about having a cool model; it’s about making it useful.

Developing APIs For Seamless Integration

Think of APIs (Application Programming Interfaces) as the translators between your new AI and your existing software. Without them, your AI is kind of like a brilliant person stuck in a room with no way to talk to anyone. You need a way for other programs to ask your AI questions and get answers back. This means building specific endpoints that can take requests, feed them to your trained model, and then send the generated output back. This connection is what makes your AI a practical tool, not just a research project.

Here’s a quick rundown of what goes into making this happen:

- Define Input/Output: What kind of data will your AI receive (text, images, numbers)? What will it produce?

- Build the Interface: Write the code that handles incoming requests and outgoing responses.

- Connect to the Model: Make sure your API can load and run your trained generative AI model.

- Handle Errors: What happens if the AI can’t generate a response or if the input is bad? Your API needs to manage these situations gracefully.
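The four steps above can be sketched as a single request handler. This is a framework-agnostic illustration: `fake_generate` is a hypothetical stand-in for your trained model, and the JSON shape (`{"prompt": ...}`), the length limit, and the status codes are assumed design choices. In a real service you would plug a function like this into your web framework of choice.

```python
import json


def fake_generate(prompt):
    """Hypothetical stand-in for a call into a trained model."""
    return f"Generated text for: {prompt}"


def handle_request(body, generate=fake_generate, max_prompt_chars=500):
    """Return (status_code, response_dict) for a JSON request body."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "body must be valid JSON"}

    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    if len(prompt) > max_prompt_chars:
        return 413, {"error": "prompt too long"}

    try:
        return 200, {"output": generate(prompt)}
    except Exception:
        # Do not leak internals to the caller; log details server-side instead.
        return 500, {"error": "generation failed"}


print(handle_request('{"prompt": "a cat in space"}'))
print(handle_request("not json"))
print(handle_request('{"prompt": ""}'))
```

Keeping validation and error handling in one place means every caller gets the same predictable response shape, whether the request succeeds or fails.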

Prioritizing Real-Time Performance In Generative AI

When you’re using AI in a live setting, like a customer chat or a content creation tool, speed matters. Nobody wants to wait ages for a response. Getting your AI to perform quickly often means looking at a few things:

- Model Size: Bigger models can be more powerful, but they’re also slower. Sometimes, a slightly smaller, faster model is better for real-time use.

- Hardware: Running AI on the right hardware, like GPUs, makes a huge difference. Cloud services often provide these.

- Optimization: Techniques like model quantization (storing weights in lower-precision numbers so the model uses less memory and compute) or pruning (removing weights that contribute little) can speed things up without losing too much quality.

- Batching: If you have multiple requests coming in, processing them together in batches can be more efficient than handling each one individually.
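To show what quantization from the list above actually does, here is a toy sketch of symmetric 8-bit quantization on a plain list of weights. It assumes one scale for the whole list and made-up weight values. Real toolkits quantize whole tensors, often per channel, and handle activations too.

```python
def quantize_int8(weights):
    """Symmetric quantization: floats -> integers in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.003, 0.41, -0.66]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

print(quantized)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # worst-case rounding error
```

Each weight now fits in one byte instead of four, and the rounding error is at most half of `scale`. That small loss of precision is the "without losing too much quality" trade-off.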

Understanding The Evolution Of Generative AI Models

Generative AI isn’t static; it’s always changing. What’s cutting-edge today might be standard tomorrow. Keeping up means understanding that your initial training might not be the end of the road. Models get updated, new architectures appear, and the data they’re trained on evolves. This means you need a plan for the future:

- Stay Informed: Keep an eye on new research and developments in the field.

- Plan for Updates: Think about how you’ll incorporate newer, better models into your workflow when they become available.

- Continuous Learning: Your AI might need to be retrained periodically with new data to stay relevant and accurate. This isn’t a one-and-done deal; it’s an ongoing process.

Wrapping It Up

So, we’ve covered a lot of ground on getting generative AI ready for action. It’s not just about picking the fanciest tools or having tons of data, though those things help. It really comes down to training your models right, checking your work, and keeping an eye on what happens after you put them to use. Think of it like building anything complex – you need the right parts, a solid plan, and a lot of testing. As we head into 2026, the landscape keeps changing, but the core ideas of careful planning, smart training, and checking results are what will help you build AI that actually works for you. Don’t forget to think about the ethical side of things too; that’s super important. Keep learning, keep trying new things, and you’ll be well on your way.

Frequently Asked Questions

What exactly is Generative AI?

Think of Generative AI like a super-smart computer program that can create new things. It learns from tons of examples, like pictures, words, or music, and then uses what it learned to make its own original content. It’s like an artist or writer that can come up with brand new ideas based on what it has studied.

Why is choosing the right tools important for training Generative AI?

Picking the right tools is like picking the right ingredients for a recipe. If you have the wrong tools, your AI might not learn well or might take too long to train. The best tools help the AI learn faster and better, making sure it can create the kind of content you want it to.

How does data help Generative AI learn?

Data is like the textbook for Generative AI. The more good quality examples it gets to study, the smarter it becomes. If the data is messy or wrong, the AI will learn bad habits. So, making sure the data is clean and covers many different things is super important for the AI to learn properly.

What does ‘repeatable training’ mean for AI?

Repeatable training means setting things up so you can run the exact same steps each time and get comparable results. That makes it easier to tell whether a change actually helped, and easier to track down problems when the AI gets stuck or keeps making the same mistakes.

How do we know if our Generative AI is good enough?

We test it a lot! We look at what the AI creates and compare it to what we expect. We also adjust its settings, kind of like tuning a radio, to make its creations even better. We keep checking on it even after it’s trained to make sure it stays good.

What are some special tricks for training certain types of Generative AI, like GANs?

For some AI types, like GANs (which have two parts that compete), there are special methods. One trick is called ‘label smoothing,’ which helps one part of the AI not get too bossy. Another is ‘progressive growing,’ where the AI learns with simple things first and then moves on to more complicated stuff, like learning to draw a simple shape before drawing a whole picture.