It feels like every day there’s something new happening with big tech companies and how they’re managed. We’re talking about platforms like Facebook and Instagram, and the rules they set for what people can say and do online. This whole area is getting more complicated, especially with things like AI popping up. It makes you wonder how our governments, and even other countries, are keeping up. The idea of a ‘meta congress’ – a kind of overarching body or discussion about these digital spaces – is really starting to feel relevant. Let’s break down what’s going on with oversight, people who speak out about problems, and how it all connects to what’s happening globally.

Key Takeaways

- The Meta Oversight Board was set up to review tough content decisions, but it’s more about managing decisions for the company than truly checking its power.

- Getting outside groups and regular people involved in deciding platform rules is important, but it’s tricky to make sure their input really matters and that they are independent.

- When people inside these tech companies point out problems, it’s vital that their information is checked and that the company is open about how it makes decisions.

- Digital platforms have a big effect on how countries talk to each other, and fighting false information across borders is a major challenge.

- As AI gets more advanced, we need to think hard about how it affects elections and public discussion, and create laws to handle it.

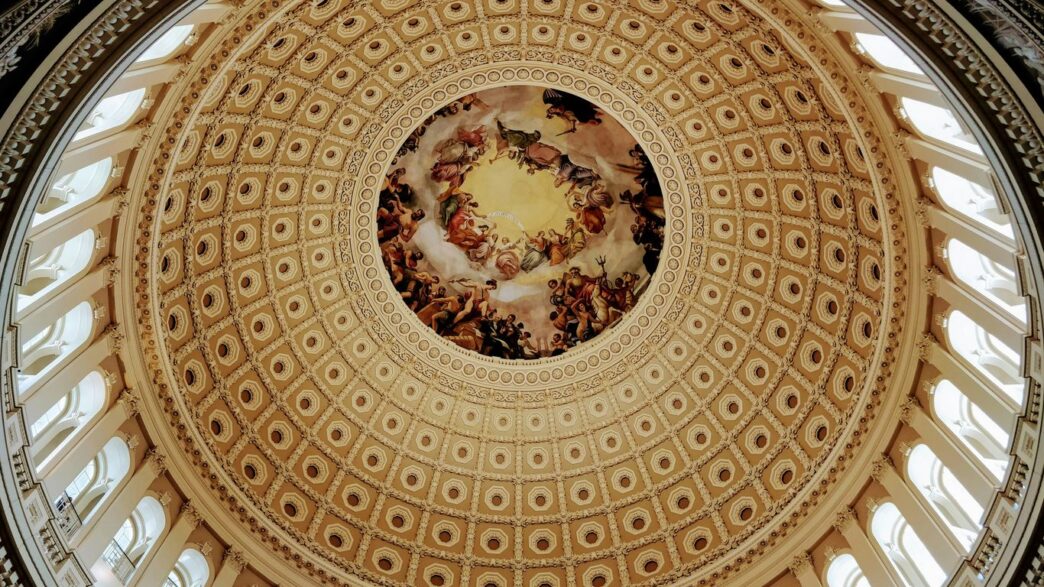

Meta Congress And The Evolving Landscape Of Digital Governance

New headlines about how big tech platforms shape our world arrive almost daily, and it’s a lot to keep up with. These companies, with their massive reach, effectively run a whole digital society. This isn’t just about what you see in your feed; it’s about how decisions are made, who gets a say, and what that means for the rest of us.

Understanding The Meta Oversight Board’s Role

The Meta Oversight Board is an interesting experiment. Think of it as an independent group that Meta itself set up to review some of its toughest content decisions. It looks at questions like whether a post should stay up or come down, especially when the post is controversial or has a big impact. It’s a way for the company to get outside opinions on really tricky calls. Cases reach the board either because Meta refers them or because users appeal after exhausting Meta’s own appeals process. It’s not perfect, and there’s ongoing debate about how much power the board really has, but it’s a step towards some form of accountability.

Challenges In Platform Self-Regulation

Self-regulation is a tough gig for these platforms. On one hand, they’re businesses, and they need to make money. On the other, they’re dealing with issues that affect millions, even billions, of people. It’s a constant balancing act. They try to set their own rules, but sometimes those rules don’t quite fit the real world, or they get applied unevenly. It’s hard to be both the rule-maker and the enforcer, especially when there’s so much money and influence involved. Plus, the speed at which things change online means rules can feel outdated almost as soon as they’re written.

The Impact Of Algorithms On Democratic Processes

Algorithms are the hidden engines driving a lot of what we see online, and their impact on democracy is a huge topic. These aren’t neutral tools; they’re designed to keep us engaged, which often means showing us things that are more likely to get a reaction. This can lead to echo chambers, where we only see opinions that confirm our own, and it can amplify extreme views. It makes it harder for people to have productive conversations across different viewpoints. We’re seeing how these systems can shape public opinion and even influence election outcomes, which is pretty concerning when you think about it. It’s a complex area, and figuring out how to make these systems work better for everyone, not just for engagement metrics, is a big challenge we’re still grappling with.
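To make the engagement point concrete, here is a toy sketch in Python. It is an illustration built on assumptions, not how Meta or any real platform ranks its feeds: each post carries a hypothetical `predicted_reactions` score standing in for a model’s guess at likes, comments, and shares, and the same three made-up posts are ordered two ways, newest first and most-reactive first.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    minutes_ago: int
    # Hypothetical score: a model's guess at how many reactions the post will get.
    predicted_reactions: float


def chronological(posts):
    # Newest first: no judgment about which post will "perform".
    return sorted(posts, key=lambda p: p.minutes_ago)


def engagement_ranked(posts):
    # Highest predicted reactions first: whatever provokes the most response rises.
    return sorted(posts, key=lambda p: p.predicted_reactions, reverse=True)


# Made-up example posts; the numbers are illustrative only.
feed = [
    Post("Local council publishes budget report", minutes_ago=5, predicted_reactions=12.0),
    Post("Outraged hot take about the budget", minutes_ago=90, predicted_reactions=340.0),
    Post("Friend shares vacation photos", minutes_ago=30, predicted_reactions=45.0),
]

print("Chronological:")
for p in chronological(feed):
    print(" ", p.text)

print("Engagement-ranked:")
for p in engagement_ranked(feed):
    print(" ", p.text)
```

Under engagement ranking, the oldest but most provocative post jumps to the top, while the newest and most informative one sinks to the bottom. Real ranking systems blend many more signals, but this captures the incentive the paragraph above describes: what is predicted to get a reaction gets seen first.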

Navigating Oversight In The Digital Age

So, how do we actually keep an eye on these massive online platforms? It’s a question a lot of people are wrestling with, and honestly, there aren’t easy answers. We’ve seen attempts, like Meta’s Oversight Board, which is kind of like a Supreme Court for content decisions. It’s an interesting idea, trying to bring some outside perspective to what can feel like a black box.

Examining The Meta Oversight Board’s Structure

The Oversight Board itself is structured to review some of the toughest content calls Meta makes: think of really controversial posts or decisions to take down material. Its members come from fields such as law, human rights, and journalism. Meta has committed to follow the board’s rulings on the individual posts it reviews, while the board’s broader policy recommendations are advisory. It’s a step towards transparency, but it’s not perfect. The board’s decisions, while influential, don’t always address the root causes of why problematic content spreads in the first place. It looks at individual cases, which is important, but the bigger picture of how algorithms push certain things to the top is a whole other beast.

The Role Of Civil Society In Platform Oversight

Beyond the official boards, there’s a whole world of civil society groups, academics, and everyday users trying to push for better practices. These folks are often the first to spot problems, whether it’s the spread of hate speech or the impact of misinformation on elections. They bring real-world experience and a different kind of accountability. It’s like having a watchdog that’s constantly on the ground. They often push for things like stronger whistleblower protections, which are vital for getting information out about what’s really happening inside these companies.

Balancing Oversight With Corporate Interests

This is where things get tricky. Companies like Meta are businesses, and their main goal is to make money. Oversight mechanisms, whether internal or external, have to figure out how to push for the public good without stifling innovation or making it impossible for these platforms to operate. It’s a constant push and pull. We’ve seen other attempts at oversight, like TikTok’s regional advisory councils, but such bodies haven’t always lasted. Twitter dissolved its Trust and Safety Council in December 2022, shortly after Elon Musk took over the company. It shows how fragile these structures can be.

Here are some key considerations when thinking about this balance:

- Independence: How truly independent can an oversight body be if it’s funded by the company it’s supposed to be overseeing?

- Scope: Does the oversight focus only on individual content decisions, or does it look at the broader system, like how algorithms work?

- Local Context: Do oversight bodies consider local laws, cultural norms, and specific regional issues, or do they apply a one-size-fits-all global approach?

Getting this balance right is tough, and it’s something we’ll likely be debating for a long time.

Whistleblowers And Accountability In Tech

When things go wrong behind the scenes at big tech companies, who is supposed to speak up? That’s where whistleblowers come in, and frankly, they’re often in a tough spot. It’s not easy to be the person who knows about a problem and decides to say something, especially when you’re up against a massive corporation. The issue is getting more pressing: artificial intelligence is advancing faster than whistleblower protections, and advocates are calling for stronger safeguards as AI spreads across industries. That calls for a proactive approach, so that people can report AI-related wrongdoing without fear of retaliation.

The Importance Of Independent Verification

It’s one thing for someone to claim a company is doing something wrong, but it’s another to prove it. Independent verification means looking at the evidence from outside the company. This could involve checking internal documents, talking to other employees, or even looking at the technical side of how a platform works. Without this step, it’s hard to know if the claims are true or just someone’s opinion. It helps make sure that any actions taken are based on facts, not just accusations.

Seeking Technical Input From External Parties

Tech companies are complicated. They use complex systems and algorithms that most people, including many regulators, don’t fully understand. That’s why it’s so important to bring in outside experts. These folks can look at the code, the data, and the systems to figure out what’s really going on. They can help explain how certain features might be causing harm or how a company’s internal processes are failing. This kind of input is vital for making informed decisions about accountability. It’s not just about what the company says is happening, but what external technical analysis reveals.

Transparency In Decision-Making Processes

When a company makes a decision about content, or about how its systems work, it’s often done behind closed doors. Whistleblowers can shed light on these processes, but their information needs to be handled carefully. Transparency means making the decision-making process, and the reasons behind it, public. This allows for scrutiny and helps build trust. For example, understanding how Meta’s “cross-check” program works, or how its machine learning classifiers for nudity are set up, gives everyone a better picture. However, there’s a push and pull here: companies want to protect their trade secrets and prevent bad actors from gaming the system. Finding the right balance between openness and necessary confidentiality is a tricky line to walk, but it’s essential for real accountability.

Meta Congress And Foreign Relations

It’s pretty wild how much these big tech platforms, like Meta, can sway things on a global scale. We’re talking about how they shape conversations between countries, influence what people in different nations think about each other, and even play a part in how information spreads – or doesn’t spread – across borders. It’s not just about what we see on our own feeds anymore; it’s about the bigger picture of international discourse.

The Influence Of Digital Platforms On International Discourse

Think about it: a single post, a trending topic, or even a coordinated campaign can jump from one country to another in minutes. This speed means that platforms aren’t just passive conduits; they’re active players in shaping international relations. They can amplify certain voices, silence others, and create echo chambers that make understanding different perspectives harder. This ability to rapidly disseminate information, or misinformation, globally presents a unique challenge for diplomacy and international understanding. When narratives are controlled or heavily influenced by algorithms and platform policies, it complicates efforts to build bridges and foster genuine dialogue between nations.

Addressing Disinformation In A Global Context

Dealing with fake news and propaganda is tough enough at home, but on a global stage, it’s a whole different ballgame. Disinformation campaigns can be launched from anywhere, targeting specific countries or groups with tailored messages. This makes it incredibly difficult for governments and international bodies to track, verify, and counter. We’ve seen instances where foreign actors have used social media to interfere in elections or sow discord, and these efforts are becoming more sophisticated. It’s a constant cat-and-mouse game, and platforms like Meta are caught in the middle, trying to balance free expression with the need to prevent harm.

Interoperability And International Standards

One of the big questions is how different platforms and countries can work together. Right now, it feels like everyone’s playing by their own rules. Different countries have different laws about online content, and platforms have their own internal policies. This lack of common ground makes it hard to create consistent approaches to issues like data privacy, content moderation, and combating disinformation. Ideally, we’d see more interoperability – systems that can talk to each other – and agreed-upon international standards. This would make it easier to share information, coordinate responses, and hold platforms accountable across borders. It’s a complex puzzle, but one that’s becoming more important as our world gets more connected online.

The Future Of Democracy In The Age Of AI

Artificial intelligence (AI) seems to be in a new headline every day, and it’s a lot to take in, especially when we start thinking about something as big as democracy. We’re talking about systems that can create content, influence opinions, and even mimic human interaction. It’s not just about robots taking jobs anymore; it’s about how these tools might change the way we vote, discuss issues, and hold our leaders accountable.

AI’s Impact On Elections And Political Discourse

Think about how campaigns already use data to target voters. Now imagine AI supercharging that. We’re seeing AI tools that can generate realistic-looking videos and audio – deepfakes, basically. These could be used to spread false information about candidates or events, making it really hard for people to know what’s real. It’s not just about the big, flashy stuff either. AI can also be used to flood social media with tailored messages, subtly nudging public opinion in ways we might not even notice. This makes it tougher for genuine public debate to happen.

- Automated content generation: AI can create fake news articles, social media posts, and even simulated conversations at a scale humans can’t match.

- Microtargeting on steroids: AI can analyze vast amounts of data to identify and persuade specific voter segments with highly personalized messages.

- Deepfake technology: Realistic fake videos and audio can be used to discredit politicians or spread fabricated events.

- Algorithmic amplification: AI-driven social media feeds can prioritize sensational or divisive content, further polarizing the electorate.

Rethinking Democracy For The Age Of AI

So, what do we do? We can’t just put the genie back in the bottle. We need to start thinking differently about how our democratic systems work. For starters, we need to get better at spotting AI-generated content. This might mean developing new tools for verification or educating the public on how to be more critical consumers of information. It also means looking at the platforms themselves. They’ve got algorithms that decide what we see, and those algorithms have a huge impact on political discourse. We need more transparency about how these algorithms work and who they benefit. Maybe we need new kinds of public forums or deliberative processes where people can discuss these complex issues and help shape the rules around AI.

The Need For Comprehensive AI Legislation

Legislation is definitely on the table. Right now, laws around technology often lag way behind the actual tech. We need lawmakers who understand AI and can create rules that protect democratic values without stifling innovation. This isn’t just about banning bad actors; it’s about setting standards for AI development and deployment. Think about things like:

- Transparency requirements: Mandating that AI-generated content be clearly labeled.

- Accountability frameworks: Establishing who is responsible when AI systems cause harm or spread misinformation.

- Data privacy protections: Strengthening rules around how personal data is collected and used to train AI models.

- Independent oversight: Creating bodies that can monitor AI’s impact on society and advise on policy.

Content Moderation And Free Expression

Counter-Speech As A Moderation Strategy

It’s a tricky balance, isn’t it? On one hand, we want platforms to be safe spaces, free from hate speech and harmful content. On the other, nobody wants to feel like their voice is being silenced. That’s where counter-speech comes in. Instead of just deleting problematic posts, the idea is to answer them with corrective or positive messages. If someone is spreading misinformation, for example, others are encouraged to post accurate information right alongside it rather than relying only on the delete button. It’s a way to fight bad ideas with better ones, letting users engage with and correct each other. This approach respects the idea that people should be able to express themselves, even when their views are unpopular, as long as they aren’t inciting violence or breaking laws. It’s one of the ways platforms try to manage online conversations without resorting to outright censorship. The goal is a healthier discourse, not just a quieter one.

The Meta Oversight Board And Freedom Of Expression

The Meta Oversight Board is meant to be an independent body that reviews tough content decisions Meta makes. It hears cases that users appeal after exhausting Meta’s own appeals process, as well as cases Meta refers to it directly, and its rulings are meant to guide Meta’s policies. That matters because it touches on how much freedom people have to speak online. The board has to weigh not just Meta’s own rules, but also international human rights standards on free expression. Sometimes it upholds Meta’s decision, sometimes it overturns it, and it often attaches policy recommendations. The board is trying to figure out where the line is between protecting users and allowing open discussion, which is a constant challenge for any platform. Its decisions can set precedents for how millions of people communicate online.

The Long Online Shadow Of Content Laws

Laws about what you can and can’t say online are a really complex issue, especially when you’re dealing with a global platform like Meta. What’s acceptable in one country might be illegal in another. This creates a huge headache for content moderation. Platforms have to figure out how to apply their rules consistently across different legal systems and cultural norms. It’s not just about deleting posts; it’s about understanding the local context, the language, and the history behind what’s being said. A comment that seems harmless in the US could be deeply offensive or even incite violence in a different part of the world. This is why understanding the legal framework is so important. It’s a constant balancing act, trying to respect free speech principles while also complying with laws and preventing real-world harm. The decisions made today cast a long shadow, shaping how we communicate and access information for years to come.

Wrapping It Up

So, we’ve looked at how Meta tries to keep things in check, what happens when people inside spill the beans, and how all this mess connects to what’s happening overseas. It’s not exactly a simple picture, is it? There are a lot of moving parts, and figuring out who’s watching the watchers, especially when foreign interests might be involved, is a tough nut to crack. We’ve seen how these platforms are trying to set up their own rules, but whether that’s enough, or if it just shifts the problem around, is still up in the air. It feels like we’re still figuring out the best way to handle this stuff, and honestly, it’s going to take a lot more talking and maybe some new ideas to get it right.

Frequently Asked Questions

What is the Meta Oversight Board and what does it do?

Think of the Meta Oversight Board as a special group that reviews some of the tricky decisions Facebook and Instagram make about what content should or shouldn’t be allowed on their sites. They act like a higher court for content, looking at cases that are really hard to decide and giving their opinions. Their goal is to help Meta make better choices about what people can see and say online.

How does Meta Congress relate to digital rules?

Meta Congress isn’t a real place where laws are made, but it’s a way to talk about how companies like Meta (which owns Facebook and Instagram) are being watched and how they should handle things like what people post. It’s about figuring out the rules for the internet and digital spaces, especially when it comes to big tech companies and their power.

Why are whistleblowers important in the tech world?

Whistleblowers are people who work inside a company and reveal important information about something wrong or harmful happening there. In tech, they can expose problems with how platforms work, how they handle user data, or how their technology might be causing harm. Their bravery helps bring attention to issues that might otherwise stay hidden, pushing for more honesty and better practices.

How do algorithms affect our online experience and democracy?

Algorithms are like secret recipes that decide what you see online, like posts on social media or videos on YouTube. They can be designed to keep you engaged, but sometimes they show you more extreme or misleading content, which can influence what you think and even affect elections. Making sure these algorithms are fair and transparent is a big challenge for democracy.

What does ‘counter-speech’ mean when talking about online content?

Counter-speech is when people respond to harmful or hateful messages online by posting their own messages that promote positive ideas or challenge the bad ones. Instead of just taking down bad content, it’s about adding more good or truthful voices to the conversation. It can be helpful sometimes, but it’s not always enough to solve the whole problem.

How can technology and AI change the future of democracy?

Artificial intelligence (AI) and other new technologies can change democracy in many ways. AI can be used to create fake news that looks real, spread it quickly, and even influence how people vote. It also raises questions about privacy and how our information is used. We need to think carefully about how to use these powerful tools in ways that support, rather than harm, democratic ideas.