So, Gartner’s 2025 Hype Cycle for AI is out, and it’s got some interesting things to say. You know how things get super hyped up, then everyone realizes it’s not quite what they thought, and then it settles down? That’s kind of what this whole AI thing is doing. This year’s report is less about the shiny new toys and more about the nuts and bolts that actually make AI work. We’re seeing a big shift from just playing around with AI to figuring out how to make it reliable, safe, and useful for the long haul. It’s a good time to look beyond just generative AI and see what else is cooking.

Key Takeaways

- Generative AI is moving past the initial excitement and into a phase where people are figuring out what really works and what doesn’t, according to Gartner’s 2025 Hype Cycle for AI.

- Building AI that lasts requires a focus on things like AI engineering and ModelOps, making sure it’s dependable and follows the rules.

- Good data is super important, and we’re seeing more attention on making sure data is ready for AI and using synthetic data to help train models better and more privately.

- Software development is changing, with AI-native approaches becoming a thing, where AI is built into the process of making software from the start.

- AI is spreading out to devices at the edge, and there’s a growing need for clear rules and security as AI systems get more complex and decentralized.

Navigating the 2025 Gartner Hype Cycle for AI

Alright, let’s talk about where AI is headed in 2025, according to Gartner’s latest Hype Cycle report. It feels like just yesterday everyone was buzzing about the next big thing in AI, and honestly, it’s easy to get caught up in the excitement. But this year’s report shows a real shift. We’re moving past the initial ‘wow’ factor and starting to look at what actually works, what’s practical, and how we can make AI a reliable part of our businesses.

Understanding the AI Hype Cycle’s Evolution

So, what exactly is this Hype Cycle thing? Think of it as a roadmap for new technologies. It shows how quickly a technology catches on, how much people talk about it, and how it eventually settles into real-world use. It typically moves through five phases:

- Innovation Trigger: This is where an idea first pops up, maybe a cool demo or a research paper. It gets people talking, but it’s not ready for prime time.

- Peak of Inflated Expectations: Everyone’s excited! Success stories start appearing, but so do the failures and the over-promising. It’s a bit of a rollercoaster.

- Trough of Disillusionment: The initial excitement fades. People realize it’s harder than they thought, and interest wanes. This is where many technologies get stuck.

- Slope of Enlightenment: Things start to get clearer. People figure out how to use the technology better, and second-generation versions start to appear.

- Plateau of Productivity: The technology is now mainstream. It’s delivering real results and is a standard part of how things are done.

For 2025, Gartner’s seeing a lot of AI technologies moving through these stages. The big story is that the wild, unbridled enthusiasm is cooling down. Instead, we’re focusing on the nuts and bolts – the stuff that makes AI actually work reliably and at scale. It’s less about the flashy experiments and more about building the infrastructure that can support AI for the long haul.

From Hype to Practical Application: The 2025 AI Landscape

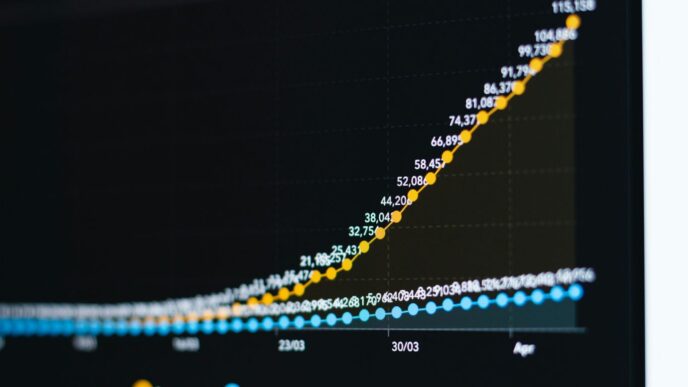

Last year, Generative AI (GenAI) was everywhere. Companies were throwing money at it, trying out chatbots and content generators. But now, in 2025, GenAI is entering what Gartner calls the ‘Trough of Disillusionment.’ This doesn’t mean it’s dead; far from it. It just means the initial, often unrealistic, expectations are being replaced by a more grounded view. We’re seeing that just investing in GenAI isn’t enough. Companies are realizing they need to figure out the right use cases, deal with issues like AI ‘hallucinations’ and bias, and actually get a return on their investment. It’s a tough but necessary phase for the technology to mature.

This shift is happening across the board. The focus is moving towards technologies that enable AI. Think about things like AI Engineering – building the systems to manage AI reliably – and ModelOps, which is all about getting AI models into production and keeping them running smoothly. Data is also a huge part of this. It’s not just about having data anymore; it’s about having ‘AI-ready’ data that’s clean, relevant, and ethically sound. We’re also seeing synthetic data become more important, helping to train models without using sensitive real-world information.

Key Takeaways Beyond Generative AI

So, what should we be paying attention to, besides the GenAI story?

- AI Agents are at their Peak: These are systems designed to act autonomously. While there’s a lot of excitement, Gartner warns that strong governance and collaboration between different teams are needed to make them work safely and effectively.

- Edge AI is Growing: This is about putting AI processing power directly onto devices, closer to where the data is generated. It’s faster, more private, and is becoming really important for things like smart devices and industrial equipment.

- AI-Native Software Engineering is New: This is a big one. It’s a whole new way of building software, where AI is integrated into the development process itself, making it more intelligent and automated.

Essentially, 2025 is shaping up to be the year AI starts to get serious. The hype is giving way to a more practical, infrastructure-focused approach. It’s about building AI that’s not just innovative, but also reliable, scalable, and trustworthy.

Generative AI’s Shift to the Trough of Disillusionment

Remember all the buzz around Generative AI? For a while, it felt like every company was throwing money at it, playing with chatbots and trying to generate all sorts of content. Well, the 2025 Gartner Hype Cycle shows GenAI is now heading into the Trough of Disillusionment. This isn’t a bad thing, though. It’s more like a reality check, a moment where those sky-high expectations start to meet the actual challenges of putting this tech to work.

Recalibrating Expectations for GenAI

The initial excitement for GenAI was huge and, honestly, a bit over the top. Companies spent heavily, an average of $1.9 million in 2024, yet many weren’t seeing the returns they hoped for. It turns out investing alone isn’t enough. For some, finding the right way to use it was tough, while others ran into problems scaling it up. This shift means we’re moving from experimenting to making GenAI work reliably and safely in the real world. It’s about getting practical and focusing on what actually delivers business value, not just chasing the latest trend. That recalibration is exactly the pattern the Hype Cycle is built to describe.

Addressing Investment Paradoxes and ROI

So, why the disconnect between spending and results? For companies still figuring out AI, finding the right use cases was a hurdle. They didn’t quite grasp how AI could fit into their operations. On the flip side, more advanced companies hit snags with scaling and making sure their teams actually understood how to use the tools effectively. This gap highlights that simply pouring money into GenAI projects doesn’t automatically lead to transformation. It’s about smart application and building the right foundation.

The Trust and Governance Challenge for GenAI

Beyond the practicalities, there’s the big issue of trust and control. Businesses are under more pressure than ever to ensure their AI is ethical, fair, and doesn’t produce weird, made-up information (hallucinations). Lawmakers are starting to pay attention, proposing rules for GenAI. Internally, companies are setting up committees to watch over AI models, trying to make them more transparent and less risky. It’s a two-part challenge: managing the AI itself and dealing with the rules about how it should be used. Plus, there’s the ongoing need to train employees so they can actually use and validate AI outputs correctly.

The Rise of Foundational AI Enablers

Okay, so Generative AI is getting a lot of the spotlight, right? But behind the scenes, there’s a whole other set of technologies quietly getting ready to make AI actually work, and work well, across businesses. Gartner’s 2025 Hype Cycle is pointing to a few key areas that are moving up the ‘Slope of Enlightenment’ – basically, they’re becoming more practical and less theoretical. Think of these as the plumbing and wiring for your AI systems; you don’t always see them, but they’re super important for everything else to function.

AI Engineering: Building Sustainable AI Infrastructure

This is all about treating AI development like any other serious engineering discipline. It’s not just about building a cool model in a lab; it’s about creating AI systems that are reliable, can be tested properly, and can actually be maintained over time. We’re talking about version control for models, automated testing, and constant monitoring. For many companies, this means shifting from seeing AI as a fun experiment to viewing it as a core utility, like electricity or the internet. AI engineering is becoming the bedrock for scaling AI responsibly. It helps make sure your AI investments fit into your existing IT setup and don’t cause chaos.
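To make “automated testing” a bit more concrete, here’s a minimal sketch of a release gate that a pipeline might run before promoting a new model version. It assumes the model is just a Python callable and that you have a labeled hold-out set. The function names and the thresholds (a 0.90 accuracy floor and at most one point of regression against the current production baseline) are hypothetical choices for illustration, not anything Gartner prescribes.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class GateResult:
    passed: bool
    score: float
    reason: str


def accuracy(predict: Callable[[float], int], xs: Sequence[float], ys: Sequence[int]) -> float:
    """Fraction of hold-out examples the model labels correctly."""
    if not xs or len(xs) != len(ys):
        raise ValueError("hold-out set must be non-empty with one label per input")
    correct = sum(1 for x, y in zip(xs, ys) if predict(x) == y)
    return correct / len(xs)


def release_gate(
    candidate: Callable[[float], int],
    baseline_accuracy: float,
    xs: Sequence[float],
    ys: Sequence[int],
    min_accuracy: float = 0.90,    # hypothetical absolute floor
    max_regression: float = 0.01,  # hypothetical tolerance vs. the live model
) -> GateResult:
    """Block promotion if the candidate is too weak or worse than what's live."""
    acc = accuracy(candidate, xs, ys)
    if acc < min_accuracy:
        return GateResult(False, acc, f"accuracy {acc:.2f} is below the {min_accuracy:.2f} floor")
    if acc < baseline_accuracy - max_regression:
        return GateResult(False, acc, f"accuracy {acc:.2f} regresses from baseline {baseline_accuracy:.2f}")
    return GateResult(True, acc, "ok")


# Usage: a toy threshold "model" evaluated on four labeled points.
xs, ys = [0.1, 0.4, 0.6, 0.9], [0, 0, 1, 1]
print(release_gate(lambda x: int(x > 0.5), baseline_accuracy=0.95, xs=xs, ys=ys))
```

The point of the two checks is that a model can clear an absolute bar and still be a step backward from what’s already in production. The gate catches both cases.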

ModelOps: Operationalizing AI Models at Scale

Once you’ve built an AI model, what do you do with it? That’s where ModelOps comes in. It’s the practice of managing the entire lifecycle of AI models – from when they’re first trained, through testing, deployment, and then keeping an eye on them once they’re live. When you have dozens or even hundreds of AI models running, doing this manually is just not going to cut it. ModelOps provides the frameworks and automation needed to keep things consistent, compliant, and traceable. Gartner expects this to hit the ‘Plateau of Productivity’ soon, meaning it’s becoming a standard way to turn experimental AI into real-world applications.
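One way to picture the “consistent, compliant, and traceable” part is a model registry that only allows certain lifecycle transitions and records who moved each model, and when. This is a minimal sketch under assumed conventions. The stage names, the allowed-transition table, and the `ModelRecord` class are all hypothetical, and real ModelOps platforms track far more than this.

```python
from datetime import datetime, timezone
from enum import Enum


class Stage(Enum):
    TRAINED = "trained"
    VALIDATED = "validated"
    DEPLOYED = "deployed"
    MONITORED = "monitored"
    RETIRED = "retired"


# Hypothetical policy: a model must pass validation before deployment, and any
# stage can be retired. Retraining produces a new version (i.e. a new record).
ALLOWED = {
    Stage.TRAINED: {Stage.VALIDATED, Stage.RETIRED},
    Stage.VALIDATED: {Stage.DEPLOYED, Stage.RETIRED},
    Stage.DEPLOYED: {Stage.MONITORED, Stage.RETIRED},
    Stage.MONITORED: {Stage.RETIRED},
    Stage.RETIRED: set(),
}


class ModelRecord:
    def __init__(self, name: str, version: str) -> None:
        self.name = name
        self.version = version
        self.stage = Stage.TRAINED
        # Audit trail entries: (UTC timestamp, actor, from-stage, to-stage)
        self.history: list[tuple[str, str, str, str]] = []

    def advance(self, new_stage: Stage, actor: str) -> None:
        """Move to new_stage if the policy allows it, and log the change."""
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {new_stage.value} is not allowed")
        ts = datetime.now(timezone.utc).isoformat()
        self.history.append((ts, actor, self.stage.value, new_stage.value))
        self.stage = new_stage


model = ModelRecord("churn-predictor", "1.3.0")
model.advance(Stage.VALIDATED, actor="ci-pipeline")
model.advance(Stage.DEPLOYED, actor="release-manager")
```

Trying to skip validation (calling `advance(Stage.DEPLOYED, ...)` on a freshly trained record) raises `ValueError`. That’s the point: with dozens of models in flight, the pipeline can’t quietly bypass a step, and the history shows who did what.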

The Importance of AI-Ready Data

This one might seem obvious, but it’s worth repeating: AI is only as good as the data it’s trained on. As AI systems become more complex and are used in more critical ways, the quality, cleanliness, and accessibility of the data become paramount. This isn’t just about having a lot of data; it’s about having the right data, properly labeled, and ready to be used without a ton of pre-processing. Think of it as preparing your ingredients before you start cooking – you can’t make a great meal with rotten produce. For AI, having ‘AI-ready data’ means reducing bias, improving model accuracy, and speeding up the whole development process. It’s a foundational piece that often gets overlooked in the rush for the latest AI model.
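Here’s a minimal sketch of what a basic “is this data AI-ready?” check might look like for tabular records. It’s an illustration under simple assumptions: rows are Python dicts, `None` means missing, and values are hashable. The function name and the 5% missing-value tolerance are hypothetical, and real readiness work goes well beyond these counts.

```python
from collections import Counter
from typing import Any


def readiness_report(
    rows: list[dict[str, Any]],
    required: list[str],
    label: str,
    max_missing_rate: float = 0.05,  # hypothetical tolerance per column
) -> dict[str, Any]:
    """Summarize a few basic 'is this data usable for training?' checks."""
    if not rows:
        raise ValueError("no rows to check")
    total = len(rows)
    # Missing values per required feature column.
    missing = {col: sum(1 for r in rows if r.get(col) is None) for col in required}
    # Rows a supervised model can't learn from because they have no label.
    unlabeled = sum(1 for r in rows if r.get(label) is None)
    # Exact duplicate rows can over-weight some patterns during training.
    duplicates = total - len({tuple(sorted(r.items())) for r in rows})
    # Label distribution, to spot heavy class imbalance.
    label_counts = Counter(r[label] for r in rows if r.get(label) is not None)
    ready = (
        all(n / total <= max_missing_rate for n in missing.values())
        and unlabeled == 0
        and duplicates == 0
    )
    return {
        "rows": total,
        "missing": missing,
        "unlabeled": unlabeled,
        "duplicates": duplicates,
        "label_counts": dict(label_counts),
        "ready": ready,
    }


rows = [
    {"age": 34, "income": 52000, "churned": 0},
    {"age": None, "income": 61000, "churned": 1},
    {"age": 34, "income": 52000, "churned": 0},  # exact duplicate of row 0
    {"age": 45, "income": None, "churned": None},
]
report = readiness_report(rows, required=["age", "income"], label="churned")
```

Even on four rows, this flags a missing age, a missing income, an unlabeled record, and a duplicate. These are exactly the kinds of problems that slow down model development when they surface late.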

AI Agents: Peak Expectations and Path to Maturity

AI agents are currently sitting at the Peak of Inflated Expectations. You know, the stage where everyone’s talking about how autonomous workflows will change everything, but the reality is a bit more complicated. It’s like when a new gadget comes out, and the ads promise you’ll be a master chef overnight, but then you actually try to use it, and it’s not quite so simple.

From Automation to Autonomous Systems

So, what exactly are these AI agents? Think of them as more than just your average automation script. They can actually reason, understand different kinds of information (not just text!), and learn as they go. This means they can do things like manage complex workflows, talk to different software systems to get things done, or even figure out the best way to manage a supply chain. It’s a big step up from just following a set of rules. We’re seeing them move from simple chatbots to more complex systems where multiple agents work together to achieve a common goal.

Navigating Security, Governance, and Trust Barriers

But here’s the tricky part: trusting these agents. Most companies are still hesitant to let agents run completely on their own, especially for important tasks. There’s a real fear of mistakes, or of the agent making things up (hallucinations), which could expose sensitive data or create other ethical problems. Plus, these systems open up new ways for bad actors to get in. We need to think hard about who gets access, how we can understand why an agent made a certain decision, and how to fix it if it goes wrong. It’s a whole new set of security and control issues to figure out, and a big reason AI compliance is becoming such a priority for software teams.

Organizational Readiness for AI Agents

Getting ready for AI agents isn’t just about the technology; it’s about the people and processes too. Organizations need to:

- Develop clear rules and guidelines for how agents will operate.

- Set up ways to watch what the agents are doing and how they’re performing.

- Make sure that the teams building and using these agents (like engineers and data scientists) are working together with the people who manage risks.
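The first two points above map onto a small piece of plumbing. You need an allowlist of tools the agent may use, a rule that certain actions require a named human approver, and an audit log of every attempt, whether it was allowed or not. The sketch below assumes an agent that requests actions by tool name. `GovernedRunner`, `ToolPolicy`, and the example tools are hypothetical and not part of any specific agent framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class ToolPolicy:
    allowed: set[str]                                      # tools the agent may call at all
    needs_approval: set[str] = field(default_factory=set)  # tools needing a human sign-off


@dataclass
class GovernedRunner:
    tools: dict[str, Callable[..., Any]]
    policy: ToolPolicy
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def _log(self, tool: str, args: dict[str, Any], status: str, approved_by: Optional[str]) -> None:
        self.audit_log.append({"tool": tool, "args": args, "status": status, "approved_by": approved_by})

    def call(self, tool: str, approved_by: Optional[str] = None, **kwargs: Any) -> Any:
        """Run a tool on the agent's behalf, enforcing the policy and logging every attempt."""
        if tool not in self.policy.allowed:
            self._log(tool, kwargs, "denied", approved_by)
            raise PermissionError(f"tool {tool!r} is not on the allowlist")
        if tool in self.policy.needs_approval and approved_by is None:
            self._log(tool, kwargs, "needs_approval", approved_by)
            raise PermissionError(f"tool {tool!r} requires human approval")
        result = self.tools[tool](**kwargs)
        self._log(tool, kwargs, "executed", approved_by)
        return result


# Hypothetical tools an order-support agent might request.
tools = {
    "lookup_order": lambda order_id: {"id": order_id, "status": "shipped"},
    "issue_refund": lambda order_id, amount: f"refunded {amount} on {order_id}",
    "delete_customer": lambda customer_id: None,
}
runner = GovernedRunner(
    tools,
    ToolPolicy(allowed={"lookup_order", "issue_refund"}, needs_approval={"issue_refund"}),
)
print(runner.call("lookup_order", order_id="A17"))
```

Logging denied and pending attempts, not just successful ones, is what gives risk teams something to review when they need to understand why an agent did (or tried to do) something.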

Without these things in place, it’s going to be tough to move past the hype and actually make AI agents work reliably and safely in the real world.

Edge AI and Decentralized Intelligence

You know, not long ago we were all talking about how everything had to be in the cloud. Now, the 2025 Gartner Hype Cycle is pointing to something different: intelligence moving out to the devices themselves. This is what they’re calling Edge AI, and it’s a pretty big deal.

Intelligence Moving to the Periphery

Think about it. Instead of sending all your data back to a central server to be processed, a lot of that work can happen right on the device. This means faster responses, which matters enormously for things like self-driving cars and is handy even for your smart thermostat. Plus, keeping data local can be a big win for privacy. It’s about making AI work where the action is, not just in some distant data center. This trend is really picking up steam, and it’s expected to be a major area for investment over the next few years.

The Case for Decentralized Processing

As we get more and more connected devices – you know, the whole Internet of Things thing – sending all that information around becomes a bottleneck. Edge AI offers a way around that. By putting AI models directly into sensors or cameras, we can process information locally. This not only saves bandwidth but also helps meet new rules about where data needs to be processed. It’s a more efficient way to handle the growing amount of data we’re dealing with.
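Here’s a minimal sketch of the bandwidth argument. Instead of streaming every raw sensor reading to the cloud, the device reduces a window of readings to a small summary plus any outliers. The z-score rule, the 3.0 threshold, and the payload fields are assumptions chosen for illustration; a real edge deployment might run a trained model at this step instead.

```python
from statistics import mean, pstdev


def summarize_on_device(readings: list[float], z_threshold: float = 3.0) -> dict:
    """Reduce a window of raw sensor readings to a compact payload.

    Only the summary and any outliers leave the device; the raw window stays local.
    """
    if not readings:
        raise ValueError("empty window")
    mu = mean(readings)
    sigma = pstdev(readings)
    # Simple z-score rule, an assumed stand-in for a real on-device model.
    anomalies = [
        (i, r) for i, r in enumerate(readings)
        if sigma > 0 and abs(r - mu) / sigma > z_threshold
    ]
    return {
        "count": len(readings),
        "mean": round(mu, 2),
        "max": max(readings),
        "anomalies": anomalies,
    }


# A window of 20 temperature readings with one spike at the end.
window = [20.0] * 19 + [80.0]
payload = summarize_on_device(window)
```

Twenty readings become one small dictionary, and only the spike at index 19 is reported individually. Repeat that across thousands of sensors and the savings in network traffic add up quickly.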

Integrating Edge AI with Foundation Models

What’s really interesting is when you start combining this edge processing with those big foundation models we’ve been hearing so much about. It opens up new possibilities, like having AI assistants that really understand your context or systems that can predict when a piece of factory equipment might break down. To make this work well, though, companies need to focus on making those AI models smaller and smarter, and figuring out how they can learn and adapt without needing constant updates from a central source.

The Debut of AI-Native Software Engineering

This year, we’re seeing a whole new category pop up: AI-native software engineering. Think of it like this: remember when tools like GitHub Copilot started suggesting code? That was AI-assisted. AI-native is the next step, where AI doesn’t just suggest, it actually builds entire pieces of software. It’s a big shift in how we make apps and systems.

Transforming Software Development with Intelligence

AI-native development means we’re baking intelligence right into the software creation process, from the very beginning. It’s not just about writing code line by line anymore. Instead, AI can handle tasks like figuring out what a program needs to do, writing the code itself, and even testing it. This changes the game for developers.

The Evolving Role of the Software Engineer

So, what does this mean for software engineers? Their job is changing. Instead of focusing on every single line of code, they’ll be more like conductors, guiding the AI. This means more time spent on big-picture thinking, creativity, and making sure the AI’s work makes sense and follows the rules. It’s about overseeing intelligent systems rather than just coding.

Here’s a look at the shift:

- AI-Assisted: Tools suggest code snippets or complete lines.

- AI-Native: AI systems independently generate larger code modules or even entire applications based on requirements.

- Human Role: Shifts from direct coding to requirement definition, AI output validation, and system orchestration.
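The “AI output validation” part of that human role can be made concrete with a tiny acceptance gate. Generated code is accepted only if it passes tests a human wrote from the requirements. This sketch assumes the generated code arrives as a Python source string defining one function. `accept_generated` is a hypothetical helper, and `exec` here runs the code unsandboxed, which a real pipeline should never do with untrusted output.

```python
from typing import Any


def accept_generated(source: str, func_name: str, cases: list[tuple[tuple, Any]]) -> bool:
    """Accept AI-generated code only if it defines func_name and passes every case.

    WARNING: exec() runs the code with no sandbox; use an isolated runner in practice.
    """
    namespace: dict[str, Any] = {}
    try:
        exec(source, namespace)
        fn = namespace[func_name]
        return all(fn(*args) == expected for args, expected in cases)
    except Exception:
        # Syntax errors, a missing function, and runtime crashes all mean rejection.
        return False


# Human-written acceptance tests derived from the requirement "add two numbers".
cases = [((1, 2), 3), ((0, 0), 0), ((-4, 10), 6)]

candidate_ok = "def add(a, b):\n    return a + b\n"
candidate_wrong = "def add(a, b):\n    return a - b\n"
candidate_broken = "def add(a, b) return a + b"
```

The engineer’s leverage moves into `cases`. Writing good acceptance tests becomes the way requirements get enforced on code the engineer didn’t type.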

Addressing Risks in AI-Native Lifecycles

Now, this new way of building software isn’t without its challenges. When AI systems work together, or when AI makes mistakes (sometimes called "hallucinations"), it can cause problems that spread quickly. Security also becomes a bigger concern as these complex systems connect. Gartner points out that while this move is happening, we absolutely need to build AI responsibly from the start to avoid issues down the road.

Synthetic Data: Fueling Responsible AI Innovation

Essential for Model Training and Bias Correction

So, we’ve talked a lot about AI getting smarter, right? Well, a big part of that is having good data to learn from. But sometimes, real-world data is tricky. It might be hard to get enough of it, or it could contain sensitive personal information that we absolutely can’t mess with. That’s where synthetic data comes in. Think of it like creating artificial examples that look and act like real data, but without any of the actual private stuff. This is super helpful for training AI models, especially when you need a ton of examples. It also gives us a way to fix biases that might be lurking in the original data. By generating diverse, controlled datasets, we can help make AI systems fairer.
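To show the basic idea, here’s a deliberately simple generator. It learns each column’s distribution from real rows (a normal fit for numbers, frequency counts for categories) and samples new rows from those. An optional override rebalances an under-represented group. This is an assumed, toy technique, not how production synthetic-data tools work: sampling columns independently throws away correlations between them, and on its own it is not a formal privacy guarantee.

```python
import random
from collections import Counter
from statistics import mean, stdev
from typing import Any, Optional


def fit_marginals(rows: list[dict[str, Any]], numeric: list[str], categorical: list[str]) -> dict[str, tuple]:
    """Learn a per-column distribution; no individual row is kept."""
    model: dict[str, tuple] = {}
    for col in numeric:
        values = [r[col] for r in rows]
        model[col] = ("normal", mean(values), stdev(values))
    for col in categorical:
        model[col] = ("categorical", dict(Counter(r[col] for r in rows)))
    return model


def sample_synthetic(
    model: dict[str, tuple],
    n: int,
    seed: int = 0,
    reweight: Optional[dict[str, dict[Any, float]]] = None,
) -> list[dict[str, Any]]:
    """Draw n synthetic rows; `reweight` replaces a categorical column's weights."""
    rng = random.Random(seed)
    reweight = reweight or {}
    rows = []
    for _ in range(n):
        row: dict[str, Any] = {}
        for col, spec in model.items():
            if spec[0] == "normal":
                _, mu, sigma = spec
                row[col] = rng.gauss(mu, sigma)
            else:
                weights = reweight.get(col, spec[1])
                categories = list(weights)
                row[col] = rng.choices(categories, weights=[weights[c] for c in categories])[0]
        rows.append(row)
    return rows


# Real data: group "B" makes up only 20% of the rows.
real = [{"group": "A" if i < 8 else "B", "income": 40_000 + 4_000 * i} for i in range(10)]
model = fit_marginals(real, numeric=["income"], categorical=["group"])
# Rebalance to roughly 50/50 so a downstream model sees both groups equally often.
synthetic = sample_synthetic(model, n=2000, seed=42, reweight={"group": {"A": 1.0, "B": 1.0}})
```

The `reweight` argument is the “controlled” part. You decide the group mix in the training data instead of inheriting whatever skew the original collection had.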

Addressing Privacy and Security Challenges

Using real data, especially from customers or patients, comes with a whole heap of privacy rules and security worries. You can’t just go sharing that around. Synthetic data sidesteps a lot of these problems. Because it doesn’t describe real individuals, it carries much lower privacy risk, as long as the generator isn’t simply reproducing real records. This means companies can experiment and build better AI models with far less risk of leaking sensitive information or breaking regulations. It’s like having a safe sandbox to play in. This approach helps keep things secure and compliant, which is a big deal these days.

Enabling Ethical AI Experimentation

Building AI responsibly means thinking about ethics from the start. Synthetic data plays a key role here. It allows developers to test out different AI scenarios and model behaviors in a controlled environment. For instance, you can create specific datasets to see how an AI model handles rare situations or to check if it’s treating different groups of people equally. This kind of experimentation is vital for identifying and fixing potential ethical issues before the AI is used in the real world. It’s a practical way to make sure AI is developed and used in a way that benefits everyone.
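Here’s what one such check could look like. Run a model over a controlled test set (synthetic, for example) and compare how often it gives a positive outcome to each group. This is usually called the selection-rate or demographic parity gap. The function names, the toy scoring rule, and the 0.1 flag threshold are hypothetical, and which fairness metric is appropriate depends heavily on the use case.

```python
from collections import defaultdict
from typing import Any, Callable, Iterable


def selection_rates(
    records: Iterable[dict[str, Any]],
    predict: Callable[[dict[str, Any]], bool],
    group_key: str,
) -> dict[Any, float]:
    """Share of records in each group that the model predicts positively."""
    totals: dict[Any, int] = defaultdict(int)
    positives: dict[Any, int] = defaultdict(int)
    for r in records:
        g = r[group_key]
        totals[g] += 1
        positives[g] += int(bool(predict(r)))
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: dict[Any, float]) -> float:
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# A controlled probe set: identical score ranges for both groups.
probe = [{"group": g, "score": s} for g in ("A", "B") for s in range(10)]

# A hypothetical model that (unfairly) applies a stricter cutoff to group B.
biased = lambda r: r["score"] >= (5 if r["group"] == "A" else 7)
rates = selection_rates(probe, biased, group_key="group")
gap = parity_gap(rates)
flagged = gap > 0.1  # hypothetical tolerance
```

Because both groups in the probe set have exactly the same scores, any gap in outcomes can only come from the model. That kind of isolation is hard to get with messy real-world data.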

Looking Ahead: Beyond the Hype

So, what does all this mean for businesses looking to actually use AI in 2025 and beyond? It’s clear the initial frenzy around generative AI is settling down. We’re moving past the ‘wow’ factor and into the practicalities of making AI work reliably and safely. Technologies like AI Engineering and ModelOps are becoming the backbone, helping companies build and manage AI systems that deliver real results, not just experiments. Plus, with AI agents and AI-native software engineering starting to take shape, the way we build and interact with technology is set for a big change. It’s less about chasing the newest shiny object and more about building solid, trustworthy AI foundations that can grow with your business.

Frequently Asked Questions

What is the Gartner Hype Cycle for AI?

Think of the Gartner Hype Cycle as a roadmap for new technologies. It shows how popular a technology is, how much people expect it to do, and when it will actually be useful. It has five parts: an idea starting, lots of excitement, people getting disappointed, learning how to use it better, and finally, it becoming a normal, helpful tool.

Why is Generative AI moving to the ‘Trough of Disillusionment’?

Generative AI, like chatbots and image creators, had a lot of hype. Now, people are realizing it’s not perfect. Companies spent money but didn’t always get the results they hoped for. This stage means people are figuring out the real challenges and how to use it smartly, instead of just being amazed by it.

What are ‘AI Enablers’ and why are they important?

AI Enablers are the behind-the-scenes technologies that make AI work well. Things like AI Engineering (building good AI systems) and ModelOps (managing AI models) are key. They help make sure AI is reliable, safe, and useful for businesses in the long run, not just a cool experiment.

What are AI Agents?

AI Agents are like smart helpers that can do tasks on their own. They can understand things, make decisions, and act in computer programs or even the real world. Imagine them as digital assistants that can handle more complex jobs than simple chatbots, but they still need careful setup and rules.

What is Edge AI?

Edge AI means that AI processing happens right on devices, like your phone or a smart car, instead of sending all the information to a big computer in the cloud. This makes things faster, keeps your information more private, and allows for quick decisions, which is great for things like self-driving cars or smart factories.

What is AI-Native Software Engineering?

This is a new way of building computer programs where AI is part of the whole process, from start to finish. Instead of just using AI to help write code, AI can actually help design, build, test, and manage software more automatically. It changes how software developers work, making them more like supervisors of smart systems.