Artificial intelligence medical devices are changing healthcare. These smart tools can help doctors and patients in new ways, but they also bring up some tricky questions. How do we make sure they’re safe and work right? Plus, how do different countries handle these new technologies? Let’s break down what you need to know about these evolving devices.

Key Takeaways

- AI medical devices combine artificial intelligence with traditional medical tools to improve how they work, like making them smarter and more adaptable.

- The US FDA faces challenges with its 510(k) clearance pathway for AI devices, especially those that keep learning and changing.

- The EU’s Medical Device Regulation (MDR) has rules for AI devices, but figuring out how to approve constantly updating algorithms is still tough.

- Big issues for AI medical devices include making sure we understand how they make decisions, keeping patient data safe, and fixing problems when the AI’s performance drops or shows bias.

- Keeping an eye on AI medical devices after they’re approved is super important to make sure they keep working well and stay safe for patients, even as they learn from real-world use.

Understanding Artificial Intelligence Medical Devices

So, what exactly are these AI medical devices we keep hearing about? Basically, they’re regular medical tools that have been given a brain boost with artificial intelligence. Think of it like giving your old flip phone a smartphone upgrade – it can do so much more now. These aren’t just fancy gadgets; they’re designed to help doctors and patients in some pretty significant ways.

Defining AI-Enabled Combination Devices

These are devices where AI is a core part of how they work, often combined with traditional medical device technology. Unlike a simple thermometer, an AI-enabled device can learn from the data it collects. This means it can get better over time, adapt to new situations, and sometimes even make decisions on its own or with minimal human input. It’s this ability to learn and adapt that really sets them apart.

Key AI Technologies in Medical Devices

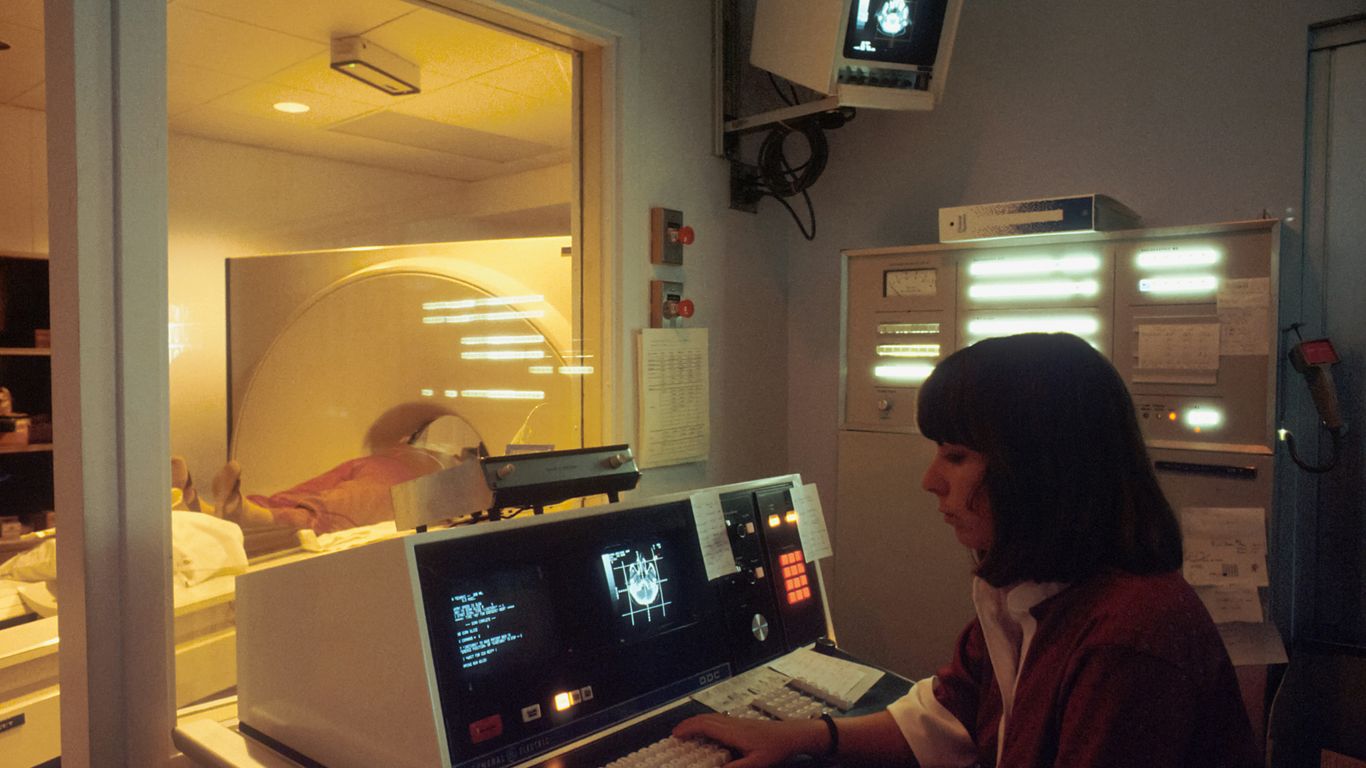

There’s a whole toolbox of AI tech being used. You’ve got machine learning, which is great for spotting patterns in things like medical images such as X-rays or MRIs. Then there’s natural language processing, which helps devices understand and respond to patient information, maybe in a monitoring system. And sometimes, you’ll see reinforcement learning, a branch of machine learning that learns by trial and feedback, which is being explored for things like automated insulin delivery systems to figure out the best way to manage blood sugar.

Here are some common applications:

- Diagnostic Imaging: AI helps analyze scans to detect diseases earlier or more accurately.

- Patient Monitoring: Devices can track vital signs and alert healthcare providers to potential issues.

- Robotic Surgery: AI assists surgeons with precision and control during operations.

- Personalized Medicine: Tailoring treatments, like insulin doses, based on individual patient data.

Potential Benefits for Patient Outcomes

When these AI tools work well, the sky’s the limit for patient care. We’re talking about catching diseases sooner, which often means better treatment success. AI can also help make treatments more personal, fitting them exactly to what an individual patient needs. Plus, these devices can help manage chronic conditions more effectively, potentially reducing hospital visits and improving overall quality of life. The ultimate goal is to make healthcare more precise, efficient, and accessible for everyone.

Navigating Regulatory Frameworks in the US

When it comes to AI medical devices here in the States, the Food and Drug Administration (FDA) is the main player. They’ve got a few different paths devices can take to reach the market, but the one most people talk about, especially for AI, is the 510(k) pathway. It’s designed to get new devices to market by showing they’re "substantially equivalent" to a predicate, meaning a device that’s already legally on the market. Strictly speaking, devices that go through 510(k) are "cleared" rather than "approved," even though people often use the words interchangeably.

Challenges with the FDA’s 510(k) Pathway

The 510(k) process was really set up for devices that don’t change much after they’re made. Think of a simple scalpel – it does the same thing every time. But AI? That’s a whole different ballgame. AI is built to learn and adapt as it gets more data. This makes it tricky to say something is "substantially equivalent" when its performance might change over time. The FDA knows this is a hurdle, and they’re trying to figure out how to handle it.

Addressing Adaptive AI Algorithms

This is where things get really interesting, and honestly, a bit complicated. If an AI algorithm can learn and change on its own after it’s been approved, how do you regulate that? The FDA is looking at ideas like a "predetermined change control plan." Basically, a manufacturer can get approval for a range of potential updates or changes the AI might make. As long as the changes stay within that pre-approved box, they don’t have to go through the whole 510(k) process again. It’s an attempt to let the AI improve without slowing down innovation too much.
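To make the "pre-approved box" idea concrete, here’s a minimal sketch in Python. Everything in it is an assumption for illustration: the `ChangeEnvelope` and `ProposedUpdate` names, the change types, and the performance floors are hypothetical and not taken from any FDA document. It only shows the basic logic of checking an update against limits that were agreed in advance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEnvelope:
    """Hypothetical limits a manufacturer agreed to in advance."""
    allowed_change_types: frozenset
    min_sensitivity: float
    min_specificity: float


@dataclass(frozen=True)
class ProposedUpdate:
    """Hypothetical summary of a retrained or modified model."""
    change_type: str
    sensitivity: float
    specificity: float


def within_envelope(update: ProposedUpdate, envelope: ChangeEnvelope) -> bool:
    """Return True only if the update stays inside every pre-agreed limit."""
    return (
        update.change_type in envelope.allowed_change_types
        and update.sensitivity >= envelope.min_sensitivity
        and update.specificity >= envelope.min_specificity
    )


# Illustrative values only.
envelope = ChangeEnvelope(
    allowed_change_types=frozenset({"retrain_same_architecture"}),
    min_sensitivity=0.90,
    min_specificity=0.85,
)
retrain = ProposedUpdate("retrain_same_architecture", 0.93, 0.88)
new_use = ProposedUpdate("new_intended_use", 0.95, 0.90)

print(within_envelope(retrain, envelope))
print(within_envelope(new_use, envelope))
```

In this sketch the retrained model passes, while the update that changes the intended use falls outside the box no matter how good its numbers are, which is the kind of change that would need a fresh review.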

FDA’s Evolving Regulatory Strategies

The FDA isn’t just sitting still. They’re actively working on new ways to deal with AI. They’ve put out discussion papers and are looking at different frameworks. The goal is to find a balance. They need to make sure these devices are safe and work as intended, but they also don’t want to stifle the development of potentially life-saving technology. It’s a tough balancing act, and you can bet they’re paying close attention to how AI evolves and what challenges pop up along the way. They’re also focusing their attention on devices that have a bigger impact on patient safety, which can sometimes give companies a bit more room to test new AI features in less critical areas.

European Union’s Approach to AI Medical Devices

The European Union has its own way of looking at AI in medical devices, mainly through its Medical Device Regulation, or MDR. This whole system is designed to make sure that medical devices, including those that use AI, are safe and work like they’re supposed to. It’s a pretty big deal: it became applicable in May 2021 and replaced the EU’s older medical device directives.

The Medical Device Regulation (MDR) Framework

The MDR is the main rulebook here. It’s pretty strict, aiming for high safety standards and making sure devices are effective. For AI-powered devices, this means manufacturers have to show solid proof that their products work well, not just before they go on the market, but also afterward. It’s all about keeping patients safe.

Conformity Assessments for Adaptive Algorithms

Now, dealing with AI that learns and changes, like adaptive algorithms, is where things get tricky. These algorithms aren’t static; they change as they take in more data, ideally for the better. The MDR tries to handle this by putting a bigger emphasis on real-world evidence and what’s called post-market surveillance. Basically, manufacturers need to keep a close eye on how their devices are performing after they’re out there and report back. It’s a way to keep up with the technology’s evolution without compromising safety.

Data Protection Under GDPR

Then there’s the General Data Protection Regulation, or GDPR. This is a big one for any device that uses personal data, and medical devices are no exception. AI often needs a lot of data to learn, and that data can include sensitive health information. The GDPR lays down strict rules about how this data can be collected, processed, and protected. Manufacturers have to be really careful to follow these rules, making sure patient privacy is respected and that people have control over their data. It’s a balancing act between using data for innovation and protecting individuals.
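One common safeguard when preparing data for AI training is pseudonymization, where direct identifiers are replaced with a keyed code. Here’s a rough sketch using Python’s standard `hmac` and `hashlib` modules. The field names and the `DIRECT_IDENTIFIERS` set are assumptions made up for this example. Keep in mind that pseudonymized data still counts as personal data under GDPR, so this is one protective step, not a shortcut to compliance.

```python
import hashlib
import hmac

# Hypothetical field names; a real dataset will have its own schema.
DIRECT_IDENTIFIERS = {"patient_id", "name", "address"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 code (HMAC)."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def strip_direct_identifiers(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and add a pseudonymous reference instead."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_ref"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Illustrative record and key only; store real keys separately from the data.
record = {
    "patient_id": "12345",
    "name": "Jane Example",
    "address": "1 Example Street",
    "glucose_mg_dl": 142,
}
training_row = strip_direct_identifiers(record, secret_key=b"demo-key-only")
print(sorted(training_row))
```

The same patient always maps to the same `patient_ref` under a given key, so records can still be linked for training, but someone without the key can’t easily work back to the original ID.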

Key Challenges in AI Medical Device Regulation

So, getting these AI medical devices approved and keeping them safe is, well, complicated. It’s not like approving a regular old Band-Aid, you know? There are some big hurdles that regulators and companies have to jump over.

Ensuring Algorithmic Transparency

One of the trickiest parts is figuring out exactly how the AI makes its decisions. Think of it like a doctor’s diagnosis – you want to know the reasoning behind it, not just the final answer. With AI, especially the really complex ones, it can be like a black box. We need to be able to peek inside and understand the logic, especially when patient lives are on the line. This is tough because some AI models are so intricate, even the people who built them can’t fully explain every single step. This lack of clarity makes it hard to spot potential problems before they cause harm.

Managing Data Privacy and Security

These AI systems often need a ton of patient data to learn and work properly. That’s a lot of sensitive information, right? So, keeping that data safe and private is a massive deal. We’re talking about health records, personal histories – the works. Regulations like GDPR in Europe are pretty strict about this, and for good reason. Companies have to be super careful about how they collect, store, and use this data. Plus, there’s always the worry about data breaches. It’s a constant battle to stay ahead of cyber threats and make sure patient information doesn’t fall into the wrong hands. It’s not just about protecting data from hackers, but also making sure patients know exactly what’s happening with their information.

Addressing Model Degradation and Bias

AI models aren’t guaranteed to keep performing the way they did on day one. When accuracy drops over time, that’s called model degradation. Imagine an AI trained to spot a certain disease. If the disease characteristics subtly shift, or if the AI is exposed to new, different data in the real world, its performance might slip. It might start making mistakes it didn’t before. Then there’s bias. If the data used to train the AI isn’t representative of everyone (say, it’s mostly trained on data from one demographic group), the AI might not work as well for other groups. This could lead to unfair or incorrect diagnoses for certain patients. It’s a real concern that needs constant attention and testing to make sure the AI stays accurate and fair for everyone.
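A simple way to start testing for this kind of bias is to break accuracy down by patient group and look at the gap. The sketch below is just that, a sketch: the group labels and records are invented, and real fairness audits use richer metrics (sensitivity, specificity, calibration) and much more data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best- and worst-served groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Invented example data: (group, model prediction, confirmed diagnosis).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

print(accuracy_by_group(records))  # {'group_a': 1.0, 'group_b': 0.5}
print(accuracy_gap(records))       # 0.5
```

A gap that large would be a red flag that the model works noticeably worse for one group, which is exactly what an unrepresentative training set can cause.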

The Importance of Post-Market Surveillance

Okay, so we’ve talked about getting these AI medical devices approved, but the story doesn’t end there. Not by a long shot. Think about it: these aren’t like a simple bandage that stays the same. AI systems, especially the ones that learn and adapt, are constantly changing. The version helping patients next year may not behave exactly like the one regulators originally reviewed.

Continuous Monitoring of AI Performance

This is where post-market surveillance comes in. It’s basically keeping an eye on the AI device after it’s out there helping patients. Because these algorithms can learn from new data, their performance might shift. What worked perfectly in the lab or during initial testing might behave a little differently in the real world, with all its messy, unpredictable situations. We need to make sure the AI is still doing what it’s supposed to do, accurately and safely, even as it evolves. It’s not a set-it-and-forget-it kind of deal.
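Here’s what that kind of ongoing check could look like in its simplest form: a rolling window of recent cases compared against the accuracy seen before launch. The class name, baseline, tolerance, and window size are all hypothetical choices for illustration, and a real surveillance program would track far more than a single accuracy number.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Flags the device for review when recent accuracy drops too far."""

    def __init__(self, baseline: float, tolerance: float, window: int):
        self.baseline = baseline      # accuracy measured before launch
        self.tolerance = tolerance    # how much of a drop we accept
        self.outcomes = deque(maxlen=window)  # keeps only the latest cases

    def record(self, predicted, actual) -> None:
        """Store whether the latest prediction matched the confirmed result."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Only judge once the window is full, to avoid noisy early alerts."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


# Illustrative numbers only.
monitor = RollingAccuracyMonitor(baseline=0.90, tolerance=0.10, window=5)
for predicted, actual in [(1, 1), (0, 0), (1, 1), (0, 0), (1, 1)]:
    monitor.record(predicted, actual)
print(monitor.needs_review())  # False: every recent case was correct

for predicted, actual in [(1, 0), (0, 1), (1, 0)]:
    monitor.record(predicted, actual)
print(monitor.needs_review())  # True: only 2 of the last 5 were correct
```

The window matters because it lets recent slips show up quickly instead of being averaged away by months of good results.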

Responding to Real-World Outcomes

Sometimes, even with the best pre-market checks, things pop up once a device is in widespread use. Maybe the AI misinterprets a scan in a specific population it wasn’t extensively tested on, or perhaps a software update, meant to improve things, accidentally introduces a new issue. That’s why tracking how these devices perform in everyday clinical practice is so important. If we see a pattern of errors or unexpected results, we need a way to address it quickly. This could mean:

- Notifying healthcare providers about potential issues.

- Working with the manufacturer to update the software.

- In some cases, even recalling the device if the risk is too high.

It’s all about closing the loop between what we expect and what’s actually happening.

Balancing Innovation with Patient Safety

This is the tightrope walk, right? We want these amazing AI tools to get to patients faster because they can do so much good. But we absolutely cannot compromise on safety. Post-market surveillance helps us strike that balance. By having solid systems in place to monitor performance and react to problems, we can feel more confident letting these innovative technologies develop. It allows for a more flexible approach to regulation, acknowledging that AI is different from traditional devices. We can allow for updates and improvements, knowing that there’s a watchful eye making sure patient well-being remains the top priority.

Future Directions for AI Medical Device Governance

So, where do we go from here with all these AI medical devices popping up? It’s a big question, and honestly, nobody has all the answers yet. But there are definitely some paths we need to explore to make sure these tools are safe and helpful.

Developing Adaptive Policy Frameworks

Right now, a lot of regulations are built for devices that don’t change much after they’re approved. That’s a problem when you have AI that learns and gets better over time. We need rules that can keep up. Think of it like trying to use a flip phone to browse the internet – it just doesn’t work well. The FDA is already looking into this, with programs that let them talk to companies more often about how their AI is doing in the real world. This helps them adjust rules without slowing down progress too much. The goal is to create policies that are flexible enough to handle AI’s constant evolution while still keeping patients safe.

Fostering Collaboration Between Regulators and Industry

Nobody can figure this out alone. Regulators need to work closely with the companies making these AI devices. It’s not about one side telling the other what to do; it’s more like a partnership. Companies have the technical know-how, and regulators understand the safety side. By sharing information and working through problems together, they can build better guidelines. This also means being open about how AI models are built and tested, and what happens when things go wrong. It’s about building trust, you know?

Establishing Global Standards for AI

AI doesn’t stop at borders, so why should our rules? Right now, different countries have different ways of looking at AI medical devices. This can get really confusing for companies trying to sell their products worldwide. It would be much simpler and safer if we could agree on some basic rules that apply everywhere. This doesn’t mean every country has to do things exactly the same, but having common ground on things like data privacy, testing, and monitoring would make a huge difference. It’s a long road, but working towards global standards will help make AI medical devices more accessible and reliable for everyone.

Looking Ahead

So, where does all this leave us with AI in medical devices? It’s clear that this technology is moving fast, and the rules are trying to keep up. Both the US and Europe are working on ways to handle these smart devices, but it’s not always straightforward. Things like AI that keeps learning or potential biases in the data are big hurdles. We’ve seen how important it is to watch these devices even after they’re approved, because what works in a lab might not always work perfectly in the real world. The goal is to find that sweet spot: letting these helpful AI tools get to patients quickly without putting anyone at risk. It’s going to take ongoing talks between the people making the rules and the companies developing the tech, all with the patient’s well-being front and center.