Keeping up with all the new rules about artificial intelligence can feel like a full-time job. It seems like every week there’s a new law or a proposed change somewhere in the world. This guide is designed to help you make sense of it all, especially as we head into 2025. We’ll break down what’s happening globally and in the US, looking at the big picture as well as specific areas where AI is being regulated. Think of this as your go-to AI legislation tracker for understanding the evolving landscape.

Key Takeaways

- The US is seeing a mix of federal proposals and state-level action, with a notable shift in executive policy direction for 2025.

- Several key areas are drawing regulatory attention, including political ads, job surveillance, and the use of AI in decision-making.

- The European Union’s AI Act is a major development, setting a precedent for comprehensive AI governance that other regions are watching.

- Federal agencies like the SEC, FTC, and FCC are already using existing authorities to address AI-related issues, from fraud to deceptive content.

- States like Colorado and Utah are leading the way with specific AI legislation, creating a complex regulatory environment across the US.

Global AI Legislation Tracker Overview

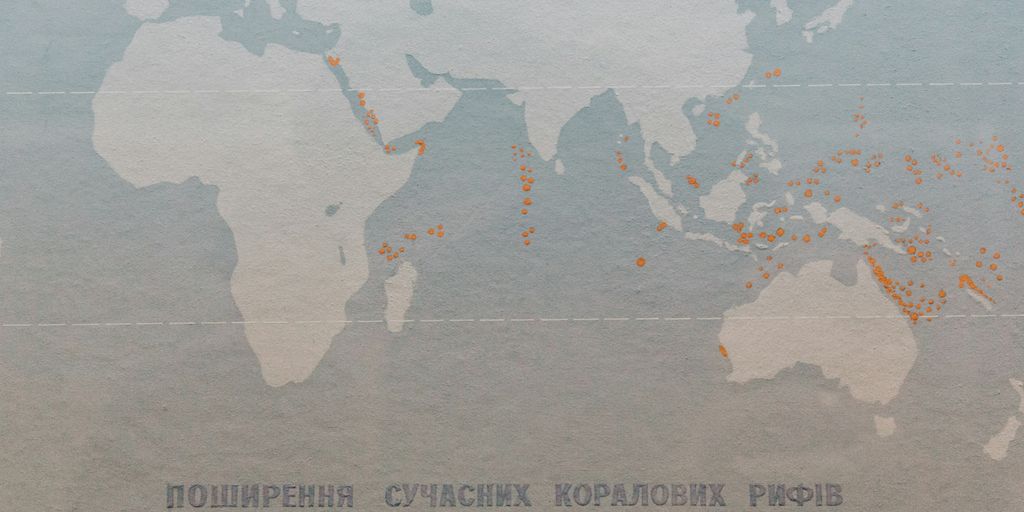

It feels like every country is trying to figure out what to do with AI, and honestly, it’s a lot to keep up with. Different places are trying different things, from big, sweeping laws to rules for really specific uses of AI. The main challenge seems to be balancing new ideas with making sure AI doesn’t cause problems. Many countries start with a national strategy or some ethical guidelines before they even think about actual laws. It’s a global effort, and everyone’s watching what everyone else does. We’ve put together this tracker to help make sense of it all, covering what’s happening in various countries. You can check out the Global AI Law and Policy Tracker for more details.

Navigating the Global AI Law and Policy Landscape

Countries worldwide are creating and implementing laws and policies for artificial intelligence, and these efforts are unfolding alongside the rapid growth of AI technology itself. The main approaches we’re seeing are:

- Broad AI legislation: Laws that try to cover AI in general.

- Specific use-case laws: Rules targeting particular ways AI is used, like in hiring or for political ads.

- National AI strategies: Plans outlining a country’s approach to AI development and use.

- Voluntary guidelines: Recommendations and standards that companies can choose to follow.

There isn’t one single way to regulate AI. However, a common pattern is for a country to first release a national strategy or an ethics policy before jumping into legislation. This approach helps them think through the issues before making binding rules.

Understanding International AI Governance Efforts

When we look at AI governance on a global scale, it’s clear that many nations are actively involved. They’re not just making their own rules; they’re also talking to each other and participating in international discussions. This collaboration is important because AI doesn’t respect borders. What happens in one country can easily affect others. So, there’s a push to find some common ground and maybe even harmonize rules where it makes sense. It’s a complex web of different approaches, but the goal is generally to manage the risks associated with AI while still allowing for innovation.

Key Jurisdictions in AI Regulation

Several countries are really leading the charge when it comes to AI regulation. You’ve got places like the European Union, which has been very proactive with its AI Act. Then there’s the United States, which is taking a multi-pronged approach with federal considerations, executive actions, and a growing number of state-level initiatives. Other countries are also developing their own strategies and laws. It’s helpful to keep an eye on these key players because their actions often set precedents or influence what other nations decide to do. The landscape is always shifting, so staying updated on these major jurisdictions is pretty important.

United States AI Regulatory Developments

The United States’ approach to AI regulation in 2025 is a bit of a mixed bag, with a focus on encouraging innovation while trying to keep things from getting too wild. It’s not like there’s one big law that covers everything AI, which can make it tricky to figure out what’s what. Instead, we’re seeing a mix of executive actions, agency guidance, and a growing number of state-level laws that are starting to shape the landscape.

Federal AI Legislation Under Consideration

Congress has been looking at a bunch of different AI bills. Many of these proposals lean towards creating voluntary guidelines and best practices rather than strict rules. The idea seems to be to help AI grow without putting the brakes on too hard, especially when you think about how other countries are pushing ahead. It’s a balancing act, for sure. Some of the bills that have been discussed include:

- The AI Training Act

- The National AI Initiative Act

- The TAKE IT DOWN Act

- The CREATE AI Act

- The NO FAKES Act of 2025

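Some of these have already been enacted (the National AI Initiative Act in 2021 and the TAKE IT DOWN Act in May 2025), and it’s still up in the air whether the pending proposals will become law. Either way, they give us a good idea of where lawmakers are focusing their attention. You can find more details on some of these legislative efforts in this AI legislation tracker.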

Executive Orders Shaping AI Policy

President Trump signed an executive order in January 2025 called "Removing Barriers to American Leadership in Artificial Intelligence." This basically swapped out the previous administration’s order on AI safety and trustworthiness. The new order is all about clearing the path for AI development and trying to boost America’s standing in the AI world. Following this, there have been a couple of Office of Management and Budget (OMB) memos that change how the federal government uses and buys AI systems. Plus, there was another executive order focused on AI education and the workforce.

State-Level AI Lawmaking Trends

While the federal government figures things out, states are stepping up. We’re seeing a patchwork of laws popping up across the country. Some states are tackling AI through existing privacy laws, while others are getting specific. Colorado, for instance, passed a pretty detailed AI law that looks at risk levels, kind of like what the EU is doing. Utah also made some changes to its consumer protection laws related to AI. It’s becoming really important for businesses to keep an eye on what’s happening at the state level because these laws can affect how they operate with AI.

Key AI Legislation and Frameworks

When we talk about AI legislation, it’s not just one big thing. It’s a bunch of different ideas and rules trying to catch up with how fast AI is moving. Think of it like trying to build a fence around a herd of wild horses – they keep changing direction!

The SAFE Innovation AI Framework

This isn’t a law yet, but it’s a set of principles that a lot of people in government are looking at. It’s meant to guide how AI is developed and used, pushing for safer practices. Basically, it’s a blueprint for future laws, trying to get everyone on the same page about what responsible AI looks like. It covers things like making sure AI systems are tested properly and that there’s some accountability when things go wrong. It’s a bipartisan effort, which is pretty interesting in today’s political climate.

Proposed Regulations for Political Advertisements

This is a big one, especially with elections coming up. There are proposals to regulate how AI is used in political ads. You know, those deepfakes or AI-generated voices that can make politicians say things they never actually said? These rules are trying to get ahead of that. The idea is to make it clear when AI is being used to create political content, so voters aren’t misled. It’s all about transparency in political messaging.

AI in Employment and Surveillance

Another area getting a lot of attention is how AI is used in the workplace. There are concerns about AI systems being used to monitor employees too closely, or making hiring and firing decisions without much human oversight. Bills like the ‘Stop Spying Bosses Act’ are trying to put limits on this kind of surveillance. The goal here is to protect workers’ privacy and ensure fair treatment, preventing AI from becoming a tool for unchecked monitoring or biased decision-making in employment. For example, California is looking at new rules for AI in hiring processes, as mentioned in recent legislative updates reporting that the California Senate advanced two AI bills.

Emerging AI Regulatory Focus Areas

It feels like every week there’s a new headline about AI doing something wild, and honestly, keeping up with the laws is getting tricky. Governments are trying to figure out how to handle all this, and it’s not like there’s one single rulebook everyone’s following. It’s a real mix of new laws, old laws being stretched to cover AI, and just general guidelines.

Automated Decision-Making Regulation

One big area folks are looking at is how AI makes decisions, especially when those decisions affect people’s lives. Think about loan applications, job screenings, or even who gets flagged for a review. If an AI messes up here, it can really mess someone up too. So, there’s a push to make sure these systems are fair and don’t just repeat old biases. It’s about making sure there’s some accountability when an AI’s decision has a big impact.

Generative AI and Deepfakes

Then there’s the whole generative AI thing – you know, AI that can create text, images, and even videos. It’s pretty amazing, but it also opens the door for some shady stuff. Deepfakes, for example, can make it look like someone said or did something they never did. This is a huge concern, especially when it comes to spreading misinformation or damaging reputations. Figuring out how to regulate this without stifling creativity is a real balancing act. We’re seeing a lot of discussion around watermarking AI-generated content or requiring disclosures.

AI in Political Advertising and Likeness Protection

Speaking of deepfakes, political advertising is another hot topic. Imagine an AI creating a fake ad of a candidate saying something outrageous right before an election. That’s a nightmare scenario. There’s a growing effort to create rules around using AI in political ads to prevent manipulation and protect people’s likeness. It’s all about making sure voters get accurate information and that candidates aren’t unfairly targeted by AI-generated content. The Trump Administration’s focus on AI also touched on some of these areas, showing how seriously these issues are being taken at the highest levels.

International AI Policy and Treaties

It feels like every country is trying to figure out AI rules, and honestly, it’s a lot to keep track of. We’re seeing a real push for international agreements to make sure AI is used responsibly across borders. It’s not just about one country’s rules anymore; it’s about how we all work together.

The European Union’s AI Act

The EU’s AI Act is a big deal, setting up a risk-based approach to AI. Basically, AI systems are categorized by how much risk they pose. High-risk AI systems, like those used in critical infrastructure or law enforcement, face the strictest rules. Lower-risk AI has fewer obligations, and some uses of AI, like social scoring by governments, are banned outright. It’s a pretty detailed piece of legislation that aims to balance innovation with fundamental rights. The goal is to create a trustworthy AI ecosystem within the EU, and it’s definitely influencing how other regions think about AI regulation.
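To make the risk-based idea a little more concrete, here is a minimal sketch of the tiers as a simple lookup. It is an illustration only, not legal guidance: the tier names, the example use cases, and the `classify_use_case` helper are hypothetical simplifications built from the examples in this section, and the Act’s actual categories and obligations are far more detailed.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified, illustrative tiers; the Act defines its categories in more detail."""
    PROHIBITED = "banned outright"
    HIGH = "strictest rules"
    LOWER = "fewer obligations"


# Hypothetical mapping built only from the examples mentioned in this article.
EXAMPLE_USE_CASES = {
    "government social scoring": RiskTier.PROHIBITED,
    "critical infrastructure": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
}


def classify_use_case(use_case: str) -> RiskTier:
    """Return the illustrative tier for a use case.

    Falling back to LOWER for unknown use cases is an assumption made for
    this sketch, not a rule taken from the Act.
    """
    return EXAMPLE_USE_CASES.get(use_case.strip().lower(), RiskTier.LOWER)


if __name__ == "__main__":
    for case in ("Law enforcement", "government social scoring", "spam filter"):
        tier = classify_use_case(case)
        print(f"{case}: {tier.name} ({tier.value})")
```

In practice, deciding which tier a system falls into takes legal analysis of the Act’s text; a lookup like this is only useful as a first-pass inventory of where a company’s AI uses might land.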

Council of Europe Framework Convention on AI

This is a pretty significant development. The Council of Europe has put forward a framework convention that’s the first of its kind: a legally binding treaty focused specifically on AI. The core idea is to make sure AI development and use respect human rights, democracy, and the rule of law. Several signatories, including the U.S., the UK, and the European Union, have already signed on. It’s still working its way through ratification, but it shows a strong international commitment to establishing common principles for AI governance. You can find more details about this important treaty here.

Global AI Policy Trends and Harmonization

Looking at the bigger picture, there’s a clear trend towards harmonization. Countries are sharing best practices and trying to align their approaches, even if the specifics differ. We see this in things like the OECD AI Principles and the G7’s Hiroshima Process Guiding Principles. Many nations are also adopting UNESCO’s Recommendation on the Ethics of AI. It’s a complex dance, with each country trying to foster innovation while also managing risks. The U.S., for example, has been involved in various international discussions and agreements, trying to find that balance. It’s all about trying to create a global environment where AI can develop safely and ethically.

Agency Actions and Enforcement

When it comes to AI, a lot of the action isn’t just in Congress or statehouses. Federal agencies are stepping up too, using their existing powers to keep an eye on things. It’s a bit of a patchwork, but they’re definitely making their presence felt.

SEC’s Focus on AI and Cybersecurity

The Securities and Exchange Commission (SEC) has been pretty clear about its interest in how companies use AI, especially concerning cybersecurity. They’re looking at whether companies are being upfront with investors about the risks and benefits of AI in their operations. The SEC wants to make sure that companies aren’t just hyping AI without a solid plan to manage the associated risks. This ties into their broader mission of protecting investors and maintaining fair markets. They’ve put out guidance, and you can bet they’re watching for any missteps.

FTC’s Stance on AI-Generated Content

The Federal Trade Commission (FTC) is also getting involved, particularly with AI-generated content and potential consumer harm. They’ve already taken action, like the case involving Rite Aid and facial recognition technology. This case showed how the FTC plans to use existing laws, like the FTC Act, to address issues like bias and discrimination stemming from AI. They’re focused on making sure AI isn’t used in ways that deceive or harm consumers. Expect them to keep a close watch on how AI is used in advertising and customer interactions. The FTC is really trying to get ahead of potential problems before they become widespread issues. You can find more details on their approach to AI in their AI Action Plan.

FCC Regulations on AI-Powered Voices

Over at the Federal Communications Commission (FCC), the focus is on AI-generated voices, especially in the context of political advertising and scams. They’re looking at rules to require clear labeling of AI-generated audio content, particularly when it mimics real people’s voices. This is a big deal for preventing misinformation and protecting people from voice cloning scams. The goal is to make sure consumers know when they’re hearing a real person versus an AI-generated voice. It’s all about transparency and preventing the misuse of this technology.

State-Specific AI Legislation Insights

Things are really starting to heat up at the state level when it comes to AI laws. It feels like every week there’s a new bill or a new trend popping up, and keeping track is becoming a full-time job. We’re seeing states take different approaches, some focusing on specific industries, others trying to create broader rules. It’s a bit of a patchwork quilt right now, but it’s definitely shaping how AI is used across the country.

Colorado’s Comprehensive AI Act

Colorado really made waves by passing what’s considered the first broad AI law in the US. This Act, which is set to take effect in 2026, puts duties on both the people who create AI systems and those who use them. What’s interesting is that it doesn’t have a revenue threshold, meaning it applies to pretty much anyone developing or deploying AI that’s considered high-risk. The main focus here is on systems that make big decisions, the kind that could really affect whether someone gets a loan, a job, or even healthcare. They’re really zeroing in on bias and making sure these AI systems don’t discriminate. Developers and users have to take reasonable steps to prevent that, especially when AI is a big part of making important choices in areas like housing, employment, and insurance. This law is already becoming a model for other states looking to regulate AI.
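As a rough way to picture the scope described above, the sketch below flags systems that play a big part in important decisions. Everything in it is an assumption for illustration: the `AISystem` record, the `needs_bias_review` helper, and the list of decision areas are taken from this article’s summary, not from the statute’s own definitions.

```python
from dataclasses import dataclass

# Decision areas named in this article; the statute's own list may differ.
CONSEQUENTIAL_AREAS = {"lending", "employment", "healthcare", "housing", "insurance"}


@dataclass(frozen=True)
class AISystem:
    """Hypothetical inventory record for one AI system."""
    name: str
    decision_area: str        # e.g. "employment"
    substantial_factor: bool  # does the AI play a big part in the decision?


def needs_bias_review(system: AISystem) -> bool:
    """Flag systems that look high-risk under this article's description.

    Company revenue is deliberately not an input, mirroring the point that
    the law has no revenue threshold.
    """
    return (
        system.substantial_factor
        and system.decision_area.strip().lower() in CONSEQUENTIAL_AREAS
    )


if __name__ == "__main__":
    inventory = [
        AISystem("resume screener", "employment", True),
        AISystem("loan pre-check", "lending", False),
        AISystem("playlist recommender", "entertainment", True),
    ]
    for system in inventory:
        print(f"{system.name}: review needed = {needs_bias_review(system)}")
```

A flag from a check like this would only be a prompt to look closer; whether a system is actually covered depends on the law’s definitions and on legal advice.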

Utah’s AI Consumer Protection Amendments

Utah has also been busy, putting forward amendments focused on consumer protection related to AI. Their approach seems to be about making sure consumers are treated fairly when interacting with AI systems. We’re seeing a push for more transparency and accountability from companies using AI, particularly when it impacts consumer choices or services. It’s all about giving people more control and understanding over how AI is being used in their daily lives. It’s a good step towards making sure AI benefits everyone, not just the companies that build it.

California’s Patchwork of AI Laws

California, as usual, is a big player in this space. They’ve been busy passing a bunch of different AI bills that cover a wide range of topics. We’re talking about transparency, privacy, how AI is used in entertainment, making sure elections are secure, and even how government agencies use AI. It’s not one single, big law like Colorado’s, but more like a collection of specific rules addressing different AI issues. This creates a complex regulatory environment, and businesses operating in California will need to pay close attention to each new piece of legislation. It’s a sign that states are really starting to grapple with the many different ways AI can impact society, and they’re trying to address it piece by piece. For instance, some bills are looking at how AI is used in political ads, trying to prevent misinformation and protect people’s likenesses. It’s a lot to keep up with, but it shows a real effort to get ahead of potential problems. You can find more details on these developments in our California AI laws tracker.

Looking Ahead: What’s Next for AI Rules

So, that’s a lot of new rules and ideas about AI floating around for 2025. It feels like everyone, from governments to companies, is trying to figure out the best way to handle this technology. We’ve seen a big push for national strategies, and states are getting involved too, with places like Colorado and Utah already passing their own laws. It’s a bit of a mixed bag, with some aiming for strict rules and others leaning towards guidelines. The US, for example, is seeing shifts in policy, and it’s still unclear exactly how everything will shake out. One thing’s for sure, though: keeping up with these changes is going to be important for anyone working with AI. It’s a fast-moving area, and what’s new today might be old news tomorrow.

Frequently Asked Questions

What exactly is this AI legislation tracker?

Think of it like a guide that keeps track of all the new rules and suggestions being made about artificial intelligence around the world. It helps you see what different countries and even states within the U.S. are doing to manage AI.

What kind of AI laws are being discussed in the United States?

The U.S. is looking at several new laws. Some are about making sure AI ads are honest, others want to stop employers from using AI to spy on workers, and some aim to protect people’s voices and images from being copied by AI.

Have any states already made their own AI laws?

Yes, places like Colorado and Utah have already passed their own AI laws. Colorado’s law focuses on AI systems that could be risky, making companies responsible for managing those risks. Utah has also made changes to protect consumers when they interact with AI.

What are the main areas that AI laws are trying to cover?

This is a big topic! It includes rules for AI that makes decisions on its own, how to handle AI that creates fake images or videos (deepfakes), and making sure AI used in political ads is clear about who made it.

Are there any big international rules for AI?

The European Union has a major law called the EU AI Act. It’s one of the first big, overall rules for AI in the world. Other countries are also working together, like signing agreements to set common goals for AI.

Which government agencies are involved in AI rules?

Government groups like the SEC (which deals with money and stocks), the FTC (which protects consumers), and the FCC (which handles communication) are all looking at AI. They’re creating rules about things like AI used in finance, fake reviews, and AI voices in phone calls.