So, OpenAI is suddenly talking about some pretty big numbers, like a $20 billion annual revenue run-rate. It sounds huge, and honestly, it is. This isn’t just about making cool AI tools anymore; it’s about turning that tech into serious cash. But it’s not all smooth sailing. There’s a lot going on behind the scenes, especially with how much it costs to actually run all this AI.

Key Takeaways

- OpenAI is projecting a $20 billion annual revenue run-rate by 2025, showing massive growth.

- This revenue surge comes from businesses using their APIs and services like ChatGPT Plus and Enterprise.

- The company’s success is heavily tied to its partnership with Microsoft Azure and demand for NVIDIA hardware.

- High costs for computing power are a major challenge, impacting the company’s profit margins.

- OpenAI’s financial performance sets a benchmark for other AI companies and the industry as a whole.

OpenAI’s Astonishing ARR Trajectory

It feels like just yesterday we were talking about OpenAI hitting a billion dollars in revenue, and now? The numbers are just staggering. We’re talking about a company that’s not just growing, but exploding. The latest buzz is that OpenAI has already blown past a $12 billion annual run-rate. That’s a serious chunk of change, and it puts them in a league of their own when it comes to how fast companies can scale these days. It’s the kind of growth that makes you stop and think about what’s really going on.

Surpassing Billion-Dollar Milestones

OpenAI has been hitting these big revenue markers at a pace that’s hard to believe. The figures being cited put them at $2 billion in annual recurring revenue (ARR) for 2023, then a jump to $6 billion for 2024, and the big one: over $20 billion by 2025. That’s roughly a tripling every year. This rapid ascent signals a fundamental shift in how AI is being valued and adopted by businesses worldwide. It’s not just about cool tech anymore; it’s about real money being spent on AI services. Financial momentum like this has very few precedents in the tech world.
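To put the pace in perspective, here is a quick sketch that turns the quoted figures into year-over-year multiples. The dollar amounts are the ones cited above; the function name is just an illustrative choice.

```python
# Run-rate figures quoted in the article, in billions of US dollars.
arr_by_year = {2023: 2.0, 2024: 6.0, 2025: 20.0}


def growth_multiples(series):
    """Return {year: multiple over the previous listed year}."""
    years = sorted(series)
    return {
        later: series[later] / series[earlier]
        for earlier, later in zip(years, years[1:])
    }


for year, multiple in growth_multiples(arr_by_year).items():
    print(f"{year}: {multiple:.2f}x the prior year")
```

On these numbers, 2024 comes out at 3x the prior year and 2025 at a little over 3.3x.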

Forecasting Future Revenue Peaks

Looking ahead, the predictions are even wilder. Some analysts are talking about $30 billion by 2026, while others are throwing around numbers as high as $125 billion by 2029. These aren’t just random guesses; they’re based on the current demand and how quickly businesses are integrating OpenAI’s tools. Of course, hitting those higher numbers depends on a lot of factors, like keeping up with compute power and staying ahead of the competition. It’s a high-stakes game, for sure.
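It helps to see what those forecasts imply as a growth rate. The sketch below uses the standard compound annual growth rate formula on the figures quoted above; it treats the $20 billion 2025 figure as the starting point, which is an assumption made for illustration.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate needed to go from start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
rate_2025_to_2026 = implied_cagr(20.0, 30.0, years=1)
rate_2025_to_2029 = implied_cagr(20.0, 125.0, years=4)

print(f"2025 -> 2026: {rate_2025_to_2026:.0%} growth")
print(f"2025 -> 2029: {rate_2025_to_2029:.0%} per year, compounded")
```

Getting from $20 billion to $30 billion is 50% growth in one year, while reaching $125 billion by 2029 would take roughly 58% growth compounded every year for four years.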

The Narrative of Unprecedented Hypergrowth

What we’re witnessing is a story of hypergrowth, plain and simple. The demand for advanced AI models is clearly there, and OpenAI seems to be capturing a huge piece of it. This narrative is reshaping how we think about software growth, setting new benchmarks for what’s possible. It’s a story that’s captivating investors and competitors alike, all watching to see if this pace can be maintained against the backdrop of intense AI development and infrastructure challenges.

Drivers Behind OpenAI’s Revenue Surge

It’s pretty wild to think about how fast OpenAI has managed to bring in money lately. It feels like just yesterday we were all talking about the tech itself, and now, it’s all about the dollars and cents. Turns out, there are a few big reasons why the cash is flowing in so strongly.

Enterprise Uptake and API Consumption

This is a huge one. Businesses are really starting to see the value in using OpenAI’s tools for their own products and services. They’re not just playing around with it; they’re building things. This means a lot more companies are signing up to use OpenAI’s API, which is basically the way developers connect their own software to OpenAI’s AI models. Every time a business uses the API, they’re charged, usually based on how much they use it – think of it like paying for electricity by the kilowatt-hour. This steady stream of usage from businesses, especially those integrating AI into their core operations, adds up fast. It’s the shift from novelty to necessity that’s really fueling this part of the revenue.
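The pay-for-what-you-use idea can be sketched in a few lines. This assumes usage is metered in tokens, and the prices and workload below are made up for illustration; they are not OpenAI’s actual rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical prices in US dollars per million tokens (not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing, input_tokens, output_tokens):
    """Cost of one API call under simple metered, per-token pricing."""
    return (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )


def monthly_bill(pricing, requests_per_day, input_tokens, output_tokens, days=30):
    """Monthly spend for a workload of identically sized requests."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_day * days


# Assumed prices and an assumed workload: 50,000 requests a day,
# each with 1,000 input tokens and 500 output tokens.
pricing = TokenPricing(input_per_million=2.0, output_per_million=8.0)
print(f"${monthly_bill(pricing, 50_000, 1_000, 500):,.2f} per month")
```

Under these invented numbers, each request costs a fraction of a cent, yet the monthly bill lands at about $9,000. That is the "adds up fast" effect: tiny per-call charges multiplied by constant, high-volume usage.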

ChatGPT Plus and Enterprise Subscriptions

Beyond the developers using the API, there’s the direct customer side. ChatGPT Plus, the paid version for individuals, offers faster responses and priority access, which a lot of people are willing to pay for. But the real money-maker here is likely ChatGPT Enterprise. This is aimed at larger organizations, offering more security, better performance, and custom features tailored to a company’s needs. These aren’t small, cheap subscriptions; they’re significant contracts that bring in substantial revenue. It shows OpenAI isn’t just relying on one type of customer; they’re catering to both individuals and big businesses.

The Role of Tailored Business Plans

OpenAI seems to be getting smarter about how they structure deals for businesses. It’s not a one-size-fits-all approach anymore. They’re offering different tiers and custom solutions that meet specific industry needs or company sizes. This flexibility is key. For example, a startup might need a different package than a massive corporation. By creating these tailored plans, OpenAI can better align its services with what businesses are willing to pay for, making the value proposition clearer and encouraging larger commitments. It’s about making the AI accessible and useful for a wide range of business problems, which naturally leads to more revenue.

The Financial Landscape of Generative AI

Shifting from Tech Specs to Bottom-Line Battles

It feels like just yesterday we were all talking about which AI model had the most parameters or could spit out the most impressive text. Now, though? The conversation has really changed. We’re seeing a big move from just looking at the cool tech stuff to focusing on actual money – how much is being made and how. OpenAI’s projected revenue numbers, like hitting a $20 billion annual run rate, really put this shift into focus. It’s not just about having a powerful AI anymore; it’s about turning that power into a solid business that makes serious cash. This means companies are now being judged less on their research papers and more on their balance sheets.

Setting New Standards for AI Monetization

OpenAI’s success is basically forcing everyone else to figure out how to make money from AI, and fast. They’ve shown that there’s a real market for these tools, not just for tech enthusiasts but for big businesses too. Think about it:

- Enterprise API Access: Companies are paying good money to use OpenAI’s models in their own products and services.

- ChatGPT Subscriptions: From individuals wanting better AI help to entire companies needing advanced features, subscriptions are a huge revenue stream.

- Custom Business Solutions: Tailored plans for specific company needs are also popping up, showing a willingness to pay for specialized AI.

This isn’t just about selling software; it’s about selling intelligence as a service, and the price tags are getting pretty hefty. It’s setting a new bar for what’s possible in the AI industry.

The Economic Viability of Frontier AI Models

Building these cutting-edge AI models, the ones that are really pushing the boundaries, costs a fortune. We’re talking massive amounts of computing power, specialized hardware, and a whole lot of electricity. So, when a company like OpenAI starts pulling in billions, it’s a huge signal that these expensive, frontier models can actually be economically viable. It proves that the investment in developing these complex systems can pay off. However, it also highlights a tricky balance: the cost of running these models is incredibly high. The real challenge is making sure the money coming in is significantly more than the money going out to keep the lights on and the servers running. It’s a constant push and pull between innovation and the bottom line.

Navigating the Compute Cost Conundrum

So, OpenAI is pulling in some serious cash, right? We’re talking billions in ARR. But here’s the thing that doesn’t always make the headlines: all that money earned comes with some pretty hefty bills. Think of it like running a super-powered race car. You might win the race and get a big trophy, but filling that gas tank costs a fortune.

The Sky-High Expenses of AI Infrastructure

Running these massive AI models, the ones that power things like ChatGPT, isn’t cheap. It takes a ton of specialized computer chips, mostly GPUs, and a whole lot of electricity to keep them humming. These aren’t your average computer parts; they’re cutting-edge and come with a premium price tag. Plus, you need massive data centers to house all this gear, and those have their own costs for space, cooling, and maintenance. It’s a constant battle to keep the lights on and the servers running.

Unit Economics and Inference Cost Reduction

When we talk about "unit economics," we’re basically asking: how much does it cost to do one thing? For OpenAI, that "thing" could be answering a single question or processing a chunk of text. The goal is to make that cost as low as possible. They’re always looking for ways to make their models run more efficiently, using fewer resources for each task. This is super important because as more people use their services, the costs add up fast. If they can’t get the cost per query down, those big revenue numbers start to look a lot less impressive.
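Here is a rough sketch of that cost-per-query idea. Every number in it (the GPU hourly cost, the throughput, the query volume) is a hypothetical placeholder, and real serving costs involve far more than a single GPU rate.

```python
def cost_per_query(gpu_hourly_cost, queries_per_gpu_hour):
    """Compute cost attributed to one query, given throughput per GPU-hour."""
    if queries_per_gpu_hour <= 0:
        raise ValueError("queries_per_gpu_hour must be positive")
    return gpu_hourly_cost / queries_per_gpu_hour


# Hypothetical inputs, chosen only to show the shape of the math.
GPU_HOURLY_COST = 3.00       # assumed dollars per GPU-hour
DAILY_QUERIES = 100_000_000  # assumed query volume per day

baseline = cost_per_query(GPU_HOURLY_COST, queries_per_gpu_hour=1_200)
optimized = cost_per_query(GPU_HOURLY_COST, queries_per_gpu_hour=2_400)
daily_savings = (baseline - optimized) * DAILY_QUERIES

print(f"baseline:  ${baseline:.5f} per query")
print(f"optimized: ${optimized:.5f} per query")
print(f"savings at this volume: ${daily_savings:,.0f} per day")
```

Doubling throughput on the same hardware halves the cost per query. A saving that looks like a rounding error on a single query turns into a six-figure daily difference at this assumed volume.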

Gross Margins Versus Compute Outlays

This is where things get really interesting. You’ve got revenue coming in, but then you have the cost of actually making the service available: that’s your "compute outlay." The difference between those two is your gross profit, and that profit as a percentage of revenue is your "gross margin." OpenAI wants this margin to be as big as possible, like any business. But with AI, the compute costs are enormous. It’s a constant tug-of-war: can they keep growing their income faster than their expenses for the hardware and power needed to run everything? It’s a tricky balance, and one that will shape their long-term success.
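The tug-of-war is easier to see with numbers. The sketch below expresses gross margin as a share of revenue and projects it forward under two scenarios. The starting figures and growth rates are invented for illustration, and it counts compute as the only cost of delivering the service, which is a simplification.

```python
def gross_margin(revenue, compute_cost):
    """Gross margin as a fraction of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - compute_cost) / revenue


def project_margins(revenue, compute_cost, revenue_growth, cost_growth, years):
    """Margins for year 0 through `years`, with each side compounding annually."""
    margins = []
    for _ in range(years + 1):
        margins.append(gross_margin(revenue, compute_cost))
        revenue *= 1 + revenue_growth
        compute_cost *= 1 + cost_growth
    return margins


# Hypothetical starting point: 10 in revenue, 6 in compute (any currency unit).
costs_outrun_revenue = project_margins(10.0, 6.0, revenue_growth=0.5, cost_growth=0.7, years=3)
revenue_outruns_costs = project_margins(10.0, 6.0, revenue_growth=0.5, cost_growth=0.3, years=3)

print("costs growing faster:  ", [f"{m:.0%}" for m in costs_outrun_revenue])
print("revenue growing faster:", [f"{m:.0%}" for m in revenue_outruns_costs])
```

Both scenarios start from the same 40% margin and the same 50% revenue growth. The only thing that changes is how fast compute spending grows, and that alone decides whether the margin shrinks or widens over time.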

Competitive Dynamics in the AI Arena

Raising the Stakes for Industry Rivals

OpenAI’s rapid climb in annual recurring revenue (ARR) isn’t just a win for them; it’s basically setting a new, really high bar for everyone else in the generative AI space. Companies like Anthropic, Google, and others are now under serious pressure to show they can do more than just build impressive models. The game has shifted from who has the best tech specs to who can actually build a business that makes serious money. It feels like the early days of the internet all over again, where a few big players started pulling away from the pack. This means rivals have to map out their own paths to billions, and fast. It’s a tough market out there, and OpenAI’s success is definitely making things more interesting, and probably a bit more cutthroat.

The Impact on AI Infrastructure Providers

When OpenAI does well, companies that provide the backbone for AI, like NVIDIA and Microsoft, tend to do pretty well too. Think about it: all those AI models need a ton of computing power, and that means buying lots of fancy chips and using cloud services. OpenAI’s massive growth directly translates into huge demand for things like NVIDIA’s GPUs. It’s a symbiotic relationship, really. Microsoft, through Azure, is a key partner, so OpenAI’s success is a big win for their cloud business. This whole AI boom is a massive boon for these infrastructure providers, and OpenAI’s numbers are a big reason why. It’s a clear signal that the big bets these companies made on AI infrastructure are paying off. We’re seeing a real AI capex supercycle happening right now.

Open-Source Models as a Price Ceiling

Now, here’s where it gets complicated. While OpenAI is charging ahead, there’s a whole world of open-source AI models out there, like Meta’s Llama and Mistral. These models are getting really good, and they’re often free to use or much cheaper. This creates a sort of natural price limit for what OpenAI can charge for its own services. If their API prices get too high, businesses might just switch to a powerful open-source alternative. So, OpenAI has to keep innovating and offering more value, not just on the core model but on the services and features around it, to justify its pricing. It’s a constant push and pull: OpenAI needs to make money, but the availability of strong, free options means they can’t just charge whatever they want. It forces them to be smarter about how they build their business.

Stakeholder Impact and Market Implications

So, OpenAI’s massive revenue growth isn’t just a win for them; it’s shaking things up for pretty much everyone involved in the AI world. It’s like they’ve set a new bar, and now others have to figure out how to jump over it.

Enterprise Buyers and Budgetary Considerations

For companies looking to use AI, OpenAI’s success is a big signal. It means the tech is here to stay and that OpenAI is likely a stable partner for the long haul. This makes it easier for IT departments to justify spending money on AI tools. But, it also means AI is becoming a serious budget item, not just a small experiment. Companies need to plan for this, and there’s always a worry that as OpenAI grows, prices might go up later on. It’s a bit of a balancing act, really.

NVIDIA and Microsoft’s Symbiotic Relationship

This whole situation is a huge win for companies like NVIDIA and Microsoft. OpenAI needs tons of computing power, which means buying lots of NVIDIA’s specialized chips. And a lot of that computing happens on Microsoft’s Azure cloud. So, as OpenAI makes more money, it directly translates into more business for these partners. It’s a classic example of how different parts of the tech industry can grow together. Demand from OpenAI and other AI builders is a big part of why NVIDIA’s valuation has been so high lately.

Valuation Benchmarks for AI Ventures

OpenAI’s numbers are changing how people think about valuing other AI companies. Before, it was all about the cool tech and potential. Now, investors are really digging into the actual money being made and the costs involved. They’re looking at:

- Profitability: Can the company actually make money after paying for all the computing power?

- Growth Rate: How fast is the revenue climbing, and can it keep going?

- Market Share: How much of the AI pie are they capturing?

It’s not just about having a good idea anymore; it’s about building a real business that can bring in serious cash. This pressure means startups need to show a clear path to making money, not just promise future breakthroughs.

The Critical Tension: Revenue vs. Infrastructure

So, OpenAI’s ARR is shooting up, which is pretty wild to think about. But here’s the thing that gets glossed over a lot: every dollar coming in costs something to earn, and the bill is mostly for super-expensive computing power. It’s like having a super popular food truck that’s always packed, but the cost of ingredients and keeping the generator running is eating up all your profits.

Scaling Revenue Against Physical Constraints

This whole revenue surge is great, but it’s bumping up against some hard limits. You can’t just magically create more computer chips or data center space. Every dollar OpenAI makes is tied to using a ton of computing resources, and that’s a physical thing. It’s not like selling software where you just copy it. You need actual hardware, power, and cooling. This means their growth isn’t just about sales pitches; it’s about whether they can actually get the machines and power needed to serve all those customers. It’s a constant race to keep up with demand without breaking the bank.

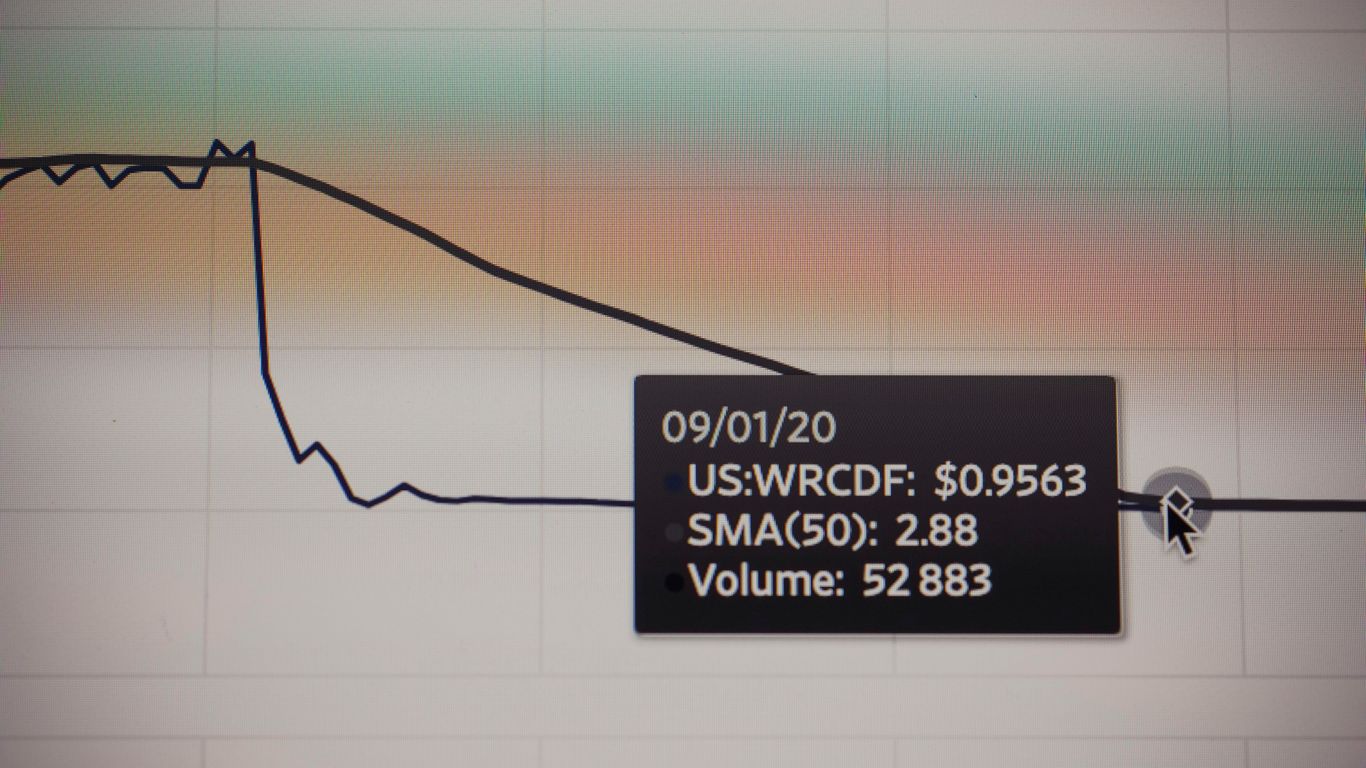

Dependency on GPU Supply Chains

Think about the chips that power all this AI. Companies like NVIDIA are the gatekeepers, and their production schedules directly impact OpenAI. If NVIDIA can’t make enough chips, or if there’s a hiccup in the supply chain, OpenAI’s ability to serve its customers and grow its revenue hits a wall. It’s a bit like a restaurant waiting for a shipment of a key ingredient – no ingredient, no food, no sales. This reliance means OpenAI’s financial future is partly in the hands of chip manufacturers and their factories. It’s a big reason why some analysts are a bit cautious about those sky-high revenue projections.

High-Margin Software vs. Capital-Intensive Service

This is the big question mark hanging over everything. Is OpenAI building a business that makes a lot of profit on each sale, like a typical software company? Or is it more like a utility, where they have to spend a ton of money just to keep the lights on and the service running? Right now, it feels like the latter. The cost of running these massive AI models is huge. They’re trying to get smarter about how they use computing power to lower those costs, but it’s a tough fight. If they can’t get their costs down, those impressive revenue numbers might not translate into the kind of profits investors are hoping for. It’s a balancing act between selling more and spending less on the actual ‘making’ of the AI.

The Road Ahead

So, what does all this mean for OpenAI and the wider AI world? Hitting that $20 billion ARR target would be a massive win, showing that people are really willing to pay for advanced AI. It’s not just about cool tech anymore; it’s about real business value. But, and this is a big but, the real test isn’t just how much money they bring in. It’s about managing those huge costs for computing power. If they can figure out how to make more profit on each dollar earned, that’s what will truly show if they’re built to last. This whole situation puts a lot of pressure on other AI companies to step up their game, not just in technology, but in making actual money. It’s going to be fascinating to see how this all plays out, especially with the total bill for running these AI systems climbing as usage grows.

Frequently Asked Questions

What is OpenAI’s big money goal for 2025?

OpenAI is projecting an annual revenue run-rate of about $20 billion by 2025. This is a huge jump and shows how much people and businesses are using their AI tools.

How is OpenAI making so much money?

They’re earning money in a few main ways. Businesses are paying to use their AI through something called an API, which lets them build AI into their own apps. Also, people are subscribing to services like ChatGPT Plus, and big companies are getting special, bigger plans.

Is making AI expensive?

Yes, it costs a lot of money to run AI. Think of it like needing super powerful computers and lots of electricity, which costs a fortune. OpenAI has to spend a ton of money just to keep their AI running for everyone.

Who are OpenAI’s main helpers and partners?

Microsoft is a really big partner. They help provide the powerful computer systems that OpenAI needs, and in return, Microsoft benefits from OpenAI using their services. NVIDIA is also super important because they make the special computer chips (GPUs) that power AI.

Are there other companies trying to do what OpenAI does?

Absolutely! Companies like Google and Anthropic are also working hard on AI. Plus, there are free AI tools called ‘open-source models’ that are getting really good, which makes it harder for companies to charge a lot of money for their own AI.

What’s the biggest challenge for OpenAI’s money goals?

The biggest challenge is balancing the money they make with the huge costs of running the AI. They need to make sure they’re earning more than they spend on computers and power, which is tough when AI is so expensive to operate.