Google is making some big moves in the AI space, and it’s kind of a big deal. They’ve got a new collection of tools they’re calling “Googly Play,” and it could change how we all make and use digital content. On top of that, their Gemini AI is getting a serious upgrade, and Google is making it easier for developers to build on. It feels like Google is pushing hard to stay ahead in the AI game, and we’re here to break down what that means for everyone. This is a Google TechCrunch exclusive, so let’s get into it.

Key Takeaways

- Google’s “Googly Play” suite is set to shake up content creation with new AI tools.

- Gemini Deep Research AI, powered by Gemini 3 Pro, is making strides in autonomous exploration and research.

- The new Interactions API simplifies AI integration for developers, making powerful tools more accessible.

- Google is integrating its AI advancements across devices, including foldables and Wear OS.

- The company’s future plans show a strong focus on using AI to help people be more creative and access information.

Unpacking Google’s “Googly Play” AI Innovations

So, Google’s been busy, huh? They’ve rolled out this whole new suite of AI tools they’re calling "Googly Play." It’s not just one thing; it’s more like a collection of smart programs designed to change how we make and use digital stuff. Think of it as Google’s latest attempt to get ahead in the AI game, and honestly, it feels pretty significant.

Introduction to Google’s Latest AI Suite

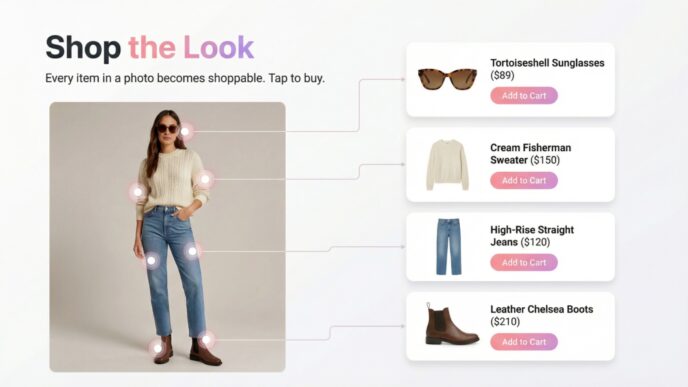

This "Googly Play" thing is Google’s big push into making AI more accessible for creating content. It’s a bunch of different tools, all working together, that can help with everything from writing text to making images and even videos. The idea is to make complex AI tasks simpler for everyday users and businesses. It’s like they’re trying to put a super-powered creative assistant in everyone’s hands. The AI market is growing like crazy, and Google wants a big piece of that pie. They’re betting that by making these tools easy to use, more people will jump on board.

The Evolving AI Content Creation Landscape

Making content with AI is changing fast. It used to be that AI could only do basic stuff, like writing simple sentences or generating generic images. Now, with tools like those in "Googly Play," AI can help create much more complex and original content. We’re talking about AI that can help write scripts, design graphics, and even edit videos. This means that people who aren’t professional designers or filmmakers can now create pretty impressive stuff. It’s making the whole process faster and cheaper, which is a big deal for businesses and individual creators alike. The demand for new content is huge, and AI is stepping in to help meet that demand.

Strategic Advantages of "Googly Play"

What makes "Googly Play" stand out? Well, for starters, it’s Google. They have a massive infrastructure and a lot of data, which helps their AI models learn and improve quickly. Plus, they’re trying to make these tools work well with other Google products, which could make things really convenient if you’re already in their ecosystem. They’re also focusing on making the AI helpful, not just a replacement for human creativity. It’s more about working with the AI. Here are a few key points:

- Integration: "Googly Play" tools are designed to work together and with other Google services.

- Accessibility: Google is aiming to make advanced AI features available to a wider audience, not just tech experts.

- Innovation: The suite includes tools that push the boundaries of what AI can do in content creation, like advanced video generation and editing assistance.

- Speed and Efficiency: Businesses can potentially speed up their content production significantly, getting more done in less time.

Gemini Deep Research AI: A New Era of Autonomous Exploration

Overview of Gemini 3 Pro Enhancements

Google just dropped a pretty big update with Gemini Deep Research AI, and it’s powered by the Gemini 3 Pro model. This isn’t just a small tweak; it’s a significant step up, especially when it comes to how it handles searching the web and putting together information. Think of it as an AI that’s gotten much better at digging through the internet on its own to find what it needs. This new version is designed to be more of an agent, capable of handling complex research tasks autonomously. It’s built to be more efficient, which should mean faster results and potentially lower costs for generating detailed reports.
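To make “autonomous exploration” a bit more concrete, here’s a toy sketch of the generic loop research agents tend to follow: run a search, read the results, queue up any new leads, and finally write up what was found. This is not Google’s implementation. The `search` tool, the tiny in-memory `FAKE_WEB`, and `deep_research` are hypothetical names made up for illustration, and the “report” step simply lists notes where a real agent would have a model synthesize them.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the web: query -> result snippets.
# "See also:" snippets are leads the agent can follow on its own.
FAKE_WEB: dict[str, list[str]] = {
    "topic a": ["Fact about A.", "See also: topic b"],
    "topic b": ["Fact about B."],
}


def search(query: str) -> list[str]:
    """Hypothetical search tool: returns snippets for a query, or [] if unknown."""
    return FAKE_WEB.get(query, [])


@dataclass
class ResearchState:
    question: str
    queue: list[str]                                # queries still to explore
    seen: set[str] = field(default_factory=set)     # queries already explored
    notes: list[str] = field(default_factory=list)  # findings collected so far


def deep_research(question: str, start_query: str, max_steps: int = 5) -> str:
    """Toy research loop: search, read, follow new leads, then write a report."""
    state = ResearchState(question=question, queue=[start_query])
    for _ in range(max_steps):  # step budget keeps the agent from wandering forever
        if not state.queue:
            break
        query = state.queue.pop(0)
        if query in state.seen:
            continue
        state.seen.add(query)
        for snippet in search(query):
            if snippet.startswith("See also: "):
                # A new lead found while reading: queue it for a later step.
                state.queue.append(snippet.removeprefix("See also: "))
            else:
                state.notes.append(snippet)
    # A real agent would have a model synthesize the notes; this just lists them.
    lines = [f"Report on: {state.question}"] + [f"- {note}" for note in state.notes]
    return "\n".join(lines)


if __name__ == "__main__":
    print(deep_research("What do we know about A?", "topic a"))
```

The `max_steps` cap is the key design choice: an agent that follows leads on its own needs a budget, or it can keep digging indefinitely.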

Key Benchmarks in AI Research

So, how do we know it’s actually better? Google is pointing to a few key tests. One is Humanity’s Last Exam (HLE), a deliberately difficult benchmark made up of expert-level questions across many subjects. Another is a new benchmark Google created called DeepSearchQA. The third is BrowseComp, a benchmark originally released by OpenAI that checks how well an AI agent can search the web and track down hard-to-find information. Google says Gemini Deep Research performs strongly on all three, which suggests it’s good at tasks that require sifting through a lot of online data. It’s not just about answering questions; it’s about the AI’s ability to go out and find the answers itself.

Comparative Analysis: Google vs. OpenAI

This Gemini Deep Research launch happened right around the same time OpenAI released GPT-5.2. It feels like a direct challenge, doesn’t it? GPT-5.2 is another general-purpose model from OpenAI, while Google is pitching Gemini Deep Research as a specialized tool for research and report generation. It’s like comparing a Swiss Army knife to a specialized research probe. Google’s approach here is about AI agents that carry out specific, complex workflows, whereas OpenAI’s headline releases have tended to emphasize broad language capabilities (even though OpenAI also offers its own deep research agent in ChatGPT). The head-to-head timing highlights the different emphases these companies are taking in the AI race: agentic systems versus more general-purpose language models.

The Interactions API: Streamlining AI Integration for Developers

So, Google’s dropped this new thing called the Interactions API, and it’s pretty neat if you’re into building apps that use AI. Think of it as a way to make talking to Google’s AI models, like Gemini, a whole lot simpler. Before, getting AI to do complex stuff, especially over multiple steps, could be a real headache. You’d have to manage all the back-and-forth yourself, keeping track of what was said and what the AI needed next. This new API seems to handle a lot of that heavy lifting.

Accessibility and Features of the Interactions API

What’s cool about the Interactions API is that it gives you a single point of entry for interacting with Gemini models and agents. It’s designed to manage the conversation’s state, so you don’t have to. This means you can build applications that have more natural, multi-turn conversations with the AI without getting bogged down in the technical details. It also helps with connecting to other tools, which is a big deal for making AI actually useful in real-world scenarios. This unified interface is a major step towards making advanced AI capabilities more approachable for developers. You can get a single API key from Google AI Studio to start using it, which cuts down on setup time.
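Here’s a rough sketch of what “managing the conversation’s state” means in practice. It contrasts two patterns: the app resending the whole transcript on every call, versus a service that stores each exchange under an ID so the app only sends the new message plus a pointer to the previous one. Everything here is hypothetical and runs locally: `ToyInteractionService`, `create`, `previous_id`, and the `fake_model` stub are illustrative names, not the actual Interactions API, whose real method and parameter names you should check in Google’s documentation.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass
class Turn:
    role: str  # "user" or "model"
    text: str


def fake_model(history: list[Turn]) -> str:
    """Hypothetical model stub: just reports how much context it received."""
    return f"(reply with {len(history)} turns of context)"


# Pattern 1: client-managed state. The app keeps the transcript and resends all of it.
def stateless_call(transcript: list[Turn]) -> str:
    return fake_model(transcript)


# Pattern 2: server-managed state. The service stores each interaction under an ID,
# so the client sends only the new message plus the previous interaction's ID.
class ToyInteractionService:
    """Hypothetical, in-memory illustration; not the real Interactions API."""

    def __init__(self) -> None:
        self._store: dict[str, list[Turn]] = {}
        self._ids = itertools.count(1)

    def create(self, text: str, previous_id: Optional[str] = None) -> tuple[str, str]:
        # Look up earlier turns on the "server" side; unknown IDs raise KeyError.
        history = list(self._store[previous_id]) if previous_id else []
        history.append(Turn("user", text))
        reply = fake_model(history)
        history.append(Turn("model", reply))
        new_id = f"int-{next(self._ids)}"
        self._store[new_id] = history
        return new_id, reply


if __name__ == "__main__":
    service = ToyInteractionService()
    first_id, _ = service.create("Hi, I'm planning a trip.")
    _, reply = service.create("What should I pack?", previous_id=first_id)
    print(reply)  # (reply with 3 turns of context)
```

The trade-off: with server-side state, each request stays small, but the service has to keep the conversation history on your behalf.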

Simplifying AI Integration with Google AI Studio

Google AI Studio is where you’ll likely be spending time if you want to play with this API. It’s basically a web-based tool that lets you experiment with Google’s AI models. With the Interactions API, integrating these models into your own projects becomes much more straightforward. You can build custom agents, manage data uploads, and even connect to third-party services, all from within this environment. It’s like having a workbench specifically designed for AI development, making the whole process less intimidating.

Empowering Developers with State-of-the-Art Tools

This API is really about giving developers better tools to work with. Instead of wrestling with complex code to get AI to perform specific tasks, you can focus more on the creative side of your application. The API handles the underlying mechanics of AI interaction, allowing for more sophisticated features like:

- Stateful Conversations: The AI remembers the context of your chat, making interactions feel more natural.

- Tool Orchestration: Easily connect the AI to other services or functions to perform actions.

- Simplified Data Handling: Streamlined ways to upload and process data for the AI.
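Tool orchestration usually boils down to a loop: the model either answers or asks for a tool, and when it asks, the app runs the tool and feeds the result back. The sketch below shows that loop with a stubbed model. `get_weather`, `TOOLS`, `fake_model`, and `run_with_tools` are hypothetical names, the model stub always asks about Paris, and none of this reflects the Interactions API’s actual request format.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict[str, str]:
    """Hypothetical tool: returns canned data instead of calling a real weather service."""
    return {"city": city, "forecast": "sunny"}


# Registry of tools the app is willing to run on the model's behalf.
TOOLS: dict[str, Callable[..., dict[str, str]]] = {"get_weather": get_weather}


def fake_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Hypothetical model stub: requests a tool first, then answers from its result."""
    last = messages[-1]
    if last["role"] == "user":
        # Stub always asks about Paris, whatever the user typed.
        return {"type": "tool_call", "name": "get_weather", "args": {"city": "Paris"}}
    if last["role"] == "tool":
        data = json.loads(last["content"])
        return {"type": "text", "text": f"It looks {data['forecast']} in {data['city']}."}
    return {"type": "text", "text": "I'm not sure."}


def run_with_tools(user_text: str, max_rounds: int = 3) -> str:
    """Orchestration loop: call the model, run any requested tool, feed the result back."""
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_text}]
    for _ in range(max_rounds):
        step = fake_model(messages)
        if step["type"] == "text":
            return step["text"]
        tool = TOOLS[step["name"]]  # KeyError if the model asks for an unknown tool
        result = tool(**step["args"])
        messages.append({"role": "tool", "name": step["name"], "content": json.dumps(result)})
    raise RuntimeError("model did not produce an answer within max_rounds")


if __name__ == "__main__":
    print(run_with_tools("Should I bring sunglasses to Paris?"))  # It looks sunny in Paris.
```

The `max_rounds` limit guards against a model that keeps requesting tools without ever producing an answer.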

It’s a move that seems to be pushing Google’s AI development forward, making it easier for more people to build cool things with it.

Google’s AI Integration Across Devices and Platforms

Gemini for Foldable Devices

Google is making sure its latest AI, Gemini, works well on all sorts of screens, and that includes those foldable phones. Think about unfolding your phone to a bigger display – Gemini is designed to adapt, giving you more space to interact with AI features. It’s not just about fitting the AI onto the screen; it’s about making the experience better when you have that extra real estate. This means things like more complex data visualizations or multi-panel interfaces could become standard on these devices.

Enhanced "Circle to Search" Functionality

Remember "Circle to Search"? It’s getting a serious upgrade. Now, instead of just finding basic information, it can tap into Gemini’s deeper research capabilities. So, if you circle something on your screen, you might get more detailed summaries, comparative analyses, or even generated content based on what you’re looking at. This makes finding information on the fly much more powerful than just a quick web lookup. It’s like having a research assistant built right into your phone’s display.

Wear OS 5 and Smartwatch Innovations

Your smartwatch is about to get a lot smarter. With Wear OS 5, Google is bringing more AI power to your wrist. This isn’t just about better fitness tracking; imagine getting AI-powered insights directly on your watch, or having your watch handle more complex tasks without needing your phone. We’re talking about:

- Smarter notifications: AI that understands what’s important and summarizes it for you.

- On-device AI processing: Some AI tasks will run directly on the watch, making them faster and more private.

- Contextual assistance: Your watch could offer help based on your location, schedule, and activity.

This push means your watch becomes less of a companion device and more of an independent smart tool.

Future Trajectory of Google’s AI Endeavors

Integration Plans for Gemini AI

So, what’s next for Google’s AI push? It looks like Gemini is going to be everywhere. We’re talking about it getting woven into pretty much everything Google does. Think about your everyday apps and services – Gmail, Docs, even Search – they’re all slated to get smarter with Gemini’s help. This isn’t just about adding a new feature here or there; it’s about fundamentally changing how we interact with technology. The goal seems to be making these tools more intuitive and helpful, almost like having a personal assistant built into your digital life. It’s a big undertaking, and they’re rolling it out gradually, but the direction is clear: AI is becoming a core part of the Google experience.

The Role of AI in Augmenting Human Creativity

It’s easy to worry that AI will replace human creativity, but Google seems to be taking a different approach. The idea behind "Googly Play" and Gemini isn’t just to automate tasks, but to work alongside us. Imagine AI helping you brainstorm ideas, suggesting different ways to phrase something, or even generating initial drafts that you can then refine. It’s like having a super-powered creative partner. This could really lower the barrier for people who have great ideas but maybe lack some of the technical skills to bring them to life. We’re seeing tools that can help with everything from writing scripts to creating visuals, and the trend is towards AI becoming a collaborator, not a replacement. This partnership between human ingenuity and AI capability is where the real magic is likely to happen.

Transforming Information Access with AI

Accessing information is something we do constantly, and AI is set to change that too. Think about how search works now. With Gemini, it could become much more conversational and context-aware. Instead of just typing keywords, you might be able to ask complex questions and get detailed, synthesized answers. This could make research a lot faster and more efficient. It also means that information could be presented in more personalized ways, tailored to what you actually need to know. This shift could have a big impact on education, business research, and even just our daily curiosity. It’s about making knowledge more accessible and understandable for everyone.

Wrapping It Up

So, what does all this mean for us? Google’s really pushing the envelope with these new AI tools, making things faster and maybe even a bit easier for creators and businesses. It’s kind of like getting a super-powered assistant for your projects. The AI market is booming, and Google is definitely playing a big part in that. It’s going to be interesting to see how these tools change the way we make and consume content. Keep an eye on this space, because things are moving fast, and staying updated is key if you want to keep up.

Frequently Asked Questions

What is “Googly Play”?

“Googly Play” is the name Google is using for its new set of AI tools that help people create and work with digital content like text, images, and videos. Think of it as a collection of smart helpers for your creative projects.

How is Gemini 3 Pro different from older AI models?

Gemini 3 Pro is the model behind Gemini Deep Research, which works like a super-smart researcher: it can look through tons of information on the internet by itself, figure out what’s important, and put together reports. According to Google, it’s more efficient and better at handling complex research topics than many previous AI systems.

Is the Interactions API hard for developers to use?

No, the Interactions API is designed to make it easier for developers. It’s like a universal remote for Google’s AI, letting them connect Gemini’s smarts into their own apps without a lot of complicated steps.

Will these new AI features work on my phone or watch?

On many devices, yes! Google is bringing these AI tools to a range of hardware. For example, Gemini is designed to make better use of the larger screens on foldable phones, “Circle to Search” is getting smarter, and smartwatches running Wear OS 5 are getting more AI features, including some that run directly on the watch.

How will Google use AI in the future?

Google plans to weave its AI, like Gemini, into almost everything they offer, from Search to apps like Google Finance. The goal is to make information easier to find and use, and to help people be more creative.

Can AI really help people be more creative?

Absolutely! Google’s AI tools are meant to work alongside people, not just replace them. They can help brainstorm ideas, create drafts, and handle boring tasks, freeing up humans to focus on the truly creative and imaginative parts of a project.