Understanding the Vision: Cameras for Self-Driving Cars

The Eyes of Autonomous Vehicles

Think of cameras as the eyes of a self-driving car. They’re how the vehicle sees and interprets the world around it. Just like we use our eyes to spot lane lines, read signs, and notice other cars, these cameras do the same job for autonomous systems. They capture a ton of visual information, which is then processed by the car’s "brain", its onboard computer system. This visual input is key for the car to know where it is, where it’s going, and what’s happening on the road. Without cameras, the car would lose its most detailed view of the road, even with other sensors on board.

Visual Data for Navigation and Safety

So, what exactly do these cameras help the car do? A lot, actually. They’re used for things like:

- Lane Identification: Spotting the white and yellow lines that keep the car centered in its lane.

- Object Recognition: Figuring out if that blob is a pedestrian, another car, a bicycle, or even a traffic cone.

- Traffic Sign Reading: Recognizing speed limits, stop signs, and other important road signals.

- Distance Estimation: While cameras don’t measure depth perfectly on their own, they contribute to the car’s ability to judge how far away things are, which is vital for safe braking and steering.

- Hazard Detection: Spotting unexpected things on the road, like debris or animals, before they become a problem.

All this visual data is constantly being analyzed to make sure the car drives safely and follows the rules of the road.
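To make the distance estimation point a bit more concrete: under the simple pinhole camera model, an object of known real-world height that appears a certain number of pixels tall sits at roughly `focal_length * real_height / pixel_height`. Here is a minimal sketch of that idea. The function name and the example numbers (a 1000-pixel focal length, a 1.5 m tall car) are illustrative assumptions, not values from any particular vehicle, and real systems combine this with other cues.

```python
def estimate_distance_m(focal_length_px: float,
                        real_height_m: float,
                        pixel_height: float) -> float:
    """Rough range estimate from the pinhole camera model.

    focal_length_px: camera focal length expressed in pixels (from calibration).
    real_height_m:   assumed real height of the object (a guess per object class).
    pixel_height:    height of the object's bounding box in the image.
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


# Hypothetical example: a 1.5 m tall car that appears 50 px tall.
distance = estimate_distance_m(1000.0, 1.5, 50.0)
print(distance)  # 30.0
```

The weak spot is the assumed object height: if the guess is off by 10%, the distance is off by 10% too, which is one reason cameras get help from other sensors for range.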

Key Components of Computer Vision Systems

Making sense of all the images from the cameras involves a sophisticated system called computer vision. It’s not just one thing, but a collection of technologies working together. Here are some of the main parts:

- Image Sensors: These are the actual chips inside the cameras that capture the light and turn it into digital data. Higher resolution usually means more detail.

- Image Processing Software: This is the first step in cleaning up the raw image data, adjusting for brightness, contrast, and other factors.

- Object Detection Algorithms: These are smart pieces of software, often using machine learning, that are trained to find and identify specific objects within an image. Think of it like teaching a computer to recognize a cat in a photo.

- Machine Learning Models: These models are trained on massive datasets of images and driving scenarios. They learn patterns and make predictions about what they’re seeing, allowing the car to react appropriately.

These components work in concert, processing information incredibly quickly so the car can make split-second decisions.
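As a small taste of the image processing step, here is one classic cleanup operation: contrast stretching, which rescales a dull grayscale image so its darkest pixel becomes 0 and its brightest becomes 255. This is a minimal sketch in plain Python on a tiny made-up image. Production pipelines run on dedicated hardware and do far more than this, so treat it as an illustration of the idea only.

```python
from typing import List


def stretch_contrast(pixels: List[List[int]]) -> List[List[int]]:
    """Linearly rescale grayscale values to span the full 0..255 range."""
    flat = [p for row in pixels for p in row]
    if not flat:
        return []
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no contrast to stretch; return a copy.
        return [row[:] for row in pixels]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in pixels]


# Hypothetical low-contrast 2x2 image using only the range 50..200.
dull = [[50, 100],
        [150, 200]]
print(stretch_contrast(dull))  # [[0, 85], [170, 255]]
```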

Diverse Camera Applications in Modern Vehicles

Cars today aren’t just about getting from point A to point B anymore. They’re packed with tech, and cameras are a huge part of that. Think of them as the car’s eyes, helping out in all sorts of ways, from making parking easier to keeping an eye on the driver.

Essential Rearview and Front-Facing Cameras

Most cars now come with at least a rearview camera. It’s usually tucked away near the license plate and gives you a clear picture of what’s behind you when you’re backing up. This is a lifesaver for avoiding bumps and scrapes, especially in tight parking spots. Front-facing cameras are also becoming more common. These are often used for advanced driver assistance systems (ADAS). They can help detect lane markings to keep you centered in your lane, or even read traffic signs. These cameras are the first line of defense for many safety features.

Surround-View and All-Around Camera Systems

For a more complete picture, some vehicles offer surround-view systems. These use multiple cameras placed around the car – front, back, and sides – to create a 360-degree view. It’s like having a bird’s-eye perspective of your car and its surroundings, all displayed on your infotainment screen. This makes maneuvering in tricky situations, like crowded parking lots or narrow streets, much less stressful. It really helps you see everything you might miss otherwise.

Specialized Cameras: Dash, In-Cabin, and Trailer

Beyond the standard views, there are other specialized cameras. Dash cams, for instance, record your drive. They’re super useful if you’re ever in an accident, providing video evidence for insurance claims or just to show what happened. In-cabin cameras are starting to appear too, often used for driver monitoring. They can detect if you’re getting drowsy or distracted, sending out alerts to help keep you focused on the road. And if you tow a trailer or drive an RV, there are even cameras designed specifically for that, giving you a view of what’s happening behind and alongside your trailer, making those long drives and tricky maneuvers much more manageable.

Advancing Autonomy with Camera Technology

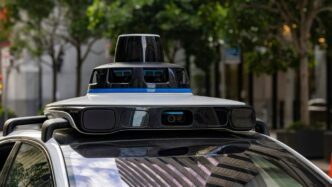

Cameras are really the workhorses when it comes to making cars drive themselves. They’re not just for seeing where you’re going; they’re actively figuring out what’s happening around the car. This visual information is what allows the car to understand its environment and make smart decisions.

Enabling Lane Identification and Object Recognition

Think about staying in your lane on the highway. Front-facing cameras are constantly looking for those lane lines, whether they’re solid, dashed, or even faded. They help the car know its exact position on the road. Beyond just lines, these cameras are also trained to spot all sorts of things: other cars, people walking, cyclists, even animals that might dart out. The better the camera and the smarter the software, the quicker and more accurately the car can react to these objects.
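Once the lane lines have been found, working out where the car sits between them is mostly arithmetic. The sketch below assumes an upstream detector has already given us the pixel columns of the left and right lines at some image row, that the camera is mounted on the car's centerline, and that the lane is 3.7 m wide. All three are assumptions made for illustration, and the function name is hypothetical.

```python
def lateral_offset_m(left_x_px: float,
                     right_x_px: float,
                     image_width_px: float,
                     lane_width_m: float = 3.7) -> float:
    """Signed distance of the car from the lane center.

    Positive means the car is to the right of center, negative to the left.
    Assumes the image center corresponds to the vehicle centerline.
    """
    lane_width_px = right_x_px - left_x_px
    if lane_width_px <= 0:
        raise ValueError("right line must be to the right of the left line")
    meters_per_px = lane_width_m / lane_width_px
    lane_center_px = (left_x_px + right_x_px) / 2
    vehicle_center_px = image_width_px / 2
    return (vehicle_center_px - lane_center_px) * meters_per_px


# Hypothetical detections in a 1280 px wide frame.
print(lateral_offset_m(400, 880, 1280))  # 0.0 (centered)
print(round(lateral_offset_m(440, 920, 1280), 2))  # about 0.31 m left of center
```

A lane-keeping system would feed an offset like this into its steering logic. The pixel-to-meter conversion here is only valid at the image row where the lines were measured.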

Traffic Sign Recognition and Surround View Capabilities

It’s not just about what’s on the road, but also the rules of the road. Cameras can read traffic signs – speed limits, stop signs, yield signs – and feed that information directly to the car’s system. This means the car can adjust its speed or prepare to stop without a human needing to tell it. Then there’s the surround-view stuff. By using multiple cameras placed all around the vehicle, the car can create a sort of "bird’s-eye" view. This is super helpful for parking in tight spots or just generally understanding what’s happening on all sides, eliminating those tricky blind spots.

Driver Monitoring for Enhanced Safety

Even in cars that can drive themselves to some extent, the human driver is still often in the loop. Interior cameras are becoming more common for something called driver monitoring. These cameras watch the driver to see if they’re paying attention, if their eyes are on the road, or if they might be getting sleepy. If the car detects the driver is distracted or drowsy, it can issue a warning or even take over more control if needed. It’s like having an extra set of eyes looking out for everyone’s safety.
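One common way to turn "is the driver getting sleepy?" into a number is to track how much of the time the eyes are closed over a recent window, an idea often called PERCLOS. The sketch below assumes some upstream face model already produces an eye openness score between 0 (shut) and 1 (wide open) for each frame. That score, the class name, and every threshold here are hypothetical choices for illustration, not values from a real system.

```python
from collections import deque


class DrowsinessMonitor:
    """Flags drowsiness when the eyes are closed for too much of a recent window."""

    def __init__(self, window_frames=90, closed_below=0.2, alert_fraction=0.4):
        self.closed_flags = deque(maxlen=window_frames)  # True = eyes closed
        self.closed_below = closed_below      # openness under this counts as closed
        self.alert_fraction = alert_fraction  # share of closed frames that triggers

    def update(self, eye_openness: float) -> bool:
        """Add one frame's openness score; return True if an alert should fire."""
        self.closed_flags.append(eye_openness < self.closed_below)
        if len(self.closed_flags) < self.closed_flags.maxlen:
            return False  # not enough history yet
        closed_share = sum(self.closed_flags) / len(self.closed_flags)
        return closed_share >= self.alert_fraction


# Hypothetical stream: ten alert frames, then the eyes stay shut.
monitor = DrowsinessMonitor(window_frames=10)
readings = [0.8] * 10 + [0.05] * 4
alerts = [monitor.update(r) for r in readings]
print(alerts[-1])  # True: 4 of the last 10 frames had closed eyes
```

Using a window instead of a single frame keeps ordinary blinks from setting off the alarm.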

Overcoming Challenges in Automotive Camera Performance

So, cameras are pretty neat for self-driving cars, right? They’re like the car’s eyes. But, just like my own eyes sometimes, they can get a bit fuzzy. It’s not always smooth sailing for these automotive cameras, and there are a few hurdles they have to jump over to work right.

Addressing Contamination and Environmental Factors

Think about driving on a really muddy road or in a downpour. That stuff gets everywhere, and camera lenses are no exception. Dirt, water, even bug splatters can totally block the view. This is a big deal because a dirty lens means the car can’t see what’s in front of it, which is obviously not good for safety. Some cars have little covers that pop open only when needed, or they might position cameras where the windshield wipers can reach them. It’s also tough when the weather gets extreme – super cold, super hot, foggy, or snowy. The cameras themselves need to handle a wide range of temperatures, from freezing cold to scorching heat, and still take clear pictures. Image noise can get worse when it’s hot, making it harder for the car’s brain to figure things out.
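How might software notice that a lens is smeared in the first place? One simple, widely used sharpness measure is the variance of the Laplacian: sharp scenes have lots of edges and produce a high value, while a mud-covered or fogged lens produces a flat, low one. The sketch below implements it in plain Python on a tiny grayscale grid. The threshold is an arbitrary placeholder, and a genuinely featureless scene (say, thick fog) would trip it too, so this is a starting point rather than a full contamination detector.

```python
from typing import List


def laplacian_variance(gray: List[List[float]]) -> float:
    """Variance of the 4-neighbor Laplacian over the interior pixels."""
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def lens_looks_obstructed(gray: List[List[float]], threshold: float = 50.0) -> bool:
    """Hypothetical check: very low edge energy suggests a blocked or blurred view."""
    return laplacian_variance(gray) < threshold


# Made-up test images: a flat gray frame versus a sharp checkerboard.
flat = [[120] * 5 for _ in range(5)]
checker = [[255 if (x + y) % 2 else 0 for x in range(5)] for y in range(5)]
print(lens_looks_obstructed(flat))     # True
print(lens_looks_obstructed(checker))  # False
```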

Navigating Lighting Conditions and Flicker Issues

Bright sunlight can be just as bad as darkness. Imagine driving out of a tunnel into bright daylight, or facing oncoming headlights at night. The camera has to handle huge differences in brightness within a single scene, which can wash out or bury details. Then there’s the whole flicker thing. Lots of modern lights, like LEDs, pulse on and off really fast, and different lights pulse at different rates. If the camera’s exposure is short, a frame can land in the "off" part of the pulse, so the light looks dim, flickering, or completely dark on video. That makes it tricky to get a steady picture of LED signs, traffic signals, and the lights of other cars or bikes. It’s like trying to watch a strobe light show while also trying to read a book: not easy.
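The flicker problem comes down to timing. A pulsed LED is off for part of every cycle, and if the camera's exposure is shorter than that off stretch, some frames can fall entirely inside it and show the light as dark. The sketch below checks for that condition. The function name and the example numbers (a 90 Hz pulse rate, a 25% duty cycle) are illustrative assumptions, not figures for any specific light or camera.

```python
def can_miss_led_pulse(exposure_s: float, pwm_hz: float, duty_cycle: float) -> bool:
    """True if an exposure this short could fit entirely inside the LED's off time.

    exposure_s: camera exposure time in seconds.
    pwm_hz:     how many on/off cycles the LED completes per second.
    duty_cycle: fraction of each cycle the LED is on (0 < duty_cycle <= 1).
    """
    if exposure_s <= 0 or pwm_hz <= 0 or not 0 < duty_cycle <= 1:
        raise ValueError("expected positive times and a duty cycle in (0, 1]")
    off_time_s = (1 - duty_cycle) / pwm_hz
    return exposure_s < off_time_s


# Hypothetical LED: 90 Hz, on 25% of the time, so off for about 8.3 ms per cycle.
print(can_miss_led_pulse(0.001, 90, 0.25))  # True: a 1 ms daylight exposure can miss it
print(can_miss_led_pulse(0.012, 90, 0.25))  # False: 12 ms always overlaps an on pulse
```

This is why bright daylight makes flicker worse: the camera shortens its exposure to avoid overexposure, which is exactly when it becomes possible to miss the pulse.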

Regional Differences and Object Variety Challenges

Roads and signs aren’t the same everywhere, are they? A sign that means "stop" or "yield" in one country might use a different shape, color, or wording in another, and speed limits may be posted in different units. Lane markings can also change. Cameras need to be smart enough to recognize these differences and still know what they’re looking at. Plus, there are just so many things out there on the road. It’s not just cars and trucks; it’s pedestrians, cyclists, animals, debris, and all sorts of other stuff. Each one can look different depending on the angle, the lighting, and how far away it is. Getting the camera system to correctly identify and classify all these different objects, all the time, is a massive puzzle.

The Future of Vision-Based Autonomous Driving

So, where are we headed with all this camera tech for self-driving cars? It’s pretty exciting stuff, honestly. We’re seeing a big push towards systems that rely more and more on cameras, sometimes even exclusively. Think about it – cameras are relatively cheap compared to some other sensors, and they capture a ton of detail. This camera-only approach could make self-driving tech more affordable and accessible down the road.

But cameras alone can’t do everything, right? That’s where sensor fusion comes in. It’s like giving the car multiple ways to ‘see’ and understand its surroundings. By combining what the cameras pick up with data from things like radar and LiDAR, the car gets a much richer, more complete picture of the world. This helps it deal with tricky situations, like bad weather or when things are too far away for a camera to clearly make out.
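Here is about the simplest possible picture of sensor fusion: two sensors each estimate the distance to the same object, and we blend them, trusting the less noisy one more. The sketch below uses inverse-variance weighting, which is a standard way to combine independent measurements. The numbers are made up, and real perception stacks use far more elaborate machinery (tracking filters, object association, and so on), so read this as the core idea only.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine independent (value, variance) measurements of the same quantity.

    Each measurement is weighted by 1 / variance, so noisier sensors count less.
    Returns the fused value and its (smaller) variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical readings: camera says 32 m (noisy), radar says 30 m (more precise).
camera = (32.0, 4.0)  # (distance in m, variance in m^2)
radar = (30.0, 1.0)
distance, variance = fuse_estimates([camera, radar])
print(round(distance, 2), round(variance, 2))  # 30.4 0.8
```

Notice that the fused variance is lower than either sensor's alone. That is the payoff of fusion: the combined estimate is better than the best single sensor, as long as the errors really are independent.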

And of course, none of this would work without AI and machine learning. These are the brains behind the operation. They take all the raw data from the cameras and sensors and figure out what it all means. They learn to spot pedestrians, understand traffic lights, predict what other cars might do, and make split-second decisions. It’s a constant learning process, with AI models getting better and better the more data they process. It’s not just about seeing; it’s about understanding and reacting intelligently.

Here’s a quick look at what’s driving this future:

- Camera-Centric Systems: Reducing reliance on expensive sensors by making cameras do more heavy lifting.

- Advanced Sensor Fusion: Combining camera data with radar, LiDAR, and other sensors for a robust perception system.

- AI and Machine Learning: The engine that processes visual data, enabling object recognition, prediction, and decision-making.

- Real-World Data Training: Continuously feeding AI models with diverse driving scenarios to improve their adaptability and reliability.

Integrating Cameras for Enhanced Vehicle Safety

Cameras are really stepping up to make our cars safer, not just for the future of self-driving but for the cars we drive today. They’re like giving your car extra eyes, helping it see things you might miss.

Improving Lateral Visibility and Eliminating Blind Spots

Remember how nerve-wracking changing lanes used to be? You’d crane your neck, hoping for the best. Well, cameras are changing that. Side-view cameras, often tucked near the side mirrors or on the body panels, give you a much better look at what’s happening beside and slightly behind your car. This is a big deal for covering those nasty blind spots that can cause accidents. Some systems even replace traditional mirrors with digital displays, offering a clearer picture, especially when the weather is bad.

Night Vision and Low-Light Performance

Driving at night or in foggy conditions has always been a challenge. Standard headlights only illuminate so far, and it’s easy to miss a pedestrian or an animal darting out. Many night vision cameras use thermal (far-infrared) imaging to pick up heat signatures. This means they can spot people and animals that are hard or impossible to see with the naked eye, even when it’s really dark. It’s like having a superpower for spotting hazards before they become a problem.

Backup Cameras and Parking Assistance

This is probably the most common camera feature people are familiar with now. Backup cameras, usually mounted on the rear of the car, show you exactly what’s behind you when you’re reversing. They often come with helpful parking lines that curve as you turn the steering wheel, making it way easier to get into tight spots without scraping anything. Some cars go even further with surround-view systems that stitch together images from multiple cameras to give you a bird’s-eye view of the car and its surroundings. It makes parking in a crowded lot feel a lot less stressful.
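Those curving parking lines come from geometry. A common simplification is the kinematic bicycle model, where the car follows a circle of radius `wheelbase / tan(steering_angle)`. The sketch below generates points along that predicted path on the ground, measured from the rear axle. The function name, the sign convention, and the example wheelbase are assumptions for illustration. A real system would also project these points into the camera image, which is left out here.

```python
import math
from typing import List, Tuple


def guideline_points(wheelbase_m: float,
                     steer_rad: float,
                     length_m: float = 3.0,
                     steps: int = 6) -> List[Tuple[float, float]]:
    """Predicted reversing path as (lateral, distance_back) points in meters.

    Positive steer_rad bends the path toward positive lateral values.
    """
    if abs(steer_rad) < 1e-6:
        # Wheels straight: the guideline is a straight line behind the car.
        return [(0.0, length_m * i / steps) for i in range(steps + 1)]
    radius = wheelbase_m / math.tan(steer_rad)  # signed turning radius
    points = []
    for i in range(steps + 1):
        arc = length_m * i / steps  # distance travelled along the path
        theta = arc / radius        # heading change after that distance
        points.append((radius * (1 - math.cos(theta)), radius * math.sin(theta)))
    return points


# Hypothetical car with a 2.7 m wheelbase and the wheels turned 0.3 rad.
for lateral, back in guideline_points(2.7, 0.3):
    print(f"{lateral:.2f} m sideways, {back:.2f} m back")
```

Turn the wheel further and the radius shrinks, so the drawn line curls more sharply, which matches what you see on the screen.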

Looking Ahead

So, yeah, cameras are a pretty big deal for self-driving cars. They’re like the eyes that let the car see everything going on around it, from reading signs to spotting a pedestrian. While they have their limits, especially when the weather gets rough or it’s super dark, they work with other tech to make things safer. As this technology keeps getting better, these camera systems are going to be key in making cars that can drive themselves a normal part of our lives. It’s a complex puzzle, but cameras are definitely a piece that can’t be ignored.