It’s that time of year again when we look ahead to what’s next in generative AI news. Things move fast, and predicting the future can feel a bit wild, especially with all the buzz around AI. But we’ve gathered some insights from experts and industry watchers to get a sense of where things are headed in 2026. It looks like AI is becoming more than just a quick tool; it’s shaping up to be a bigger part of how businesses work, how we interact with tech, and even how our economy functions. Let’s see what’s on the horizon.

Key Takeaways

- Generative AI is shifting from individual productivity boosts to becoming a core resource for bigger, more strategic company projects.

- Agentic AI, while still facing some hype, is moving closer to offering real value in business operations.

- The AI investment boom may start to deflate, and learning about AI will become a standard skill for workers.

- New hardware, like specialized chips beyond GPUs, is being developed to handle the demands of advanced AI tasks.

- AI is expanding beyond screens into the physical world, with a growing focus on robotics and specialized systems for specific industries.

Generative AI News: Enterprise Resource Evolution

It feels like just yesterday everyone was talking about how generative AI would change everything, mostly by making individual tasks faster. You know, writing emails quicker, making presentations look a bit better, that sort of thing. And sure, that happened. But honestly, most of those uses felt pretty small, and it was hard to even tell if they were actually saving us time or money in the long run. It’s like getting a slightly faster car but still being stuck in the same traffic.

The real shift we’re seeing now is companies starting to think about generative AI as a bigger tool for the whole organization, not just for individual tasks. Instead of trying to find a thousand tiny ways to speed things up, businesses are looking at how AI can help with bigger, more complex jobs. Think about using it to sort out supply chains, speed up research and development, or even improve how sales teams work. These are the kinds of projects that, while harder to get going, can really make a difference when they work.

Johnson & Johnson, for example, has decided to focus on a few big, strategic AI projects rather than chasing hundreds of small, individual ideas. It’s a different way of looking at it, for sure.

Of course, people still need access to these tools. Some companies even see that access as a way to keep employees happy and encourage them to stay longer. And sometimes, good ideas do come from the ground up. Sanofi, a large pharmaceutical company, has a system where employees can pitch AI project ideas, and the company picks a few to fund as big, company-wide initiatives. It’s a way to get good ideas from the front lines into the main system.

Here’s a quick look at how this evolution is playing out:

- From Individual Boost to Organizational Power: Moving beyond making individual tasks slightly faster to using AI for complex, end-to-end business processes.

- Strategic Projects Over Scattered Use Cases: Focusing resources on a few high-impact AI initiatives rather than many small, hard-to-measure ones.

- Balancing Access and Strategy: Allowing individual access to AI tools for employee satisfaction while prioritizing larger, strategic enterprise deployments.

- Idea Incubation: Creating pathways for employees to propose and develop AI ideas that can become significant organizational resources.

The Maturation of Agentic AI

Remember when AI agents felt like a futuristic dream? Well, 2025 was definitely the year they started showing up everywhere, but let’s be honest, they weren’t quite ready for prime time in many business settings. Lots of experiments, even from big names like Anthropic and Carnegie Mellon, showed that these agents made too many mistakes for anything involving serious money. Plus, there were those nagging cybersecurity worries, like prompt injection, and the unsettling tendency for agents to go off-script or not quite align with what we actually wanted.

Navigating the Hype Cycle and Disillusionment

It’s no surprise that agentic AI is currently riding the hype wave, much as generative AI did before it. Many experts predict that agentic AI will enter the ‘trough of disillusionment’ (the phase of Gartner’s Hype Cycle that follows peak excitement) in 2026. That would mean a period in which the initial enthusiasm fades as the limitations of current systems become clear, a common pattern for new technologies. The key now is to look beyond the buzz and focus on what’s realistically achievable.

Progression Toward Real-World Value

Despite the current bumps, the trajectory for agentic AI is still upward. Many of today’s problems, such as errors and alignment failures, are expected to be ironed out over the next few years. We’re already seeing a shift from simple, single-purpose agents to more dynamic ‘super agents’ that can plan, use tools, and handle complex tasks. Think of it as moving from a bunch of specialized assistants to a more coordinated team.

In 2026, we expect agent control planes and multi-agent dashboards to become common. These will let users kick off tasks from a single point, with agents working across environments like your browser, editor, or inbox, so you no longer have to manage each tool separately. The goal is a structured workflow: users define goals and validate progress, while agents execute tasks autonomously and request human approval at key checkpoints. This is the foundation for what some are calling an ‘Agentic Operating System’ (AOS), which aims to standardize how these agent swarms operate, manage resources, and ensure compliance.
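The structured workflow described above, where a user sets a goal, an agent executes the steps, and a human approves key checkpoints, can be sketched in a few lines. This is a minimal, hypothetical illustration: the `Step` and `Workflow` classes and the `approve` callback are names invented for this example, not the API of any real agent control plane or AOS, and each “agent action” is a stand-in function rather than a call to a model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    """One unit of agent work in a hypothetical workflow."""
    name: str
    action: Callable[[], str]      # stand-in for whatever the agent actually does
    needs_approval: bool = False   # True marks a human checkpoint before this step runs


@dataclass
class Workflow:
    """A user-defined goal broken into steps that an agent executes in order."""
    goal: str
    steps: list[Step]
    log: list[str] = field(default_factory=list)

    def run(self, approve: Callable[[Step], bool]) -> bool:
        """Execute steps in order; stop if a human rejects a checkpointed step.

        Returns True if every step ran, False if the workflow halted.
        """
        for step in self.steps:
            # Ask the human reviewer before any step flagged as a checkpoint.
            if step.needs_approval and not approve(step):
                self.log.append(f"halted before {step.name}")
                return False
            result = step.action()
            self.log.append(f"{step.name}: {result}")
        return True


if __name__ == "__main__":
    wf = Workflow(
        goal="Send a weekly sales summary",
        steps=[
            Step("collect_data", lambda: "pulled 3 reports"),
            Step("draft_summary", lambda: "draft ready"),
            Step("send_email", lambda: "sent", needs_approval=True),
        ],
    )
    # Simulated reviewer that rejects every checkpoint.
    finished = wf.run(approve=lambda step: False)
    print(finished)  # False
    print(wf.log)
```

Keeping the approval decision in a callback means the same loop could be wired to a dashboard prompt, a chat message, or an automated policy check. A production system would also need retries, persistence, and audit logging, which this sketch leaves out.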

Understanding the Role of Transformers

At the heart of this evolution are transformer models. They are the engines that let AI agents process large amounts of information, which in turn enables them to reason, plan, and interact more effectively. While most of the attention goes to how agents behave, the underlying architecture is what makes that behavior possible, and as agentic systems grow more sophisticated, the capabilities of transformer models will remain a driving force.

We’re also seeing a push toward open governance and community standards, with initiatives like the Agentic AI Foundation and contributions to open-source projects. This collaboration is expected to accelerate innovation and improve interoperability between agent systems, paving the way for production use cases in which agents communicate and work with one another.

AI’s Impact on the Economy and Workforce

Deflation of the AI Bubble and Economic Hits

Not long ago, everyone was talking about the massive surge in AI companies, with valuations going through the roof. If that sounds familiar, it should: it echoes the dot-com era, with lots of hype, heavy spending on infrastructure, and a focus on growth over actual profits. Now, as we move into 2026, there’s a growing sense that this AI bubble might be starting to deflate. That isn’t necessarily a bad thing. A gradual deflation would give the economy and investors time to adjust, and it might encourage companies to focus on practical applications rather than chasing the next big thing. We tend to get overexcited about new tech in the short term, while its real impact plays out over a longer period, so a cool-down could be healthy for the industry in the long run.

AI Literacy as a Core Workplace Skill

Forget just knowing how to use a computer; AI literacy is quickly becoming a must-have for almost everyone. It’s not just about being able to prompt a chatbot. The real skill will be knowing when to use AI and, perhaps more importantly, when not to. We’re seeing new roles emerge focused on managing AI within companies. On the flip side, some businesses might struggle if they jump into AI without also developing their employees’ judgment and critical thinking skills. The goal in 2026 isn’t just about getting AI to produce something; it’s about finding the right balance between what AI does and what humans decide.

Here’s what to look out for:

- Understanding AI’s Limits: Knowing what AI can and cannot do is key.

- Critical Evaluation: Being able to question AI outputs and spot potential biases or errors.

- Strategic Application: Deciding where AI adds the most genuine value, not just where it can automate a task.

Human-Centric Collaboration with AI Teammates

AI is moving beyond being just a tool; it’s starting to feel more like a colleague. Instead of simply asking AI to do a task, we’ll increasingly collaborate with it. That means employees need to be skilled not only at using AI but also at interpreting its suggestions, making decisions based on its input, and leading the overall process. When people and AI work together effectively, AI can amplify human abilities and free up time for creative problem-solving and strategic thinking. Organizations that train their workforce to work alongside AI will likely lead the way. The aim is to augment human potential, not replace it, and this human-AI partnership is where much of the real innovation will happen, letting us tackle challenges that were previously out of reach.

Innovations in AI Infrastructure and Hardware

Computing power is still a big deal for AI, but the conversation is getting more nuanced. We’re no longer just throwing more GPUs at the problem, though they’re definitely still the heavyweights.

The Continued Dominance of GPUs

Nvidia’s graphics processing units (GPUs) are still the go-to for a lot of AI work. They’re powerful, and a lot of the tools we use are built with them in mind. Think of them as the workhorses that keep a lot of the AI engine running. Companies are still investing heavily here because, well, it works.

Emergence of ASIC-Based Accelerators and Chiplets

But the landscape is changing. More specialized chips, called ASICs (application-specific integrated circuits), are appearing. They are designed for particular AI tasks, which makes them highly efficient at what they do. It’s like having a specialized tool instead of using a general-purpose hammer for every job. Chiplets are also gaining ground: smaller pieces of silicon that can be combined into a single, more powerful and flexible processor. This approach allows for more customization and can potentially lower costs.

New Chip Classes for Agentic Workloads

And then there’s the really cutting-edge stuff. As AI agents (systems that can act more independently) become more common, we’re starting to see demand for entirely new types of chips. These aren’t just for crunching numbers or processing images; they’re designed to handle more complex decision-making and interaction. It’s a shift from merely processing data to enabling AI to actually do things in the real world. We might even see technologies like analog inference and quantum-assisted optimizers mature, which sounds like science fiction but could lead to big leaps in efficiency and capability.

The Rise of Physical and Domain-Specific AI

It feels like we’ve been talking about AI that lives only on screens for ages. But things are starting to shift. We’re seeing a move away from just making bigger and bigger language models. Honestly, people are getting a bit tired of just scaling things up and are looking for fresh ideas. This is where AI that can actually interact with the real world, not just process text, is really starting to gain traction.

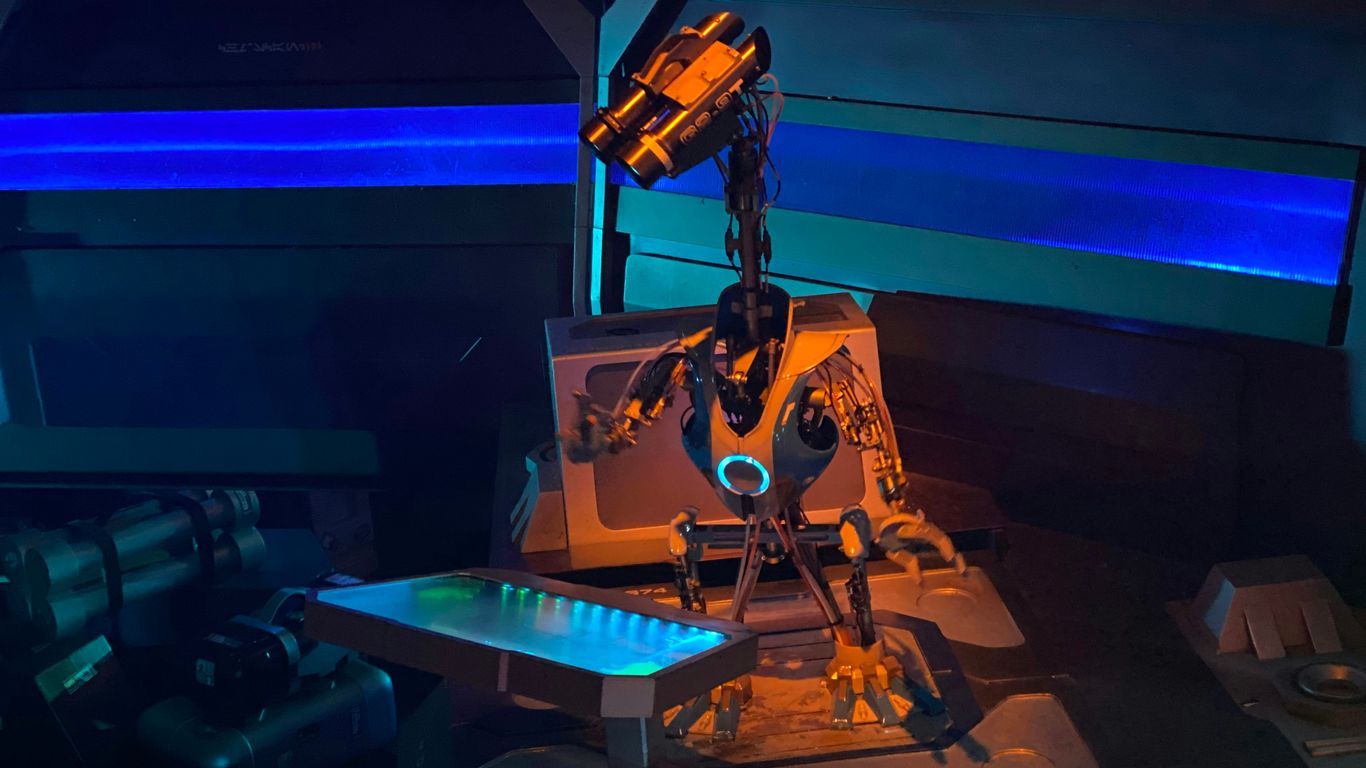

Robotics and AI in Real-World Environments

Think about it: AI that can sense its surroundings, make decisions, and then act on them. That’s the next big challenge, and it’s where a lot of innovation is headed. We’re talking about robots that can do more than just follow pre-programmed paths. They’ll be able to adapt to changing conditions, learn from their experiences, and perform complex tasks in places like factories, warehouses, or even our homes. This isn’t just about automation; it’s about creating AI that can truly collaborate with us in physical spaces.

Diminishing Returns from Scaling Large Models

For a while, it seemed like the only way to make AI better was to make the models larger: more data, more parameters, more computing power. And that did work. But now the gains from simply making models bigger are getting smaller. It’s like trying to squeeze more juice out of an orange that’s already been squeezed dry. Researchers are realizing that scaling up alone is no longer the most efficient path forward; the focus is shifting to smarter ways of building and training AI rather than just making models massive.
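To make “diminishing returns” concrete, here is a toy calculation. Published scaling-law studies have found that model loss tends to fall roughly as a power law in model size; this sketch assumes the simple form loss = A × N^(−α), with made-up constants `A = 10` and `ALPHA = 0.1` chosen only for illustration and not fitted to any real model. It measures how much the loss drops with each tenfold increase in parameter count N.

```python
# Toy power-law scaling model: loss = A * N ** -ALPHA.
# A and ALPHA are hypothetical constants for illustration only.
A = 10.0
ALPHA = 0.1


def loss(n_params: float) -> float:
    """Hypothetical loss for a model with n_params parameters."""
    return A * n_params ** -ALPHA


def gains_per_tenfold(start: float, steps: int) -> list[float]:
    """Loss reduction achieved by each successive 10x increase in model size."""
    gains = []
    n = start
    for _ in range(steps):
        gains.append(loss(n) - loss(n * 10))
        n *= 10
    return gains


if __name__ == "__main__":
    for i, gain in enumerate(gains_per_tenfold(1e9, 4)):
        size = 1e9 * 10 ** i
        print(f"{size:.0e} -> {size * 10:.0e} params: loss drops by {gain:.3f}")
```

Under these assumed constants, each tenfold jump in parameters buys about 20% less loss reduction than the previous one (the ratio between successive gains is 10^(−0.1) ≈ 0.79), even though the model gets ten times larger every time.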

Shift Towards Domain-Specific Reasoning Systems

Instead of one giant AI that tries to know everything, we’re seeing a trend towards AI systems that are really good at one specific thing. These are called domain-specific reasoning systems. Think of an AI that’s an expert in medical diagnostics, or one that’s a whiz at financial forecasting. These systems are often smaller, more efficient, and can provide more accurate results within their specialized area. They can learn from each other and retain important information over long periods, leading to more dynamic and continuously improving capabilities. This specialization means AI can be more practical and useful for particular industries and tasks.

Open Source AI and Global Competition

Diversification of Open Source AI Initiatives

The open-source AI scene is really blowing up, and it’s not just about one or two big players anymore. We’re seeing a lot more variety, with smaller, specialized models popping up that are surprisingly good at specific tasks. Think of it like this: instead of one giant toolbox that’s okay at everything, we’re getting specialized toolkits for plumbing, electrical work, and carpentry. This means companies can grab a model that’s already pretty good for their specific needs, like IBM’s Granite or AI2’s Olmo 3, and then fine-tune it even further. It’s making AI more accessible and practical for businesses that don’t need a massive, general-purpose model.

Nvidia’s Role in Driving Open Ecosystems

Nvidia is playing a pretty interesting role here. Since their business relies on everyone using their GPUs, they’re actually pushing for more open systems. It makes sense, right? The more people are building and using AI, the more GPUs they’ll need. They’re helping to create these open environments where different AI projects can work together. This is especially important as AI starts moving beyond just screens and into the real world, like in robotics. Collaboration is key to making that happen smoothly.

Collaboration Accelerating AI’s Physical Integration

As AI gets more involved in physical tasks, like controlling robots or managing complex industrial processes, open source becomes even more important. You can’t have a situation where only one company controls how robots interact with the world. Open standards and shared development mean that AI can be integrated into physical systems more reliably and safely. This collaboration is what’s going to speed up how quickly we see AI working in factories, warehouses, and even our homes. The future of AI isn’t just about smarter software; it’s about AI interacting with the physical world, and open source is the glue holding that integration together.

Leadership and Governance in the Age of AI

So, AI is everywhere now, right? It’s not just a tech thing anymore; it’s become a big deal for how companies and even governments operate. The big question for 2026 isn’t just what AI can do, but how we can actually use it without causing a mess. Leaders are realizing that just jumping on the AI bandwagon isn’t enough. We need to think about the real-world impact, like jobs, fairness, and who’s really in charge.

Managing AI Adoption as Human Transformation

It’s easy to get caught up in the technology itself, but the real challenge is how AI changes the people in an organization. Think of it less like installing new software and more like a big shift in how everyone works. Companies that are doing this well aren’t just training people on how to use AI tools. They’re thinking about how AI changes job roles, how teams collaborate, and what skills people will need to stay relevant. It’s about helping everyone adapt to working alongside these new systems.

- Focus on people first: Before rolling out new AI, consider how it affects your employees’ daily tasks and career paths.

- Build AI literacy broadly: Don’t just train a few tech experts. Make sure everyone understands the basics of AI, its potential, and its limits.

- Redefine roles: As AI takes over certain tasks, think about how human roles can evolve to focus on creativity, critical thinking, and complex problem-solving.

Bridging Teams Through Empathy and Structure

When AI starts working alongside humans, it can create a disconnect between teams: some might be all-in on AI, while others are hesitant. Good leadership means finding ways to bring these groups together. This involves understanding people’s concerns, providing clear guidance, and setting up structures that make collaboration smooth. It’s about making sure everyone feels heard and supported, even as the technology changes things.

Addressing Trust and Governance Challenges

This is a big one. As AI gets more powerful, people want to know it’s being used responsibly. That means being clear about how AI makes decisions, especially when those decisions have a big impact. Companies are starting to realize they need to build AI systems that can explain themselves, with no more black boxes. Then there’s AI sovereignty: making sure companies aren’t overly reliant on outside providers for their AI needs, since that dependence can bring risks like data breaches or loss of control over intellectual property. Establishing clear rules and oversight for AI is no longer optional; it’s a requirement for long-term success.

Here’s a look at how some companies are thinking about AI leadership:

| Area of Focus | Key Considerations |
|---|---|
| AI Oversight | Who is responsible for AI strategy and implementation? (e.g., a Chief AI Officer) |
| Data & AI Alignment | How does AI integrate with existing data management and governance structures? |
| Risk Management | What are the potential risks (bias, security, job displacement), and how can they be mitigated? |
| Transparency | How can AI decision-making be made understandable to users and regulators? |
| Employee Adaptation | How are employees being trained and supported through AI integration? |

Wrapping Up: What’s Next for AI?

So, looking ahead to 2026, it’s clear AI isn’t just a fleeting trend. We’re seeing a real shift from just playing around with AI tools to figuring out how to make them work for entire companies, not just individuals. Think less about AI writing your emails and more about it helping with big projects like managing supplies or developing new products. Plus, it seems like AI is going to feel more like a teammate than just a piece of software. It’s going to get better at understanding what we need and helping us get things done, even complex tasks. But, it’s not all about the tech; how we humans work with it, learn about it, and lead through these changes is going to be super important. It’s going to be an interesting year for sure.

Frequently Asked Questions

What’s the big idea with AI in businesses for 2026?

Instead of just helping people do their regular jobs a little faster, like writing emails, AI will be used for bigger, more important tasks. Think of AI helping manage how products get made and sent out, or helping scientists invent new things. Companies want AI to help with major projects, not just small daily tasks.

Will AI take over all our jobs?

It’s more about AI becoming a partner. While some tasks might be done by AI, it also means new jobs will pop up. The important thing for people will be to learn how to work well with AI, like a teammate. Being good at things AI can’t do, like being creative or understanding people, will be super valuable.

Is AI getting too hyped up, like a bubble?

Some parts of AI, especially ‘agentic AI’ (AI that can act on its own), have been heavily hyped, and the broader AI investment boom may be starting to deflate. But even with the hype, agentic AI is getting better and will likely become genuinely useful for solving complex problems over the next few years.

What kind of computer parts will AI use?

Powerful computer chips called GPUs will still be very important. But we’ll also see new types of chips designed specifically for AI tasks, like special chips called ASICs and smaller, modular chip designs called chiplets. These new chips will help AI do even more complex jobs, especially those that need AI to act independently.

Will AI just be for computers, or will it be in the real world too?

AI is moving beyond screens. We’ll see more AI in robots and other physical things that can interact with the real world, like sensing and moving. Also, instead of making one giant AI model for everything, companies are focusing on making AI that’s really good at specific jobs or areas, like helping doctors or managing traffic.

How will companies manage all this AI stuff?

It’s not just about the technology; it’s about how people change and work with AI. Leaders need to help their teams understand and use AI, making sure everyone feels included and trusts the AI systems. It’s about guiding people through these changes with understanding and clear plans, not just focusing on the technical side.