Hey everyone! Ever wonder what makes your computer tick behind the scenes? We’re going to talk about chipset architecture, which is basically the traffic cop for all the data moving around your motherboard. It’s changed a lot over the years, from big separate chips to being built right into your CPU. Let’s break down how this stuff works and why it matters for your PC.

Key Takeaways

- Chipset architecture used to be split into two main chips: the Northbridge for fast stuff like the CPU and RAM, and the Southbridge for slower peripherals like USB drives.

- Over time, many functions of the Northbridge, like memory control and graphics, got moved directly into the CPU itself to speed things up.

- Modern systems often use a single chip, like Intel’s Platform Controller Hub (PCH) or AMD’s equivalent, which handles the Southbridge tasks.

- The chipset still plays a big role in what ports and features your motherboard has, like how many USB ports or what kind of storage you can use.

- Intel and AMD are the main players making chipsets today, and they design them to work with their specific CPUs, influencing things like compatibility and performance.

The Evolution of Chipset Architecture

Back in the day, building a computer felt a bit like assembling a complex puzzle, and the chipset was a big part of that. It was essentially the traffic cop for all the data zipping around your motherboard. Think of it as the central nervous system, making sure the brain (CPU) could talk to everything else – the memory, the graphics card, your hard drives, and all those USB devices you plug in.

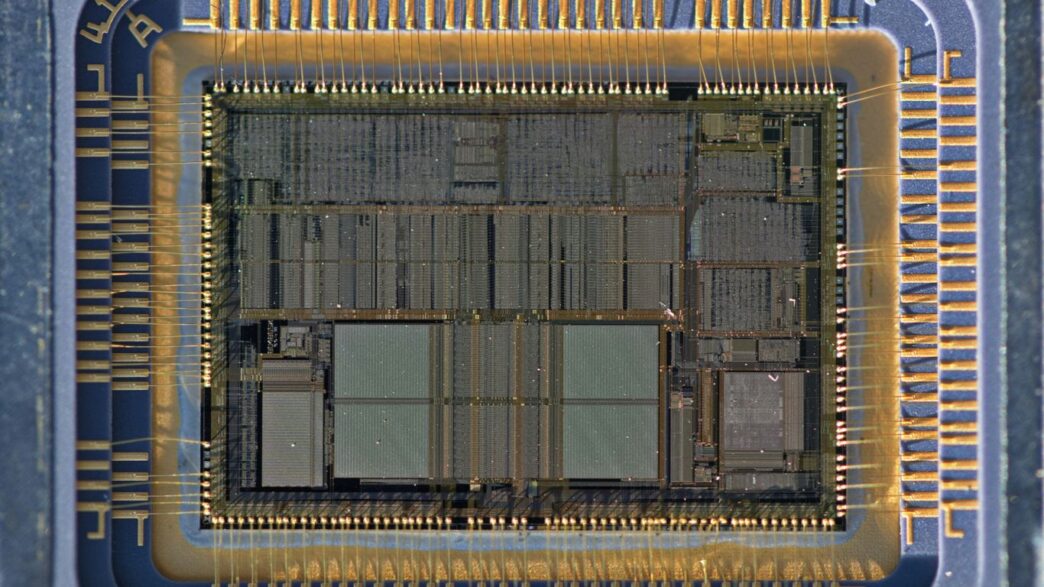

Understanding the Northbridge Role

The Northbridge was the high-speed highway of this system. It handled the really fast stuff, like talking directly to the CPU and managing the connection to your RAM and graphics card. Because these components needed to communicate so quickly, the Northbridge was designed for maximum speed. It was pretty much the gatekeeper for the most performance-critical parts of your system. Its primary job was to bridge the gap between the CPU and the high-bandwidth components.

The Emergence of the Southbridge

While the Northbridge was busy with the fast lane, the Southbridge took care of the rest. This chip managed slower, but still important, connections. Think about your USB ports, your audio chip, your network card, and your storage drives (like older SATA hard drives). The Southbridge handled all these peripheral and I/O (Input/Output) tasks. It wasn’t as fast as the Northbridge, but it was essential for making your computer actually useful for everyday tasks.

Early Chipset Integration Trends

For a long time, motherboards had separate chips for the Northbridge and Southbridge. This was necessary because putting everything onto a single piece of silicon was really difficult and expensive. However, as technology got better, manufacturers started looking for ways to combine these functions. The first big step was integrating some of the Northbridge’s duties directly into the CPU itself, especially the memory controller. This cut down on the distance data had to travel, making things faster. Later, even graphics functions started getting baked into the CPU. This trend towards integration meant fewer chips on the motherboard, which could save space and power, especially in laptops, and eventually led to the modern designs we see today.

Core Functions of Traditional Chipsets

Back in the day, motherboards had a pretty clear division of labor when it came to their main chips. Think of it like a company with two main departments: one handling the really fast, important stuff, and the other managing all the day-to-day operations and connections.

Northbridge: High-Speed Communication Hub

The Northbridge was basically the VIP lounge of the motherboard. Its main job was to connect the CPU to the things that needed the absolute fastest communication. This primarily meant the system RAM (your computer’s working memory) and the graphics card. Because these components are so critical for performance, the Northbridge had a direct, high-speed link to the CPU, often called the Front-Side Bus (FSB). Every memory and graphics access had to cross that link, so the speed of the Northbridge and its FSB put a ceiling on how fast the whole system could run. If you wanted a fast computer, a good Northbridge was key.

Southbridge: Peripheral and I/O Management

If the Northbridge was the VIP lounge, the Southbridge was the busy reception and mailroom. It handled all the slower, but still necessary, connections. This included things like:

- Storage devices (hard drives and early SSDs via SATA)

- USB ports for your keyboard, mouse, and other accessories

- Audio controllers

- Networking interfaces (like Ethernet)

- Legacy ports (like PS/2 for keyboards/mice, though those are pretty rare now)

The Southbridge didn’t talk directly to the CPU at the same super-high speed as the Northbridge. Instead, it communicated with the Northbridge, which then relayed information to the CPU. This two-step process was fine for these less demanding peripherals.

Inter-Bridge Communication Dynamics

The way these two chips talked to each other was pretty straightforward. The CPU would send instructions or data requests. If it was for RAM or the graphics card, the Northbridge handled it directly. If it was for a USB device or a hard drive, the Northbridge would pass that request down to the Southbridge. The Southbridge would then manage the communication with the specific peripheral and send the data back up through the Northbridge to the CPU. This setup worked for a long time, but as computers got faster, the communication between the Northbridge and Southbridge, and even between the Northbridge and CPU, started to become a bit of a traffic jam. That’s what eventually led to changes in how chipsets were designed.
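To make that two-step routing concrete, here is a minimal Python sketch of the classic layout. The `Target` names, `request_path`, and `round_trip` are hypothetical helpers invented for illustration; they only model which chips a request crosses, not how real hardware arbitrates traffic.

```python
from enum import Enum


class Target(Enum):
    RAM = "ram"
    GRAPHICS = "graphics"
    USB = "usb"
    STORAGE = "storage"
    AUDIO = "audio"
    NETWORK = "network"


# Devices the Northbridge serves directly; everything else sits behind the Southbridge.
NORTHBRIDGE_TARGETS = {Target.RAM, Target.GRAPHICS}


def request_path(target: Target) -> list[str]:
    """Return the chips a CPU request crosses on its way to `target`."""
    path = ["CPU", "Northbridge"]
    if target not in NORTHBRIDGE_TARGETS:
        path.append("Southbridge")
    path.append(target.value)
    return path


def round_trip(target: Target) -> list[str]:
    """Request path followed by the reply retracing the same chips back to the CPU."""
    outbound = request_path(target)
    return outbound + outbound[-2::-1]


for t in (Target.RAM, Target.USB):
    print(" -> ".join(round_trip(t)))
```

Notice that every path, even one headed for a USB device, goes through the Northbridge. That shared middle hop is the traffic jam described above.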

The Shift Towards Integrated Designs

Remember when motherboards looked like a highway with a bunch of separate chips connected by tiny roads? For a long time, that’s how it was, with the Northbridge and Southbridge acting as these distinct traffic controllers. But things started to change, and honestly, it was a pretty big deal. The main idea was to simplify things, cut down on costs, and make everything run faster.

Memory Controller Integration into CPUs

One of the first big moves was pulling the memory controller right onto the CPU itself. Before this, the Northbridge was responsible for talking to the RAM. This meant data had to travel from the CPU, to the Northbridge, and then to the RAM. That’s a lot of steps, right? By putting the memory controller directly on the CPU die, like AMD did with their K8 processors and Intel later with their Nehalem chips, the path got a whole lot shorter. This reduction in distance significantly cut down on latency, making memory access much quicker. It’s kind of like having a direct phone line instead of going through a switchboard.
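Here is a rough sketch of the arithmetic behind that shorter path. The per-hop numbers are made up purely to show the idea and are not measurements of any real platform; `HOP_LATENCY_NS` and `path_latency_ns` are hypothetical names.

```python
# Illustrative per-hop costs in nanoseconds. These are invented values chosen
# only to show the arithmetic, not measurements of any real platform.
HOP_LATENCY_NS = {
    ("CPU", "Northbridge"): 20.0,  # front-side bus crossing (assumed)
    ("Northbridge", "RAM"): 50.0,  # off-die memory controller to DRAM (assumed)
    ("CPU", "RAM"): 50.0,          # on-die memory controller to DRAM (assumed)
}


def path_latency_ns(path: list[str]) -> float:
    """Sum the one-way cost of each consecutive hop along `path`."""
    return sum(HOP_LATENCY_NS[(a, b)] for a, b in zip(path, path[1:]))


legacy = path_latency_ns(["CPU", "Northbridge", "RAM"])
integrated = path_latency_ns(["CPU", "RAM"])
print(f"legacy: {legacy} ns, integrated: {integrated} ns, saved: {legacy - integrated} ns")
```

Whatever the real figures are on a given system, the point is the same: removing a hop removes its cost from every single memory access.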

Graphics Controller Integration

Following the memory controller, graphics processing started getting integrated too. Initially, this meant the Northbridge might have had built-in graphics capabilities, or a separate graphics chip would connect directly to it. But then, we saw graphics processing units (GPUs) start appearing on the same package or even the same die as the CPU. This was a huge step, especially for laptops and mainstream desktops, as it meant you didn’t always need a separate, bulky graphics card for basic display output. Think of Intel’s Sandy Bridge architecture, which put the graphics and the memory controller on the same die as the CPU cores. It really streamlined the whole system.

The Rise of the Platform Controller Hub (PCH)

As more and more functions moved from the traditional Northbridge and Southbridge chips directly into the CPU, the old chipset structure became less relevant. Intel, in particular, started consolidating the remaining I/O functions. They introduced the Platform Controller Hub (PCH) to handle tasks that were previously managed by the Southbridge, and sometimes even some Northbridge duties. This PCH typically connects to the CPU via a high-speed link, like Intel’s Direct Media Interface (DMI). AMD made a similar move, calling its version the Fusion Controller Hub (FCH). This shift meant fewer chips on the motherboard, simpler board designs, and often, better power efficiency. It was a move away from the distinct Northbridge/Southbridge setup towards a more centralized, CPU-centric design.

Modern Chipset Architecture Explained

So, things have changed a lot from the old Northbridge/Southbridge days. You know, back when those two chips were the main traffic cops on the motherboard? Now, a lot of what they used to do is handled differently, mostly by the CPU itself. This shift really started to pick up steam because, well, faster is better, right? And having the CPU talk directly to memory and graphics cards cuts down on delays.

CPU-Centric Chipset Functions

Think of the CPU as the star player now. It’s got its own built-in memory controller. This means it can access your RAM way faster than before, which is a big deal for everything you do on your computer. Plus, the main graphics card slot (the one that gets the most attention) is usually wired directly to the CPU too. That direct connection means graphics traffic skips the extra hop through the chipset, which keeps latency down and leaves the chipset’s link free for everything else.

The Role of the Modern Southbridge

What’s left for the chipset then? It’s mostly taken over the duties of the old Southbridge. This is where all the other stuff connects. We’re talking about your USB ports, your SATA connections for hard drives and SSDs, your Ethernet port, and Wi-Fi. It’s still super important for making sure all your peripherals can talk to the rest of the system.

Here’s a quick rundown of what the modern chipset typically manages:

- Connectivity: This includes the number and speed of USB ports (like USB 3.2 and even USB4), Thunderbolt ports, and networking interfaces (like 2.5Gb or 10Gb Ethernet).

- Storage Interfaces: While the CPU might handle the fastest NVMe SSDs directly, the chipset often provides additional SATA ports for older drives or more M.2 slots.

- Expansion Slots: It manages the lanes for secondary PCIe slots, which are useful for sound cards, capture cards, or extra storage.

- Platform Management: This includes things like power management (such as sleep states) and supporting the boot-up sequence.
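One practical consequence of this split is that everything hanging off the chipset shares a single link back to the CPU. The sketch below models that with a hypothetical board layout; the device list, the demand figures, and the uplink capacity are all assumptions chosen for illustration, so check your own board’s manual for the real wiring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    attached_to: str      # "cpu" or "chipset"
    peak_gb_per_s: float  # hypothetical peak demand, gigabytes per second


# Hypothetical board layout and demand figures; real boards differ by model.
DEVICES = [
    Device("graphics card (primary PCIe slot)", "cpu", 31.5),
    Device("primary NVMe SSD", "cpu", 7.0),
    Device("secondary NVMe SSD", "chipset", 7.0),
    Device("SATA SSD", "chipset", 0.6),
    Device("USB 3.2 Gen 2 port", "chipset", 1.2),
    Device("2.5Gb Ethernet", "chipset", 0.3),
]

# Assumed capacity of the CPU-to-chipset link (roughly eight PCIe 4.0 lanes).
UPLINK_GB_PER_S = 15.75


def chipset_demand(devices: list[Device]) -> float:
    """Total peak demand of everything that sits behind the chipset."""
    return sum(d.peak_gb_per_s for d in devices if d.attached_to == "chipset")


def uplink_oversubscribed(devices: list[Device], uplink: float = UPLINK_GB_PER_S) -> bool:
    """True if chipset-attached devices could together exceed the uplink."""
    return chipset_demand(devices) > uplink


demand = chipset_demand(DEVICES)
print(f"chipset-side peak demand: {demand:.1f} GB/s of {UPLINK_GB_PER_S} GB/s")
print("oversubscribed:", uplink_oversubscribed(DEVICES))
```

In everyday use, devices rarely all peak at once, so some oversubscription is normal. It only starts to matter if you hammer several chipset-attached drives and ports at the same time.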

Chiplet Design and Connectivity

Some manufacturers are getting creative with how they build these chips. Instead of one giant piece of silicon, they’re using smaller, specialized chips called "chiplets." These are then put together to create the final chipset. This approach can be more efficient and allows for more flexibility in designing different configurations. For example, AMD’s high-end chipsets on the AM5 platform, such as X670 and X670E, use a two-chip design. This basically means they’ve linked two smaller chips together, one behind the other, to give you more connectivity options without having to make one massive, complex chip. It’s a clever way to pack in more features and bandwidth for things like:

- More high-speed USB ports.

- Support for the latest Wi-Fi standards.

- Additional PCIe lanes for storage or other add-in cards.

Key Considerations in Chipset Selection

So, you’ve picked out a shiny new CPU, maybe even a fancy graphics card. But wait, there’s another piece of the puzzle that really matters: the chipset. It’s not just some random collection of chips; it’s basically the traffic cop for your motherboard, deciding what talks to what and how fast.

CPU Compatibility and Chipset Tiers

First things first, you can’t just slap any CPU into any motherboard. Intel CPUs generally need Intel chipsets, and AMD CPUs need AMD chipsets, and even within one brand the CPU has to match the motherboard’s socket and supported generation. It’s like trying to put a Ford engine in a Chevy: it just doesn’t work without some serious (and usually impossible) modifications. Beyond compatibility, there are different tiers of chipsets. Think of it like car trim levels. You’ve got your basic models, your mid-range options, and then the top-of-the-line performance versions. For Intel, recent desktop lineups run from H610 at the entry level, through B-series for everyday use and H770 for a bit more, up to Z-series for the enthusiasts who want all the bells and whistles, like overclocking. AMD has similar tiers, with A-series at the entry level, B-series in the middle, and X-series for high-end performance. Choosing the right tier is important because a high-end CPU paired with a low-end chipset might not be able to reach its full potential, kind of like having a sports car with economy tires.

Connectivity and Expansion Capabilities

This is where the chipset really shows its colors. It dictates how many things you can plug into your computer and how fast they’ll run. We’re talking about:

- USB Ports: How many? What speed? Are we talking USB 3.2 Gen 2×2, or maybe even Thunderbolt 4 or USB4? The chipset determines this.

- Storage Options: How many SATA ports for traditional hard drives or SSDs? How many M.2 slots for super-fast NVMe SSDs, and what PCIe generation do they support (PCIe 4.0, 5.0)?

- PCIe Lanes: These are like highways for data. More lanes mean more devices can run at high speeds simultaneously, which is great for multiple graphics cards or lots of fast storage.

- Networking: Does it have built-in Wi-Fi (and what standard, like Wi-Fi 6E or 7)? What about Ethernet speeds (1Gb, 2.5Gb, 10Gb)?
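If you want to turn "PCIe generation times lane count" into an actual number, the math is simple: per-lane transfer rate, times encoding efficiency, divided by eight bits per byte, times the number of lanes. The sketch below uses the published transfer rates for PCIe 3.0, 4.0, and 5.0; the function and table names are made up for this example, and the result is a theoretical ceiling per direction before protocol overhead.

```python
# generation -> (transfer rate in GT/s per lane, encoding efficiency)
# PCIe 3.0 and later use 128b/130b encoding.
PCIE_GEN = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gb_per_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    rate_gt_per_s, efficiency = PCIE_GEN[generation]
    return rate_gt_per_s * efficiency / 8 * lanes


for gen, lanes in [(3, 4), (4, 4), (5, 4), (5, 16)]:
    print(f"PCIe {gen}.0 x{lanes}: {pcie_bandwidth_gb_per_s(gen, lanes):.2f} GB/s")
```

This is why an M.2 slot’s PCIe generation matters as much as its existence: the same four lanes carry twice the data with each generation.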

Performance Implications for Overclocking

If you’re the type of person who likes to push your hardware to its limits, the chipset is a big deal. Not all chipsets are created equal when it comes to overclocking. Intel generally reserves CPU overclocking for its Z-series chipsets, while AMD allows it on both its B-series and X-series. Motherboards built around the higher-end chipsets also tend to come with better power delivery, meaning more robust VRMs (Voltage Regulator Modules), to handle the extra heat and power demands when you crank up the CPU or RAM speeds. The VRMs are part of the board, not the chipset, so quality varies from model to model. Cheaper chipsets might lock you out of overclocking features entirely, and budget boards might not be stable enough to handle sustained high-performance loads. So, if tweaking your system for every last bit of speed is your jam, make sure both the chipset and the board you choose support it.
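As a quick sanity check before buying, you could encode the general pattern in a small lookup. This is a rule-of-thumb sketch for recent consumer desktop chipsets only: the table and function names are hypothetical, series not listed are deliberately rejected rather than guessed, and the CPU itself also has to be unlocked, so always confirm against the specific board and processor.

```python
# Rule-of-thumb table for recent consumer desktop chipsets (an assumption for
# illustration; confirm against the specific motherboard and CPU).
CPU_OVERCLOCKING = {
    ("intel", "Z"): True,
    ("intel", "B"): False,
    ("intel", "H"): False,
    ("amd", "X"): True,
    ("amd", "B"): True,
    ("amd", "A"): False,
}


def chipset_series(model: str) -> str:
    """Take the leading letter of a model name such as 'Z790' or 'B650'."""
    return model.strip()[0].upper()


def allows_cpu_overclocking(vendor: str, model: str) -> bool:
    """Look up whether the chipset tier typically permits CPU overclocking."""
    key = (vendor.strip().lower(), chipset_series(model))
    if key not in CPU_OVERCLOCKING:
        raise KeyError(f"no rule of thumb for {vendor} {model}")
    return CPU_OVERCLOCKING[key]


for vendor, model in [("Intel", "Z790"), ("Intel", "B760"), ("AMD", "B650"), ("AMD", "A620")]:
    print(vendor, model, "->", allows_cpu_overclocking(vendor, model))
```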

Chipset Manufacturers and Market Landscape

Historical Third-Party Chipset Players

Back in the day, the motherboard scene was a lot more crowded. You had companies like VIA, SiS, and even ATI (before they got bought by AMD) making chipsets that competed with the big guys. NVIDIA also had a hand in it for a while, not just with graphics cards but with their nForce series of chipsets. It was a time when you had more choices, and these companies often tried to differentiate themselves with unique features or performance tweaks. These third-party players really pushed innovation, forcing Intel and AMD to step up their game. It made building a PC a bit more interesting, trying to figure out which chipset offered the best balance for your needs.

The Intel and AMD Duopoly

Fast forward to today, and things have really shaken out. The market for x86 processors and their accompanying chipsets is pretty much a two-horse race between Intel and AMD. If you’re building an Intel system, you’re going to be looking at Intel chipsets, and for AMD processors, you’ll need an AMD chipset. This consolidation means that the features and capabilities you get are largely dictated by what these two giants decide to offer. They control the compatibility, the I/O options, and even the overclocking potential. It’s simpler in a way, but it also means less variety for consumers who might have preferred a different manufacturer’s approach.

Specialized Chipsets for Different Platforms

It’s not just about desktop PCs anymore, either. Chipsets are tailored for specific jobs. For servers and workstations, you’ll find platforms built for massive amounts of RAM, often with error correction (ECC memory), paired with chipsets that offer tons of connectivity for storage arrays using things like SAS instead of just SATA. Laptops, on the other hand, often have chipsets so integrated into the CPU itself (think System on Chip, or SoC) that the term "chipset" almost doesn’t apply in the traditional sense; it’s all about saving space and power. Then there are specialized platforms, like those for embedded systems or high-performance computing, each with its own set of chipset requirements focusing on reliability, specific I/O, or extreme processing power.

Wrapping It Up

So, we’ve seen how the old Northbridge and Southbridge setup worked, basically managing all the traffic between the CPU and the rest of your computer parts. It was a necessary way to build things back then. But as computers got faster, designers found ways to put more of that work directly onto the CPU itself, or into a single chip that handles the input and output. This whole shift means things are generally quicker and more efficient now. While you don’t really see separate Northbridge chips anymore, understanding that history helps explain why modern motherboards and CPUs are designed the way they are today. It’s all about making your computer run as smoothly as possible.

Frequently Asked Questions

What is a chipset and why was it important?

Think of a chipset as the traffic director for your computer’s motherboard. It helps all the different parts, like the CPU (the brain), memory (short-term memory), and other devices, talk to each other smoothly. In older computers, this job was split between two main chips: the Northbridge and the Southbridge. The Northbridge handled the fast stuff, like talking to the CPU and graphics card, while the Southbridge managed slower devices like your keyboard and hard drive.

What happened to the Northbridge?

The Northbridge used to be super important for managing how fast the CPU could talk to the computer’s memory and graphics card. But as CPUs got much faster, it became a bottleneck. To speed things up, companies started putting the Northbridge’s jobs, like controlling the memory, directly onto the CPU itself. This made communication much quicker because the CPU didn’t have to go through a separate chip anymore.

What does the Southbridge do now?

Even though the Northbridge’s main jobs moved to the CPU, the Southbridge is still around, though its name has changed. Now, it’s often called the Platform Controller Hub (PCH) or something similar. It still handles the slower connections and input/output tasks, like managing USB ports, audio, network connections, and storage drives. It’s the part that lets you plug in your mouse, keyboard, and other everyday devices.

Are all chipsets the same?

Not at all! Chipsets are like different levels or classes of motherboards. Some chipsets are designed for basic computers, while others are made for high-performance gaming or professional workstations. The chipset determines how many things you can connect, how fast they can run, and even if you can ‘overclock’ your CPU (make it run faster than its normal speed).

Who makes chipsets?

In the past, several companies made chipsets. But today, it’s mostly a two-horse race. Intel makes chipsets for Intel CPUs, and AMD makes chipsets for AMD CPUs. They design these chipsets to work best with their own processors, controlling everything from how many USB ports you get to whether you can overclock your CPU.

What is a ‘chiplet’ design?

Chiplets are a newer way of building powerful processors and chipsets. Instead of making one giant chip, companies break down the functions into smaller, specialized chips (chiplets). These chiplets are then combined together. For example, AMD links two smaller chips together for its high-end AM5 chipsets, allowing them to offer a lot of connections and features without needing one massive, hard-to-make chip.