Getting Started with `from google import genai`

So, you’re looking to jump into generative AI, huh? It’s a pretty exciting field right now, and getting started is easier than you might think, especially with the Google Gen AI SDK, which you load in Python with `from google import genai`.

Understanding Generative AI Fundamentals

At its core, generative AI is about teaching computers to create new stuff. Think of it like teaching an artist to paint or a writer to craft a story, but with data. These AI models learn from massive amounts of existing information – text, images, code, you name it – and then use that knowledge to produce something entirely original. It’s not just about recognizing patterns; it’s about using those patterns to generate novel content. This technology is what powers things like chatbots that can hold a conversation, tools that can write articles, or even programs that can create realistic images from a simple description.

Exploring Google’s Generative AI Offerings

Google has been putting a lot of effort into generative AI, and they’ve got some powerful tools available. One of the big names you’ll hear about is Gemini. Gemini models are designed to be multimodal, meaning they can understand and work with different types of information all at once – text, images, audio, and video. This makes them really flexible for all sorts of tasks. You can experiment with these models through platforms like Vertex AI Studio, which gives you a place to test out prompts and see what the AI can do.

Setting Up Your Development Environment

Before you can start coding, you’ll need to get your development environment ready. This usually involves a few key steps:

- Install the necessary libraries: You’ll need to install the Google Gen AI SDK. This is typically done using a package manager like pip. For example, you might run `pip install google-genai`, which is the package that provides `from google import genai`. (The older `google-generativeai` package is a different library, imported as `import google.generativeai as genai`.)

- Get an API key: To use Google’s AI services, you’ll need an API key. You can usually obtain this from Google AI Studio or the Google Cloud console. Keep this key secure, as it’s your access pass.

- Configure your code: Once you have the library installed and your API key, you’ll write a small piece of code to initialize the SDK with your key. This sets up the connection so your program can talk to the AI models.

It might sound like a lot, but these initial steps are pretty straightforward and set you up for all the cool things you can build.
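The three steps above can be sketched in a few lines of Python. This is a minimal sketch, assuming the `google-genai` package is installed and the key is stored in an environment variable. The variable name `GEMINI_API_KEY` and the helper names `read_api_key` and `make_client` are illustrative choices, not something the SDK requires.

```python
import os


def read_api_key(env=os.environ, var_name="GEMINI_API_KEY"):
    """Return the API key from the environment, or fail with a clear message."""
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set {var_name} before running this script.")
    return key


def make_client(api_key):
    """Create the client object your program uses to talk to the models."""
    # Imported here so the rest of the file still loads if the SDK is missing.
    from google import genai  # provided by `pip install google-genai`

    return genai.Client(api_key=api_key)


# Typical use (needs a real key and network access):
# client = make_client(read_api_key())
```

Reading the key from the environment rather than pasting it into the source keeps it out of version control, which is the easiest way to follow the "keep this key secure" advice.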

Core Concepts of Generative AI

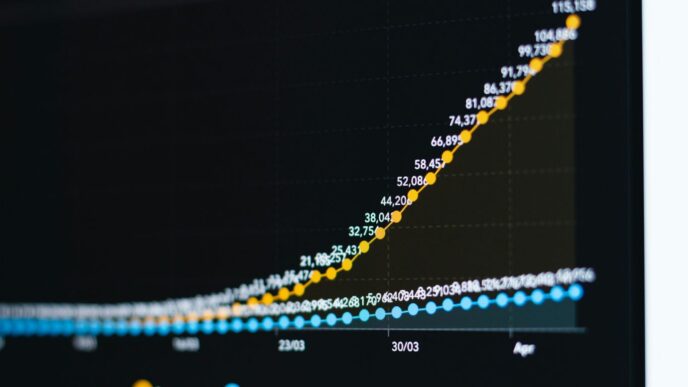

So, what exactly is generative AI? At its heart, it’s a branch of machine learning focused on creating new content. Think of it like teaching a computer to be an artist or a writer, but instead of paint or words, it works from data. The best-known examples are Large Language Models, or LLMs: very large models that are good at understanding and producing human-like text. But it’s not just about text anymore.

What are Large Language Models (LLMs)?

LLMs are the workhorses behind a lot of generative AI. They’re trained on massive amounts of text data, which allows them to grasp grammar, facts, reasoning styles, and even different writing tones. This training lets them do things like answer questions, summarize long documents, translate languages, and write different kinds of creative content. The bigger the model and the more data it sees, generally the more capable it becomes.

The Power of Multimodal Models

While LLMs are text-focused, many generative AI models can now handle more than just words. These are called multimodal models. Imagine a model that can look at a picture and describe it, or watch a video and tell you what’s happening. Gemini, for example, is a family of models that can work with text, images, audio, and video all at once. This opens up a whole new world of possibilities for how we interact with AI, allowing for richer and more complex applications.

Key Capabilities for Useful Content Generation

For generative AI to be truly helpful, it needs a few key abilities:

- Understanding Instructions: The model needs to accurately interpret what you’re asking it to do, whether it’s a simple question or a complex creative brief.

- Accessing External Information: While trained on a lot of data, models don’t know everything. They need ways to look up current or specific information, like product details for a customer service bot, to give accurate and up-to-date answers.

- Generating Safe Content: AI can sometimes produce unexpected or inappropriate output. Having built-in safety filters is important to block harmful content and ensure responsible use.

- Adaptability: Models should be able to be adjusted or

Leveraging Gemini Models with `from google import genai`

So, you’ve imported `genai` with `from google import genai`. What’s next? It’s time to actually use something, and that’s where Google’s Gemini models come in. Think of Gemini as Google’s latest and greatest AI brain. It’s designed to be super flexible, handling all sorts of information – text, images, even video and code. This means you can ask it questions or give it tasks that involve more than just words.

Introducing the Gemini Family of Models

Google has put together a whole family of Gemini models. The most advanced one, Gemini 3, is pretty impressive. It’s built to understand and work with different types of data all at once. This is what we call ‘multimodal’. So, you could show it a picture and ask it to describe what’s happening, or give it some code and ask it to explain it. It’s not just about spitting out text; it’s about understanding context across different formats.
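In code, a multimodal request might look like the sketch below: the image bytes and the question travel together in one `contents` list. It assumes a `client` created with `genai.Client(...)`. The helper names and any file path you pass in are hypothetical, and the model name is left as a parameter because the list of available models changes over time.

```python
import mimetypes


def guess_image_mime_type(path):
    """Work out the image MIME type from the file extension."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"{path} does not look like an image file")
    return mime


def describe_image(client, path, question, model):
    """Send an image plus a text question in a single request."""
    # Imported lazily so the MIME helper above works without the SDK.
    from google.genai import types

    with open(path, "rb") as f:
        data = f.read()
    image_part = types.Part.from_bytes(
        data=data, mime_type=guess_image_mime_type(path)
    )
    response = client.models.generate_content(
        model=model, contents=[image_part, question]
    )
    return response.text
```

Calling something like `describe_image(client, "photo.jpg", "What is happening in this picture?", model=...)` would return the description as plain text.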

Interacting with Gemini via Vertex AI Studio

Now, how do you actually talk to Gemini? Google provides a tool called Vertex AI Studio. It’s like a playground where you can test out Gemini. You can type in your requests (we call these ‘prompts’), upload images, or even provide code snippets to see how Gemini responds. It’s a really hands-on way to get a feel for what the models can do. You can try out pre-made examples, like asking Gemini to pull text out of an image or convert image details into a structured format like JSON. It’s a good place to start experimenting without needing to write a ton of code right away.

Utilizing Gemini’s Advanced Reasoning

What really sets Gemini apart is its reasoning ability. It’s not just pattern matching; it can actually ‘think’ through problems a bit. This means you can use it for more complex tasks. For instance, you could feed it a series of documents and ask it to summarize the key arguments, or give it a scenario and ask it to predict potential outcomes. The more specific and clear your prompt, the better Gemini can apply its reasoning skills to give you a useful answer. It’s this advanced capability that lets developers build some pretty neat next-generation AI applications.

Prompt Engineering for Effective AI Interaction

So, you’ve got `from google import genai` all set up, and you’re ready to start making some AI magic happen. But how do you actually talk to these AI models to get them to do what you want? That’s where prompt engineering comes in. Think of it like giving really clear instructions to a super-smart, but sometimes literal, assistant.

Crafting Natural Language Instructions

At its heart, prompt engineering is about writing instructions, or ‘prompts’, in plain language. These prompts are what you send to the AI model to get it to generate a response. It might sound simple, but the way you phrase things can make a huge difference in the output you get back. A prompt can include text, images, or even video, depending on what the model can handle.

Here’s a basic idea of how it works:

- Your Input (The Prompt): You tell the AI what you need. For example, "Write a short poem about a cat watching rain."

- The AI Model: It takes your prompt and uses its training to create a response.

- The Output: The AI generates the poem: "Window pane, a misty sheen, / Tiny paws on sill unseen. / Drops descend, a rhythmic beat, / Feline eyes, a world complete."

It’s a bit of an experimental process, kind of like trial and error, but there are ways to get better at it.
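The prompt, model, output loop above maps onto a single SDK call. Here is a minimal sketch, assuming a `client` built with `genai.Client(...)`; the wrapper name `generate_text` is made up for this example, and `gemini-2.5-flash` in the comment is just one model name you might try.

```python
def generate_text(client, prompt, model):
    """Send one text prompt and return the text of the model's reply."""
    response = client.models.generate_content(model=model, contents=prompt)
    return response.text


# Typical use (needs a real client and network access):
# from google import genai
# client = genai.Client(api_key="YOUR_API_KEY")
# poem = generate_text(
#     client,
#     "Write a short poem about a cat watching rain.",
#     model="gemini-2.5-flash",  # assumption: swap in any model you can access
# )
# print(poem)
```

Because the model samples its output, running this twice with the same prompt will usually give two different poems.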

Strategies for Guiding Model Behavior

Getting the AI to behave exactly how you want often requires a bit more than just a simple question. You need strategies to guide its responses. This could involve setting a specific tone, asking it to adopt a certain persona, or providing examples of the kind of output you’re looking for.

- Be Specific: Instead of "Write about dogs," try "Write a heartwarming story about a rescue dog finding its forever home, focusing on the dog’s perspective."

- Provide Context: If you’re asking for a summary, tell the AI what the original text is about. If you want it to act as a specific character, describe that character.

- Use Examples (Few-Shot Prompting): Show the AI what you want by giving it a couple of examples. For instance, if you want it to rephrase sentences, give it one or two examples of how you want them rephrased before giving it the sentence you actually want changed.

- Set Constraints: Tell the AI what not to do. "Write a product description, but do not use any marketing jargon."

The better your prompt, the more likely you are to get a useful and relevant response.
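One way to make these strategies repeatable is to assemble prompts from named pieces instead of typing them freehand. The helper below is a sketch in plain Python with no SDK involved. The function name `build_prompt` and the `Context:` / `Input:` / `Output:` labels are arbitrary choices for illustration, not a format the model requires.

```python
def build_prompt(task, context=None, examples=(), constraints=()):
    """Assemble a prompt from a task, optional context, examples, and constraints."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for source, target in examples:  # few-shot pairs: (input, desired output)
        parts.append(f"Input: {source}\nOutput: {target}")
    for rule in constraints:
        parts.append(f"Constraint: {rule}")
    parts.append(f"Task: {task}")
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Rephrase: 'The meeting got moved to Friday.'",
    context="You rewrite casual sentences in a formal business tone.",
    examples=[("We're out of stock.", "The item is currently unavailable.")],
    constraints=["Do not use any marketing jargon."],
)
print(prompt)
```

Keeping the pieces separate makes it easy to change one thing at a time, for example adding a second example, and see how the output shifts.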

Managing Prompts with Vertex AI Studio

Vertex AI Studio is designed to make this process easier. It’s a place where you can experiment with different prompts and see how the models respond. You can save your prompts, organize them, and even test out various models to see which one works best for your specific task. It’s like having a dedicated workshop for crafting your AI instructions, helping you manage your experiments and refine your prompts over time.

Exploring Foundation Models in Vertex AI

So, you’ve got the `genai` library ready to go. Now, let’s talk about what you’re actually going to use it with. Vertex AI is where Google keeps its big, pre-trained AI models, kind of like a giant toolbox for building cool stuff. Think of these as the ‘foundation models’ – they’ve already learned a ton from massive amounts of data, so you don’t have to start from scratch.

Accessing the Gemini API

At the heart of Vertex AI’s generative capabilities are the Gemini models. These are Google’s latest and greatest, designed to handle all sorts of information – text, images, code, you name it. You can chat with them, ask them to write code, or even get them to describe what’s in a picture. It’s pretty wild. You interact with Gemini through an API, which is basically a way for your code to talk to the model. You can try out different prompts right in Vertex AI Studio to see what Gemini can do. It’s a good place to start experimenting before you integrate it into your own applications.
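When you are ready to move from the Studio to code, the same `genai` client can be pointed at Vertex AI instead of using an API key. The sketch below assumes you have a Google Cloud project and have already authenticated locally (for example with Application Default Credentials). The helper names and the default region `us-central1` are illustrative.

```python
def vertex_client_options(project, location="us-central1"):
    """Collect the settings that switch the client to Vertex AI."""
    return {"vertexai": True, "project": project, "location": location}


def make_vertex_client(project, location="us-central1"):
    """Create a client that sends requests through Vertex AI."""
    # Imported lazily so the options helper works without the SDK installed.
    from google import genai

    return genai.Client(**vertex_client_options(project, location))


# Typical use (needs a real project and credentials):
# client = make_vertex_client("your-project-id")
```

After that, calls such as `client.models.generate_content(...)` look the same as they do with an API key.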

Image Generation with the Imagen API

What if you need pictures? That’s where the Imagen API comes in. This model is all about creating images from text descriptions. You tell it what you want – say, ‘a fluffy cat wearing a tiny hat’ – and Imagen tries to make it happen. It can also do things like edit existing images or describe what’s in an image you upload. It’s a separate tool from Gemini, focused specifically on visual content.
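Here is a rough sketch of what a text-to-image call can look like through the same SDK. It assumes a `client` that has access to an Imagen model. The model name is left as a parameter because it depends on what your account offers, and the wrapper name `generate_image_bytes` is made up for this example.

```python
def generate_image_bytes(client, description, model, count=1):
    """Ask an Imagen model for images and return their raw bytes."""
    response = client.models.generate_images(
        model=model,
        prompt=description,
        # Config passed as a plain dict; the SDK also accepts typed config objects.
        config={"number_of_images": count, "output_mime_type": "image/png"},
    )
    return [item.image.image_bytes for item in response.generated_images]


# Typical use (needs a real client and an Imagen model name):
# images = generate_image_bytes(client, "a fluffy cat wearing a tiny hat", model=...)
# with open("cat.png", "wb") as f:
#     f.write(images[0])
```

Each entry in the returned list is one finished image, so asking for `count=4` gives you four variations to choose from.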

Discovering Models in Model Garden

Vertex AI’s Model Garden is like a catalog for all these foundation models. It’s not just Google’s own stuff, either. You can find models from other companies and even open-source options here. It’s a great place to browse and see what’s available. You can test models, tweak them a bit for your specific needs, and then deploy them. It’s all about giving you choices so you can pick the right tool for the job. You’ll find different models have different strengths, sizes, and costs, so it pays to look around.

Customizing Generative AI Models

So, you’ve been playing around with generative AI, maybe using Gemini or something similar, and it’s pretty cool, right? But sometimes, you need it to do something a bit more specific than just general chat or image creation. That’s where customizing comes in. Think of it like teaching a very smart, but general-purpose, assistant a new skill that’s just for your project.

Fine-Tuning Models for Specific Use Cases

Generative AI models are trained on massive amounts of data, which makes them good at many things. However, if you have a very particular task, like generating legal document summaries or writing product descriptions in a very specific brand voice, you’ll want to fine-tune the model. This means taking a pre-trained model and training it a bit more on your own, smaller, specialized dataset. It’s like giving a chef a few extra lessons on making your grandmother’s secret cookie recipe – they already know how to bake, but now they’ll bake those cookies perfectly.

This process helps the model learn the nuances, style, and specific terminology relevant to your needs. It’s not about retraining the whole thing from scratch, which would be incredibly time-consuming and expensive. Instead, it’s a more targeted approach.
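Most of the work in fine-tuning is preparing that small, specialized dataset. The sketch below turns prompt and ideal-response pairs into JSON Lines, one example per line. The user/model `contents` layout is an assumption based on the format Vertex AI documents for supervised tuning of Gemini models, so check the current docs before uploading. The sample product descriptions are invented.

```python
import json


def to_tuning_record(prompt, ideal_response):
    """Wrap one training example as a user turn followed by a model turn."""
    return {
        "contents": [
            {"role": "user", "parts": [{"text": prompt}]},
            {"role": "model", "parts": [{"text": ideal_response}]},
        ]
    }


def to_jsonl(pairs):
    """Serialize (prompt, ideal_response) pairs as one JSON object per line."""
    return "\n".join(json.dumps(to_tuning_record(p, r)) for p, r in pairs)


examples = [
    ("Describe: stainless steel water bottle", "Keeps drinks cold for 24 hours. No fuss."),
    ("Describe: canvas tote bag", "Carries your groceries. Survives the commute."),
]
jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

A real dataset would have many more examples than this, all written in the voice you want the tuned model to pick up.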

Reducing Costs and Latency with Fine-Tuning

Why bother with fine-tuning beyond just getting better results? Well, it can actually save you money and speed things up. Once a model has learned your task, you can often drop the long instructions and examples you would otherwise pack into every prompt, and a smaller tuned model can sometimes match a larger general-purpose one on that task. Shorter prompts and smaller models both mean lower costs for inference (when the model generates a response).

Those same savings usually translate into faster responses. If you’re building an application where quick replies are important, like a customer service bot, reducing that latency is a big win. It’s like having a specialist who knows exactly what you need, rather than a generalist who needs a long briefing first.

Evaluating and Deploying Tuned Models

Once you’ve fine-tuned a model, you can’t just assume it’s perfect. You need to check its work. This involves setting up tests to see how well it performs on the specific tasks you trained it for. You’ll want to look at:

- Accuracy: Is it getting the facts right for your domain?

- Relevance: Are the generated responses on topic and useful?

- Style and Tone: Does it sound like you want it to?

- Safety: Is it avoiding generating harmful or biased content?

Vertex AI provides tools to help you evaluate these aspects. After you’re happy with the performance, you can deploy your fine-tuned model. This makes it available for your applications to use, just like you would deploy any other machine learning model. It’s the final step where your specialized AI assistant is ready for work.
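Before reaching for managed evaluation tools, it can help to see how simple a first check can be. The sketch below is a hand-rolled test harness in plain Python, not Vertex AI's evaluation tooling: it checks each response for terms that must appear (a rough proxy for accuracy) and terms that must not (a rough proxy for tone or safety). The sample responses are invented.

```python
def score_response(response, required_terms, banned_terms=()):
    """Check one response for required terms and banned terms."""
    text = response.lower()
    missing = [t for t in required_terms if t.lower() not in text]
    violations = [t for t in banned_terms if t.lower() in text]
    return {
        "passed": not missing and not violations,
        "missing": missing,
        "violations": violations,
    }


def pass_rate(results):
    """Fraction of responses that passed every check."""
    return sum(r["passed"] for r in results) / len(results) if results else 0.0


cases = [
    ("Our refund window is 30 days from delivery.", ["30 days"], ["guarantee"]),
    ("We guarantee refunds any time!", ["30 days"], ["guarantee"]),
]
results = [score_response(text, req, ban) for text, req, ban in cases]
print(pass_rate(results))  # 0.5
```

Keyword checks like these miss a lot, but running the same fixed set of cases before and after tuning gives you a quick signal on whether the model moved in the right direction.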

Enabling External Data Access

Generative AI models are pretty smart, but they’re usually limited to the information they were trained on. Think of it like someone who’s only ever read books from one library – they know a lot, but not about everything happening outside.

To make these AI models truly useful for specific jobs, we need to give them access to outside information. For example, if you’re building a customer service bot for your company, it needs to know about your products and services, not just general internet knowledge.

Vertex AI gives us a few ways to do this:

- Grounding: This connects the AI’s answers to a reliable source, like your company’s internal documents or even a quick web search. It helps stop the AI from making things up, which is called hallucinating.

- Retrieval-Augmented Generation (RAG): This is a bit more advanced. It lets the AI tap into external knowledge bases – think databases or collections of documents – to pull in more accurate and detailed information for its responses.

- Function Calling: This is really neat. It allows the AI to interact with external APIs. So, if it needs real-time information, like today’s stock price or the weather, it can actually go and get it by calling another service. It can also perform actions, like booking an appointment if you set that up.
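To make the grounding and RAG ideas concrete, here is a toy version in plain Python. It is only a sketch of the pattern: a real system would use embeddings and a vector store (or a managed service) rather than counting shared words, and the two "documents" are invented.

```python
import re


def _words(text):
    """Lowercase a string and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    ranked = sorted(
        documents.items(),
        key=lambda item: len(_words(question) & _words(item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def grounded_prompt(question, documents, top_k=1):
    """Build a prompt that tells the model to answer from retrieved sources."""
    sources = retrieve(question, documents, top_k)
    cited = "\n".join(f"[{name}] {text}" for name, text in sources)
    return (
        "Answer using only the sources below and cite the source name.\n"
        f"{cited}\n\nQuestion: {question}"
    )


docs = {
    "returns.md": "Items can be returned within 30 days of delivery.",
    "shipping.md": "Standard shipping takes 3 to 5 business days.",
}
print(grounded_prompt("How long do I have to return items after delivery?", docs))
```

The resulting prompt carries the relevant passage and its source name, so the model has something specific to quote instead of guessing.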

These tools help make AI responses more accurate and relevant by connecting them to the real world. When the AI generates an answer, Vertex AI can even check if parts of the response came directly from a specific source and add that source as a citation. This builds trust and transparency in the AI’s output.
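Function calling is easier to picture with an example. The Python SDK lets you hand an ordinary function to the model as a tool, and it can call that function for you when the model asks for it. The sketch below assumes a `client` from `genai.Client(...)`. The order table and the names `get_order_status` and `ask_with_tools` are hypothetical stand-ins for a real service.

```python
ORDERS = {"A100": "shipped", "B200": "processing"}  # hypothetical in-memory data


def get_order_status(order_id: str) -> str:
    """Look up the shipping status of an order by its ID."""
    return ORDERS.get(order_id, "unknown order")


def ask_with_tools(client, question, model):
    """Let the model call get_order_status when it needs live data."""
    response = client.models.generate_content(
        model=model,
        contents=question,
        # The type hints and docstring above describe the tool to the model.
        config={"tools": [get_order_status]},
    )
    return response.text


# Typical use (needs a real client and network access):
# print(ask_with_tools(client, "Where is order A100?", model=...))
```

In a real application the function body would call your order system or another API, which is how the model gets at information it was never trained on.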

Responsible AI and Safety Measures

So, we’ve talked about how to get generative AI models to do cool stuff, but what about making sure they don’t do bad stuff? It’s a big deal, honestly. These models are powerful, and with that power comes the need for some serious guardrails. We’re talking about keeping things safe and making sure the AI acts in a way that’s helpful, not harmful.

Implementing Security Filters for Content

Think of security filters like a bouncer at a club. They’re there to check what’s coming in and what’s going out. For generative AI, this means looking at the prompts you send and the responses the model gives. The goal is to catch anything that looks dodgy, offensive, or just plain wrong before it causes a problem. Vertex AI has built-in tools for this, which is pretty handy. It helps stop harmful content from being generated in the first place.
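In the SDK, these filters show up as safety settings that you pass along with a request. The sketch below builds such a config as a plain dict. The helper name `safety_config` is made up, and applying one threshold to all four categories is a simplification you would probably adjust for a real application.

```python
HARM_CATEGORIES = [
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
]


def safety_config(threshold="BLOCK_MEDIUM_AND_ABOVE"):
    """Build a request config that applies one threshold to every category."""
    return {
        "safety_settings": [
            {"category": category, "threshold": threshold}
            for category in HARM_CATEGORIES
        ]
    }


# Typical use (needs a real client and network access):
# response = client.models.generate_content(
#     model=..., contents="...", config=safety_config("BLOCK_LOW_AND_ABOVE")
# )
print(safety_config()["safety_settings"][0])
```

A stricter threshold such as `BLOCK_LOW_AND_ABOVE` blocks more content, while `BLOCK_ONLY_HIGH` lets more through, so the right choice depends on your audience.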

Handling Dangerous Content

Sometimes, even with filters, dangerous content can slip through. This could be anything from hate speech to instructions on how to do something unsafe. It’s not just about blocking it; it’s about having a plan for when it happens. The systems need to be able to identify and flag this kind of content so it can be reviewed and dealt with. It’s an ongoing process, kind of like patching up holes in a leaky boat.
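Part of that plan can live in your own code: inspect each response and flag the ones that were blocked or cut short for safety reasons so a person can review them. The sketch below assumes the response exposes `prompt_feedback.block_reason` and a `finish_reason` on each candidate, as the Gemini API responses do. The set of flagged reasons and the helper names are choices made for this example.

```python
FLAGGED_FINISH_REASONS = {"SAFETY", "PROHIBITED_CONTENT", "BLOCKLIST"}


def _label(value):
    """Return an enum member's name, or the plain string form otherwise."""
    return getattr(value, "name", None) or str(value)


def review_reasons(response):
    """List the reasons, if any, why a response should be sent for human review."""
    reasons = []
    feedback = getattr(response, "prompt_feedback", None)
    block_reason = getattr(feedback, "block_reason", None)
    if block_reason:
        reasons.append(f"prompt blocked: {_label(block_reason)}")
    for index, candidate in enumerate(getattr(response, "candidates", None) or []):
        finish = _label(getattr(candidate, "finish_reason", None))
        if finish in FLAGGED_FINISH_REASONS:
            reasons.append(f"candidate {index} stopped: {finish}")
    return reasons
```

An empty list means nothing was flagged; anything else can be logged or queued so the leaks actually get patched rather than silently dropped.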

Ensuring Safe and Responsible AI Usage

Ultimately, it all comes down to using these AI tools responsibly. This isn’t just a technical problem; it’s a human one. We need to be mindful of how we’re using AI and what impact it might have. This involves:

- Understanding the limitations: AI isn’t perfect. It can make mistakes, and it doesn’t

Wrapping Up Your Generative AI Journey

So, that’s a quick look at getting started with generative AI using `from google import genai`. We’ve covered the basics, from what these models can do to how you can start playing around with them. It’s not some super complicated thing anymore; you can actually begin building and experimenting right away. Think of this as just the first step. There’s a whole lot more to explore, like fine-tuning models or integrating them into bigger projects, but knowing how to import the library and send your first prompt is a solid start. Keep playing with it, see what you can create, and don’t be afraid to try different things. The world of AI is opening up, and you’ve just taken your first step through the door.