Everyone’s talking about DeepSeek, the new AI model, and how it might change things. Much of the buzz is about how it’s supposed to be more energy efficient. Dig a little deeper, though, and it’s not quite that simple. Here we look at what makes DeepSeek tick and, more importantly, what its real power footprint looks like. The answer is more complicated than calling it ‘green’ or not.

Key Takeaways

- DeepSeek’s energy use is a hot topic, challenging what we thought we knew about AI efficiency.

- Its ‘chain-of-thought’ method helps with accuracy but uses more energy, which is a trade-off.

- Early tests suggest DeepSeek uses more energy than comparable AI models, largely because it generates longer answers.

- DeepSeek may save energy during training, but inference (when you use it) can still be costly.

- As more people use DeepSeek, its total environmental impact grows, so we need to think about it now.

DeepSeek’s Energy Footprint: A New Debate

Challenging Assumptions About AI Efficiency

For a while, there’s been talk about how efficient DeepSeek is, especially during training. Things get more complicated when we look at inference, the phase in which DeepSeek actually answers questions. Because DeepSeek relies on "chain-of-thought" reasoning, it may use more energy at inference than we thought. A chain-of-thought reasoning model breaks a complex question into smaller parts and answers each one logically. This approach helps DeepSeek give better answers, but it also raises questions about its overall energy use.

The Nuance of DeepSeek Energy Usage

It’s not as simple as saying DeepSeek is either energy-efficient or not. The truth is more complex. While DeepSeek might be efficient during training, the inference phase tells a different story. The chain-of-thought method, while improving accuracy, demands more computational power. This means more energy is used each time DeepSeek answers a question. We need to consider the trade-offs between accuracy and energy consumption. It’s also important to remember that we’re still in the early stages of understanding DeepSeek’s energy profile. Initial tests have caveats, and direct comparisons with other models are difficult because of access limitations and different architectures.

Forcing a Debate on AI’s Power Consumption

DeepSeek is pushing us to have a serious conversation about how much energy AI models should be allowed to use. The excitement around DeepSeek could lead to it being used everywhere, even when it’s not really needed, which could cause a big increase in energy use. We need to ask whether the benefits of using DeepSeek are worth the energy cost, balancing better answers against responsible energy use. Initial tests show that DeepSeek uses more energy than some other models to answer complex questions. For example, when asked whether it’s okay to lie to protect someone’s feelings, DeepSeek used about 41% more energy than a Meta model with a similar number of parameters. This highlights the need for more research and careful consideration of the energy implications of chain-of-thought models.

Understanding DeepSeek’s Chain-of-Thought

How Chain-of-Thought Increases DeepSeek Energy Usage

DeepSeek, like other advanced reasoning models, uses a "chain-of-thought" (CoT) approach to tackle complex tasks. Instead of spitting out an answer directly, it breaks the problem down into smaller, more manageable steps. Think of it like showing your work in math class: the AI writes out its reasoning process. This step-by-step approach allows for more accurate and nuanced answers, but it also increases energy consumption. Every reasoning step is generated as extra text, and each extra token (roughly a word fragment) requires another pass through the model, so the computation adds up quickly. It’s like comparing a quick calculation in your head to writing out a detailed proof: the proof takes longer and requires more effort.
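A rough way to see why chain-of-thought costs more: a language model generates text one token at a time, and each token needs another pass through the network, so energy grows with the amount of text produced. The Python sketch below assumes a hypothetical, constant energy per generated token (`JOULES_PER_TOKEN`) and hypothetical answer and reasoning lengths; none of these are measured DeepSeek figures, and the model ignores prompt processing and idle overhead.

```python
# Toy model: inference energy grows with the number of generated tokens.
# All constants are hypothetical placeholders, not measured DeepSeek values.

JOULES_PER_TOKEN = 4.0     # hypothetical average energy per generated token
ANSWER_TOKENS = 200        # hypothetical length of the final answer
REASONING_TOKENS = 1_200   # hypothetical length of the chain-of-thought trace


def answer_energy(output_tokens: int, joules_per_token: float = JOULES_PER_TOKEN) -> float:
    """Estimate the energy (J) to generate one answer.

    Ignores prompt processing, batching, and idle power; it only counts
    the tokens the model writes out.
    """
    return output_tokens * joules_per_token


direct = answer_energy(ANSWER_TOKENS)
chain_of_thought = answer_energy(ANSWER_TOKENS + REASONING_TOKENS)

print(f"direct answer:    {direct:,.0f} J")
print(f"chain-of-thought: {chain_of_thought:,.0f} J "
      f"({chain_of_thought / direct:.1f}x the direct answer)")
```

Under these assumptions the reasoning trace, not the final answer, dominates the energy bill, which is why longer chains of thought translate so directly into higher consumption.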

The Trade-Off: Accuracy Versus Energy Intensity

There’s a clear trade-off between accuracy and energy intensity in DeepSeek’s CoT reasoning. More reasoning steps often, though not always, lead to a more accurate answer, but each step consumes energy. So the challenge is finding the right balance: is the gain in accuracy worth the extra energy? Researchers and developers are actively working on that question. It’s not a simple calculation, because the value of accuracy varies with the task. In medical diagnosis, a small increase in accuracy could save lives, while in a less critical application the extra energy cost might not be justified. DeepSeek is described as using dynamic computation to allocate resources based on task complexity, which may help mitigate this issue.
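One way to frame the accuracy-versus-energy question is energy per correct answer: total energy divided by the number of answers that turn out right, which works out to energy per query divided by accuracy. The sketch below uses hypothetical energies and accuracies for a single hard task; it illustrates the metric and is not a benchmark of DeepSeek.

```python
def energy_per_correct_answer(joules_per_query: float, accuracy: float) -> float:
    """Average energy (J) spent per correct answer.

    Over N queries, total energy is N * joules_per_query and about
    N * accuracy answers are correct, so the ratio is joules_per_query / accuracy.
    """
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in the interval (0, 1]")
    return joules_per_query / accuracy


# Hypothetical figures for one hard task (not measured values).
direct = energy_per_correct_answer(joules_per_query=800, accuracy=0.5)
chain_of_thought = energy_per_correct_answer(joules_per_query=5_600, accuracy=0.8)

print(f"direct:           {direct:,.0f} J per correct answer")
print(f"chain-of-thought: {chain_of_thought:,.0f} J per correct answer")
```

In this made-up case chain-of-thought raises accuracy from 50% to 80% yet still costs roughly 4.4 times as much energy per correct answer. Whether that is worth it depends on what a correct answer is worth: probably yes for a medical question, probably not for picking an outfit.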

Real-World Implications of Chain-of-Thought

The chain-of-thought approach has significant real-world implications. Consider these points:

- Improved Problem-Solving: CoT lets DeepSeek tackle problems that are too difficult for simpler AI models. This opens up new possibilities in fields like scientific research, engineering, and finance.

- Increased Transparency: By showing its reasoning process, DeepSeek makes it easier for humans to understand and trust its answers. This is especially important in high-stakes situations where accountability is crucial.

- Higher Infrastructure Costs: The extra energy consumed by CoT can raise infrastructure costs, especially when DeepSeek is deployed at scale. This can be a barrier to entry for smaller organizations and could limit wider adoption of the technology.

Here’s a simple table illustrating the trade-off:

| Feature | Without Chain-of-Thought | With Chain-of-Thought | Implication |
|---|---|---|---|
| Accuracy | Lower | Higher | Better problem-solving capabilities |
| Energy Usage | Lower | Higher | Increased operational costs |
| Response Time | Faster | Slower | Potential delays in real-time applications |
| Explainability | Lower | Higher | Improved trust and accountability |

Ultimately, the success of DeepSeek’s CoT approach will depend on finding ways to optimize energy efficiency without sacrificing accuracy. This is an ongoing area of research, and future innovations could significantly reduce the energy footprint of AI reasoning.

Measuring DeepSeek Energy Usage in Practice

Initial Test Results and Caveats

Okay, so how do we actually figure out how much juice DeepSeek is using? It’s not like you can just plug it into a wall and read the meter. Some initial tests have been done, but it’s important to remember that these are just early results and come with a bunch of asterisks. One big caveat is that most tests are done on smaller versions of DeepSeek, using a limited set of prompts. This means the numbers we’re seeing might not be totally representative of how the full-scale model behaves in real-world scenarios. Scott Chamberlin, who has experience building tools to measure the environmental costs of digital activities, ran some of these tests.

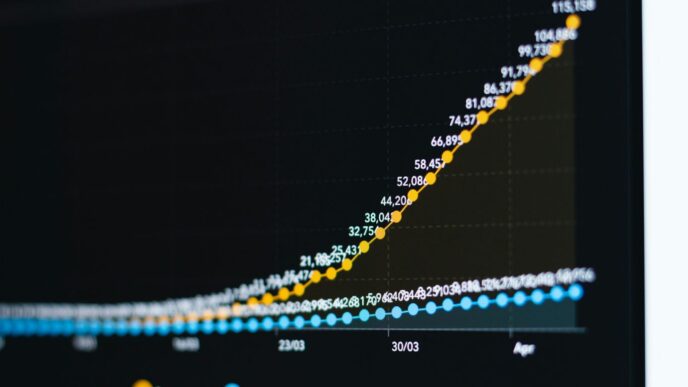
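Measurement tools generally work by sampling a device’s power draw while the model runs and integrating it over time, since energy (joules) is power (watts) multiplied by time (seconds). On NVIDIA GPUs, for instance, the NVML library reports current board power. The sketch below skips the hardware and integrates a hypothetical list of once-per-second readings with the trapezoidal rule, then subtracts an assumed idle baseline so that only the energy attributable to the prompt is counted.

```python
from typing import Sequence


def energy_joules(timestamps_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Integrate sampled power (W) over time (s) with the trapezoidal rule, giving J."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("need one power reading per timestamp")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt < 0:
            raise ValueError("timestamps must be non-decreasing")
        # Area of the trapezoid between two consecutive readings.
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def attributable_energy(timestamps_s: Sequence[float], power_w: Sequence[float],
                        idle_power_w: float) -> float:
    """Energy above the idle baseline, i.e. the part caused by the workload."""
    duration = timestamps_s[-1] - timestamps_s[0]
    return energy_joules(timestamps_s, power_w) - idle_power_w * duration


# Hypothetical once-per-second GPU power readings (W) while one answer is generated.
TIMESTAMPS_S = [0, 1, 2, 3, 4]
POWER_W = [60, 300, 320, 310, 60]
IDLE_W = 60   # assumed idle draw of the same GPU

total_j = energy_joules(TIMESTAMPS_S, POWER_W)
net_j = attributable_energy(TIMESTAMPS_S, POWER_W, IDLE_W)
print(f"total {total_j:,.0f} J, attributable to the prompt {net_j:,.0f} J")
```

Subtracting the idle baseline matters: without it, a slow test on a mostly idle machine would look more expensive than it really is.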

Comparing DeepSeek to Other AI Models

To get a sense of DeepSeek’s energy consumption, it helps to compare it with other models. One comparison was made against a Meta model with a similar number of parameters (70 billion). In one test, DeepSeek generated a 1,000-word response that consumed about 17,800 joules, roughly the energy it takes to stream a 10-minute YouTube video. That’s a fair amount of energy! DeepSeek’s energy efficiency was similar to the Meta model’s, but DeepSeek tended to generate much longer responses, so its overall energy use was higher.
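These figures are easier to compare once converted into familiar units. The short calculation below uses only the reported 17,800 J figure and the 41% gap mentioned earlier; the 10 W LED bulb is an assumed reference point, not part of the test.

```python
JOULES_PER_WATT_HOUR = 3_600   # 1 Wh = 1 W sustained for 3,600 s

deepseek_joules = 17_800       # reported energy for DeepSeek's 1,000-word answer
extra_vs_meta = 0.41           # DeepSeek used about 41% more energy than the Meta model
led_bulb_watts = 10            # assumed power of a typical LED bulb

deepseek_wh = deepseek_joules / JOULES_PER_WATT_HOUR
# "41% more" means deepseek = meta * 1.41, so divide to recover the Meta figure.
meta_joules = deepseek_joules / (1 + extra_vs_meta)
bulb_minutes = deepseek_wh / led_bulb_watts * 60

print(f"DeepSeek answer: {deepseek_wh:.2f} Wh")
print(f"Implied Meta answer: about {meta_joules:,.0f} J")
print(f"Same energy runs a {led_bulb_watts} W LED bulb for about {bulb_minutes:.0f} minutes")
```

The implied Meta figure of roughly 12,600 J matches the comparison table later in this section, and the DeepSeek answer works out to about 5 Wh.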

The Energy Cost of Longer Responses

The length of DeepSeek’s responses has a big impact on its energy consumption. Because DeepSeek uses a chain-of-thought approach, it breaks questions down into smaller parts and answers each one individually. This can produce more detailed and comprehensive answers, but it also means more processing and therefore more energy: a longer, more thoughtful answer comes at an energy cost. The table below compares the two models’ energy use in the test described above:

| Model | Response Length | Energy Usage (joules) |
|---|---|---|
| DeepSeek | 1,000 words | ~17,800 |
| Meta model (70B) | Shorter | ~12,600 |

It’s important to note that these are just a few data points, and more research is needed to fully understand the relationship between response length and energy usage.
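Because total energy is roughly the amount of text generated times the energy per unit of text, a model with similar per-unit efficiency but longer answers uses proportionally more energy. The sketch below assumes energy scales linearly with word count, which is a simplification (real measurements count tokens and include fixed overheads), and derives a crude per-word figure from the single reported data point. It also shows what the 87% overall figure reported in some tests would imply if per-word efficiency were equal.

```python
DEEPSEEK_JOULES = 17_800   # reported energy for one 1,000-word DeepSeek answer
DEEPSEEK_WORDS = 1_000

# Crude average; assumes energy scales linearly with length.
JOULES_PER_WORD = DEEPSEEK_JOULES / DEEPSEEK_WORDS   # 17.8 J per word


def energy_for_length(words: int, joules_per_word: float = JOULES_PER_WORD) -> float:
    """Estimated energy (J) for an answer of `words` words at a fixed efficiency."""
    return words * joules_per_word


def implied_length_ratio(energy_ratio: float, efficiency_ratio: float = 1.0) -> float:
    """How much longer model A's answers must be to explain an energy gap.

    energy_ratio: model A's total energy divided by model B's.
    efficiency_ratio: A's energy per word divided by B's (1.0 = equally efficient).
    """
    return energy_ratio / efficiency_ratio


print(f"{energy_for_length(500):,.0f} J for a 500-word answer")
# With equal efficiency, "87% more energy" implies answers about 1.87x as long.
print(f"implied length ratio: {implied_length_ratio(1.87):.2f}x")
```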

The Life Cycle of AI Energy Consumption

Training Versus Inference: DeepSeek’s Phases

The life of an AI model, like DeepSeek, has two main stages: training and inference. Training is where the model learns from tons of data, often taking months. Inference is when the trained model is used to answer questions or generate content. Think of it like this: training is like going to school, and inference is like using what you learned on the job. The energy needs of each phase are different, and understanding them is key to figuring out DeepSeek’s overall power use.

Offsetting Training Savings with Inference Costs

DeepSeek might save energy during training because of its architecture, but that doesn’t mean it’s all good news. Some experts worry that the energy saved in training could be canceled out by the energy DeepSeek uses when answering questions, especially with its chain-of-thought approach. This method, which breaks questions down into smaller parts, helps DeepSeek perform better in areas like math and coding, but it also uses more electricity. It’s like saving money on gas with a fuel-efficient car, then driving it everywhere, even when you could walk. That’s why AI’s carbon footprint has to be judged across both training and inference, not training alone.
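Whether inference costs cancel a training saving is a break-even question: divide the energy saved in training by the extra energy each query uses. The inputs below (a 1 GWh training saving, 5,000 J of extra energy per query, 10 million queries a day) are hypothetical and chosen only to show the shape of the calculation.

```python
JOULES_PER_GWH = 3.6e12   # 1 GWh = 1e9 Wh, and 1 Wh = 3,600 J


def break_even_queries(training_savings_j: float, extra_j_per_query: float) -> float:
    """Queries after which extra inference energy cancels a training saving."""
    if extra_j_per_query <= 0:
        # Inference is no more expensive, so the saving is never cancelled.
        return float("inf")
    return training_savings_j / extra_j_per_query


# Hypothetical inputs, not DeepSeek measurements.
savings_j = 1 * JOULES_PER_GWH
extra_per_query_j = 5_000
queries_per_day = 10_000_000

n = break_even_queries(savings_j, extra_per_query_j)
print(f"break-even after {n:,.0f} queries, about {n / queries_per_day:.0f} days")
```

With heavy enough use, even a large one-off training saving is used up within months; after that, every additional query adds to the net energy bill.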

The Broader Impact of DeepSeek’s Architecture

If DeepSeek’s approach becomes popular, it could change how all AI models are built. Other companies might start making their own versions of these low-cost reasoning models. That could drive a big increase in energy use, especially if these models are used for tasks that don’t really need them. Imagine asking DeepSeek to explain something simple, like what to wear today: it’s like using a supercomputer for a job a basic calculator could handle. If every model becomes more compute-intensive, the extra computation can cancel out any efficiency gains. Model compression and optimization are key to reducing AI compute costs.
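The point about efficiency gains being canceled out is simple arithmetic: energy per answer is energy per token times tokens per answer. The sketch assumes a hypothetical model that is 30% cheaper per token but writes three times as many tokens per answer.

```python
def relative_energy_per_answer(per_token_saving: float, token_multiplier: float) -> float:
    """New energy per answer divided by old energy per answer.

    per_token_saving: fraction by which energy per token falls (0.3 = 30% cheaper).
    token_multiplier: how many times more tokens each answer contains.
    """
    return (1 - per_token_saving) * token_multiplier


def break_even_multiplier(per_token_saving: float) -> float:
    """Token multiplier at which energy per answer is back where it started."""
    return 1 / (1 - per_token_saving)


print(f"{relative_energy_per_answer(0.30, 3.0):.1f}x the energy per answer")
print(f"gain is wiped out beyond {break_even_multiplier(0.30):.2f}x as many tokens")
```

In other words, a 30% per-token saving disappears once answers grow by more than about 43%.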

DeepSeek’s Architectural Innovations and Energy

Dynamic Computation and Resource Allocation

DeepSeek takes a smart approach to using computing power. Instead of firing on all cylinders for every task, it figures out how complex the job is and then uses just the right amount of resources. This means simple questions don’t waste energy on fancy calculations, while tough problems get the attention they need. It’s like having a car that automatically switches to eco-mode on the highway and then roars to life when you need to pass someone. This is a big deal because it directly cuts down on wasted energy.
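The idea of matching effort to difficulty can be sketched as a compute budget: estimate how hard a request looks, then cap how many reasoning tokens the model may spend on it. The scoring rule, keywords, and token budgets below are entirely hypothetical, and this is not how DeepSeek itself decides; it only illustrates why a question like ‘what should I wear today?’ need not trigger a long chain of thought.

```python
# Hypothetical difficulty heuristic and budgets, for illustration only.
HARD_KEYWORDS = ("prove", "derive", "debug", "optimize", "step by step")


def estimate_complexity(prompt: str) -> float:
    """Toy difficulty score in [0, 1]: long prompts and math/code keywords score higher."""
    length_score = min(len(prompt.split()) / 200, 0.5)
    keyword_score = 0.5 if any(k in prompt.lower() for k in HARD_KEYWORDS) else 0.0
    return min(length_score + keyword_score, 1.0)


def reasoning_budget(prompt: str) -> int:
    """Maximum number of chain-of-thought tokens to allow for this prompt."""
    score = estimate_complexity(prompt)
    if score < 0.2:
        return 0        # answer directly, no visible reasoning
    if score < 0.5:
        return 512      # short reasoning trace
    return 4_096        # full reasoning for hard problems


for prompt in ("What should I wear today?",
               "Prove that the square root of 2 is irrational."):
    print(f"{reasoning_budget(prompt):>5} tokens  <- {prompt}")
```

A production system would learn this decision rather than hard-code keywords, but the energy logic is the same: the cheapest reasoning token is the one never generated.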

Modularity and Energy Efficiency

Think of traditional AI models as one big, complicated machine in which everything is connected. DeepSeek is different: it’s built with separate modules that can be plugged in or out depending on what you’re trying to do. Need to analyze legal documents? Plug in the legal module. Working on financial forecasts? Use the finance module. This "plug-and-play" design means the system only uses the parts it needs, which saves a ton of energy. It’s like building with LEGOs: you only use the bricks necessary for your creation. This approach makes the whole system more efficient and easier to update.
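In DeepSeek’s published V3 and R1 models, the ‘use only the parts you need’ idea takes the form of a mixture-of-experts (MoE) design: a small router picks a few expert sub-networks for each token and the rest stay idle (the DeepSeek-V3 technical report describes 671 billion total parameters with about 37 billion activated per token). The experts are learned during training rather than hand-built ‘legal’ or ‘finance’ modules, so the LEGO analogy is loose. The sketch below shows generic top-k routing in plain Python with toy scalar ‘experts’ and made-up router scores; DeepSeek’s actual router differs in its details.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and normalize their weights to sum to 1."""
    if not 1 <= k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: float, experts: Sequence[Expert],
                router_logits: Sequence[float], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


calls: List[int] = []


def make_expert(i: int) -> Expert:
    def expert(x: float) -> float:
        calls.append(i)          # record which experts actually did work
        return x * (i + 1)       # toy computation standing in for a sub-network
    return expert


experts = [make_expert(i) for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]   # made-up router scores

y = moe_forward(1.0, experts, logits, k=2)
print(f"output {y:.3f}; experts run: {sorted(calls)} of {len(experts)}")
```

With 8 experts and k = 2, only two of the eight experts execute for this token; the energy saving comes from the six that are never called.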

Energy-Aware Training for Sustainability

DeepSeek isn’t just about saving energy during use; it also focuses on how the model is trained. The goal is to train the model in a way that uses less energy and prioritizes renewable sources. This involves optimizing hardware usage and scheduling training sessions when renewable energy is most available. It’s like planning your laundry around sunny days to use solar power. By paying attention to energy use during training, DeepSeek aims to reduce its overall environmental impact and promote a more sustainable approach to AI development. This is a crucial step toward making AI a responsible technology.
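Scheduling training for when renewable energy is most available is usually done with a grid carbon-intensity forecast: find the stretch of hours with the lowest average grams of CO2 per kilowatt-hour and run the job then. The sketch below does this with a sliding window over a hypothetical 12-hour forecast and assumes the job draws constant power; real schedulers would pull forecasts from a grid data provider and handle jobs that can be paused and resumed.

```python
from typing import Sequence, Tuple


def cleanest_window(intensity_g_per_kwh: Sequence[float], hours: int) -> Tuple[int, float]:
    """Return (start_hour, mean_intensity) of the lowest-carbon contiguous window."""
    n = len(intensity_g_per_kwh)
    if not 1 <= hours <= n:
        raise ValueError("hours must be between 1 and the forecast length")
    window_sum = sum(intensity_g_per_kwh[:hours])
    best_start, best_sum = 0, window_sum
    for start in range(1, n - hours + 1):
        # Slide the window one hour: add the new hour, drop the oldest one.
        window_sum += intensity_g_per_kwh[start + hours - 1] - intensity_g_per_kwh[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / hours


# Hypothetical hourly forecast (gCO2/kWh) with a midday solar dip.
forecast = [450, 440, 430, 400, 320, 250, 210, 200, 230, 300, 380, 420]
job_hours = 3
job_energy_kwh = 1_000   # hypothetical energy the job needs, drawn evenly per hour

start, mean = cleanest_window(forecast, job_hours)
naive_mean = sum(forecast[:job_hours]) / job_hours   # start immediately instead
saved_kg = (naive_mean - mean) * job_energy_kwh / 1_000   # g -> kg

print(f"start at hour {start}: {mean:.0f} g/kWh vs {naive_mean:.0f} g/kWh now")
print(f"about {saved_kg:,.0f} kg CO2 avoided")
```

Note that shifting the job saves emissions, not energy: the kilowatt-hours consumed are the same, only their source changes.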

The Future of DeepSeek Energy Usage

The Risk of Widespread Adoption

Okay, so DeepSeek is getting a lot of buzz for being efficient, especially during training. But what happens if everyone starts using it all the time? That’s the big question. If DeepSeek becomes the go-to AI for everything, its overall energy consumption could skyrocket, even if it’s individually more efficient than other models. It’s like everyone switching to a slightly more fuel-efficient car – great, but if twice as many people are driving, we’re still burning more gas. We need to think about the total impact, not just the per-use numbers. Sasha Luccioni, an AI researcher, is worried that the excitement around DeepSeek could lead to a rush to insert this approach into everything, even where it’s not needed.
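The car analogy is the familiar rebound effect: total energy is energy per use times the number of uses, so per-use efficiency gains can be swamped by growth in use. The projection below compounds hypothetical monthly rates for usage growth and efficiency improvement; the rates are assumptions, not forecasts for DeepSeek.

```python
def total_energy_multiplier(months: int, usage_growth: float, efficiency_gain: float) -> float:
    """Total energy after `months`, relative to today.

    usage_growth: fractional growth in number of uses per month (0.2 = +20%).
    efficiency_gain: fractional drop in energy per use per month (0.05 = -5%).
    """
    monthly_factor = (1 + usage_growth) * (1 - efficiency_gain)
    return monthly_factor ** months


# The analogy above: 10% more efficient, but twice as many drivers.
print(f"one step: {total_energy_multiplier(1, usage_growth=1.0, efficiency_gain=0.10):.2f}x")

# Hypothetical rates: usage +20% a month, energy per use -5% a month.
print(f"after a year: {total_energy_multiplier(12, 0.20, 0.05):.1f}x")
```

Under these assumed rates, total energy grows almost fivefold in a year even though each individual use gets steadily cheaper.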

Balancing Utility and Environmental Impact

It’s a balancing act, right? We want the cool AI tools, but we also don’t want to fry the planet. How do we get there? Here are some ideas:

- Incentivize energy-efficient AI design: Maybe tax breaks for companies that prioritize low-power models?

- Promote awareness: Make sure people know about the energy costs of AI so they can make informed choices. For example, users should know that a reasoning model like DeepSeek-R1 can use more energy per answer than a model that replies directly.

- Develop better measurement tools: We need accurate ways to track the energy use of AI models in real-world settings.

The Need for Further Scientific Study

Honestly, we’re still in the dark about a lot of this. We need serious, peer-reviewed research on the energy consumption of AI models, including DeepSeek. Initial test results are a start, but they often come with caveats. We need to know:

- How does DeepSeek’s energy use compare to other models in different tasks?

- What are the biggest energy hogs in the DeepSeek architecture?

- Can we optimize DeepSeek for even lower power consumption without sacrificing performance?

Until we have solid data, we’re just guessing, and guessing isn’t good enough when the future of the planet is at stake. DeepSeek is forcing a debate about how much energy AI models should be allowed to use in pursuit of better answers.

Wrapping Things Up

So, what’s the big takeaway? DeepSeek definitely got everyone talking, and that’s a good thing: it made us all look more closely at how much power these AI models actually use. DeepSeek may be strong at some tasks thanks to its ‘thinking’ process, but that same process can use more energy each time it gives you an answer. It’s not as simple as calling it "energy efficient" across the board. We’re still figuring out the full picture, but one thing is clear: as AI gets more advanced, we need to keep an eye on its energy footprint. It’s a balancing act, trying to get smarter AI without using too much power, and it’s something we’ll all need to think about as these technologies keep growing.

Frequently Asked Questions

What exactly is DeepSeek?

DeepSeek is a new kind of AI model that’s really good at solving tough problems, like math or coding, by breaking them down into smaller steps. This step-by-step thinking is called ‘chain-of-thought.’

Is DeepSeek energy efficient?

DeepSeek was designed to be energy-efficient during training, but when it actually answers your questions (inference), it can use more electricity than comparable models. This is because its ‘chain-of-thought’ method, which helps it give better answers, requires more computation.

Why does DeepSeek use more energy for its answers?

DeepSeek’s ‘chain-of-thought’ approach helps it think through problems more like a human, leading to more accurate and logical answers. However, this detailed thinking process needs more computer power, which in turn uses more electricity.

How much more energy does DeepSeek use compared to other AI models?

Early tests show that DeepSeek can use significantly more energy to answer questions compared to other AI models of similar size. For example, one DeepSeek answer used about 41% more energy than a Meta model for the same question, and its longer responses meant it used 87% more energy overall in some tests.

What are the worries about DeepSeek’s energy use in the future?

The main concern is that if many companies start using DeepSeek’s ‘chain-of-thought’ method for all sorts of apps, even when it’s not really needed, the total energy use from AI could go up a lot. It’s about finding the right balance between getting great answers and being kind to the planet.

What’s the difference between training and inference in AI energy use?

AI models go through two main energy phases: ‘training,’ where they learn, and ‘inference,’ where they answer questions. DeepSeek might save energy during training, but its detailed answering process can use more energy during inference, potentially canceling out those initial savings.