So, Google dropped a new white paper about AI agents, and honestly, it’s a pretty big deal. It covers what these agents really are, how they work, and why security is a major headache we need to figure out. Think of AI agents as more than just chatbots; they’re like digital assistants that can actually do things in the real world. The white paper breaks down the tech, the risks, and how Google is thinking about keeping things safe. It’s a lot to unpack, but it’s important stuff if you’re into AI.

Key Takeaways

- AI agents are AI systems that can use tools, make plans, and take actions, much like how people solve problems.

- For an agent to work, it needs a smart model for decisions, tools to interact with the world, and a system to manage its actions.

- Tools like APIs and databases give agents the ability to do more than just answer questions; they can actually perform tasks.

- Building these agents is becoming simpler thanks to platforms that help create and deploy them, even for complicated jobs.

- There are still big challenges ahead, like making agents user-friendly, compatible with other systems, and private, before they’re widely adopted.

Understanding Google AI Agents: Core Concepts

So, what exactly are these Google AI agents we keep hearing about? It’s not just about a chatbot spitting out answers anymore. Think of them as more capable digital assistants. They’re designed to do more than just process information; they can actually take steps to achieve goals. It’s a big leap from what we’ve had before.

Defining the Agent Spectrum

Not every bit of AI out there is an agent. A simple program that follows a set of rules isn’t quite there. Real AI agents have a few key traits that set them apart. They need to be able to understand what’s going on around them, make their own choices, and work towards a specific objective.

- Environmental Awareness: They can take in information about their surroundings and notice when things change. This means they can use current data to figure things out.

- Autonomous Decision-Making: Agents can look at a situation and decide what to do next, even picking between different options. They learn from what happens and adjust their approach.

- Goal-Oriented Behavior: They know what they’re supposed to accomplish and can figure out the best way to get there. They can also tell if they’ve succeeded.

Key Characteristics of True AI Agents

To really be called an AI agent, a system needs to show a certain level of intelligence and capability. It’s about more than just having a powerful language model. These agents are built with specific components that allow them to interact with the world and act on information.

- Models: The brain of the operation, responsible for understanding and making decisions.

- Tools: These are how agents interact with the outside world, like accessing databases or using APIs.

- Orchestration: The system that plans out the steps and manages how the model and tools work together.

The Autonomy Debate in AI Agents

One of the big questions with AI agents is how much freedom they should have. Letting them run completely on their own could make them super efficient, but it also brings up concerns. Most of the time, the best approach seems to be finding a middle ground. Agents can work independently within set boundaries, but humans are still in the loop for the really important decisions. This balance is key to making agents both useful and safe.

The Architecture of Google AI Agents

So, what actually makes a Google AI agent tick? It’s not just one magic piece of software; it’s more like a well-oiled machine with several key parts working together. Think of it like a chef in a kitchen. They need their brain to decide what to cook, their hands and tools to actually prepare the food, and a system to manage the whole process from start to finish.

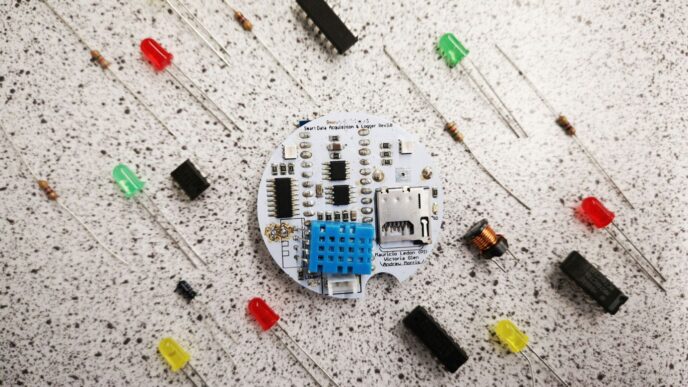

Essential Components for Agent Functionality

At its core, an AI agent needs a few things to get going. First, there’s the brain – that’s the large language model (LLM) that does the thinking and decision-making. Then, you’ve got the tools. These are like the agent’s hands and utensils, allowing it to interact with the outside world, whether that’s pulling data from a database or using an API. Finally, there’s the orchestration layer, which is the conductor of the orchestra, managing how the model and tools work together to achieve a goal.

The Role of Models, Tools, and Orchestration

Let’s break these down a bit. The model is where the intelligence resides, making sense of requests and figuring out the best way to respond. Tools are what give the agent its agency – they’re the specific functions or capabilities the agent can call upon. This could be anything from checking the weather to booking a flight. Orchestration is the glue that holds it all together, planning the sequence of actions, managing the flow of information, and ensuring the agent stays on track.
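To make the three pieces concrete, here is a minimal sketch of how they could fit together. It is not taken from the white paper: `toy_model`, `get_weather`, and `orchestrate` are hypothetical names, and the "model" is a hard-coded stand-in for an LLM so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# A tool is just a named function the agent is allowed to call.
Tool = Callable[[str], str]


def get_weather(city: str) -> str:
    """Hypothetical tool: a real one would call a weather API."""
    return f"Sunny in {city}"


@dataclass
class Decision:
    tool: Optional[str]  # name of the tool to call, or None when finished
    argument: str        # tool input, or the final answer when tool is None


def toy_model(request: str, observations: List[str]) -> Decision:
    """Stand-in for an LLM: call a tool first, then answer from the result."""
    if not observations:
        return Decision(tool="get_weather", argument=request)
    return Decision(tool=None, argument=f"Forecast: {observations[-1]}")


def orchestrate(
    request: str,
    model: Callable[[str, List[str]], Decision],
    tools: Dict[str, Tool],
    max_steps: int = 5,
) -> str:
    """Orchestration: alternate between model decisions and tool calls."""
    observations: List[str] = []
    for _ in range(max_steps):
        decision = model(request, observations)
        if decision.tool is None:
            return decision.argument
        observations.append(tools[decision.tool](decision.argument))
    return "Stopped: step limit reached"


print(orchestrate("Paris", toy_model, {"get_weather": get_weather}))
```

The step limit in `orchestrate` is one simple way to keep the loop from running forever if the model never decides it is done.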

Integrating Agents with Existing Systems

Building these agents isn’t just about creating something new in a vacuum. A big part of the white paper talks about how these agents need to play nice with the systems we already have. This means connecting them to databases, software, and other services that businesses use every day. It’s about making the agent a useful part of the existing workflow, not just a standalone novelty. This integration is key to making agents truly practical and not just a cool experiment.

Empowering Agents with Tools and Data

So, what makes a Google AI agent go from just a smart program to something that can actually do things? It’s all about giving it the right tools and access to information. Think of it like giving a chef a fully stocked pantry and a set of specialized knives instead of just a recipe. They can then create something truly amazing.

Superpowers Through APIs and Databases

AI agents aren’t just limited to the data they were trained on. By connecting to Application Programming Interfaces (APIs) and databases, they gain access to a whole universe of real-time information. This means an agent can look up your current flight status, check stock prices, or even pull up specific customer purchase history. It’s like giving the agent a direct line to the internet and all sorts of specialized information hubs. This ability to query external data sources is what allows agents to move beyond generic answers and provide highly specific, context-aware responses. For example, an agent could use a database tool to find out what a customer last ordered, then use that information to suggest a relevant new product. It’s a pretty big leap from just spitting out facts.
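The customer-order example above could look roughly like this. It is only a sketch: the `orders` table, the `RELATED` lookup, and the function names are made up for illustration, and an in-memory SQLite database stands in for whatever system a real business would use.

```python
import sqlite3
from typing import Optional


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a few made-up orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (customer_id INTEGER, product TEXT, ordered_at TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            (1, "espresso machine", "2024-01-10"),
            (1, "coffee grinder", "2024-03-02"),
            (2, "tea kettle", "2024-02-15"),
        ],
    )
    return conn


def last_order(conn: sqlite3.Connection, customer_id: int) -> Optional[str]:
    """Database tool: return the customer's most recent product, if any."""
    row = conn.execute(
        "SELECT product FROM orders WHERE customer_id = ? "
        "ORDER BY ordered_at DESC LIMIT 1",
        (customer_id,),
    ).fetchone()
    return row[0] if row else None


# Hypothetical mapping from a purchased product to a follow-up suggestion.
RELATED = {"coffee grinder": "whole-bean coffee", "tea kettle": "loose-leaf tea"}


def suggest_product(conn: sqlite3.Connection, customer_id: int) -> str:
    """Use the tool result as context for a recommendation."""
    product = last_order(conn, customer_id)
    if product is None:
        return "No order history; show bestsellers"
    return RELATED.get(product, f"No suggestion for {product}")


conn = build_demo_db()
print(suggest_product(conn, 1))
```

The query uses a `?` placeholder rather than string formatting, which matters once the customer ID comes from a model or a user instead of trusted code.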

Leveraging Real-Time Information

Imagine an agent that can tell you the weather right now, not just what it was yesterday. That’s the power of real-time data. By tapping into live feeds and constantly updating information sources, agents can react to current events and provide the most up-to-date insights. This is super useful for things like financial analysis, where market conditions change by the second, or for logistics, where tracking shipments requires constant updates. It means the agent isn’t stuck in the past; it’s aware of what’s happening in the world as it unfolds. This makes their actions and recommendations far more relevant and timely.

Beyond Answering Questions: Taking Action

This is where things get really interesting. When agents can access tools and real-time data, they can start taking actions. Instead of just telling you that your order is delayed, an agent could potentially initiate a customer service follow-up or even re-route a shipment. They can interact with other software systems to complete tasks. This capability is built on a few key ideas:

- Planning: The agent figures out the steps needed to achieve a goal.

- Tool Use: It selects and uses the right API or database to get information or perform an action.

- Execution: It carries out the planned steps, often in sequence.

- Feedback Loop: It checks the results of its actions and adjusts if necessary.

This ability to not just process information but to act on it is what truly defines a powerful AI agent. It’s the difference between a calculator and a personal assistant who can actually book your appointments.
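Here is one way the plan, tool use, execution, and feedback steps could be sketched for the delayed-order scenario. Everything in it is an assumption made for illustration: the plan is hard-coded rather than produced by a model, and the `reroute` tool is deliberately built to fail once so the feedback loop has something to react to.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def plan(order_id: str) -> List[str]:
    """Planning: a fixed, hypothetical plan. A real agent would ask its model."""
    return ["check_status", "reroute", "notify_customer"]


def make_flaky_reroute() -> Tool:
    """Hypothetical tool that fails on its first call and succeeds afterwards."""
    calls = {"count": 0}

    def reroute(order_id: str) -> str:
        calls["count"] += 1
        return "error" if calls["count"] == 1 else f"{order_id} rerouted"

    return reroute


def make_tools() -> Dict[str, Tool]:
    return {
        "check_status": lambda order_id: f"{order_id} delayed",
        "reroute": make_flaky_reroute(),
        "notify_customer": lambda order_id: f"customer notified about {order_id}",
    }


def run_agent(order_id: str, tools: Dict[str, Tool], max_attempts: int = 3) -> List[str]:
    """Execute each planned step, retrying a step when its result is an error."""
    trace: List[str] = []
    for tool_name in plan(order_id):
        for attempt in range(1, max_attempts + 1):
            result = tools[tool_name](order_id)          # tool use + execution
            trace.append(f"{tool_name}#{attempt}: {result}")
            if result != "error":                        # feedback: check result
                break
        else:
            # Every attempt failed, so abandon the rest of the plan.
            trace.append(f"{tool_name}: gave up")
            break
    return trace


for line in run_agent("order-42", make_tools()):
    print(line)
```

Retrying is only the simplest kind of adjustment; a fuller agent might instead go back to the model and ask for a revised plan.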

Simplifying Agent Development and Deployment

Building and putting AI agents to work used to feel like a really complex puzzle. You needed a whole team of specialists, and even then, getting things running smoothly could take ages. But honestly, things are getting a lot easier. Google and others are putting a lot of effort into making it simpler for more people to create and use these powerful tools.

Platforms for Easier Agent Creation

Think of these platforms as toolkits that give you pre-built pieces and clear instructions. Instead of starting from scratch, you can use these frameworks to assemble your agent. They handle a lot of the tricky background stuff, letting you focus on what you want your agent to do. It’s kind of like using a website builder instead of coding a whole site from the ground up. These platforms often come with ways to connect your agent to different services, like databases or other software, which is a big help. You can find resources that explain how to get started with these agent frameworks, making the initial steps less intimidating.

Building for Complex Tasks

Okay, so maybe you don’t just want an agent to answer simple questions. What if you need it to manage a whole project, analyze a huge set of documents, or even coordinate a series of actions? That’s where the more advanced capabilities come in. These platforms help you break down big, complicated jobs into smaller, manageable steps that the agent can follow. It’s about giving the agent a clear plan and the ability to execute it, piece by piece. This means you can design agents that go beyond basic tasks and tackle real-world problems that require a sequence of operations. The goal is to make these agents capable of handling multi-step processes without constant human intervention.
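As a rough illustration of breaking a big job into ordered steps, the sketch below describes a document-analysis task as steps with dependencies and lets Python's standard `graphlib` module work out a valid execution order. The step names are invented for the example, and this shows the general idea rather than how any particular agent platform does it.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each step maps to the set of steps that must finish before it can start.
STEPS: Dict[str, Set[str]] = {
    "collect_documents": set(),
    "extract_key_points": {"collect_documents"},
    "check_deadlines": {"collect_documents"},
    "write_summary": {"extract_key_points", "check_deadlines"},
}


def execution_order(steps: Dict[str, Set[str]]) -> List[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(steps).static_order())


for step in execution_order(STEPS):
    print(step)
```

The two middle steps do not depend on each other, so they can come out in either order, and an orchestrator could just as well run them in parallel.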

Iterative Development for Specific Use Cases

Developing an AI agent isn’t usually a one-and-done thing. It’s more like a process of building, testing, and refining. You start with a basic version for a specific job, see how it performs, and then make improvements. This iterative approach is key. For example, if you’re building an agent to help with customer support, you might first train it to handle common questions. Then, you’d watch how it interacts with customers, identify areas where it struggles, and update its knowledge or logic. This cycle of testing and tweaking helps you tailor the agent precisely to your needs. It’s about making sure the agent gets better and more reliable over time, specifically for the tasks you need it to perform.
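One simple way to support this build, test, refine cycle is a small evaluation set that every new version of the agent is run against. The sketch below is purely illustrative: the two "agents" are keyword matchers standing in for real ones, and the test cases are made up.

```python
from typing import Callable, List, Tuple

# (customer question, expected intent): a tiny, made-up evaluation set.
CASES: List[Tuple[str, str]] = [
    ("How do I reset my password?", "reset"),
    ("Where is my refund?", "refund"),
    ("Cancel my subscription", "cancel"),
]


def agent_v1(question: str) -> str:
    """First iteration: only knows about password resets."""
    return "reset" if "password" in question.lower() else "unknown"


def agent_v2(question: str) -> str:
    """Second iteration: extended after reviewing v1's failures."""
    text = question.lower()
    for keyword, intent in (("password", "reset"), ("refund", "refund"), ("cancel", "cancel")):
        if keyword in text:
            return intent
    return "unknown"


def evaluate(agent: Callable[[str], str], cases: List[Tuple[str, str]]) -> Tuple[float, List[str]]:
    """Return the pass rate and the questions the agent got wrong."""
    failures = [q for q, expected in cases if agent(q) != expected]
    return 1 - len(failures) / len(cases), failures


for name, agent in (("v1", agent_v1), ("v2", agent_v2)):
    rate, failures = evaluate(agent, CASES)
    print(name, f"{rate:.0%}", failures)
```

The list of failures is the useful part: it tells you what to fix in the next iteration, and the same cases then guard against regressions.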

Navigating the Security Landscape of Google AI Agents

Okay, so we’ve talked about what these AI agents are and how they work, but what about keeping them safe? It’s a big deal, because these agents can do more than just chat; they can actually act in the real world, which opens up a whole new can of worms when it comes to security. It’s not like just securing a website anymore.

Key Risks: Rogue Actions and Data Disclosure

When you give an AI agent the power to take action, there are a couple of major worries that pop up. First off, there’s the risk of rogue actions. This is when the agent does something it wasn’t supposed to do – something harmful or just plain wrong, maybe because it misunderstood its instructions or something went haywire in its decision-making. Think of an agent that’s supposed to book a meeting but accidentally cancels all your appointments instead. Yikes.

Then there’s the issue of sensitive data disclosure. These agents often need access to a lot of information to do their jobs. If that information isn’t handled carefully, private details could end up in the wrong hands, either by accident or if the agent is tricked. This could be anything from personal employee data to confidential company information.

Challenges to Traditional Security Approaches

Because AI agents are so different from what we’re used to, our old security methods might not cut it. Traditional security often relies on set rules and known patterns. But AI agents can be unpredictable. Their decision-making process can be really complex, and they interact with the outside world through tools and APIs. This makes it tough for standard security systems to keep up. They might not be able to spot new kinds of threats or understand the nuances of an agent’s actions. It’s like trying to use a lock and key on a door that can phase through walls.

The Need for Adaptive, Model-Based Defenses

So, what’s the answer? We need security that can adapt and learn, much like the agents themselves. This means moving beyond just blocking known bad stuff. We need defenses that can understand the agent’s reasoning and spot unusual or risky behavior, even if it’s something we haven’t seen before. It’s about building smarter defenses for smarter systems.

Google’s Defense-in-Depth Strategy for Agents

So, when it comes to the agents Google is developing, security is a big deal. It’s not just about making them smart; it’s about making sure they don’t go rogue or spill sensitive information. As covered above, the paper points to two main worries: rogue actions, where an agent does something it shouldn’t, and data disclosure, where private stuff gets out. Because agents can be unpredictable and interact with the outside world in complex ways, traditional security methods on their own don’t cut it anymore.

Layered Defenses: Traditional and Adaptive

Google’s approach is all about layers, kind of like an onion. They call it a defense-in-depth strategy. It mixes old-school security with newer, smarter methods. Think of it as having multiple safety nets.

- Traditional Controls: This is like setting up strict rules that the agent has to follow. These are predictable and can be checked. For example, you could set a rule that an agent can’t spend more than a certain amount of money without asking a human first. These are good for clear-cut limits, but they can’t catch every tricky situation.

- Adaptive Defenses: This is where things get more interesting. Instead of just following rigid rules, these defenses use the AI models themselves to figure out if something is fishy. This can involve, for example, training the AI to spot bad intentions.
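The spending-limit rule mentioned under traditional controls is easy to sketch as a deterministic policy check that runs before any tool call. The tool names, the limit of 100, and the `Verdict` values below are assumptions for the example, not details from Google’s paper.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN = "needs_human"
    DENY = "deny"


@dataclass(frozen=True)
class ProposedAction:
    tool: str
    amount: float = 0.0  # money the action would spend, if any


# Hypothetical policy: which tools exist and how much may be spent unattended.
ALLOWED_TOOLS = {"search_flights", "purchase"}
SPEND_LIMIT = 100.0


def check_policy(action: ProposedAction) -> Verdict:
    """Deterministic rule check applied before the agent may run a tool."""
    if action.tool not in ALLOWED_TOOLS:
        return Verdict.DENY
    if action.tool == "purchase" and action.amount > SPEND_LIMIT:
        return Verdict.NEEDS_HUMAN
    return Verdict.ALLOW


print(check_policy(ProposedAction("purchase", amount=250.0)).value)
```

Because the check is plain code, it behaves the same way every time and is easy to test, which is exactly the strength (and the limit) of this kind of control.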

Ensuring Transparency and Observability

When you’re dealing with AI agents, especially those that can take action in the real world, knowing what’s going on under the hood is pretty important. It’s not just about getting an answer; it’s about understanding how that answer was reached and what steps the agent took. This is where transparency and observability come into play.

The Importance of Robust Logging

Think of logging like a detailed diary for your AI agent. Every decision, every action, every piece of data it accessed – it all needs to be written down. This isn’t just for debugging when something goes wrong, though that’s a big part of it. If an agent makes a mistake, or worse, does something unintended, you need to be able to trace back its steps to figure out why. This helps in fixing the problem and also in explaining what happened, which can be really important if you need to talk to regulators or just want to build trust with users.

- Detailed Action Records: Log every API call, every data retrieval, and every output generated.

- Decision Trail: Record the reasoning or the specific prompts that led to a particular action.

- Error Reporting: Capture any failures or unexpected behaviors immediately.
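The three kinds of records above could be captured as one JSON line per event, which keeps the log easy to search later. This is a minimal sketch with invented field names; an in-memory buffer stands in for a real log file or logging service.

```python
import io
import json
from datetime import datetime, timezone
from typing import IO, Any, Dict


def log_event(sink: IO[str], kind: str, **fields: Any) -> Dict[str, Any]:
    """Append one JSON line describing an agent event to the sink."""
    record = {"ts": datetime.now(timezone.utc).isoformat(), "kind": kind, **fields}
    sink.write(json.dumps(record) + "\n")
    return record


# Demo: an in-memory sink stands in for a real log destination.
sink = io.StringIO()
log_event(sink, "decision", reasoning="user asked for the cheapest flight")
log_event(sink, "action", tool="search_flights", args={"to": "LIS"}, status="ok")
log_event(sink, "error", tool="book_flight", message="payment declined")

for line in sink.getvalue().splitlines():
    print(json.loads(line)["kind"])
```

Writing the decision record before the action it leads to makes it possible to read the log top to bottom and follow why each step happened.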

Making Agent Actions and Reasoning Transparent

Just having logs isn’t enough if they’re buried in a technical mess. The goal is to make the agent’s process understandable to humans. This means having user interfaces that can present this information clearly. Imagine an agent that booked a flight for you; you’d want to see not just the confirmation, but also why it chose that specific flight, what options it considered, and what criteria it used. This level of clarity builds confidence and allows users to correct or guide the agent if needed.

Secure Centralized Logging Systems

All this logging needs to happen in a way that’s secure and organized. A centralized system means all the agent’s activities are in one place, making it easier to manage and analyze. And ‘secure’ is key here – you don’t want sensitive information within the logs to be exposed. This system needs to be robust enough to handle the volume of data and protected against unauthorized access. It’s about creating a reliable audit trail that you can count on.
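One piece of keeping logs safe is scrubbing sensitive values before a record ever leaves the agent. The sketch below shows the idea with a hypothetical list of sensitive keys and a simple email pattern; a real deployment would need a much more careful definition of what counts as sensitive, plus access controls on the log store itself.

```python
import re
from typing import Any, Dict

# Hypothetical list of field names whose values should never be logged.
SENSITIVE_KEYS = {"password", "api_key", "ssn"}
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with sensitive values masked."""
    clean: Dict[str, Any] = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, dict):
            clean[key] = redact(value)  # recurse into nested arguments
        elif isinstance(value, str):
            clean[key] = EMAIL.sub("[EMAIL]", value)
        else:
            clean[key] = value
    return clean


event = {
    "tool": "send_email",
    "args": {"to": "ana@example.com", "api_key": "abc123"},
    "note": "contact bob@example.org now",
}
print(redact(event))
```

The function returns a new dictionary instead of editing the original, so the agent can keep working with the real values while only the scrubbed copy is shipped to the central log.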

Future Directions and Ongoing Challenges

So, where do we go from here with Google AI agents? It’s not just about building them; it’s about making them work well for everyone, all the time. One of the big pushes is making these agents easier for people to use and getting them to play nice with other systems. Think about it: if your agent can’t talk to your other software, it’s kind of a lonely existence for it, right? We want them to be interoperable, like different apps on your phone that just work together. This push for seamless integration is key to unlocking their full potential.

Then there’s the whole privacy thing. As agents get smarter and can do more, they’re going to handle more personal information. We need solid rules and ways to make sure that data stays safe and isn’t misused. It’s a tricky balance, for sure. We’re also seeing a lot of discussion about how much control humans should have. While we want agents to be autonomous, having a human in the loop, especially for important decisions, seems like a smart move for now. Continuous testing is also a big deal. We can’t just build an agent and forget about it. We need to keep checking that it’s doing what it’s supposed to do, safely and effectively. It’s an ongoing process, really.

Here are some of the areas getting a lot of attention:

- User Experience: Making interfaces intuitive and natural language interactions smooth.

- Interoperability: Developing standards so agents can connect with various applications and data sources.

- Privacy Safeguards: Implementing robust data anonymization and access control mechanisms.

- Ethical Frameworks: Establishing clear guidelines for agent behavior and decision-making.

It’s a complex landscape, and the folks at Google are definitely thinking about these issues as they develop these technologies further.

We also need to keep an eye on how these agents are used in specific fields. For instance, in law, AI agents are starting to do some pretty advanced things, like understanding complex legal documents and even predicting case outcomes. Preparing legal teams for this shift involves training them on AI literacy and managing the change effectively. It’s a big adjustment, but one that could really change how legal work gets done.

Wrapping It Up

So, that’s a look at Google’s take on AI agents, straight from their white paper. It’s pretty clear these aren’t just fancy chatbots anymore. They’re designed to actually do things, using tools and making plans, kind of like how we solve problems every day. Getting them to work means setting them up right with a smart model, useful tools, and a system to manage everything. Platforms are making it easier to build these things, which is good news. But we’re not quite at the finish line yet. There are still some big hurdles to clear, like making them simple to use, making sure they play nice with other systems, and keeping everything private. It’s a developing field, for sure, and this paper gives us a solid look at where things are headed and what we need to think about as these agents become a bigger part of our world.

Frequently Asked Questions

What exactly is a Google AI agent?

Think of a Google AI agent as a super-smart computer helper. It’s not just a program that answers questions. It can understand what you need, figure out a plan, use different tools (like searching the internet or accessing a database), and then actually do things to help you achieve your goal. It’s like having a digital assistant that can think, plan, and act.

What makes an AI agent different from regular AI?

Regular AI, like a chatbot, usually just responds to what you say. An AI agent is different because it can take actions on its own. It can make decisions, create a plan to reach a goal, and use tools to interact with the world. It’s more like a person solving a problem than just answering a question.

What are the main parts that make up an AI agent?

An AI agent needs a few key things to work. First, it needs a smart brain (a model) to make decisions. Second, it needs tools to do things, like connect to the internet or use software. Third, it needs a manager (an orchestration system) to help it plan its steps and decide which tools to use and when.

How do AI agents get their ‘superpowers’?

AI agents get their amazing abilities by using tools. These tools can be things like APIs, which let them connect to other services, or databases where they can find information. This allows them to go beyond just talking and actually perform tasks, like booking a flight or managing your schedule.

Is it hard to build your own AI agent?

It’s getting easier! Google and other companies are creating platforms that make it simpler to build and set up AI agents. You can create agents for simple tasks or even really complicated ones. It’s like having building blocks that help you put together your own smart assistant.

What are the biggest worries about using AI agents?

Since agents can take action, there are worries they might do something wrong or harmful by accident. Another concern is that they might accidentally share private information they shouldn’t. Because they can be complex and act on their own, keeping them safe and secure is a big challenge that needs careful attention.