You know, it feels like AI is everywhere these days. From helping with homework to creating art, it’s become a big part of our digital lives. But have you ever stopped to think about how much power all of this AI actually uses? It’s a question that’s getting more attention lately, and for good reason. The energy demands are significant, and it’s not always clear how they add up. We’re going to unpack just how much energy AI uses and what that means for us.

Key Takeaways

- We’re using AI for more things than ever, from simple questions to creating videos, and each use adds up in energy consumption.

- Figuring out exactly how much energy AI uses is tricky because companies don’t share a lot of details, and different AI models work differently.

- AI’s huge energy needs are making data centers grow, which could mean higher electricity bills for everyone and more reliance on fossil fuels.

- The amount of electricity AI uses is expected to go up a lot in the next few years, potentially using as much power as millions of homes.

- There’s a push for tech companies to be more open about how much energy their AI products consume so we can better understand the impact.

Understanding AI’s Growing Energy Footprint

It’s easy to think of artificial intelligence as just software, something that lives in the cloud and doesn’t really take up much physical space. But that’s not quite right. Every time you ask a chatbot a question, generate an image, or use an AI-powered feature in an app, there’s an energy cost involved. This energy powers the massive data centers filled with servers that do all the heavy lifting for AI. The integration of AI into our daily digital lives is happening faster than many expected, and with it comes a significant, often unseen, energy demand.

Think about it: AI isn’t just for tech enthusiasts anymore. It’s showing up everywhere. Need help with homework? There’s an AI for that. Trying to create a cool graphic for a project? AI can do it. Even mundane tasks like tracking your fitness or booking a flight might be using AI behind the scenes. This widespread adoption means that the energy required for AI is no longer a niche concern; it’s becoming a mainstream issue. The sheer scale of this shift is pretty staggering, and major tech companies are pouring resources into expanding AI capabilities, which in turn means they’re looking to harness more and more electricity. This push is so significant that it’s starting to reshape our power grids.

Here’s a look at how AI is weaving itself into our digital world:

- Information Retrieval: Asking AI models questions for research or general knowledge.

- Content Creation: Generating text, images, or even short videos.

- Personalization: AI learning your preferences to tailor experiences.

- Task Automation: Using AI for tasks like scheduling or customer service.

It’s important to realize that the energy used for a single AI text query might seem small, like running a microwave for a few seconds. However, when you multiply that by millions of users and billions of queries, the total energy consumption becomes substantial. This growing demand is a key reason why understanding AI’s energy footprint is so important, especially as we look towards a future powered by these technologies. We need to get a handle on these numbers to plan effectively for AI’s energy future.

To put it in perspective, a day where you heavily use AI – perhaps asking 15 questions about fundraising, trying 10 times to get an image just right, and then creating a few short videos – could use around 2.9 kilowatt-hours of electricity. That’s enough to power an e-bike for over 100 miles or run your microwave for more than three hours straight. While these figures are estimates and can vary wildly depending on the specific AI model and task, they highlight the tangible energy cost of our interactions with AI.
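The comparisons above are plain unit conversions, and they are easy to check. Here is a minimal sketch. The 2.9 kWh and 100-mile figures come from the estimate above, while the 800 W microwave rating is an assumption chosen for illustration.

```python
# Convert a daily AI energy estimate into more familiar terms.
DAILY_AI_KWH = 2.9        # heavy-use day, figure quoted in the text
EBIKE_MILES = 100.0       # e-bike distance quoted in the text
MICROWAVE_WATTS = 800.0   # ASSUMPTION: illustrative microwave power draw


def hours_of_use(energy_kwh: float, appliance_watts: float) -> float:
    """Hours an appliance drawing `appliance_watts` could run on `energy_kwh`."""
    return energy_kwh * 1000.0 / appliance_watts


def wh_per_mile(energy_kwh: float, miles: float) -> float:
    """Energy per mile (in Wh) implied by covering `miles` on `energy_kwh`."""
    return energy_kwh * 1000.0 / miles


microwave_hours = hours_of_use(DAILY_AI_KWH, MICROWAVE_WATTS)
ebike_wh_per_mile = wh_per_mile(DAILY_AI_KWH, EBIKE_MILES)

print(f"Microwave time: {microwave_hours:.1f} hours")
print(f"Implied e-bike consumption: {ebike_wh_per_mile:.0f} Wh per mile")
```

With an 800 W microwave this works out to a bit over three and a half hours, in line with the “more than three hours” quoted above. A higher-wattage microwave would give a shorter time.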

Quantifying the Energy Consumption of AI Models

Trying to pin down exactly how much energy an AI model uses for a single query feels a bit like trying to catch smoke. It’s not as straightforward as checking the wattage on a toaster. The type of AI model, how big it is, what you’re asking it to do – like generating text versus a video – all play a part. Plus, there are all these outside factors you can’t control, like which data center handles your request and when it’s processed. This means one query could use way more energy than another, sometimes thousands of times more.

Most of the big AI names, like ChatGPT or Google’s Gemini, are what we call “closed” models. The companies behind them keep the nitty-gritty details about their energy use pretty secret, mostly because they see it as proprietary information. Honestly, they probably don’t want to share if the numbers aren’t great. This lack of openness makes it tough for researchers and the public to get a clear picture. It’s like trying to understand how a car works without ever seeing the engine.

Estimating Energy Use Per AI Query

So, how do we even start to guess? Well, researchers are doing their best. Some focus on what are called “open-source” models. These are models that anyone can download and tinker with. Researchers can then use special tools to measure how much power a specific piece of hardware, like a GPU, uses for a particular task. For example, a project at the University of Michigan has been tracking these kinds of measurements for popular open-source models. One commonly cited estimate puts a single text-based AI query at around 0.34 watt-hours of electricity. That might not sound like much, but when you think about how many queries are happening every single day, it adds up fast. It’s the sheer volume of these requests that makes the individual energy cost significant.

Challenges in Measuring AI Energy Consumption

Even with open-source models, getting a full picture is tricky. Measuring the power used by the main processing chip, the GPU, is one thing, but that doesn’t account for everything else. You’ve got the CPUs, the cooling fans, and all the other bits and pieces that keep a data center running. Some studies suggest that to get a rough idea of the total energy needed for an AI operation, you might need to double the GPU’s energy use to account for these other components. It’s a bit of guesswork, but it’s the best we have right now. The real numbers are locked away by the companies that make these models, and they’re not exactly eager to share them. We really need more transparency from these tech giants about their AI energy details.
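The doubling rule of thumb described above can be written as a one-line estimate. This is only a sketch: the factor of 2 is the rough multiplier mentioned in the text, and the GPU reading used here is a hypothetical value, not a measurement from any study.

```python
# Rough whole-system estimate from a GPU-only energy measurement.
OVERHEAD_FACTOR = 2.0  # ASSUMPTION: rule-of-thumb multiplier covering CPUs, cooling, etc.


def estimate_total_energy_wh(gpu_energy_wh: float,
                             overhead_factor: float = OVERHEAD_FACTOR) -> float:
    """Scale measured GPU energy (Wh) to an approximate total for the operation."""
    if gpu_energy_wh < 0:
        raise ValueError("gpu_energy_wh must be non-negative")
    if overhead_factor < 1:
        raise ValueError("overhead_factor must be at least 1")
    return gpu_energy_wh * overhead_factor


measured_gpu_wh = 0.5  # HYPOTHETICAL GPU reading for one request
total_wh = estimate_total_energy_wh(measured_gpu_wh)
print(f"GPU only: {measured_gpu_wh} Wh, estimated total: {total_wh} Wh")
```

Keeping the multiplier as a parameter makes it easy to swap in a better figure if a data center operator ever publishes one.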

The Significance of Inference Energy Use

When we talk about AI energy use, there are two main parts: training and inference. Training is when the AI model is first built, and it takes a massive amount of energy, like powering San Francisco for three days just to train one model. But what most of us interact with daily is called inference – that’s when you ask the AI a question, and it gives you an answer. While training is a huge one-time (or periodic) energy cost, inference happens with every single query. So, even if each query uses a small amount of energy, the sheer number of them means that inference can actually end up consuming more energy overall than the initial training. Think of it like this: training is building a factory, but inference is running all the machines in that factory, day in and day out. The ongoing operation, the inference, is where the sustained energy demand really lies.

The Impact of AI on Power Grids and Emissions

AI is changing how we use electricity, and that’s starting to show up on our power grids. Think about all those AI queries happening constantly – each one needs power. When you add up millions, or even billions, of these requests every day, it means data centers are getting bigger and needing more and more electricity. This isn’t just a small bump; it’s a significant shift that utilities and grid operators have to plan for.

Data Centers and Their Growing Electricity Needs

Data centers are the physical homes for AI. They’re packed with powerful computers that run 24/7. As AI gets more popular and integrated into everything from search engines to creative tools, these centers need more power. Companies are building new ones and expanding existing ones at a rapid pace. Some are even looking at building massive facilities that could demand as much power as entire states. This surge in demand puts a strain on existing power infrastructure, forcing utility companies to figure out how to supply enough electricity without causing blackouts or overloading the system.

The Carbon Intensity of AI-Powered Grids

When we talk about AI’s impact on emissions, we have to consider where the electricity comes from. Not all electricity is created equal in terms of its environmental impact. The amount of carbon dioxide released for every kilowatt-hour of electricity used is called carbon intensity. This varies a lot depending on your location. Grids that rely heavily on fossil fuels like coal and natural gas have a higher carbon intensity than those powered by renewable sources like solar and wind. So, if an AI request is processed in a region with a dirtier grid, it will have a larger carbon footprint than the same request processed in a region with a cleaner grid. This means the same AI task can contribute differently to climate change depending on where it’s happening and even when it’s happening, as grid mixes can change throughout the day.
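In other words, emissions are just energy multiplied by carbon intensity. The sketch below shows the same amount of energy producing different footprints on two grids. The two intensity values are hypothetical placeholders picked for illustration, not data for any real region. The 2.9 kWh figure is the heavy-use-day estimate mentioned earlier in the article.

```python
# Emissions = energy used (kWh) x grid carbon intensity (g CO2 per kWh).
# HYPOTHETICAL intensities for illustration only; real values vary by region and hour.
GRID_INTENSITY_G_PER_KWH = {
    "cleaner_grid": 200.0,
    "dirtier_grid": 700.0,
}

ENERGY_KWH = 2.9  # heavy-use day estimate from the article


def emissions_grams(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Grams of CO2 released for a given energy use on a given grid."""
    return energy_kwh * intensity_g_per_kwh


footprints = {
    name: emissions_grams(ENERGY_KWH, intensity)
    for name, intensity in GRID_INTENSITY_G_PER_KWH.items()
}

for name, grams in footprints.items():
    print(f"{name}: {grams:.0f} g CO2")
```

Because the relationship is linear, the ratio between the two footprints is simply the ratio between the two grid intensities, whatever the workload.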

AI’s Role in Reshaping Energy Infrastructure

Because AI needs so much power, it’s influencing how energy infrastructure is developed. Tech companies are making big investments in power generation and transmission to support their data centers. Some are even exploring new power sources, like building their own power plants or investing in renewable energy projects. However, there’s a concern that the way these new data centers are being built and powered might not always align with broader community development or grid stability goals. There’s also a question of who pays for all this new infrastructure. Research suggests that the discounts utility companies offer to large tech companies for their massive power consumption could end up increasing electricity rates for regular households. This means everyday people might end up subsidizing the energy costs of the AI revolution, potentially adding significant amounts to monthly bills, especially in areas where data center growth is concentrated.

Future Projections for AI Energy Demand

It’s pretty clear that AI isn’t just a passing trend; it’s becoming a bigger part of our lives every single day. Think about it – from helping with homework to generating images, AI is everywhere. But all this convenience comes with a significant energy cost, and the numbers are only going to get bigger.

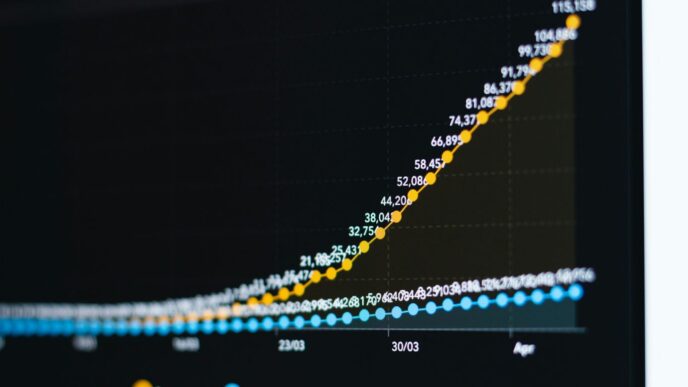

Anticipated Growth in AI’s Electricity Consumption

We’re looking at a massive jump in how much electricity AI will need. Some reports suggest that by 2028, AI-specific servers could be using between 165 and 326 terawatt-hours of electricity annually in the US alone. That’s a huge chunk, potentially enough to power over 22% of all US households. To put it another way, that’s more electricity than all current US data centers use for everything combined. This rapid increase is primarily driven by the adoption of AI and the specialized hardware needed to run it. Before AI, data center energy use was pretty steady for years, but AI has completely changed that picture.

The Potential Impact on Household Energy Bills

So, what does this mean for us? Well, the deals that utility companies are making with these massive data centers to meet their growing energy demands could end up costing us more. We might see our own electricity bills go up as the infrastructure is expanded and powered. It’s like the cost of this AI revolution is being spread out, and we’ll likely be footing part of the bill.

The Shift Towards More Energy-Intensive AI Applications

It’s not just about more AI queries; it’s about the type of AI we’re using. We’re moving towards more complex AI models. For instance, ‘reasoning models’ that can logically work through problems, while useful, can use far more energy per request than simpler models. Imagine AI agents that can handle complex jobs for you, or models that do deep research. These advanced capabilities require significantly more power. Companies are already investing billions in building massive data centers and even looking into new nuclear power plants to keep up with this demand. It’s a clear sign that the future of AI is going to be even more energy-hungry than what we’re seeing today.

The Need for Transparency in AI Energy Reporting

It feels like every day there’s a new AI tool or feature popping up, and honestly, it’s kind of exciting. But as we get more into this AI stuff, a big question keeps coming up: how much energy is all this actually using? The companies making these AI models are pretty quiet about the details. They’re not exactly shouting from the rooftops about how much power their systems chew through. This lack of openness makes it really hard for anyone outside the company to figure out the real energy cost of using AI, or what kind of impact it’s having on the environment. It’s like trying to guess how much fuel a rocket uses without anyone telling you anything about the engine.

Why Tech Giants Keep AI Energy Details Secret

So, why the big secret? Well, a lot of it comes down to business. Companies pour billions into developing these AI models, and the specifics of how they work, including their energy consumption, are often seen as trade secrets. They worry that if they share too much, competitors might get a leg up. Plus, the energy usage can vary a lot depending on the specific task, the hardware used, and even where the data center is located. It’s not a simple number to just put out there. But this secrecy leaves researchers and the public in the dark, making it tough to plan for the future energy needs of AI. It also means we can’t easily compare different AI models to see which ones are more energy-efficient. We’re basically flying blind when it comes to understanding the full picture of AI’s energy demands.

The Importance of Open-Source Model Analysis

When AI models are open-source, it’s a game-changer for transparency. Researchers can get their hands on the models, look at the code, and actually measure the energy used for different tasks. This kind of open analysis is super important. It helps us understand not just how much energy a model uses, but also how efficient it is. For example, we can see how many watt-hours are used for a single query or for generating an image. This kind of data is what we need to push for more energy-efficient AI development. Without it, we’re just guessing. It’s like trying to fix a car without a manual – you might get lucky, but it’s a lot harder.

Calls for Greater Disclosure from AI Companies

There’s a growing chorus of voices, including scientists and environmental groups, calling for AI companies to be more upfront about their energy use. They argue that as AI becomes more integrated into our lives, we all have a right to know the environmental cost. Some suggest that companies could share anonymized data or report energy use in standardized ways, even without giving away their secret sauce. This would allow for better planning and accountability. Right now, it feels like we’re building a massive new energy infrastructure for AI without a clear roadmap. This lack of information means that the costs of this AI boom could end up being passed on to regular consumers through higher electricity bills. It’s a situation where we need more information to make informed decisions about how we want AI to shape our future energy landscape.

Examining the Energy Costs of AI Training vs. Inference

When we talk about AI’s energy use, it’s easy to get caught up in the massive upfront cost of training these complex models. Think of it like building a skyscraper – it takes a huge amount of energy and resources to get it off the ground. But what happens after the skyscraper is built and people start using it? That’s where ‘inference’ comes in, and it’s becoming a much bigger piece of the energy puzzle.

The Energy Intensity of Training AI Models

Training an AI model is like sending a student to university for years. It involves feeding the model vast amounts of data, letting it learn patterns, and adjusting its internal workings. This process requires immense computing power, often running for weeks or even months on specialized hardware like GPUs. For instance, training a model like GPT-4 is estimated to have cost over $100 million and consumed around 50 gigawatt-hours of electricity. That’s enough power to keep San Francisco running for three days! It’s a significant energy investment, but it’s a one-time (or at least infrequent) event for a given model version.

Why Inference Energy Use Can Surpass Training

Here’s where things get interesting. While training is a big energy hog, the ongoing use of AI models – what we call inference – can actually end up consuming more energy over time. Every time you ask an AI a question, generate an image, or get a video created, that’s an inference request. Each of these requests uses a small amount of energy, but when you multiply that by millions of users making billions of queries, the total energy consumption adds up fast. Some researchers suggest that inference now accounts for 80-90% of an AI model’s total energy demand. So, while training is the initial massive burst, inference is the steady, continuous drain that can ultimately be larger.

Measuring the Energy Draw of AI Inference

Figuring out the exact energy cost of a single AI query is tricky. Companies developing these models are often tight-lipped about the specifics, like the number of parameters in their models or how they distribute user requests across their data centers. This lack of transparency makes it hard to get precise numbers. However, researchers are working on ways to estimate and compare the energy efficiency of different AI models. For example, one estimate suggests a single query might use around 0.34 Wh. If a model handles a billion queries a day, that adds up quickly. The real challenge is getting companies to share their actual energy usage data so we can get a clearer picture. Understanding the energy use per AI query is key to measuring the environmental impact of AI inference.

Here’s a simplified look at how the energy use can stack up:

| Activity | Estimated Energy Use (Wh) |
|---|---|
| Training a large model (one-time total) | 50,000,000,000 (50 GWh) |
| Single AI query (estimate) | 0.34 |
| 1 billion queries (per day) | 340,000,000 (340 MWh) |

It’s clear that while training is a massive initial investment, the cumulative effect of billions of daily inference requests is where the long-term energy story of AI truly lies.
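To see how quickly inference catches up, the figures above can be put into a short calculation. This is a back-of-the-envelope sketch using only the article’s estimates (50 GWh for training, 0.34 Wh per query, one billion queries a day). It assumes a constant query volume and constant per-query energy, which real services will not match exactly.

```python
# Back-of-the-envelope: how many days of inference equal one training run?
TRAINING_WH = 50_000_000_000      # 50 GWh training estimate from the article
WH_PER_QUERY = 0.34               # per-query estimate from the article
QUERIES_PER_DAY = 1_000_000_000   # illustrative daily volume from the article


def daily_inference_wh(wh_per_query: float, queries_per_day: int) -> float:
    """Total inference energy per day, in watt-hours."""
    return wh_per_query * queries_per_day


def days_to_match_training(training_wh: float, daily_wh: float) -> float:
    """Days of inference needed to equal the one-time training energy."""
    return training_wh / daily_wh


daily_wh = daily_inference_wh(WH_PER_QUERY, QUERIES_PER_DAY)
break_even_days = days_to_match_training(TRAINING_WH, daily_wh)

print(f"Daily inference: {daily_wh / 1e6:.0f} MWh")
print(f"Inference matches training after about {break_even_days:.0f} days")
```

Under these figures, cumulative inference energy passes the one-time training cost after roughly 147 days, or a little under five months.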

So, What’s the Bottom Line?

It’s pretty clear that AI is a big deal when it comes to energy use, and honestly, we’re still trying to get a handle on just how much. The numbers we have are a starting point, but the tech companies are keeping a lot of the details close to their chest. What we do know is that AI is already using a significant chunk of power, and that’s only going to grow as it becomes part of more and more things we do every day. It’s not just about individual queries adding up; it’s about the massive infrastructure being built to support it all. We’re talking about new data centers and even new power plants. This means we all might end up paying more for electricity, and we need to think about where that power is coming from. The conversation about AI’s energy footprint is just getting started, and it’s going to be a big one.

Frequently Asked Questions

How much electricity does a single AI question use?

It’s a tiny amount, like a quick zap of power, roughly what a microwave uses in a few seconds. But when millions of people ask AI questions every day, it all adds up quickly. Think of it like a single raindrop versus a flood – one drop is nothing, but many raindrops can cause a big impact.

Why is AI using so much energy?

AI needs a lot of power because the computer programs, called models, are really complex. Training these models, which is like teaching them, takes tons of computing power for a long time. Then, when you ask the AI a question or ask it to create something, that’s called ‘inference,’ and it also uses energy, especially when lots of people are using it at the same time.

Will AI make my electricity bill go up?

It’s possible. Big tech companies are building huge buildings full of computers called data centers to run AI. These data centers use a massive amount of electricity. If these companies get special deals on electricity, the extra costs might be passed on to regular customers, making everyone’s bills higher.

Where does the energy for AI come from?

A lot of the electricity used by data centers comes from sources that aren’t clean, like coal and natural gas, which release pollution. This means that using AI can contribute to climate change. Some companies are looking into using cleaner energy sources, but right now, much of it still relies on power that harms the environment.

Do companies tell us how much energy AI uses?

Not really. Most big tech companies keep the exact energy use details for their AI a secret. This makes it hard for scientists and the public to know the true impact. Some researchers are trying to figure it out by studying AI models that are shared openly, but it’s like trying to solve a puzzle with many missing pieces.

Is training AI or using AI more energy-hungry?

While training an AI model uses a lot of energy upfront, the ongoing process of answering millions of questions and creating content (called inference) can actually use even more energy over time. So, even though a single question uses a little energy, the sheer number of questions asked makes inference a big energy user.