Understanding The Core Meaning Of Robotics

So, what exactly is robotics? It’s not just about shiny metal people walking around like in the movies, though that’s part of the fun. At its heart, robotics is a mix of science, engineering, and technology all rolled into one. It’s all about creating machines, which we call robots, that can do things humans do, or sometimes, things humans can’t easily do.

Defining Robotics: Science, Engineering, And Technology

Think of robotics as a field where people with different kinds of expertise come together. Scientists figure out the ‘why’ and ‘how’ of things that could work, engineers design and build the actual machines, and technology provides the tools and methods to make it all happen. It’s this blend that lets us create robots capable of everything from simple, repetitive tasks on an assembly line to complex jobs in places humans can’t go, like deep underwater or on other planets.

The Etymology Of The Word ‘Robot’

It’s kind of interesting where the word ‘robot’ actually comes from. It first appeared in R.U.R. (Rossum’s Universal Robots), a 1920 play by the Czech writer Karel Čapek. The word comes from the Czech ‘robota,’ which means ‘forced labor’ or ‘drudgery.’ So, right from the start, the idea was about machines doing the hard, often unpleasant, work for us. It’s a fitting origin, considering what many robots are still used for today.

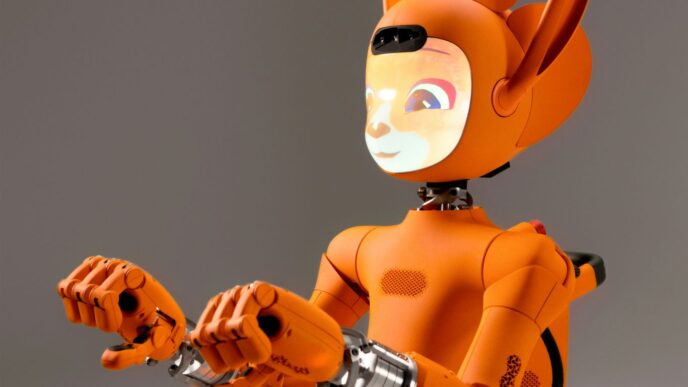

Robots: Machines That Replicate Human Actions

At the most basic level, a robot is a machine that can be programmed to carry out tasks. The key is that it’s designed to perform physical actions, often mimicking human movements or decision-making. This could be anything from a robotic arm on a factory floor precisely placing parts, to a drone flying a pre-set path, or even a small rover exploring Mars. Robots are built to interact with their environment and carry out specific tasks, making our lives easier or letting us achieve things we couldn’t otherwise.

Key Components That Define A Robot

So, what actually makes a machine a robot? It’s not just about looking like a sci-fi character. A robot is a complex system, and it’s built from a few core parts that work together. Think of it like a body with a brain and senses. Without these pieces, a machine is just a machine, not a robot capable of doing anything interesting.

The Role Of The Control System

This is basically the robot’s brain, and it’s where the programming lives. The control system takes all the information coming in from the robot’s sensors and decides what to do next. It’s like when you see a red light and your brain tells your foot to hit the brake. For a robot, this could mean telling an arm to move, a wheel to turn, or a camera to focus. The control system is what gives the robot its ability to act on what it senses. It runs algorithms, which are step-by-step procedures written for specific tasks, whether that’s picking up a package or assisting a surgeon with a delicate operation. This cycle of sensing, deciding, and acting repeats continuously while the robot is running.
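To make the sense, decide, act cycle concrete, here is a minimal Python sketch of one control loop. It assumes a hypothetical two-wheeled robot with a single forward-facing distance sensor. The `decide` function, the 0.5 m stop distance, and the wheel speeds are all made up for illustration. A real controller would also read from and write to actual hardware drivers.

```python
from dataclasses import dataclass

# Hypothetical threshold for this sketch: react if an obstacle is closer than this.
STOP_DISTANCE_M = 0.5


@dataclass
class Command:
    left_speed: float   # wheel speed from -1.0 (full reverse) to 1.0 (full forward)
    right_speed: float


def decide(distance_m: float) -> Command:
    """The 'brain' step: turn the latest sensor reading into an action."""
    if distance_m < STOP_DISTANCE_M:
        # Obstacle ahead: spin in place to look for a clear path.
        return Command(left_speed=-0.3, right_speed=0.3)
    # Path is clear: drive straight ahead.
    return Command(left_speed=0.5, right_speed=0.5)


def control_loop(readings: list[float]) -> list[Command]:
    """Run one sense -> decide -> act cycle per sensor reading."""
    commands = []
    for distance in readings:          # sense: one distance reading per cycle
        command = decide(distance)     # decide
        commands.append(command)       # act: a real robot would send this to its motor drivers
    return commands


if __name__ == "__main__":
    for cmd in control_loop([2.0, 1.1, 0.4, 0.3, 1.5]):
        print(cmd)
```

Keeping `decide` as a pure function of the sensor reading makes the "brain" easy to test on its own, without any hardware attached.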

Sensors: Enabling Environmental Interaction

Robots need to know what’s going on around them, and that’s where sensors come in. They’re like the robot’s eyes, ears, and even its sense of touch. A camera acts as an eye, letting the robot see. Microphones can act as ears, picking up sounds. Other sensors can detect light, temperature, or even proximity to objects. All this information is converted into signals that the control system can understand and use to make decisions. Without sensors, a robot would be blind and deaf to its surroundings, unable to react to anything.
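Here is a small sketch of what "converting information into signals the control system can use" can look like. It makes two assumptions about the hardware: a 10-bit analog-to-digital converter with a 3.3 V reference, and a TMP36-style temperature sensor that outputs 0.5 V at 0 °C plus 10 mV per degree. Your hardware's numbers will differ. The moving average shows one common way to smooth out sensor noise before the control system acts on a reading.

```python
from collections import deque

ADC_MAX = 1023   # assumption: 10-bit analog-to-digital converter (counts 0..1023)
V_REF = 3.3      # assumption: 3.3 V reference voltage


def adc_to_volts(raw: int) -> float:
    """Convert a raw ADC count into the voltage the sensor produced."""
    return raw * V_REF / ADC_MAX


def volts_to_celsius(volts: float) -> float:
    """Assumes a TMP36-style sensor: 0.5 V at 0 °C, plus 10 mV per degree."""
    return (volts - 0.5) * 100.0


class MovingAverage:
    """Smooths noisy readings by averaging the most recent `size` samples."""

    def __init__(self, size: int = 5):
        self.samples = deque(maxlen=size)

    def update(self, value: float) -> float:
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


if __name__ == "__main__":
    smoother = MovingAverage(size=3)
    for raw in (233, 236, 231, 250, 234):   # hypothetical raw readings
        celsius = volts_to_celsius(adc_to_volts(raw))
        print(f"raw={raw} -> {celsius:.1f} °C (smoothed {smoother.update(celsius):.1f} °C)")
```

For example, a raw reading of 233 works out to about 0.75 V, which is roughly 25 °C under these assumptions.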

Actuators: The Driving Force Behind Movement

If sensors are the senses and the control system is the brain, then actuators are the muscles. These are the parts that actually make the robot move. They take commands from the control system and translate them into physical motion. Most actuators are electric motors, but they can also be powered by compressed air (pneumatic) or hydraulic fluid. Different types of actuators are used for different jobs. Some are designed for precise, delicate movements, while others provide the power needed for heavy lifting or fast motion. They are what allow a robot to walk, grasp, lift, or steer.
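As a sketch of how an actuator turns a command into motion, the functions below map high-level commands to the signals two common motor types expect. The numbers are assumptions. Many hobby servos use pulses of roughly 1000 to 2000 microseconds across their range, but the exact limits vary by model. The DC motor mapping assumes a driver that takes a direction plus a PWM duty cycle.

```python
# Assumptions for this sketch: a hobby servo that spans 0-180 degrees with
# pulses of 1000-2000 microseconds (real servos vary), and a DC motor driver
# that accepts a direction plus a PWM duty cycle in percent.
MIN_PULSE_US = 1000
MAX_PULSE_US = 2000
MAX_ANGLE_DEG = 180.0


def angle_to_pulse_us(angle_deg: float) -> int:
    """Convert a target servo angle into a control pulse width."""
    # Clamp so a bad command can never push the servo past its range.
    angle = max(0.0, min(MAX_ANGLE_DEG, angle_deg))
    span = MAX_PULSE_US - MIN_PULSE_US
    return round(MIN_PULSE_US + span * angle / MAX_ANGLE_DEG)


def speed_to_pwm(speed: float) -> tuple[str, float]:
    """Map a speed command in [-1, 1] to (direction, duty cycle in percent)."""
    speed = max(-1.0, min(1.0, speed))
    direction = "forward" if speed >= 0 else "reverse"
    return direction, abs(speed) * 100.0


if __name__ == "__main__":
    print(angle_to_pulse_us(90))   # middle of the servo's range
    print(speed_to_pwm(-0.5))      # half speed in reverse
```

Clamping at the actuator layer is a deliberate design choice. Even if a bug upstream produces a wild command, the motor never receives a signal outside its safe range.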

The Necessity Of A Power Supply

All these components – the brain, the senses, the muscles – need energy to work. That’s where the power supply comes in. Mobile robots usually run on batteries, while larger industrial robots that stay in one place are typically wired directly to mains power. The power supply needs to deliver enough energy, and enough current at peak demand, to keep all the systems running, especially when the robot is performing demanding tasks. It’s the lifeblood of the robot: without it, none of the other components can function.
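A quick way to check whether a battery is up to the job is a rough runtime estimate: usable capacity divided by the total current the components draw. The sketch below assumes a constant average load. It also treats only a fraction of the rated capacity as usable (80% here, a conservative assumption). Real batteries also lose capacity with age, temperature, and high discharge rates.

```python
def estimate_runtime_hours(
    capacity_mah: float,
    loads_ma: dict[str, float],
    usable_fraction: float = 0.8,
) -> float:
    """Rough runtime estimate: usable capacity divided by total average current draw.

    usable_fraction is an assumption: draining a battery completely is usually
    avoided, and real capacity drops with age, temperature and heavy loads.
    """
    total_ma = sum(loads_ma.values())
    if total_ma <= 0:
        raise ValueError("total load must be positive")
    return capacity_mah * usable_fraction / total_ma


if __name__ == "__main__":
    # Hypothetical power budget for a small mobile robot, in milliamps.
    loads = {"drive motors": 2000, "onboard computer": 500, "sensors": 100}
    hours = estimate_runtime_hours(5000, loads)
    print(f"Total draw {sum(loads.values())} mA -> about {hours:.1f} h")
```

With these hypothetical numbers, the calculation is 5000 × 0.8 / 2600 ≈ 1.5 hours. The drive motors dominate the budget, which is typical for mobile robots.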

The Interdisciplinary Nature Of Robotics

Robotics isn’t just about building cool machines that move. It’s a field that pulls together ideas and skills from a bunch of different areas. Think of it like a really complex recipe where you need just the right amount of each ingredient to make it work.

Mechanical Construction For Task Execution

This is where the physical body of the robot comes into play. Engineers design the robot’s structure, its joints, its limbs, and how it will move around. It’s not just about making something that looks like a human or an animal; it’s about creating a form that can actually do the job it’s meant for. For example, a robot designed to pick up delicate objects will need a very different kind of gripper and arm than one built to lift heavy crates. The materials used are also important – they need to be strong enough but not too heavy, and able to withstand the environment the robot will operate in. The mechanical design is what allows the robot to interact physically with the world.
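The shape of the arm directly decides where it can reach. Take a simple two-link arm moving in a flat plane. Standard forward kinematics gives the gripper position from the link lengths and joint angles. The reachable area is a ring around the shoulder, running from |l1 - l2| out to l1 + l2. The sketch below is a simplified model with no joint limits and no third dimension, and the function names are hypothetical.

```python
import math


def forward_kinematics(l1: float, l2: float, theta1: float, theta2: float) -> tuple[float, float]:
    """Gripper (x, y) for a two-link planar arm with its shoulder at the origin.

    l1, l2: link lengths (metres). theta1: shoulder angle from the x-axis;
    theta2: elbow angle measured relative to the first link (both in radians).
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def is_reachable(l1: float, l2: float, x: float, y: float) -> bool:
    """A point is reachable if its distance from the shoulder lies in [|l1 - l2|, l1 + l2]."""
    r = math.hypot(x, y)
    return abs(l1 - l2) <= r <= l1 + l2


if __name__ == "__main__":
    # Hypothetical arm: 0.30 m upper link, 0.25 m forearm.
    print(forward_kinematics(0.30, 0.25, math.radians(45), math.radians(-90)))
    print(is_reachable(0.30, 0.25, 0.60, 0.0))   # too far for this arm
```

A quick reachability check like this is one way to confirm early in the mechanical design that the chosen link lengths can actually cover the workspace the task needs.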

Electrical Components For Power And Control

Once you have the physical structure, you need to bring it to life. That’s where electrical engineering comes in. This involves figuring out how to power the robot, usually with batteries or a direct power source. It also includes all the wiring, circuits, and electronic components that allow the robot to receive commands and execute them. Think of motors that make the joints move, sensors that gather information, and the main processing units that make decisions. It’s a complex web of connections that makes everything happen.
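One everyday example of this electrical work is letting a 3.3 V microcontroller monitor a higher-voltage battery. A resistive voltage divider scales the battery voltage down (Vout = Vin × R2 / (R1 + R2)), and the software scales the reading back up. The resistor values below are assumptions. They are chosen so that a fully charged 3-cell lithium pack (about 12.6 V) stays under 3.3 V.

```python
# Assumed resistor values: R1 = 30 kOhm (top, to the battery), R2 = 10 kOhm (bottom, to ground).
R1_OHM = 30_000.0
R2_OHM = 10_000.0


def divider_output(v_in: float, r1_ohm: float = R1_OHM, r2_ohm: float = R2_OHM) -> float:
    """Voltage at the divider midpoint: v_in * r2 / (r1 + r2)."""
    return v_in * r2_ohm / (r1_ohm + r2_ohm)


def battery_voltage(v_measured: float, r1_ohm: float = R1_OHM, r2_ohm: float = R2_OHM) -> float:
    """Undo the divider in software to recover the battery voltage."""
    return v_measured * (r1_ohm + r2_ohm) / r2_ohm


if __name__ == "__main__":
    full = divider_output(12.6)   # fully charged 3-cell lithium pack
    print(f"ADC sees {full:.2f} V, which maps back to {battery_voltage(full):.2f} V")
```

With these values, a full pack shows up as about 3.15 V at the ADC pin, leaving a little headroom below the 3.3 V limit.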

Software Programming: The Robot’s Brain

All the fancy hardware is useless without the right instructions. This is the job of software programming. Computer scientists and software engineers write the code that tells the robot what to do, how to interpret the information from its sensors, and how to control its movements. This can range from simple commands for repetitive tasks to very complex algorithms that allow the robot to learn and adapt. The software is essentially the robot’s brain, making sense of the world and directing its actions.
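One common way to organize a robot's software is a finite-state machine. The robot is always in one named state, and sensor readings decide when it switches to another. The sketch below is hypothetical: it models a pick-up task, with three boolean inputs standing in for what perception code would actually report.

```python
from enum import Enum, auto


class State(Enum):
    SEARCHING = auto()    # wandering until the target object is seen
    APPROACHING = auto()  # driving toward the object
    GRASPING = auto()     # closing the gripper
    DONE = auto()


def next_state(state: State, object_visible: bool, in_reach: bool, gripper_closed: bool) -> State:
    """Decide the next state from the current one and the latest (hypothetical) perception flags."""
    if state is State.SEARCHING and object_visible:
        return State.APPROACHING
    if state is State.APPROACHING:
        if not object_visible:
            return State.SEARCHING        # lost sight of it: start searching again
        if in_reach:
            return State.GRASPING
    if state is State.GRASPING and gripper_closed:
        return State.DONE
    return state                          # otherwise, keep doing what we're doing


if __name__ == "__main__":
    state = State.SEARCHING
    # Each tuple is one tick of (object_visible, in_reach, gripper_closed).
    for flags in [(False, False, False), (True, False, False), (True, True, False), (True, True, True)]:
        state = next_state(state, *flags)
        print(state.name)
```

State machines like this are easy to read and test. Many robots combine them with lower-level controllers that handle the actual motion inside each state.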

Exploring The Robotics Development Lifecycle

Building a robot isn’t just about slapping some parts together and hoping for the best. It’s a whole process, kind of like building a really complicated Lego set, but with more wires and a lot less plastic. This lifecycle is how we go from a cool idea to a working robot that can actually do stuff.

System Architecture And Component Selection

First off, you need a plan. This is where you figure out the big picture: what kind of robot are we making? What’s it supposed to do? You’ll sketch out the overall design, thinking about how all the different pieces will talk to each other. Then comes picking the actual parts. This isn’t just grabbing whatever looks shiny; you’ve got to think about what each sensor, motor, or computer chip can do, how much power it needs, and if it’ll play nice with everything else. It’s a bit like choosing ingredients for a recipe – you need the right ones for the dish to turn out well.
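Part of component selection is plain arithmetic. The sketch below checks a hypothetical motor catalog against a simplified requirement: the static torque needed to hold a payload at the end of a horizontal arm (mass × g × length), multiplied by a safety factor. It also requires each candidate motor to fit within a current budget the power supply can provide. It ignores the arm's own weight and the extra torque needed to accelerate, so treat it as a first filter, not a final sizing.

```python
from dataclasses import dataclass
from typing import Optional

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class Motor:
    name: str
    rated_torque_nm: float   # continuous torque the motor can deliver
    current_a: float         # current drawn at that torque
    mass_kg: float


def required_torque_nm(payload_kg: float, arm_length_m: float, safety_factor: float = 2.0) -> float:
    """Static torque to hold a payload at the tip of a horizontal arm, with a safety margin."""
    return payload_kg * G * arm_length_m * safety_factor


def pick_motor(catalog: list[Motor], torque_nm: float, max_current_a: float) -> Optional[Motor]:
    """Lightest motor that meets the torque need within the current budget, or None."""
    candidates = [
        m for m in catalog
        if m.rated_torque_nm >= torque_nm and m.current_a <= max_current_a
    ]
    return min(candidates, key=lambda m: m.mass_kg, default=None)


# Hypothetical catalog; real datasheets list many more parameters.
CATALOG = [
    Motor("A", rated_torque_nm=1.0, current_a=1.5, mass_kg=0.20),
    Motor("B", rated_torque_nm=2.5, current_a=2.0, mass_kg=0.35),
    Motor("C", rated_torque_nm=4.0, current_a=5.0, mass_kg=0.50),
]

if __name__ == "__main__":
    need = required_torque_nm(payload_kg=0.4, arm_length_m=0.3)
    choice = pick_motor(CATALOG, need, max_current_a=3.0)
    print(f"need {need:.2f} Nm -> {choice.name if choice else 'no suitable motor'}")
```

Picking the lightest motor that qualifies matters because every gram on the arm adds to the load on the joints below it.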

3D Modeling And Hardware Assembly

Once you have a rough idea of the components, it’s time to get visual. Using 3D modeling software, engineers create a digital blueprint of the robot. This lets them see how everything fits together, spot potential clashes, and even test out different designs before any physical parts are made. Think of it as a virtual test drive for the robot’s body. After the digital model is solid, the real building begins. This is the hands-on part: putting together the mechanical frame, wiring up the electronics, and making sure all the nuts and bolts are tight. It’s where the design starts to take physical shape.
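CAD tools check for clashes using the parts' exact geometry. The basic idea can still be shown with axis-aligned bounding boxes, a common coarse first pass before a detailed check. The part names and dimensions below are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box of a part: min and max corners in millimetres."""
    name: str
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]


def overlaps(a: Box, b: Box) -> bool:
    """Boxes clash only if their extents overlap on all three axes (touching faces don't count)."""
    return all(
        a.min_corner[i] < b.max_corner[i] and b.min_corner[i] < a.max_corner[i]
        for i in range(3)
    )


def find_clashes(parts: list[Box]) -> list[tuple[str, str]]:
    """Check every pair of parts and report the names of the ones that collide."""
    return [(a.name, b.name) for a, b in combinations(parts, 2) if overlaps(a, b)]


if __name__ == "__main__":
    # Hypothetical layout of three parts inside a robot chassis.
    parts = [
        Box("battery", (0, 0, 0), (100, 60, 30)),
        Box("motor", (90, 0, 0), (150, 40, 40)),
        Box("controller board", (0, 0, 30), (80, 60, 35)),
    ]
    print(find_clashes(parts))
```

In this layout, the controller board sits exactly on top of the battery, so the two touch without clashing. The motor, however, overlaps the battery by 10 mm along the x-axis.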

Perception, Planning, And Control Development

This is where the robot starts to get smart. It’s not enough for a robot to just exist physically; it needs to understand its surroundings and figure out what to do. This phase involves writing the software that allows the robot to ‘see’ and ‘hear’ using its sensors (perception). Then, it needs to decide what actions to take based on that information (planning). Finally, it needs to execute those actions smoothly, whether it’s moving an arm or driving across a room (control). This is the brainpower part, making the robot more than just a collection of parts.
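To show the planning step in isolation, here is a breadth-first search over a small occupancy grid, the kind of map perception might produce. Breadth-first search finds a shortest path when every move costs the same. Real robots often use planners such as A* or sampling-based methods, so take this as a minimal stand-in. The grid format ('#' for obstacles, '.' for free space) is an assumption for this sketch.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def plan_path(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Breadth-first search over a 2D occupancy grid.

    grid: list of strings, '#' marks an obstacle, '.' is free space.
    Returns the cells from start to goal (inclusive), or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                frontier.append((nr, nc))
    return None


if __name__ == "__main__":
    warehouse = [
        "....#...",
        ".##.#.#.",
        "....#.#.",
        ".#....#.",
    ]
    print(plan_path(warehouse, (0, 0), (0, 7)))
```

The planner's output is just a list of waypoints. Following them smoothly is the job of the control layer.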

Simulation, Testing, And Deployment

Before you let a robot loose in the real world, especially if it’s going to be interacting with people or delicate environments, you need to test it thoroughly. Simulation software is a big help here. You can run the robot’s code in a virtual environment, trying out all sorts of scenarios – good and bad – without risking actual hardware. Once it performs well in simulation, you move to real-world testing. This involves putting the robot through its paces in controlled environments, then gradually in more complex situations. Finally, when everything checks out, it’s time for deployment, where the robot starts its intended job.
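Here is a toy version of "testing in simulation before touching hardware." A robot on a one-dimensional track is driven by a proportional controller, with speed proportional to the remaining distance and capped at 1 m/s. It is then run through a few scenarios with a pass/fail check. The model is deliberately crude, with no inertia, friction, or sensor noise. The gains, time step, and tolerance are assumptions.

```python
def simulate(target_m: float, kp: float, dt: float = 0.05, steps: int = 400,
             max_speed: float = 1.0) -> list[float]:
    """Position history of a robot on a 1D track under proportional control.

    Deliberately crude model: the commanded speed takes effect instantly
    (no inertia, friction or sensor noise).
    """
    position = 0.0
    history = [position]
    for _ in range(steps):
        error = target_m - position
        # Speed proportional to the remaining distance, capped like a real motor.
        speed = max(-max_speed, min(max_speed, kp * error))
        position += speed * dt
        history.append(position)
    return history


def passes(history: list[float], target_m: float, tolerance_m: float = 0.01) -> bool:
    """Pass if the robot ends near the target and never overshoots it by more than the tolerance."""
    direction = 1.0 if target_m >= 0 else -1.0   # which way the robot travels from 0
    overshoot = max(direction * (p - target_m) for p in history)
    ends_close = abs(history[-1] - target_m) <= tolerance_m
    return ends_close and overshoot <= tolerance_m


if __name__ == "__main__":
    scenarios = [0.5, 1.02, -2.0]   # hypothetical target positions in metres
    for kp in (2.0, 60.0):
        results = {t: passes(simulate(t, kp), t) for t in scenarios}
        print(f"kp={kp}: {results}")
```

With a gain of 2, the robot settles on each of these targets without overshooting. With a gain of 60, the 1.02 m scenario fails because the robot overshoots and chatters back and forth around the target. That is exactly the kind of problem you want to find in simulation rather than on a real robot.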

Advancements And Future Directions In Robotics

It feels like every other week there’s some new development in robotics that makes you stop and think, ‘Wow, robots are really getting smart.’ And honestly, it’s true. The field is moving at a pretty fast clip, especially with how much AI has been improving. We’re seeing robots that can do more than just repeat programmed tasks; they’re starting to figure things out on their own.

The Impact Of Artificial Intelligence On Robotics

Artificial intelligence is kind of the secret sauce behind a lot of this progress. Think about it: AI helps robots make sense of the flood of data coming from their sensors, for example by recognizing objects in camera images. That means they can make better decisions in real time, which is a big deal for things like self-driving cars or robots working in busy factories. AI is essentially giving robots the ability to learn and adapt, moving them beyond simple automation. It’s not just about following instructions anymore; it’s about understanding the environment and reacting appropriately.

Robot Learning And Embodied Intelligence

This is where things get really interesting. Robot learning is all about robots figuring out how to do things through experience, kind of like how we learn. Instead of a programmer writing out every single step, the robot can learn by trying, failing, and trying again. Embodied intelligence ties into this – it’s the idea that a robot’s physical body and how it interacts with the world are just as important as its software for learning. So, a robot that can walk and stumble might learn to balance better than one that’s just simulated. It’s about building robots that can actually do things in the real world, not just in a computer.
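Full robot-learning methods such as reinforcement learning are far more involved, but the trial-and-error core can be sketched in a few lines. Below, a hypothetical robot "learns" its controller gain by random-search hill climbing. Each trial tweaks the gain at random and runs a simulated episode. The tweak is kept only if the robot ended up closer to its target on average. The simulation, cost function, and all numbers are assumptions for illustration. Note also that this sketch learns in simulation, not on a physical body.

```python
import random


def cost(kp: float, target: float = 1.0, dt: float = 0.05, steps: int = 200) -> float:
    """Run one simulated trial and return the average distance from the target (lower is better)."""
    position, total_error = 0.0, 0.0
    for _ in range(steps):
        error = target - position
        speed = max(-1.0, min(1.0, kp * error))  # motor speed limit of 1 m/s
        position += speed * dt
        total_error += abs(target - position)
    return total_error / steps


def learn_gain(initial_kp: float = 0.5, trials: int = 200, step_size: float = 0.5,
               seed: int = 0) -> tuple[float, float]:
    """Hill climbing: try a random tweak, keep it only if the trial went better."""
    rng = random.Random(seed)                 # fixed seed so runs are repeatable
    best_kp, best_cost = initial_kp, cost(initial_kp)
    for _ in range(trials):
        candidate = best_kp + rng.gauss(0.0, step_size)   # try something slightly different
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:                    # success: remember what worked
            best_kp, best_cost = candidate, candidate_cost
    return best_kp, best_cost


if __name__ == "__main__":
    kp, c = learn_gain()
    print(f"starting gain 0.5 (cost {cost(0.5):.3f}) -> learned gain {kp:.2f} (cost {c:.3f})")
```

Because a tweak is only kept when it helps, the result can never be worse than the starting point. The catch is that hill climbing can get stuck on a "good enough" answer, which is one reason real robot-learning methods are more sophisticated.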

The Role Of Large Language Models In Robotics

Remember when chatbots started getting really good at talking? Well, now those kinds of language models, like the ones behind ChatGPT, are starting to show up in robotics. This could mean robots that can understand and respond to complex human instructions much more naturally. Imagine telling a robot, ‘Please tidy up the living room, but be careful with the vase,’ and it actually knows what you mean and how to do it. It could be a big step toward making robots more helpful and easier to work with in our daily lives.

Applications And Career Opportunities In Robotics

Robots aren’t just for sci-fi movies anymore; they’re showing up everywhere, and that means a lot of different jobs are popping up too. Think about it – from the factory floor to your living room, robots are doing all sorts of things.

Diverse Industrial Applications Of Robots

Robots have really become a big deal in manufacturing. They’re great at doing the same thing over and over, like assembling car parts or painting. But it’s not just factories. In agriculture, robots are helping out with planting, harvesting, and even checking on crops, especially in big greenhouses. They can do the tough, repetitive jobs so farmers can focus on other things. Then there’s healthcare, where robots can help with surgeries, deliver medicine, or even just keep patients company. We’re also seeing them in search and rescue, going into dangerous places to help people or put out fires when it’s too risky for humans. Even at home, you’ve probably seen robot vacuums, but there are also robots that can mow your lawn or help with simple chores.

Defining Roles: Robotics Developers Versus Researchers

When you get into robotics, there are generally two main paths. You have the robotics developers, who are the folks that take existing algorithms and tools and put them together to make a robot work. They know which tools to use and how to connect them to the robot’s systems. Then you have the robotics researchers. These are the people who are coming up with the brand-new ideas, developing new algorithms, and figuring out how to solve problems that nobody has solved before. It’s like one group builds the house using blueprints, and the other group designs the blueprints themselves.

Exploring Specialized Engineering Positions

Beyond those two broad categories, there are lots of specific engineering jobs. You might be an embedded systems engineer, working on the low-level code that makes the robot’s hardware function, often using languages like C or C++. Or you could be a perception engineer, focusing on how the robot ‘sees’ and understands its surroundings using cameras and sensors. Then there are control engineers, who figure out how to make the robot move smoothly and precisely. And of course, with all the AI advancements, there are roles for AI and machine learning engineers who specialize in teaching robots to learn and adapt. It’s a field that needs all sorts of skills, from mechanical design to advanced software programming.

Wrapping Things Up

So, we’ve taken a pretty good look at what robotics is all about. It’s not just about fancy machines that look like they’re from a sci-fi movie, though those are cool too. It’s a whole field where engineering, computer science, and a bunch of other smart stuff come together to make machines that can do things. From the nuts and bolts that make them move to the code that tells them what to do, and even how they sense the world around them, it’s a complex but fascinating area. We’ve seen how robots are already working in factories, helping out in medicine, and even exploring other planets. And with AI getting smarter, the possibilities for what robots can do are only going to grow. It’s a field that’s constantly changing, and understanding the basics is a great first step for anyone curious about the future of technology and how it’s shaping our world.