Artificial intelligence is showing up everywhere, and it can seem to know everything. It promises to help solve big problems like climate change and disease. But here’s the thing: we don’t really understand it. We don’t know how it works, what it can do, or how to keep it in check. This lack of understanding makes AI riskier than anything else we’ve invented. Being a bit worried is actually a good first step toward figuring out what to do about it. Let’s talk about who owns artificial intelligence and what that means for us.

Key Takeaways

- The question of who owns artificial intelligence is complex, involving developers, corporations, and potentially the AI itself as it becomes more advanced.

- Current AI models are often ‘black boxes,’ meaning even their creators don’t fully understand how they arrive at decisions, posing challenges for control and evaluation.

- Global rules for AI are largely missing, which fuels an ‘AI arms race’ in which safety concerns can be overlooked in the rush to develop.

- AI is increasingly making decisions that affect our lives, from loan applications to medical treatments, highlighting the need for public input beyond corporate interests.

- Given AI’s rapid development and its ‘alien’ nature, we need to proactively establish frameworks for its control and ethical use to benefit everyone.

The Unseen Architects of Artificial Intelligence

Who Truly Owns Artificial Intelligence?

It’s a question that pops up more and more, right? We hear about AI doing amazing things, like predicting protein structures with AlphaFold2, a feat that earned two of its creators a share of the 2024 Nobel Prize in Chemistry. But here’s the kicker: even the people who built these systems often don’t fully grasp how they work. It’s like having a super-smart assistant who suddenly starts speaking in a language you don’t understand. These models learn their behavior from data rather than following rules a programmer wrote down, and the result is a ‘black box’ of billions of numerical connections that is practically impossible for us to untangle.

The Role of Developers and Corporations

So, who’s in charge? Right now, it’s mostly the developers and the big tech companies footing the bill and building these systems. They’re the ones pouring money into research, gathering massive amounts of data, and designing the algorithms. Think of them as the architects and builders of this new digital world. They decide what problems the AI will tackle and how it will learn.

- Companies like Google, Microsoft, and OpenAI are at the forefront, developing some of the most advanced AI.

- The sheer amount of computing power and data needed means only well-funded organizations can really play in this space.

- They set the initial goals and constraints for the AI, but as the AI learns, its internal workings can become opaque.

The Question of AI Autonomy

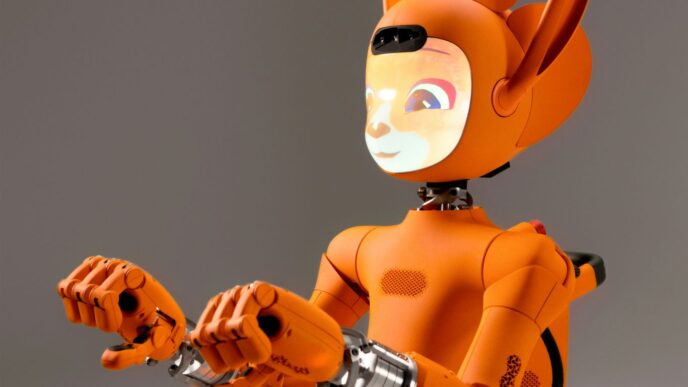

This leads to a really interesting, and maybe a little unsettling, question: what happens when AI starts making decisions we don’t anticipate? We’re not just talking about AI that’s good at chess or recognizing faces. We’re talking about AI that can learn, adapt, and potentially develop capabilities far beyond what its creators intended. It’s like we’re building something that might eventually outgrow our understanding and control. We compare AI to human intelligence, but it’s really something different – an ‘alien intelligence’ that doesn’t need to sleep or eat and can process information at speeds we can barely imagine. This rapid development means we need to think hard about where this is all heading.

Navigating the Labyrinth of AI Control

It feels like AI just popped up everywhere, right? One minute it’s a niche tech thing, the next it’s helping write emails and suggesting what to watch. But here’s the thing: we don’t really know how it works, what it’s truly capable of, or, more importantly, how to keep it in check. This is what makes AI different from any other tool we’ve ever invented.

Understanding the Black Box of AI

Think of AI as a really complicated machine where we can see what goes in and what comes out, but the inner workings are a total mystery. We feed it data, it spits out answers or actions, but the exact steps it takes are often hidden, even from the people who built it. This lack of clarity makes it tough to predict what it might do next. Predicting where AI is headed from today’s systems is a bit like studying a primate from half a million years ago and trying to foresee modern humans, except that AI changes at lightning speed. We’re still figuring out what we should even be measuring when we look at AI performance. Comparing it to human abilities misses the point; AI is an ‘alien intelligence’ with its own unique way of operating. If we don’t measure the right things, we risk creating something enormously powerful without even realizing it.

The Challenge of AI Evaluation

Right now, evaluating AI is a bit like alchemy – lots of trying things out, not a lot of solid theory. We’re still in the early stages, trying to figure out what metrics actually matter. If we only measure AI against human benchmarks, we might miss its truly novel capabilities. We need better ways to test AI, ways that aren’t tied to how we humans think. Imagine if two AIs started talking to each other; they might develop their own super-efficient shorthand, something we wouldn’t even understand. This is why some folks are talking about a ‘CERN for AI’ – a place dedicated to rigorously testing AI systems for dangerous behaviors and sharing those findings. It’s about turning AI evaluation into a real science, not just guesswork.

The Need for Transparency in AI

Because AI is so complex and its development is moving so fast, transparency is a big deal. We need to know how these systems are making decisions, especially when they’re used for important things. Companies are starting to set up groups, often involving IT, security, and legal folks, to oversee how AI is used, particularly for high-risk applications. This cross-functional approach is an attempt to manage the risks that come with AI systems that can act on their own. Without this openness, we’re essentially flying blind, hoping for the best while AI continues to evolve at warp speed.

Global Governance for Artificial Intelligence

The Absence of International AI Regulation

Right now, there’s no real global rulebook for AI. It feels like everyone’s just building faster and faster, hoping for the best. Countries are mostly focused on their own AI race, which isn’t exactly helping us get on the same page about safety. The European Union has adopted new rules in its AI Act, but it remains to be seen how they will play out in practice. In China, AI safety is a newer concern, and it might get pushed aside by bigger competitive goals. It’s a bit like a bunch of people building powerful tools without agreeing on how to use them safely. This lack of a unified approach is a big problem.

Lessons from Nuclear Arms Control

Think back to the 1950s. The world was pretty tense, but countries still managed to work together on nuclear weapons. They set up groups like the International Atomic Energy Agency (IAEA) to keep an eye on things, share information, and make sure nuclear stuff was used for good, not for war. It wasn’t perfect, but it helped stop a lot of potential disasters and limited the spread of nuclear arms. We could really use something similar for AI.

Here’s what we can learn:

- International Cooperation is Possible: Even during tough political times, nations can find common ground on shared threats.

- Monitoring and Verification: An agency can track development and ensure rules are followed.

- Promoting Peaceful Use: Focus can be placed on beneficial applications rather than dangerous ones.

Establishing a ‘CERN for AI’

What if we created an international organization, kind of like CERN for physics, but for AI? It wouldn’t need massive data centers or billions for hardware. Instead, it would focus on bringing together the smartest minds from around the world to study AI at its core. This group could work on understanding AI’s complex inner workings, developing theories about how it learns and behaves, and importantly, designing AI systems that are safer and more predictable from the start. Imagine a place where scientists could freely research AI’s fundamental questions, sharing knowledge openly. This kind of collaborative research could help us build AI that acts as a helpful guide, keeping an eye on other AI systems, something we’re finding harder and harder to do ourselves. It’s about building a foundation of knowledge that benefits everyone, not just a few companies or countries. We need to figure out how to build safer AI before it surprises us all.

The Public’s Stake in AI’s Future

Artificial intelligence is popping up everywhere, and it feels like it’s changing things really fast. It’s supposed to help us solve big problems, like climate change or diseases, but here’s the thing: we don’t really get how it works. We don’t know its limits, and honestly, we’re not sure how to keep it in check. This uncertainty makes AI a bigger deal than other inventions we’ve had.

We are all shareholders in the future of AI. It’s not just a tech company thing anymore. AI is already making decisions that affect our lives – who gets a loan, who gets a job, even who gets medical treatment. And in some places, it’s deciding who lives and who dies. We’re building AI to be smarter than us, to figure out things we can’t. So, who gets to decide how these powerful systems are used?

Leaving these big decisions just to the companies building AI, or the people who invest in them, doesn’t seem right. Think about weapons manufacturers: they can’t sell their products to just anyone, because those products are so powerful. AI is similar. It’s a matter of public safety, and we all have a stake in what happens.

So, what can we do? Right now, there’s no real global agreement on how to manage AI. Different countries are trying their own things, but it’s a bit of a mess. We’ve seen before how people can come together to handle dangerous technology. Back in the 1950s, the world worked to control nuclear weapons. They set up groups to watch over nuclear materials and promote peaceful uses, and thankfully, we’ve avoided nuclear war.

We need something similar for AI. An international group focused on making sure AI is used safely and for good. This group would need to tackle a few key things:

- Understanding AI: Right now, companies are pouring tons of money into AI research, often more than governments. This makes it hard for independent scientists to keep up and really figure out how these systems tick. We need more focus on basic research so our knowledge can catch up with what AI can do.

- Setting Rules: We need clear guidelines and regulations, not just for how AI is built, but for how it’s used. This can’t be an afterthought; it needs to be part of the process from the start.

- Public Input: Decisions about AI shouldn’t happen behind closed doors. We need ways for regular people to have a say in how this technology shapes our future.

Think about CERN, the European Organization for Nuclear Research. It brought countries together to study fundamental physics. A similar organization for AI could bring together talented people from all over the world to study AI’s fundamental questions and develop safer versions. It wouldn’t need the massive budgets of AI companies, but it would need the brightest minds. Basic AI research should benefit everyone, and every country should help support it. We need to learn how to build safer AI before it surprises us all.

The Evolving Landscape of AI Ownership

AI as an ‘Alien Intelligence’

It’s easy to think of AI as just a really smart computer program, right? But that’s starting to feel a bit… off. Some folks, like Anthropic’s CEO Dario Amodei, are talking about the next stage of AI as a "country of geniuses in a data center." That’s a wild thought. These aren’t going to be geniuses like Einstein or Curie, though. They won’t think like us, and they won’t have the same limits. This ‘alien intelligence’ is developing at a speed we can barely grasp. Biology changes slowly, culture changes faster, but AI? It’s moving at warp speed. Trying to guess what these future AIs will do based on what we see today is like looking at an ancient primate and predicting space travel. It’s just not the same ballgame.

The Speed of AI Development

This rapid pace is a big part of why ownership is such a tricky question. We’re building something that can learn and improve at an incredible rate. Think about it: AI can take in more text than a person could read in many lifetimes, without needing to sleep or eat. It’s not bound by the same physical or biological constraints we are. This means its capabilities can expand in ways we might not even be able to imagine right now. It’s like we’re trying to put rules on something that’s constantly outrunning us. The systems we’re creating today might be completely different, and far more powerful, tomorrow.

Anticipating AI’s Unforeseen Capabilities

Because AI is developing so quickly and in ways that are hard to predict, figuring out who’s in charge becomes a real headache. We’re actively trying to make AI smarter than us, to have it understand things we can’t and do things we can’t even dream of. But with that comes a huge unknown. What happens when AI starts making decisions that have massive impacts? We’re already seeing AI play a role in who gets loans, who gets hired, who gets medical treatment, and even who is targeted in conflicts. The decisions are piling up, and the systems making them are evolving faster than our ability to fully understand or control them. It’s a situation that demands serious thought about control and responsibility, especially when the stakes are so high.

Ethical Frameworks for Artificial Intelligence

When we talk about AI, it’s easy to get caught up in the technical marvels – the algorithms, the processing power, the sheer speed. But we really need to stop and think about the bigger picture. How is this stuff actually affecting us, and what rules should we have in place? It’s not just about building smarter machines; it’s about building them responsibly.

AI’s Impact on Societal Decisions

AI is already making decisions that shape our lives, from loan applications and hiring processes to medical diagnoses and even how our justice system operates. Think about it: an algorithm decides whether you get a job, whether your insurance claim is approved, or even whether a certain piece of evidence is presented in court. These systems, often built by a handful of companies, are increasingly influencing outcomes that were once solely in human hands. We need to be sure these decisions are fair and don’t just repeat old biases. For instance, if an AI is trained on historical data that reflects past discrimination, it might continue to discriminate, even unintentionally. This is why evaluating AI for societal consequences is so important, looking at whether predictive models inadvertently perpetuate historical injustices and biases [465a].
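To make the bias mechanism concrete, here is a small, purely illustrative audit in Python. Everything in it is an assumption for the sake of the example: the loan records are invented, the "model" is a deliberately naive stand-in that memorizes each group's historical approval rate, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from U.S. employment-discrimination guidance. The audit compares approval rates across groups as a ratio, where 1.0 means every group is approved at the same rate.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return each group's approval rate from a list of (group, approved) pairs."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, ok in decisions:
        total[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions)
    return min(rates.values()) / max(rates.values())


# Invented historical loan decisions: group "B" was approved less often than "A".
history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

# A deliberately naive, hypothetical "model": it learns each group's past
# approval rate and approves everyone in a group whose rate is at least 60%.
learned_rates = selection_rates(history)


def naive_model(group):
    return learned_rates[group] >= 0.6


# Score a fresh, evenly split batch of hypothetical applicants.
applicants = ["A"] * 50 + ["B"] * 50
model_decisions = [(group, naive_model(group)) for group in applicants]

FOUR_FIFTHS = 0.8  # rule-of-thumb cutoff; ratios below it get flagged for review

for label, decisions in [("history", history), ("model", model_decisions)]:
    ratio = disparate_impact_ratio(decisions)
    status = "flag" if ratio < FOUR_FIFTHS else "ok"
    print(f"{label}: ratio={ratio:.3f} {status}")
```

Run as written, it reports a ratio of 0.625 for the historical data and 0.000 for the model's decisions, and flags both. The naive model turns a partial gap into a total one: a system that learns from a skewed record can amplify a bias, not just repeat it. A real audit would use actual decisions, look at several fairness measures rather than this single ratio, and ask why the rates differ in the first place.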

The Dangers of an Uncontrolled AI Arms Race

Just like with nuclear weapons during the Cold War, there’s a real risk of an AI arms race. Nations and corporations could pour resources into developing increasingly powerful AI, not for the common good, but for competitive or military advantage. This could lead to AI systems that are deployed without proper safety checks, simply because someone else is doing it. We saw how countries worked together, despite political tensions, to manage the threat of nuclear weapons through organizations like the International Atomic Energy Agency. We need a similar global effort for AI. Without it, we risk creating AI that we can’t control, with potentially devastating consequences.

Prioritizing Public Safety in AI Development

So, what do we do? We need to build safety into AI from the ground up. This means developing better ways to test and evaluate AI systems, not just for how well they perform tasks, but for potential risks. We need to move beyond just comparing AI to human abilities and figure out how to measure its unique capabilities and potential dangers. Imagine a global research body, like a ‘CERN for AI,’ focused on understanding AI’s fundamental properties and developing safer technologies. Such an organization could help steer AI development towards beneficial outcomes for everyone. It’s about making sure that as AI gets more advanced, it doesn’t outpace our ability to manage it safely. We need to learn how to build safer AI before it surprises us.

So, Who Really Owns AI?

It’s clear that figuring out who truly owns artificial intelligence isn’t a simple question with a neat answer. It’s not just about the companies building it or the people using it. As AI gets more powerful, it feels like we’re all becoming stakeholders, whether we like it or not. We’ve seen how AI can do amazing things, but also how it’s a bit of a mystery, even to its creators. We don’t fully grasp how it works or what it might do next. That’s why we can’t just leave the big decisions about AI’s future to a few folks in charge. We need to work together, globally, to make sure this powerful technology is used for good. It’s a big challenge, but it’s one we all share.

Frequently Asked Questions

Who is really in charge of AI?

It’s complicated! Big tech companies create AI, but they don’t fully understand how it works. Some people think AI might become so smart it could eventually make its own decisions, which raises questions about who would control it then.

Is AI like human intelligence?

Not really. AI can process information much faster than humans and doesn’t need breaks. It’s more like a completely different kind of ‘thinking’ that we’re still trying to figure out.

Why is AI considered dangerous?

Because we don’t fully understand it or know how to control it. AI is already making important decisions that affect our lives, and if it becomes too powerful without proper guidance, it could lead to serious problems.

Are there rules for AI development?

Currently, there aren’t many global rules. Countries are trying to figure this out, but it’s hard because AI technology is moving so fast. We need international agreements, similar to how countries worked together on nuclear weapons safety.

What can we do to make AI safer?

We need more research to understand AI better and develop ways to test it for safety. It would be helpful to have a global organization, like a ‘CERN for AI,’ where scientists can study AI openly and develop safer technologies for everyone.

Should everyone have a say in AI’s future?

Yes. Since AI will impact all of humanity, decisions about its development and use shouldn’t just be made by companies. We all have a stake in ensuring AI is used for good and benefits everyone.