If you rewind the clock fifteen or twenty years, residential solar energy was a tough sell. The systems were incredibly expensive, wildly inefficient, and—let’s be brutally honest—they were usually an eyesore. You would bolt these massive, glaringly blue grid-lined rectangles to your roof, cross your fingers for a perfectly cloudless day, and hope the system eventually paid for itself before the parts degraded.

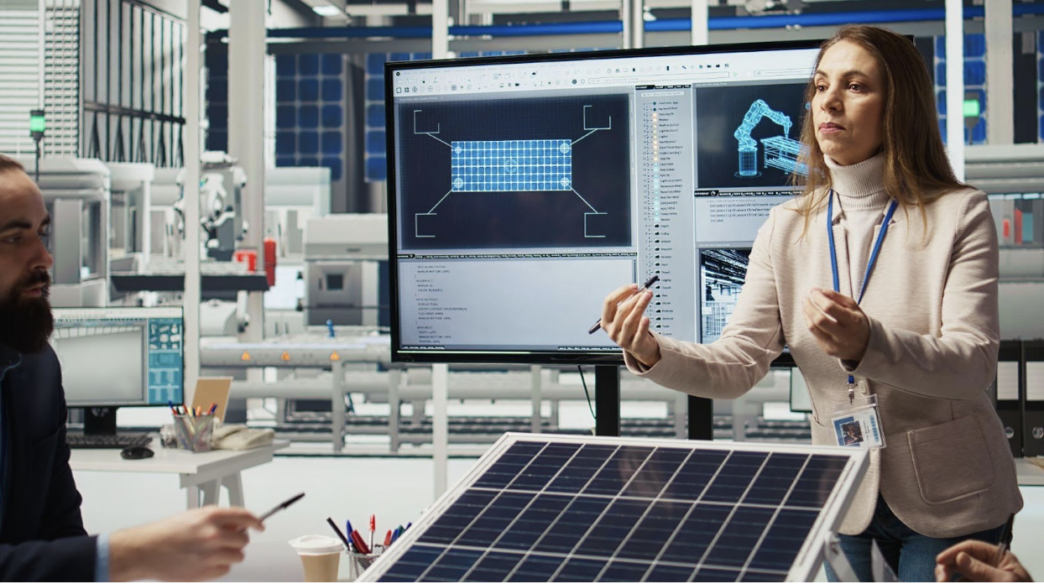

Today, that entire industry is unrecognizable. Driven by massive influxes of research funding and intense market competition, the engineering behind renewable energy has advanced at a blistering pace. If you are deciding to install solar panels on your home today, you are not buying the same technology your neighbors bought a decade ago. You are investing in a highly refined, hyper-efficient smart appliance.

If you are on the fence about making the jump to renewable energy, here is a look at exactly how technological innovations have completely transformed modern solar systems from a fringe green novelty into a financial powerhouse.

1. The Efficiency Boom: Squeezing More Juice from a Smaller Footprint

The biggest hurdle for early solar adopters was the conversion rate. Older residential panels typically converted only a mid-teens percentage of the sunlight hitting them into usable electricity, while many current panels exceed 20 percent. If you had a smaller roof, you simply couldn’t physically fit enough panels up there to power your home. That math has completely changed.

The industry largely abandoned the older polycrystalline technology (those blue, speckled panels) in favor of high-purity monocrystalline silicon. But the real game-changer was the introduction of PERC (passivated emitter and rear cell) technology. Engineers essentially added a passivation layer to the back of the solar cell that also acts as a reflector. Instead of sunlight passing straight through the silicon and being lost, the rear layer bounces the unabsorbed light back up into the cell for a second chance at conversion, and it reduces the electrical losses at the back surface.

More recently, the leap to TOPCon (tunnel oxide passivated contact) cells has pushed commercial efficiency rates even higher. What does this mean for a homeowner? It means a modern 400-watt panel is roughly the same physical size as an older 250-watt panel. You can now offset the same electric bill with significantly fewer panels and less roof space.
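To put rough numbers on that, here is a minimal sketch of the panel-count arithmetic. The 6 kW system size is an assumption picked for illustration, not a recommendation, and `panels_needed` is a hypothetical helper name.

```python
import math


def panels_needed(target_kw: float, panel_watts: int) -> int:
    """Number of panels required to reach a target system size (rounded up)."""
    return math.ceil(target_kw * 1000 / panel_watts)


# Assumed system size for illustration only.
TARGET_KW = 6.0

old_count = panels_needed(TARGET_KW, 250)  # older 250-watt panels
new_count = panels_needed(TARGET_KW, 400)  # modern 400-watt panels

print(f"250 W panels needed: {old_count}")  # 24
print(f"400 W panels needed: {new_count}")  # 15
```

Because the two panels are roughly the same size, going from 24 panels to 15 frees up more than a third of the roof area the older system would have needed.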

2. Solving the Christmas Light Problem with Microinverters

For a long time, residential solar arrays were wired together in series in what is called a string system. They behaved a lot like a cheap string of holiday lights, where one bad bulb affects the entire strand.

Because every panel in a series string carries the same current, the weakest panel sets the pace. If a large oak tree cast a shadow across just one of your panels for an hour in the afternoon, the electricity production of the whole string could drop sharply toward the level of that single shaded panel. Bypass diodes softened the effect, but it remained a significant design limitation.

The industry solved this by miniaturizing the hardware and creating microinverters. Instead of sending all the DC power to one central inverter box on the side of your house, modern systems attach a small, dedicated inverter to the back of every single panel, which converts that panel's output to AC on the spot. Now, every panel operates independently. If a chimney shades one corner of your array, the rest of the roof continues producing at full output without missing a beat.
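The difference is easy to see with a toy model. The sketch below is a deliberate simplification: it assumes a series string is held to its weakest panel and ignores bypass diodes and power optimizers, so real string systems will lose less than this. The function names and wattages are made up for illustration.

```python
def string_output(panel_watts: list[float]) -> float:
    """Simplified series string: every panel is limited to the weakest one.

    Assumption: no bypass diodes or optimizers, which overstates the loss.
    """
    if not panel_watts:
        return 0.0
    return min(panel_watts) * len(panel_watts)


def microinverter_output(panel_watts: list[float]) -> float:
    """Each panel is converted independently, so outputs simply add up."""
    return sum(panel_watts)


# Ten panels: nine in full sun at 300 W, one shaded down to 90 W.
panels = [300.0] * 9 + [90.0]

print(string_output(panels))         # 900.0
print(microinverter_output(panels))  # 2790.0
```

In this simplified case, one shaded panel costs the string system about two thirds of its output, while the microinverter system loses only what the shaded panel itself failed to produce.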

3. The End of the Ugly Roof Era

Let’s address the aesthetics. For decades, homeowners’ associations fought tooth and nail against solar installations because the silver metal frames and bright blue cells clashed heavily with neighborhood architecture. Manufacturing innovations have essentially eliminated this argument.

Modern production techniques now allow for sleek, all-black panels. The silver framing is gone, the internal wire grids are hidden using back-contact technology, and the mounting hardware has been redesigned to sit incredibly low to the roofline. From the street, a premium solar array now looks like a flush, seamless sheet of tinted glass. Furthermore, the rise of building-integrated photovoltaics (BIPV)—like solar shingles that mimic the exact look of traditional asphalt or slate roofs—proves that you no longer have to sacrifice curb appeal to generate your own power.

4. Bifacial Panels: Catching the Rebound

Why only collect sunlight on one side of the panel when the sun bounces off everything?

Bifacial solar panels are a clever design innovation: the cells absorb light through both the front and the back of the unit, and the rear is covered with glass or a clear backsheet instead of an opaque one. While these aren’t typically used flush against a residential asphalt roof, where almost no light reaches the underside, they are taking over the commercial sector and residential ground-mounts.

If you build a solar pergola in your backyard, the top of the bifacial panels captures the direct sunlight, while the underside captures the ambient light reflecting off your concrete patio or the winter snow. Depending on how reflective the surface below is and how high the panels are mounted, this design change can boost a system’s total energy yield by up to 20% without adding a single extra square inch of footprint.
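For a feel of where that extra yield comes from, here is a first-order estimate. It is a simplified sketch, not an industry-standard model: `bifaciality` (how well the rear side performs relative to the front) and `rear_light_ratio` (how much light reaches the rear relative to the front) are illustrative parameters, and the values used below are assumptions rather than measurements.

```python
def bifacial_yield(front_kwh: float, bifaciality: float, rear_light_ratio: float) -> float:
    """First-order estimate of annual yield for a bifacial panel.

    front_kwh        -- energy the front side alone would produce
    bifaciality      -- rear-side efficiency relative to the front (0 to 1)
    rear_light_ratio -- light reaching the rear relative to the front (0 to 1)
    """
    return front_kwh * (1 + bifaciality * rear_light_ratio)


# Assumed example values: 1000 kWh front-side yield, 70% bifaciality,
# and a fairly bright surface sending 15% as much light to the rear.
front_only = 1000.0
total = bifacial_yield(front_only, bifaciality=0.7, rear_light_ratio=0.15)
gain = total / front_only - 1

print(f"Estimated gain: {gain:.1%}")  # roughly 10.5%
```

A darker surface such as asphalt pushes the rear light ratio down, and fresh snow pushes it up, which is why the real-world gain varies so much from one installation to the next.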

5. AI and the Smart Battery Revolution

A solar panel is ultimately just a generator; it only works when the sun is up. The final piece of the evolutionary puzzle was figuring out how to make that power useful at 9:00 PM.

The introduction of lithium iron phosphate (LiFePO4) home battery systems completely changed the dynamic. But the real innovation isn’t the battery chemistry—it is the artificial intelligence running the system. Modern home energy setups use predictive algorithms. Your system’s software will actively monitor local weather forecasts and your utility company’s time-of-use pricing.

If the software sees a heavy storm in tomorrow's forecast, it will automatically hold your solar power in the battery today to help keep your house running through a potential grid blackout. If rates spike at 5:00 PM when everyone in your city turns on their air conditioning, your smart system will automatically stop drawing from the grid and run your house on stored solar power instead, so you avoid paying peak prices.
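The decision logic described above can be sketched as a few simple rules. To be clear, this is a hand-written rule set for illustration, not the predictive software any particular battery vendor ships; the `Snapshot` fields, the `decide` function, and the threshold values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """What the controller knows at one moment (all fields are illustrative)."""
    storm_expected: bool     # from a weather forecast feed
    price_per_kwh: float     # current utility rate in dollars
    battery_soc: float       # state of charge, 0.0 (empty) to 1.0 (full)
    solar_surplus_kw: float  # solar output beyond what the house is using


def decide(s: Snapshot, peak_price: float = 0.30, reserve_soc: float = 0.20) -> str:
    """Return 'charge', 'discharge', or 'hold' using simple priority rules."""
    # 1. Storm coming: fill the battery and keep it full for a possible outage.
    if s.storm_expected:
        return "charge" if s.battery_soc < 1.0 else "hold"
    # 2. Peak pricing: run the house from the battery, keeping a small reserve.
    if s.price_per_kwh >= peak_price and s.battery_soc > reserve_soc:
        return "discharge"
    # 3. Otherwise, store any spare solar power while there is room.
    if s.solar_surplus_kw > 0 and s.battery_soc < 1.0:
        return "charge"
    return "hold"


evening_peak = Snapshot(storm_expected=False, price_per_kwh=0.45,
                        battery_soc=0.80, solar_surplus_kw=0.0)
print(decide(evening_peak))  # discharge
```

Real systems layer forecasting on top of rules like these, estimating tomorrow's solar production and household demand before deciding how much energy to keep in reserve.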

The Future of Solar Energy

We are long past the era of solar energy being an experimental project for early adopters. Thanks to relentless engineering and smart technology integration, slapping a few panels on your roof is no longer a gamble. It is a highly predictable, incredibly durable, and visually appealing way to take permanent control over your home’s operating costs.