Maxwell Zeff’s TechCrunch Reporting Focus

Maxwell Zeff’s work at TechCrunch centers on the fast-moving technology industry, with a knack for spotting trends before they become mainstream. He covers a lot of ground, but a few areas stand out in his reporting.

Coverage of AI Advancements

Zeff has been tracking the progress of artificial intelligence closely. It’s not just about the big announcements; he digs into what these advancements actually mean for businesses and everyday users. He often looks at how new AI tools are being developed and what problems they aim to solve. His reporting highlights the practical applications of AI, moving beyond the hype to show real-world impact.

Analysis of Startup Ecosystems

Beyond specific technologies, Zeff spends a good amount of time looking at the companies building them. He examines the startup scene, paying attention to funding rounds, new business models, and the people behind these ventures. Understanding the health and direction of the startup world is key to seeing where tech is headed, and Zeff provides a clear view of this landscape.

Reporting on Emerging Technologies

AI is a big part of it, but Zeff also keeps an eye on other new tech that’s just starting to gain traction. This could be anything from new ways to use data to advancements in hardware. He tries to explain these new technologies in a way that makes sense, even if they seem complicated at first. His goal is to inform readers about the next wave of innovation.

Key Themes in Maxwell Zeff’s Articles

Maxwell Zeff’s reporting often circles back to a few core ideas that define the current AI conversation. He digs into how AI is changing, not only in terms of new models but in how people and businesses actually use these tools.

The Evolution of AI Agents

Zeff has spent a lot of time looking at AI agents, those programs designed to perform tasks autonomously. It’s not just about the cool demos anymore; he’s interested in the practical side. How do these agents move from a lab setting to something you can actually rely on day-to-day? He explores the challenges in making them robust and reliable enough for everyday use, which is a big hurdle for widespread adoption. It’s a complex area, and Zeff breaks down the progress and the remaining roadblocks.

Foundational Models and Their Impact

Another big theme is the impact of foundational models – the massive AI systems that power many newer applications. Zeff looks at how companies are building on top of these giants, like Google’s Gemini or models from Cohere. He highlights discussions from events like TC Sessions: AI, where experts from places like Google DeepMind and Cohere share insights. These foundational models are changing how software is built and what’s possible, and Zeff makes sure to cover the companies and people shaping this space.

Enterprise AI Solutions

Beyond the consumer-facing AI, Zeff also pays close attention to how businesses are adopting AI. This includes looking at how companies are securing their AI systems and what practical applications are emerging in the corporate world. He covers how AI is being integrated into existing business processes, aiming to improve efficiency and create new opportunities. It’s about the real-world business impact, not just the theoretical potential.

Insights from TechCrunch Sessions

TechCrunch events are always a good place to get a feel for what’s happening in the tech world, and their AI-focused sessions are no exception. Maxwell Zeff often highlights key takeaways from these gatherings, giving readers a condensed version of the important discussions. It’s like getting the CliffsNotes for the future of technology.

At TC Sessions: AI, for instance, one standout panel featured leaders from Google DeepMind, Cohere, and Twelve Labs, who discussed how founders can build on top of existing AI models. It’s a tricky balance: innovating without getting left behind by the big players.

Here’s a quick look at some of the topics covered:

- The Evolution of AI Agents: Discussions often touch on how these agents are moving from simple demos to actual, usable products. It’s not just about showing off cool tech anymore; it’s about making it practical.

- Foundational Models: Companies like Cohere and Google DeepMind are really pushing the boundaries here. Their work is what a lot of other startups are building upon, so understanding their direction is key.

- AI in the Workforce: There’s a lot of talk about how AI will change jobs and how companies can integrate these tools effectively. It’s a big topic with lots of different opinions.

Then there’s Disrupt, which is a much bigger event. It brings together a large crowd of tech leaders, investors, and founders. Zeff’s reporting from Disrupt often covers the general mood of the industry, major product announcements, and interviews with big names, which makes it a good snapshot of where the tech landscape is heading. Disrupt 2025 takes place October 27-29 in San Francisco, and it’s a good chance to connect with people in the industry. It’s also where you might see the next big thing, kind of like how the new iPager was announced recently.

OpenAI’s Developer Tools and APIs

OpenAI recently rolled out new tools aimed at helping developers and businesses create their own AI agents: automated systems that use OpenAI’s models to carry out tasks on a user’s behalf. It’s a big move, especially since “AI agent” has become something of a buzzword, with some companies showing off demos that don’t quite deliver. OpenAI wants to make sure its agents are actually useful.

The Responses API Explained

The new Responses API replaces OpenAI’s older Assistants API, which the company plans to stop supporting sometime in 2026. It lets developers build custom AI agents that can search the web or look through a company’s files, and OpenAI says it won’t train its models on those files. The API is designed for applications that act more independently than what we’ve seen so far, a step toward more capable agents.
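As a rough illustration of what "search the web or look through your company’s files" could look like in practice, here is a minimal Python sketch that only assembles the request and does not call OpenAI. The helper `build_agent_request`, the vector store ID, and the question are hypothetical. The tool type strings (`web_search_preview`, `file_search`) and the `responses.create` call in the comments reflect the launch-era documentation as best understood and should be checked against the current API reference.

```python
from typing import Any, Optional


def build_agent_request(question: str, vector_store_id: Optional[str] = None) -> dict[str, Any]:
    """Assemble keyword arguments for a Responses API call (illustrative only)."""
    # Assumption: tool type names as documented around the API's launch.
    tools: list[dict[str, Any]] = [{"type": "web_search_preview"}]
    if vector_store_id is not None:
        # File search points at a store of previously uploaded company files.
        tools.append({"type": "file_search", "vector_store_ids": [vector_store_id]})
    return {"model": "gpt-4o", "input": question, "tools": tools}


# Hypothetical question and store ID, for demonstration only.
request = build_agent_request("What changed in our Q3 pricing?", vector_store_id="vs_example_123")
print(request["tools"])

# With the official `openai` package installed and an API key configured,
# the request could be sent roughly like this (not executed here):
#   from openai import OpenAI
#   response = OpenAI().responses.create(**request)
#   print(response.output_text)
```

Keeping the request construction separate from the network call makes it easy to inspect which tools an agent is allowed to use before anything is sent.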

Computer-Using Agent (CUA) Model

One notable part of this release is the Computer-Using Agent, or CUA, model. This is what powers tools like OpenAI’s Operator, which can navigate websites for you. The CUA model generates mouse and keyboard actions, so it can automate computer tasks like data entry or app workflows. It’s still in a preview stage, and OpenAI admits it’s not perfect yet: the company says it can make mistakes, especially when automating tasks on operating systems, and that it is working on improvements. For now, the version in Operator works only on the web, but businesses can run the CUA model locally on their own systems for more control.
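The idea of a model that "generates mouse and keyboard actions" can be pictured as a loop in which the model proposes actions and a harness carries them out. The sketch below is a toy version under stated assumptions: the `Click` and `TypeText` types, the `FakeComputer` class, and `run_actions` are all hypothetical names invented for illustration, and none of this is OpenAI’s actual CUA interface.

```python
from dataclasses import dataclass, field
from typing import Union


@dataclass
class Click:
    x: int
    y: int


@dataclass
class TypeText:
    text: str


Action = Union[Click, TypeText]


@dataclass
class FakeComputer:
    """Stand-in for a real browser or desktop; it only records what happened."""
    log: list[str] = field(default_factory=list)

    def execute(self, action: Action) -> None:
        if isinstance(action, Click):
            self.log.append(f"click at ({action.x}, {action.y})")
        elif isinstance(action, TypeText):
            self.log.append(f"type {action.text!r}")
        else:
            raise ValueError(f"unsupported action: {action!r}")


def run_actions(computer: FakeComputer, actions: list[Action], max_steps: int = 10) -> int:
    """Execute model-proposed actions in order, stopping at a step budget."""
    executed = 0
    for action in actions[:max_steps]:
        computer.execute(action)
        executed += 1
    return executed


# Hypothetical actions a model might propose for a data-entry task.
planned = [Click(120, 340), TypeText("ACME Corp"), Click(400, 500)]
pc = FakeComputer()
run_actions(pc, planned)
print(pc.log)
```

The step budget is one simple way to limit the damage when a preview-stage model makes the kind of mistakes OpenAI warns about.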

AI Agent Development and Challenges

Building these AI agents isn’t without its hurdles. Even with advanced search capabilities, AI can still get facts wrong. For instance, OpenAI’s own GPT-4o search still gets about 10% of factual questions wrong. AI search tools can also struggle with simple queries, like asking for a sports score, and the sources they cite aren’t always reliable.

OpenAI is also releasing an open-source toolkit called the Agents SDK. This gives developers free tools to connect models to their systems, add safety features, and monitor how their agents are working, which should help them build and manage their own AI agents more effectively. It will be interesting to see how this technology evolves, especially with events like Disrupt 2025 coming up, where these topics will surely be discussed.
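The three jobs attributed to the Agents SDK above (connecting a model, adding safety features, and monitoring activity) can be illustrated in plain Python. This sketch does not use the Agents SDK and does not mirror its API; `guarded_agent`, `echo_model`, and `BLOCKED_TERMS` are hypothetical names, and the keyword check is a deliberately simple stand-in for a real safety feature.

```python
from typing import Callable

BLOCKED_TERMS = ("password", "ssn")  # hypothetical policy list


def guarded_agent(model: Callable[[str], str], trace: list[dict]) -> Callable[[str], str]:
    """Wrap a model function with an input check and one trace record per call."""
    def run(prompt: str) -> str:
        # Safety feature: refuse prompts that mention a blocked term.
        blocked = any(term in prompt.lower() for term in BLOCKED_TERMS)
        reply = "Request refused by guardrail." if blocked else model(prompt)
        # Monitoring: keep a record of every call, allowed or not.
        trace.append({"prompt": prompt, "blocked": blocked, "reply": reply})
        return reply
    return run


def echo_model(prompt: str) -> str:
    """Placeholder for a real model call."""
    return f"echo: {prompt}"


trace: list[dict] = []
agent = guarded_agent(echo_model, trace)
print(agent("Summarize today's AI news"))
print(agent("What is the admin password?"))
print(len(trace))
```

Wrapping the model in a single function keeps the check and the trace in one place, so every call passes through both.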

The Future of AI Agents

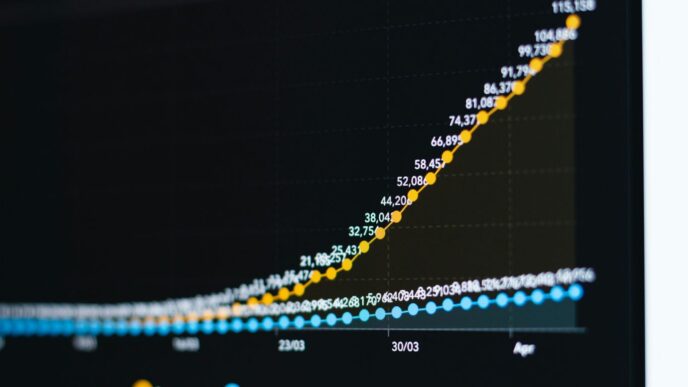

It feels like every week there’s a new AI model making waves, and the talk around AI agents is getting louder. Olivier Godement from OpenAI mentioned hoping to see the gap between AI agent demos and actual products shrink this year. He even went as far as to say agents might be the most significant AI application we’ll see. This lines up with what OpenAI’s CEO, Sam Altman, suggested earlier this year: 2025 is the year AI agents really start showing up in the workforce. OpenAI’s recent releases, like the Responses API and the Computer-Using Agent (CUA) model, seem to back this up, aiming to move beyond just impressive demos to tools people can actually use.

But let’s be real, building these agents is tough. It’s one thing to show off a cool demo, but making it work reliably and getting people to use it regularly is a whole different ballgame. OpenAI’s own Operator and deep research tools in ChatGPT gave us a peek at what’s possible, but they still had a ways to go in terms of true autonomy. The new Responses API is designed to give developers the building blocks to create their own agent-like applications, hopefully with more independence than we’ve seen so far.

There are still hurdles, though. Even with AI-powered search, which is generally more accurate than older AI models because it can just look up the answer, problems like AI hallucinations aren’t completely solved. For instance, GPT-4o still gets some factual questions wrong. Plus, these tools can struggle with simple, direct queries, and there have been reports about the reliability of citations. OpenAI itself admits that the CUA model is still in its early stages and can make mistakes. They’re working on improving it, but it’s clear we’re still in the early days of making these agents truly dependable for everyday tasks.

Here’s a quick look at what OpenAI is offering for agent development:

- Responses API: This is the main tool for businesses to build custom AI agents. It allows agents to search the web, look through company files (OpenAI says they won’t train on these files), and navigate websites.

- Computer-Using Agent (CUA) Model: This model powers actions like mouse and keyboard movements, letting developers automate computer tasks. It’s available in a research preview and can be run locally by enterprises for more control.

- Agents SDK: An open-source toolkit to help developers integrate models, add safety features, and monitor agent activity.

The big question remains: can these tools bridge the gap from impressive demos to widely adopted products? Only time will tell, but the push towards more practical, scalable AI agents is definitely on.

Maxwell Zeff and the Tech Landscape

Maxwell Zeff’s work at TechCrunch puts him in the middle of what is happening in the tech world. It’s not just about reporting on new gadgets or company announcements; it’s about understanding the bigger picture. He’s often at major industry events, talking to the people who are building the products he covers.

Covering Major Tech Events

Think about events like TechCrunch Disrupt. Zeff is there, not just as an observer, but as someone trying to figure out what the buzz is all about. He’s looking for the trends that will shape the next few years. It’s a lot like trying to predict the weather, but with more venture capital and fewer clouds. He’s seen how these events can be launchpads for new ideas and companies, and his reporting reflects that.

Interviews with Industry Leaders

Beyond the big conferences, Zeff also gets one-on-one time with some pretty important people in tech. These aren’t just casual chats; they’re opportunities to get direct insights into what drives these companies and their leaders. He asks the questions that many of us are thinking but don’t get the chance to ask. It’s through these conversations that we get a clearer view of the strategies and challenges facing major players.

The Intersection of Media and Technology

What’s also interesting is how Zeff’s role highlights the connection between media and the tech industry itself. TechCrunch isn’t just reporting on tech; it’s part of the tech ecosystem. Zeff’s articles often touch on how information spreads, how narratives are built around new technologies, and how media outlets themselves adapt to the fast-changing digital landscape. It’s a two-way street, really. The tech world influences media, and media coverage, in turn, shapes public perception and investment in tech.

Looking Ahead

So, what’s the takeaway? Maxwell Zeff’s work at TechCrunch shows how fast things are changing in the tech world, especially with AI. Companies like OpenAI are trying to make AI agents more useful, moving from impressive demos to tools people can actually use. It’s a tricky path, and accuracy and reliability remain open problems, but the progress is clear. Zeff’s reporting helps readers follow these shifts, from new product releases to the bigger picture of where AI is headed. It’s a reminder that keeping up with tech means paying attention to the details and to the people telling those stories.