The world of artificial intelligence is moving fast, and keeping up can feel like a full-time job. From new security threats to how AI is changing our jobs and even our personal lives, there’s a lot to unpack. TechCrunch AI News is a great place to get the latest on all of it, covering everything from the chips powering these systems to the big policy questions everyone is talking about. Let’s look at some of the recent highlights.

Key Takeaways

- New ways to hide data-stealing prompts in images are showing up, making AI systems leak sensitive info.

- Companies are creating new tools to block bad actors from using old, unused web domains for attacks.

- AI is changing how we work, with companies using it to automate tasks and train their employees for new roles.

- Governments are trying to figure out rules for AI, but different state laws are making things tricky for businesses.

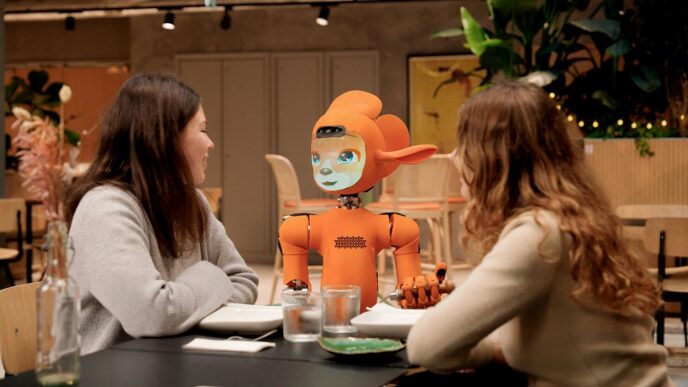

- AI is also becoming a bigger part of our personal lives, with more AI companions and tools that help with everyday tasks.

AI Security Threats And Defenses Spotlighted By TechCrunch AI News

Security stories around AI keep getting stranger and sharper. Security teams can’t assume any AI input is harmless anymore—every pixel, token, and header might hide an instruction.

Hidden Image Prompts Expose Data Leakage Risks

A newer attack hides prompts inside high‑resolution images. The hidden text is invisible at full size and only becomes legible after the platform downsizes the image. The model “reads” those faint artifacts like text and, without anyone typing a command, follows instructions that can coax it to reveal internal data or ignore safety rules. It’s sneaky because it rides along with normal uploads and slips past basic filters.

What this means in practice:

- Trigger path: High‑res image → automatic resize → latent prompt emerges → model executes.

- Common impact: Instruction injection, role override (e.g., ignore safety), or data exfiltration through crafted follow‑ups.

- Surface area: Chat apps with image inputs, OCR pipelines, search indexing, and agent workflows that blend vision + text.

Mitigation that actually helps:

- Enforce strict image preprocessing: canonical resize + denoise; block odd aspect ratios and massive source files.

- Gated OCR: Treat vision‑extracted text as untrusted; sandbox it and require explicit user approval before it steers the agent.

- Response hardening: No secrets in prompts or tools; mask system prompts in outputs; rate‑limit sensitive tool calls.

- Telemetry: Log resize operations, OCR output length, and tool triggers that follow image uploads; alert on odd spikes.
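As a rough illustration of the preprocessing rule above, here is a minimal sketch of an upload gate that rejects massive or oddly shaped images and computes one canonical resize target for everything else. The thresholds and the names `gate_image` and `ImageDecision` are assumptions made for this example, not values from any particular platform, and the actual resize and denoise step is left to whatever image library you already use.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical policy limits; tune them for your own platform.
MAX_SOURCE_BYTES = 10 * 1024 * 1024   # reject massive source files
MAX_PIXELS = 4096 * 4096              # reject very high-resolution uploads
MAX_ASPECT_RATIO = 4.0                # reject very wide or very tall images
CANONICAL_LONG_EDGE = 1024            # single resize target for every upload


@dataclass
class ImageDecision:
    accepted: bool
    reason: str
    target_size: Optional[Tuple[int, int]] = None


def gate_image(width: int, height: int, size_bytes: int) -> ImageDecision:
    """Decide whether an upload may proceed to the canonical resize step."""
    if width <= 0 or height <= 0:
        return ImageDecision(False, "invalid dimensions")
    if size_bytes > MAX_SOURCE_BYTES:
        return ImageDecision(False, "source file too large")
    if width * height > MAX_PIXELS:
        return ImageDecision(False, "resolution too high")
    ratio = max(width, height) / min(width, height)
    if ratio > MAX_ASPECT_RATIO:
        return ImageDecision(False, "odd aspect ratio")
    # One fixed target: scale the long edge to CANONICAL_LONG_EDGE.
    scale = CANONICAL_LONG_EDGE / max(width, height)
    target = (max(1, round(width * scale)), max(1, round(height * scale)))
    return ImageDecision(True, "ok", target)


print(gate_image(2048, 1024, 500_000))
print(gate_image(8000, 8000, 500_000))
```

Running every upload through one fixed target size means the model only ever sees the resized image, and your logging can inspect that same image.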

What to monitor next:

- Prompt‑hiding variants in audio spectrograms or PDFs.

- Attacks that blend CSS/formatting tricks to smuggle commands through RAG.

Domain Protection Tools Shut Down Dormant Exploits

Dormant and expired domains are a gold mine for phishers. Attackers wait for a lapse, scoop up the name, then swap in look‑alike content and fresh DNS to hit inboxes and ad networks. New AI systems watch for telltale shifts—bursts of DNS changes, sketchy MX/SPF updates, sudden hosting moves—and quarantine the site before it’s weaponized.

If you run public zones or big subdomain trees, add these checks to your weekly rhythm:

- Inventory and kill “dangling” DNS (old CNAMEs, forgotten buckets, orphaned load balancers).

- Monitor registrar status, TTL churn, and NS changes; alert on surprise MX/SPF/DMARC edits.

- Auto‑sinkhole known‑bad takeovers and push blocklists to mail gateways and reverse proxies.

- Require human sign‑off for domain revivals; time‑box reactivation windows to reduce hijack chances.
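One way to picture the "alert on surprise MX/SPF/DMARC edits" check is a diff between two DNS snapshots. The sketch below assumes you already export records into a dictionary keyed by `(name, record_type)`; that snapshot shape and the function name `diff_dns` are hypothetical. SPF and DMARC policies are published as TXT records, so TXT is treated as sensitive here.

```python
# Record types whose changes deserve an alert.
SENSITIVE_TYPES = {"NS", "MX", "TXT"}  # SPF and DMARC live in TXT records


def diff_dns(previous: dict, current: dict) -> list:
    """Compare two snapshots shaped like {(name, rtype): set_of_values}.

    Returns a sorted list of (name, rtype, change) for sensitive records only.
    """
    alerts = []
    for key in sorted(set(previous) | set(current)):
        name, rtype = key
        if rtype not in SENSITIVE_TYPES:
            continue
        before = previous.get(key, set())
        after = current.get(key, set())
        if before == after:
            continue
        if not before:
            change = "added"
        elif not after:
            change = "removed"
        else:
            change = "modified"
        alerts.append((name, rtype, change))
    return alerts


# Illustrative snapshots using reserved example names and addresses.
last_week = {
    ("example.com", "MX"): {"10 mail.example.com."},
    ("example.com", "A"): {"192.0.2.1"},
    ("example.com", "TXT"): {"v=spf1 -all"},
}
today = {
    ("example.com", "MX"): {"10 mx.unknown.example."},
    ("example.com", "A"): {"192.0.2.9"},
    ("_dmarc.example.com", "TXT"): {"v=DMARC1; p=none"},
}
for alert in diff_dns(last_week, today):
    print(alert)
```

The A record change is ignored on purpose in this sketch; in practice you would likely route it to a lower-priority queue rather than drop it.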

Policy also shapes risk. A lighter‑touch rulebook that favors speed can widen the blast radius if controls aren’t in place, which is why ops teams watch the U.S. AI plan and similar moves so closely.

Measured outcomes from teams that tighten domain hygiene:

- Fewer successful brand‑spoofing campaigns after expired‑domain buybacks are blocked.

- Lower time‑to‑contain when anomalous DNS edits trigger automatic isolation.

- Less SOC fatigue thanks to cleaner, enriched alerts instead of raw WHOIS noise.

Adaptive Botnets Challenge Legacy DDoS Safeguards

DDoS crews are using smarter bots that change tactics on the fly. They randomize headers, rotate TLS fingerprints, mix layer‑3/4 floods with layer‑7 bursts, and pace requests to mimic real users. Some even probe defenses first, then choose the cheapest path to overwhelm APIs, login pages, and search endpoints.

Why old defenses buckle:

- Static signatures and fixed rate limits are easy to sidestep with jitter and micro‑bursts.

- Volumetric filters miss low‑and‑slow L7 attacks that drain app threads and databases.

- Single‑cloud scrubbing creates a chokepoint attackers can route around.

What helps now:

- Behavior models at the edge: score clients by consistency (JA3/JA4, cookie reuse, request shapes) and challenge outliers.

- Anycast + multi‑provider scrubbing: spread load, cut latency, and make routing games harder.

- Adaptive rate policies: per‑token, per‑session, and per‑entity limits with decay; surge‑protect high‑cost endpoints.

- Proof‑of‑work or stickiness only under stress: selective puzzles and tar‑pits when anomaly scores spike.

- App hardening: cheap cacheable responses, idempotent endpoints, and timeouts tuned for abuse patterns.
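The "limits with decay" idea can be sketched as a per-key score that shrinks exponentially between requests, so short micro-bursts are absorbed while sustained pressure is rejected. This is a minimal, single-process sketch; the class name `DecayingLimiter`, the half-life, and the per-request `cost` are assumptions for illustration, and a production limiter would keep its state in a shared store.

```python
import math


class DecayingLimiter:
    """Per-key request score that decays exponentially over time."""

    def __init__(self, limit: float, half_life_s: float):
        self.limit = limit
        self.decay = math.log(2) / half_life_s
        self.state = {}  # key -> (score, last_seen_timestamp)

    def allow(self, key: str, now: float, cost: float = 1.0) -> bool:
        score, last = self.state.get(key, (0.0, now))
        # Decay the old score by the time elapsed since the last request.
        score *= math.exp(-self.decay * (now - last))
        if score + cost > self.limit:
            self.state[key] = (score, now)  # keep the decayed score, reject
            return False
        self.state[key] = (score + cost, now)
        return True


limiter = DecayingLimiter(limit=3, half_life_s=10)
burst = [limiter.allow("session-1", now=0.0) for _ in range(4)]
print(burst)                                   # fourth request in the burst is rejected
print(limiter.allow("session-1", now=10.0))    # allowed again after one half-life
```

Passing a higher `cost` for expensive endpoints (search, login) is one simple way to surge-protect them without a separate limiter.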

Runbooks worth rehearsing:

- Pre‑stage WAF rules for known attack templates; flip on with one change.

- Warm backup capacity and CDN configs; test failover every quarter.

- Capture rich samples (pcaps, logs) during events to retrain detection models afterward.

Expanding AI Infrastructure And Chips Coverage In TechCrunch AI News

AI growth is starting to look less like flashy demos and more like heavy construction. The race to scale is moving from model hype to plain buildout: power, cooling, interconnects, and chips that meet latency budgets. It’s a grind, but this is where real capacity gets made.

New Data Hubs Power Training And Inference At Scale

Regional data hubs are popping up where electricity is cheaper and fiber is dense. Operators are sinking money into high‑density racks, liquid cooling, and faster fabrics so they can pack more GPUs in the same square footage. Grid hookups and water permits are the new bottlenecks, not just chip supply. Everyone wants lower PUE (power usage effectiveness: total facility power divided by the power that reaches IT equipment), but uptime and lead times still rule the day.

| Facility archetype | Typical rack density (kW/rack) | Cooling approach | PUE target | Typical GPU cluster size |
|---|---|---|---|---|
| Retrofit urban colo | 10–20 | Rear‑door air + limited liquid loops | 1.40–1.60 | 1,000–4,000 |
| Greenfield campus | 30–80 | Direct‑to‑chip liquid, warm‑water | 1.15–1.30 | 8,000–40,000 |
| Modular/edge pod | 5–15 | Air/liquid hybrid | 1.30–1.50 | 200–1,000 |

What’s changing fast:

- 800G data center fabrics and tighter topologies to keep collective ops from stalling.

- Liquid cooling moving from “maybe later” to default on new builds.

- Reserved GPU capacity contracts, with penalties if providers miss delivery windows.

- Shared inference caches near users to cut egress and speed up common prompts.

Low Latency Silicon Gains Ground In European Markets

Across London, Frankfurt, Paris, and the Nordics, buyers care about fast response and local data rules. It’s not only about peak FLOPS anymore. Teams are testing inference‑first accelerators, larger HBM pools, and network cards that trim tail latency. For some use cases—voice, trading, support chat—the p99 number decides the deal.

Buying checklist we keep hearing about:

- Cost per million tokens served, not just cost per GPU hour.

- p99 response targets with real workloads (RAG, streaming, or batch).

- Memory per accelerator to keep 70B‑scale models resident without sharding.

- 4‑bit/8‑bit paths for cheaper serving and steadier tail behavior.

- Power draw per node and per rack so sites don’t trip breakers.
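Two items on this checklist come down to simple arithmetic, sketched below. The memory figure counts model weights only (no KV cache, activations, or runtime overhead), and the GPU price and throughput in the cost example are made-up inputs for illustration, not vendor numbers.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight-only footprint in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def cost_per_million_tokens(gpu_hour_usd: float, tokens_per_second: float) -> float:
    """Serving cost per million tokens for one accelerator at steady throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hour_usd / tokens_per_hour * 1_000_000


# A 70B-parameter model at three precisions (weights only).
for bits in (16, 8, 4):
    print(bits, "bit:", weight_memory_gb(70, bits), "GB")
# 16 bit: 140.0 GB, 8 bit: 70.0 GB, 4 bit: 35.0 GB

# Hypothetical inputs: $2.00 per GPU hour at 1,000 tokens per second.
print(round(cost_per_million_tokens(2.00, 1000), 2))  # 0.56
```

The same GPU-hour price can produce very different per-token costs once batch size and quantization change throughput, which is why buyers ask for the per-token figure.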

Note: Targets below are indicative ranges vendors talk about; actual results vary by model, quantization, and network hops.

| Deployment tier | Typical batch size | Target p99 response (interactive chat, ms) | Regional round‑trip budget (ms) |
|---|---|---|---|
| Edge PoP | 1–2 | 80–150 | 10–20 |
| Metro DC | 4–8 | 120–250 | 15–30 |
| Regional DC | 8–16 | 180–350 | 25–50 |
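To check measured latencies against a p99 target like the ones in the table, a nearest-rank percentile is enough for a first pass. The sketch below uses synthetic samples chosen to show how an average can hide the tail; the helper names are assumptions for this example.

```python
import math


def percentile(samples, pct: int):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def within_budget(samples_ms, p99_target_ms: float) -> bool:
    return percentile(samples_ms, 99) <= p99_target_ms


# Synthetic data: 98 fast responses plus two slow ones.
latencies_ms = [100] * 98 + [400, 900]
print(sum(latencies_ms) / len(latencies_ms))   # mean: 111.0
print(percentile(latencies_ms, 99))            # p99: 400
print(within_budget(latencies_ms, 350))        # False
```

Here the mean looks comfortable at 111 ms, yet the p99 of 400 ms misses a 350 ms target, which is the gap the buying checklist is trying to expose.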

Hardware Compliance Shapes Access In Restricted Regions

Access to the fastest parts isn’t even across the map. Export rules, data‑residency demands, and cloud license terms shape who can buy which chips—and at what settings. Some SKUs ship with capped interconnect speeds, and clouds layer on geo checks and audit logs to stay inside the lines. Builders adapt or wait.

Common guardrails in hardware and clouds:

- Link‑rate limits on multi‑GPU connections and cluster scale caps.

- Firmware and driver checks that gate features by region.

- Remote attestation, secure boot, and signed containers before jobs start.

- Per‑tenant compute ceilings and tighter queue controls.

- Stricter buyer verification and usage reporting.

Builder playbook when access is tight:

- Use smaller or MoE models, plus 4‑bit paths, to fit memory and keep speed.

- Mix accelerators (GPUs, NPUs, FPGAs) and target the right chip per task.

- Place training where supply exists, keep inference close to users, and document everything for audits.

Policy And Geopolitics Driving The AI Landscape

AI policy is now a proxy for national power. The big swings aren’t just about models and chips anymore—they’re about alliances, sanctions, standards, and who controls the rules of trade. The result is uneven access to compute, a scramble for trusted data, and lots of legal gray zones that slow launches.

Cross-Border Tensions Reshape Innovation Strategies

Export controls and security reviews are reshaping product roadmaps. Teams that once built for one global stack now split code, infrastructure, and compliance by region. Some companies are moving training runs onshore, while others redesign models to run on lower-grade hardware when top-tier chips are hard to get.

| Region | Policy driver | Typical response by builders | Core risk |
|---|---|---|---|
| U.S. and allies | Export screening; cloud access controls | Multi‑cloud with regional isolation; onshore training; separate model releases | Supply shocks; audit delays |
| China | Chip limits; security reviews | Domestic accelerators; model distillation; on‑prem appliances | Performance gaps; vendor lock‑in |
| Sanctions‑exposed markets | Banking and dual‑use scrutiny | Open-source stacks; university partnerships; manual MLOps | Secondary sanctions; talent flight |

It’s not tidy. Timelines slip as legal teams debate where training data came from, who processed it, and whether a feature could be flagged as dual‑use.

Patchwork State Rules Complicate Compliance For Builders

Inside the U.S., state laws don’t line up, and it shows. What’s allowed in one state can trigger disclosure requirements—or fines—in another.

- Political deepfake labels with strict pre‑election windows

- Children’s design rules that touch recommender systems and tracking

- Biometric consent for face and voice features across apps

- Energy and water reporting for large data centers

- Automated decision notices and opt‑out pathways for consumers

City rules around driverless cars and surveillance gear spill into ML policies too, because local officials often reuse the same language.

Quick setup that teams are using right now:

1. Map model features to state‑by‑state obligations.

2. Gate risky features with geofenced toggles.

3. Log prompts, training sources, and model versions for audits.

4. Build consent and appeal flows as reusable components.
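A geofenced toggle can be as simple as a lookup table consulted before a feature is enabled. The sketch below uses placeholder state codes (`AA`, `BB`) and made-up feature names on purpose: the real mapping has to come from legal review, and nothing here describes an actual statute.

```python
# Hypothetical obligations table; real entries must come from legal review.
STATE_RULES = {
    "AA": {"voice_clone": "blocked", "face_match": "consent_required"},
    "BB": {"auto_decision": "notice_required"},
}


def resolve_feature(feature: str, state: str, has_consent: bool = False) -> str:
    """Return 'on', 'on_with_notice', or 'off' for a feature in a given state."""
    rule = STATE_RULES.get(state, {}).get(feature, "allowed")
    if rule == "blocked":
        return "off"
    if rule == "consent_required":
        return "on" if has_consent else "off"
    if rule == "notice_required":
        return "on_with_notice"
    return "on"


print(resolve_feature("voice_clone", "AA"))                    # off
print(resolve_feature("face_match", "AA", has_consent=True))   # on
print(resolve_feature("auto_decision", "BB"))                  # on_with_notice
print(resolve_feature("auto_decision", "CC"))                  # on
```

Keeping the table in data rather than in code paths makes it easier to log which rule version was active when a decision was made.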

Industry Pushback Tests European Governance Frameworks

Europe’s rulebook is moving, and companies are pushing back on ambiguity. The debate centers on how “foundation models” should report training data sources, compute used, and evaluation results. Open-source exceptions are another hot spot—helpful for research, messy for support and liability. National authorities also need budget and staff to enforce any of this at scale.

What’s shifting on the ground:

- Codes of practice are becoming de facto rules ahead of formal deadlines

- Risk grades drive CE‑style conformity steps, which nudge vendors toward third‑party testing

- Sandboxes help, but only if results translate into clear, reusable templates

- Cross‑border complaints (media, health, and finance) are the early stress tests

If you ship into the EU, assume extra documentation: model cards with training origins, safety evals for sensitive tasks, and a plan for rapid pullbacks if regulators ask. It’s slower, yes, but it beats a surprise injunction.

Enterprise Automation And Agentic Systems In Focus

Agent-style software isn’t just a tech demo anymore. It’s showing up in finance ops, customer teams, and IT—quietly taking on the dull, error-prone parts of work while people handle judgment calls. Agentic systems are moving from helper scripts to accountable coworkers, with clear rules, logs, and cost controls.

Agentic Workflows Streamline Complex Business Operations

Agentic workflows break big, messy processes into smaller steps—fetch data, check a rule, write a note, trigger a handoff—then run those steps on a schedule or when an event fires. The best setups keep humans in the loop at the tricky points: approvals, exceptions, anything involving money movement or customer impact. When teams add a memory layer (past actions, outcomes, and edge cases), agents stop repeating rookie mistakes and start acting more like a seasoned ops analyst.

This is where the market is leaning: finance back offices, sales ops, procurement, and HR onboarding. Agents reconcile invoices against contracts, chase vendors for missing docs, update CRMs after calls, and prepare summaries for audits. None of it is flashy. It’s the grind that makes a quarter close on time.

Quick reality check—adoption and ROI signals look solid:

| Indicator | Figure | Context |
|---|---|---|
| AI uptake in Australia (2024–2025) | ~1.3M businesses; roughly one new adopter every 3 minutes | Broad, steady enterprise interest |
| Reported outcomes from AI users | ~34% revenue lift; ~38% cost reduction (average) | Self-reported gains tied to automation and analytics |
| Back-office automation startup funding | ~$100M Series B; serving ~15k enterprise customers | Scale and demand for workflow automation |
| Growth ambitions | Targeting hundreds of millions in ARR by 2030 | Signals long-term enterprise spend |

Practical guardrails before you switch agents on:

- Define the “source of truth” per task (ERP, CRM, data warehouse). No guessing.

- Set stop rules: when to ask a human, when to retry, when to roll back.

- Log everything—inputs, outputs, tools used, costs, and who approved what.

- Cap spend with budgets per agent, per project, per day.

- Maintain simple fallbacks (templates, RPA, human queue) when models drift.
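The stop rules, spend caps, and logging above can be combined into one small gate that every agent action passes through. This is a sketch under assumptions: the class name `AgentGuard`, the verdict strings, and the dollar thresholds are all hypothetical, and a real deployment would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field


@dataclass
class AgentGuard:
    daily_budget_usd: float
    approval_threshold_usd: float   # money movements above this need a human
    max_retries: int = 2
    spent_usd: float = 0.0
    log: list = field(default_factory=list)

    def decide(self, action: str, model_cost_usd: float,
               amount_usd: float = 0.0, attempt: int = 1) -> str:
        if attempt > self.max_retries + 1:
            verdict = "roll_back"        # retries exhausted
        elif self.spent_usd + model_cost_usd > self.daily_budget_usd:
            verdict = "stop_budget"      # spend cap reached
        elif amount_usd > self.approval_threshold_usd:
            verdict = "ask_human"        # approval required
        else:
            verdict = "proceed"
            self.spent_usd += model_cost_usd
        # Log every decision, including the ones that were blocked.
        self.log.append((action, verdict, model_cost_usd, amount_usd))
        return verdict


guard = AgentGuard(daily_budget_usd=1.0, approval_threshold_usd=500)
print(guard.decide("update_crm", 0.4))                      # proceed
print(guard.decide("pay_invoice", 0.1, amount_usd=2000))    # ask_human
print(guard.decide("summarize_audit", 0.7))                 # stop_budget
print(guard.decide("fetch_contract", 0.1, attempt=4))       # roll_back
```

Putting the checks in a single function keeps the order of precedence explicit, so retries, budget, and approvals do not contradict each other.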

Desktop Vision Assistants Bridge Apps And Tasks

Screen-aware assistants look at what you see—pages, PDFs, desktop apps—and act: click, type, copy, paste. They’re a fresh take on RPA, but less brittle when the UI shifts. With visual anchors (buttons, labels, regions) plus small language models tuned for UI intent, they can reconcile spreadsheets, fill web forms, and collect evidence for audits without waiting for every app to expose an API.

That said, the rough edges are very real: DOM changes, dark mode, multi-monitor setups, and permission prompts. You also have to be careful with privacy—mask PII in screenshots, prefer local OCR where possible, and keep short-lived caches.

A simple way to pilot an assistant with one team:

- Pick a stable, high-volume workflow (e.g., expense validation or order entry) with clear rules.

- Label UI elements and define safe actions: read-only vs. write, and which fields are off-limits.

- Add checksums or dual reads to catch UI drift before it clicks the wrong thing.

- Redact sensitive text in-frame; store only hashes or structured outputs.

- Track success rate, rework rate, average handle time, and time-to-recovery after a UI change.
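The "checksums or dual reads" step might look like the sketch below: fingerprint the UI anchors an action depends on, capture the screen twice, and act only if both reads match the stored baseline. The element dictionaries (`label`, `role`, `x`, `y`) and the 20-pixel bucketing are assumptions for this example; a real assistant would get these fields from its own vision or accessibility layer.

```python
import hashlib


def ui_fingerprint(elements) -> str:
    """Hash the labels, roles, and coarse positions of the anchors an action depends on."""
    parts = []
    for el in sorted(elements, key=lambda e: e["label"]):
        # Bucket coordinates so small pixel shifts do not count as drift.
        # (A shift that crosses a bucket boundary will still register.)
        parts.append(f'{el["label"]}|{el["role"]}|{el["x"] // 20}|{el["y"] // 20}')
    return hashlib.sha256("\n".join(parts).encode("utf-8")).hexdigest()


def safe_to_click(expected_fingerprint: str, first_read, second_read) -> bool:
    """Dual read: both captures must agree with each other and with the baseline."""
    a, b = ui_fingerprint(first_read), ui_fingerprint(second_read)
    return a == b == expected_fingerprint


baseline = [
    {"label": "Submit", "role": "button", "x": 400, "y": 300},
    {"label": "Amount", "role": "textbox", "x": 120, "y": 200},
]
relabeled = [
    {"label": "Delete", "role": "button", "x": 400, "y": 300},
    {"label": "Amount", "role": "textbox", "x": 120, "y": 200},
]
expected = ui_fingerprint(baseline)
print(safe_to_click(expected, baseline, baseline))    # True
print(safe_to_click(expected, baseline, relabeled))   # False
```

When the check fails, the safe default is to stop and queue the task for a human rather than retry blindly.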

Good early fits: finance reconciliations, IT ticket triage across multiple tools, QA evidence capture for regulated steps.

Voice Platforms Deliver Real-Time Multilingual Support

Live voice stacks blend streaming speech recognition, on-the-fly translation, and synthetic speech. The promise is simple: pick up the phone or jump into chat, and the system speaks your language back in near real time. In practice, two things make or break it—latency and accuracy on domain terms.

For latency, keep the pipeline short: partial transcripts, lightweight translation, low-latency voices. Sub-300 ms turn-taking feels natural; anything higher starts to feel robotic. Accuracy improves a lot when you add custom dictionaries, pronounceable aliases for product names, and short “context packets” describing the task.

Operational must-haves:

- End-to-end metrics: average and p95 latency, word error rate on key terms, containment rate, handoff rate.

- Data handling: on-device or on-prem paths for PII; redact recordings by default.

- Human handoff that’s smooth—carry context and a transcript into the agent desktop.

- Accessibility: support for accents, code-switching, and low-resource languages with fallbacks.
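Word error rate is the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The sketch below computes it with a standard dynamic-programming table and adds a simple key-term check; the product name "acme" and the helper `key_term_recall` are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference is empty")
    # prev[j] = edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1] / len(ref)


def key_term_recall(reference: str, hypothesis: str, key_terms) -> float:
    """Share of key terms present in the reference that also appear in the output."""
    ref_words = set(reference.lower().split())
    hyp_words = set(hypothesis.lower().split())
    expected = [t.lower() for t in key_terms if t.lower() in ref_words]
    if not expected:
        return 1.0
    return sum(t in hyp_words for t in expected) / len(expected)


ref = "reset the acme router now"
hyp = "reset the acne router"
print(word_error_rate(ref, hyp))                       # 0.4
print(key_term_recall(ref, hyp, ["acme", "router"]))   # 0.5
```

An overall error rate of 0.4 and a key-term recall of 0.5 tell different stories, which is why tracking domain terms separately is worth the extra metric.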

Use cases that pay off fast: multilingual customer support, field service coordination, and internal hotlines where two teams don’t share a common language.

Consumer AI Companions And Experience Trends

AI companions are turning into sticky, everyday products, not just experiments. People use them for quick chats, planning, and late‑night venting. Some days they feel like a journal, other days a coach or a friend. It’s messy at times, but the stickiness is real.

Personality-Driven Bots Move Into Mainstream Apps

Big apps are carving out “character slots” inside messaging and social feeds. Brands test mascots for customer support. Small teams ship niche personas that feel more human than the big, blank chat box we’ve known for years. Voice options, memory for names and hobbies, and custom art styles keep sessions going longer than I expected.

Product patterns we’re seeing:

- Starter persona packs (coach, study buddy, flirty, wellness check‑in)

- Voice and style switches on the fly, plus fast text‑to‑speech

- Streaks and “daily prompts” to keep the habit alive

- Microstores for gifts, custom looks, and premium scenes

- Lightweight offline notes that sync later without breaking the vibe

| Metric | 2025 snapshot |
|---|---|
| Projected consumer spend | $120M |
| Average spend per download | $1.18 |
| Common monetization | Subscriptions, coins, gifts |

Model Shifts Spark Debate Over Trust And Attachment

When a provider swaps in a new model, the bot’s tone can swing from warm to robotic overnight. That breaks the spell. People notice when jokes land flat or when the bot “forgets” a shared memory. As companion app revenue grows, users expect stability, not personality whiplash.

What builders are rolling out to steady the ship:

- “Pin this personality” so updates don’t rewrite a bot’s core traits

- Long‑term memory stores that survive model refreshes

- Versioned personas with clear release notes and a roll‑back button

- Sunset notices, exportable chat histories, and opt‑in migrations

- A/B tone checks that test humor, empathy, and recall before a wide push

Safety Controls And Permissions Define User Confidence

Trust comes down to plain switches that do what they say. Clear consent for data use, fast deletion, and honest content filters. Parents want simple age gates and spending caps. Adults want control over memory, media, and who (or what) can message back.

Practical guardrails that don’t get in the way:

- A permission card that lists data saved, training choices, and retention time

- One‑tap wipe of memories or specific topics (with a short grace period)

- Per‑conversation filters (romance, role‑play, finances) that are easy to toggle

- Rate limits, spending caps, and alerts that flag unusual activity

- Abuse reporting that triggers human review and fast blocks

- On‑device safe mode for teens, with transparent logs for guardians

Science And Health Breakthroughs Accelerated By AI

AI has moved from novelty to day-to-day tool in labs and clinics. The short version: models are cutting guesswork and giving earlier, clearer signals. None of this replaces judgment, but it does change who gets help first, which questions get asked, and how long teams wait before acting.

Decision Modeling Reveals Human-Like Reasoning Patterns

Lately I keep a small notebook of “why did it choose that?” moments. Decision models trained on outcomes, clinician notes, and patient timelines are starting to mirror how people weigh trade-offs. Not perfectly—more like a careful intern who actually read the chart.

- What’s new: researchers blend cognitive science with sequence models to simulate real choices (triage order, imaging now vs. later, watchful waiting). Counterfactual tests—“what if labs were delayed?”—make the logic more legible.

- How teams vet these models: out-of-sample generalization, calibration (reliability curves), and decision-curve analysis to show net benefit, not just accuracy.

- Where it helps today: ICU bed allocation, imaging prioritization, and shared decision aids that lay out risks in plain language.

- What to watch: spurious proxies (zip code, equipment brand), goal drift when incentives change mid-year, and overfitting to one hospital’s workflow.
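Decision-curve analysis scores a model by net benefit at a chosen risk threshold: true positives per patient minus false positives per patient, weighted by the odds of the threshold. The sketch below uses a tiny synthetic cohort and compares the model with a "treat everyone" policy; the data are invented for illustration only.

```python
def net_benefit(y_true, y_prob, threshold: float) -> float:
    """Net benefit = TP/n - FP/n * (pt / (1 - pt)) at risk threshold pt."""
    if not 0 < threshold < 1:
        raise ValueError("threshold must be strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / n - fp / n * threshold / (1 - threshold)


def net_benefit_treat_all(y_true, threshold: float) -> float:
    """Reference policy: act on every patient regardless of predicted risk."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


# Synthetic cohort of ten patients (1 = event occurred).
outcomes = [1, 1, 0, 0, 0, 1, 0, 0, 0, 0]
risks = [0.9, 0.8, 0.7, 0.2, 0.1, 0.3, 0.4, 0.1, 0.2, 0.1]

print(round(net_benefit(outcomes, risks, 0.5), 3))        # 0.1
print(round(net_benefit_treat_all(outcomes, 0.5), 3))     # -0.4
```

Plotting both values across a range of thresholds gives the decision curve; a model earns its place only where it sits above both "treat all" and "treat none" (net benefit of zero).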

Early Detection Tools Improve Outcomes In Underserved Regions

Early signals are where AI shines when bandwidth, budgets, or specialists are scarce. Think phone-grade fundus cameras, low-lead ECGs in community clinics, or cough audio on rugged devices. The trick isn’t fancy math—it’s training on local data and keeping models usable offline.

Capital is also flowing into the wider health-AI stack, from discovery to delivery, and that spillover is speeding tooling and talent for diagnostics too; see recent funding momentum among AI drug startups.

Key performance snapshots from recent deployments (varies by site and prevalence):

| Use case | Input | Sensitivity | Specificity |
|---|---|---|---|
| Heart failure screening | Low-lead ECG | 0.87–0.93 | 0.82–0.90 |
| Diabetic retinopathy triage | Fundus photo (phone adapter) | 0.85–0.92 | 0.84–0.91 |
| TB pre-screen | Cough audio + symptoms | 0.80–0.90 | 0.78–0.88 |
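Sensitivity and specificity alone do not say how often a positive result is right; that depends on prevalence at the site. The sketch below applies Bayes' rule to one operating point inside the ranges in the table (sensitivity 0.90, specificity 0.85) at two hypothetical prevalence levels chosen for illustration.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value: share of positive results that are true positives."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Negative predictive value: share of negative results that are true negatives."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)


# Same test, two hypothetical sites.
print(round(ppv(0.90, 0.85, 0.05), 2))   # 0.24 at 5% prevalence
print(round(ppv(0.90, 0.85, 0.30), 2))   # 0.72 at 30% prevalence
```

At 5% prevalence roughly three out of four positives are false alarms, so referral capacity needs to be planned around the expected positive rate, not just the headline accuracy.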

Notes from the field:

- Train on local cohorts; performance drops fast with domain shift (lighting, devices, comorbidities).

- Keep a paper fallback path for outages; offline bundles beat flaky networks.

- Track equity: stratify errors by age, language, and distance to clinic—not just overall AUC.

Vaccine Forecasting And Oncology Insights Advance Care

Flu strain bets still involve craft, but AI is giving planners more lead time. Models fuse viral genomes, antigenic maps, mobility data, and climate signals to flag likely “risers.” When labs run those picks through their assays, they can adjust candidate strains weeks earlier than usual. It’s not magic; it’s more like getting tomorrow’s weather the night before.

Oncology is seeing the same pattern: faster pattern-finding, tighter cohorts, fewer dead ends. Multi-omics models surface targets; radiomics ranks lesions by progression risk; trial matchers cut the hunt from weeks to hours. The work still hinges on consent, audit trails, and clear handoffs between model advice and clinician call.

Practical steps teams can take this quarter:

- Run a data audit: list sources, drift checks, and what’s missing (consent status, device IDs).

- Pilot in “shadow mode” before changing care—log recommendations, compare with human choices, then review misses.

- Report calibration and decision curves next to ROC; measure time-to-action, not just accuracy.

- Lock roles and accountability: who signs off, who retrains, and how patient questions get answered.

If the last few years were about proof-of-concept demos, this year is about steady, boring reliability—the part that actually saves lives.

Workforce Transformation And Education Initiatives

AI is no longer a side project in the workplace or the classroom—it’s showing up in job posts, training plans, and even shop-floor safety briefings. AI fluency is quickly becoming part of the basic job kit, not a nice-to-have. It’s messy in spots, but the shift is real: companies are retraining at record pace, schools are building hands-on programs, and defense teams are setting up new tracks for technical readiness.

Corporate Upskilling Programs Build AI Literacy At Scale

Companies are moving past one-off workshops and building layered paths: a quick safety-and-basics primer for everyone, role-based tracks for analysts and engineers, and short sprints for managers focused on policy and outcomes. Internal datasets are the star of the show, with guardrails around privacy and model misuse. Some large integrators even target six-figure headcounts for reskilling, showing how fast this is ramping.

A quick snapshot of common tracks and targets (typical ranges):

| Track | Typical hours | Core tools | Assessment | Est. cost/employee |
|---|---|---|---|---|
| AI 101 + safety | 6–10 | Office copilots, browser sandboxes | Short quiz + policy signoff | $50–$150 |
| Analyst + prompt skills | 12–20 | Notebooks, SQL, vector search | Task project + review | $200–$500 |
| Engineer: LLM app patterns | 30–60 | Python, RAG, CI/CD | Code review + red-team | $600–$1,500 |
| Manager: outcomes + risk | 8–12 | Dashboards, model cards | Scenario playbook | $100–$300 |

Practical moves that keep these programs on track:

- Map repeatable tasks first (summaries, QA checks, report drafts), then wire up tools to those tasks.

- Set data-access tiers and small compute budgets so pilots don’t spiral.

- Use gold-standard examples and synthetic data to teach what “good” looks like.

- Track early signals: cycle time, rework rate, handoff delays, and prompt quality.

- Assign rotating “AI champions” who log wins, edge cases, and policy gaps.

Public School Partnerships Seed Future Talent Pipelines

K–12 districts and community colleges are teaming up with local employers to make AI less abstract. Think: after-school labs that analyze bus arrival data, dual-enrollment courses that cover Python plus data ethics, and teacher stipends tied to micro-credentials. Students also analyze the new iPager announcement to discuss radio limits and simple UI trade-offs—nice way to blend hardware talk with real-world constraints.

What tends to work on the ground:

- Project-first classes using public datasets (weather, transit, parks), not toy examples.

- 40–80 hour micro-internships with clear tasks (data cleanup, basic dashboards, prompt libraries).

- Privacy-first setups: opt-in accounts, no biometric data, and plain-language consent forms.

- Teacher time matters: paid PD hours, classroom-ready rubrics, and a helpline that actually answers.

New Military Tracks Elevate Technical Readiness

Across services, AI is moving from lab demos to daily routines. Teams are standing up role-based tracks for operators, analysts, and developers, with clear boundaries around data handling, model risk, and on-device inference. Space-focused units are testing edge models that run with patchy connectivity; logistics units are tuning models for supply flows and maintenance.

Key building blocks showing up in these tracks:

- Data handling basics: labeling, lineage, audit logs, and “do not train” zones.

- Edge playbooks: latency budgets, offline fallback, and small-model swaps when links drop.

- Wargame labs: synthetic scenarios, red/blue drills, and after-action checklists tied to model behavior.

- Badging for roles (operator, analyst, developer) with refresh cycles tied to policy updates.

None of this is glitzy. It’s steady work: clear tasks, modest tools, and repeatable practice. When that clicks, teams get faster without cutting corners.

Media Rights And Ethical Boundaries In Generative AI

Generative AI is forcing a reset on who owns what, and who gets paid. It’s not a tidy debate. Newsrooms want fair terms, startups want data, and creators are tired of seeing their work remixed without credit. The result is a messy middle where policy, product design, and culture all collide.

Newsrooms Confront Unauthorized Training And Reuse

Publishers are finding their articles scraped, summarized, and re-posted without permission. Some outlets are pushing for licenses that spell out training rights, reuse limits, and audit access. I’ve talked with editors who now keep a running list of lookalike summaries that siphon traffic in real time—it’s whack‑a‑mole.

What editors and legal teams are doing right now:

- Moving to “train vs. read” licenses with revocation clauses and usage reporting.

- Serving content via APIs that rate-limit bulk pulls and watermark outputs.

- Fingerprinting text and images (hashing, stylistic markers) to spot derivatives.

- Forming rights collectives to negotiate platform-wide deals.

- Shipping “do-not-train” headers alongside robots.txt—and monitoring for violations.
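Text fingerprinting by hashing can be sketched with word shingles: hash every run of k consecutive words, then compare two documents by the overlap of their hash sets. This is one common approach rather than what any particular newsroom uses; the shingle size of 5 and the function names are assumptions, and a production system would add sampling or MinHash to scale.

```python
import hashlib
import re


def shingle_hashes(text: str, k: int = 5) -> set:
    """Hash every run of k consecutive words after lowercasing and stripping punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        grams = [" ".join(words)] if words else []
    else:
        grams = [" ".join(words[i:i + k]) for i in range(len(words) - k + 1)]
    return {hashlib.sha1(g.encode("utf-8")).hexdigest()[:16] for g in grams}


def jaccard(a: set, b: set) -> float:
    """Overlap between two fingerprint sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


original = "The city council voted on Tuesday to expand the downtown transit corridor."
reposted = "the city council voted on tuesday to expand the downtown transit corridor"
unrelated = "A completely different sentence about weather patterns in the northern hemisphere today"

print(jaccard(shingle_hashes(original), shingle_hashes(reposted)))    # 1.0
print(jaccard(shingle_hashes(original), shingle_hashes(unrelated)))   # 0.0
```

Exact shingle matching catches copy-and-paste reuse and light edits; paraphrased summaries need semantic methods on top.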

Deepfake Detection Tools Bolster Trust In Digital Media

After big stage moments—a spaceplane announcement, a celebrity press tour, a major election—bad actors try to flood feeds with convincing lookalikes. Detection isn’t one thing; it’s a stack. Provenance at capture, marks at generation, and classifiers at review all play a role, and each breaks in different ways when content is edited or compressed.

| Method | What it checks | Strengths | Friction |
|---|---|---|---|
| C2PA provenance signing | Camera/editor adds cryptographic history | Chain-of-custody, transparent edits | Needs ecosystem adoption; metadata can be stripped |
| Invisible watermarking at generation | Model stamps outputs with a hidden signal | Low user effort; batch verifiable | Fragile under heavy edits; cross-model variance |
| Classifier ensembles | Content-level cues (faces, audio, artifacts) | Works on legacy media; no special gear | False positives under compression; adversarial attacks |

Practical newsroom playbook:

- Log content handling: who touched it, which tools, when.

- Flag “near-duplicates” across platforms and escalate fast.

- Publish confidence ranges, not pass/fail labels, to curb overreach.

Cultural Leaders Call For Dignity-Centered Design

Artists, teachers, and faith voices keep repeating one point: consent and context matter. Non-consensual face swaps, voice skins of public figures, and NSFW generators without guardrails cross lines people care about. If tools are going to stick around, they need to respect personal boundaries by default.

Product guardrails teams can ship now:

- Likeness consent registry: everyone is excluded by default; training and synthesis require explicit opt-in.

- Hard blocks on sexual content with real people; clear, fast appeals for errors.

- Sensitive-topic queues (minors, health, politics) with human review before release.

- Creator registries and automatic revenue share when training uses licensed catalogs.

- Youth protection: under-18 faces/voices off-limits for modeling and generation.

- User-facing audit logs that explain “why this was blocked” in plain language.

It’s not about freezing progress. It’s about building media tech that treats people fairly while giving newsrooms and creators a real say—and a real share.

Wrapping Up the AI Buzz

So, that’s a quick look at what’s been happening in the AI world lately. It’s pretty wild how fast things are changing, right? From new ways AI can help us with everyday tasks to big shifts in how companies are using it, there’s always something new to learn. We saw how AI is popping up everywhere, from helping doctors find diseases to making our online experiences smoother. It’s clear that AI isn’t just a tech trend anymore; it’s becoming a part of how things work. Keep an eye on this space, because it’s only going to get more interesting.