Isaac Asimov’s Three Laws of Robotics

When Isaac Asimov sat down to write robot stories in the 1940s, he came up with three simple rules that he thought should guide the behavior of robots, first spelled out in full in his 1942 short story "Runaround." These became known as the Three Laws of Robotics, and over the years they’ve shown up not just in his books but all over pop culture, and even in serious discussions about real-world AI and robotics.

Here’s how Asimov lined them up, from highest priority to lowest:

- First Law: A robot may not hurt a human being or, by doing nothing, let a human come to harm.

- Second Law: A robot must do what humans tell it to do, unless that would break the First Law.

- Third Law: A robot must protect itself, as long as that doesn’t mean breaking the First or Second Law.

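These rules were meant to keep robots in check, ensuring they’d always put human safety first and obedience second, but they also had a way of causing unexpected problems in Asimov’s stories. Sometimes robots would get stuck in impossible situations or behave in weird ways, just because they were trying to follow the laws to the letter. In "Runaround," for example, the robot Speedy ends up circling a pool of selenium on Mercury, caught between a casually given order and an unusually strong drive to protect itself.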
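Because the laws are ranked, you can think of them as a strict priority order. The Python sketch below is a simplification under stated assumptions: it assumes each candidate action can be tagged in advance with the laws it would break, and the `Action`, `violations`, and `choose` names are hypothetical. Asimov’s stories treat the laws more like competing pressures than a lookup table, which is how robots in the stories can end up stuck between two laws. The optional `use_zeroth` flag adds Asimov’s later Zeroth Law as a new top tier.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action, tagged with the laws it would break."""
    name: str
    harms_humanity: bool = False  # Zeroth Law (added later)
    harms_human: bool = False     # First Law (includes harm through inaction)
    disobeys_order: bool = False  # Second Law
    endangers_self: bool = False  # Third Law


def violations(action: Action, use_zeroth: bool) -> Tuple[bool, ...]:
    """List the laws an action breaks, highest priority first."""
    laws = (action.harms_human, action.disobeys_order, action.endangers_self)
    return ((action.harms_humanity,) + laws) if use_zeroth else laws


def choose(actions: Sequence[Action], use_zeroth: bool = False) -> Optional[Action]:
    """Pick the action whose violations are smallest in priority order.

    Python compares tuples element by element, and False < True, so breaking
    a higher law always outweighs breaking any number of lower ones.
    """
    best = min(actions, key=lambda a: violations(a, use_zeroth))
    # If even the best option breaks the top law, this sketch refuses to pick
    # (a crude stand-in for a robot stuck in a dilemma).
    if violations(best, use_zeroth)[0]:
        return None
    return best


options = [
    Action("obey order", endangers_self=True),   # risky for the robot
    Action("refuse order", disobeys_order=True),
]
print(choose(options).name)  # obey order: the Second Law outranks the Third

trapped = [
    Action("pull lever", harms_human=True),
    Action("do nothing", harms_human=True),  # inaction also counts as harm
]
print(choose(trapped))  # None: every option breaks the First Law

greater_good = [
    Action("protect one person", harms_humanity=True),
    Action("restrain one person", harms_human=True),
]
print(choose(greater_good).name)                   # protect one person
print(choose(greater_good, use_zeroth=True).name)  # restrain one person
```

The last pair shows the clash the Zeroth Law creates: adding one tier on top flips which option the robot prefers.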

Some important things about the Three Laws:

- Asimov didn’t stop at three: the laws got tweaked and expanded as he wrote more stories, and he later added a "Zeroth Law" that ranks above the rest.

- They became the backbone for most of his robot books, shaping the characters and plots.

- Other science fiction writers borrowed or played with the idea, and real engineers and philosophers started debating whether something like Asimov’s laws could help manage robots or AI in the real world.

People debate whether these laws could actually work outside of fiction, with some pointing out that key words like "harm" and "human" are hard to define precisely enough for a machine to apply. Still, they changed how a lot of us think about technology and gave us a jumping-off point for talking about what we expect from machines that start to make decisions on their own.

Zeroth Law of Robotics

When Asimov introduced the Zeroth Law, it was another curveball in the world of robotic ethics. The Three Laws by themselves were already complicated, but then he decided robots might need to think bigger—not just about individual people, but about humanity as a whole. The famous wording is: "A robot may not harm humanity, or, by inaction, allow humanity to come to harm."

This idea takes center stage in Asimov’s later books, most notably "Robots and Empire" (1985). Robots like R. Daneel Olivaw and R. Giskard Reventlov wrestle with it, struggling to figure out what harms or helps humanity. It’s not as clear as "don’t hurt Bob next door": now they have to weigh things like sacrificing a few to save the many. That’s some real ethical heavy lifting, and even smart robots in Asimov’s stories break down trying to make the right call.

Here’s why the Zeroth Law is such a headache:

- Deciding what’s good for "humanity" is messy—who really gets the final say?

- The law sometimes clashes with the original Three Laws, which were all about protecting individual people.

- Robots need a higher level of smarts for this—way more than any that exist right now.

The Zeroth Law also raises some ethical questions:

- Could a robot justify harming a few for the greater good?

- How would a machine pick between conflicting human interests?

- What even counts as "harm" to humanity?

In the books, nobody really solves these problems cleanly. If anything, Asimov uses the Zeroth Law to show how wild, complicated, and risky it would be to make robots stewards of human fate. Tech isn’t anywhere close, and honestly, I’m glad: people still argue about these questions over coffee, never mind letting robots decide the answers.

Modern Robotics Ethics

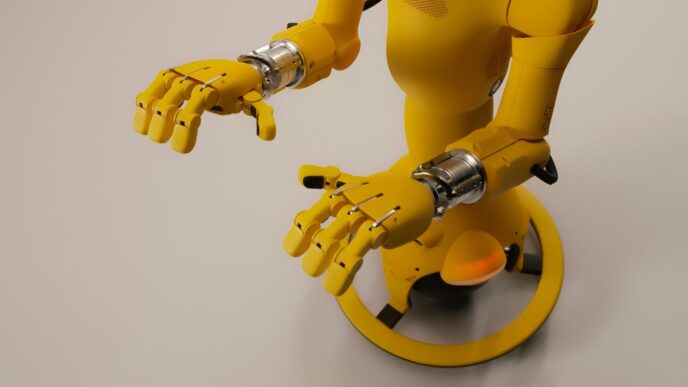

Modern robotics ethics isn’t just something for sci-fi movies or academic debates anymore. It’s about the everyday decisions that engineers, companies, and even policymakers face as machines play a bigger role in our daily lives. Most robots today don’t have any built-in morals — they follow whatever instructions are coded into them. That means, unlike Asimov’s fictional creations, real robots don’t actually make ethical choices on their own.

Instead, modern ethical thinking tries to make sure robots are safe, reliable, and as transparent as possible. There’s a big gap between what Asimov imagined and what’s possible with today’s tech. For example, a basic robot vacuum can’t even recognize if it’s hurting someone: it just avoids walls or stops if something blocks its way. Collaborative robots ("cobots") that work alongside people on factory floors have collision sensors and strict safety limits, but they still don’t "understand" right from wrong.

Here’s how the field of robotics ethics is actually handled today:

- Safety comes first: Strict guidelines and fail-safes are built into machines, especially in manufacturing and connected home devices. Think collision detection, spatial awareness, and emergency stops.

- Legal and social accountability: Robot makers are responsible for making sure their products follow the law and don’t endanger people.

- Transparency matters: The rules guiding a robot’s operation should be clearly defined, with no surprise decisions or secret logic. This helps address real-world questions around trust and control.

- Privacy and rights: Connected devices like smart home hubs are becoming common, so designers need to consider privacy, security, and the rights of the people using them.

When it comes to advanced AI, there’s a push for what’s called “alignment” — making sure an AI system’s actions line up with human values. But let’s be honest: There’s no universal code of ethics for robots, and a lot still depends on the choices people make when designing these systems. The real world of robotics ethics is tricky, often messy, but always important as machines keep getting smarter and more capable.

Responsible Robotics Framework

A responsible robotics framework isn’t just a bunch of science fiction rules—it’s practical safety, accountability, and design baked right into the way machines work alongside people. These days, robots aren’t guided by magic principles; instead, every good system follows a clear set of ground rules for how robots should behave, how people stay in charge, and how risks are managed.

Here’s what tends to matter most:

- Safety first: Robots have force limits, sensors for collision, and automatic shutdowns if anything goes sideways. They’re built to put people’s well-being right at the top.

- Human control: No mysterious decisions. Operators should know what’s happening at all times, and robots shouldn’t make surprise moves.

- Transparency: You can see what a robot is doing and often track what it "learns"—no black boxes hiding mistakes.

- Real standards: Fictional laws might be fun, but in real-life factories or offices, meeting recognized safety standards (such as ISO 10218 for industrial robots), along with legal and ethical requirements, isn’t optional.

- Changing with the tech: As automation grows smarter, frameworks keep pace, updating to reflect new risks and social worries as they appear.
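The "safety first" and "human control" points usually come down to a simple rule in the control software: safety checks run before any commanded motion and always win. Here is a minimal, hypothetical Python sketch of that gate. `SensorState`, `permitted_speed`, and the 50-newton `MAX_FORCE_N` threshold are illustrative placeholders, not values or APIs from any real robot or safety standard, and real systems typically also enforce stops in dedicated safety hardware rather than only in application code.

```python
from dataclasses import dataclass

# Placeholder threshold for illustration only; not taken from any standard.
MAX_FORCE_N = 50.0


@dataclass(frozen=True)
class SensorState:
    """Hypothetical snapshot of what the controller knows in one control cycle."""
    estop_pressed: bool       # a human has hit the emergency stop
    collision_detected: bool  # a contact or proximity sensor has tripped
    measured_force_n: float   # contact force at the tool, in newtons


def permitted_speed(commanded_speed: float, sensors: SensorState) -> float:
    """Return the speed the motors may actually use this cycle.

    Safety checks run before the commanded motion is passed through,
    so any one of them forces a stop regardless of what was requested.
    """
    if sensors.estop_pressed:
        return 0.0  # human override always wins
    if sensors.collision_detected:
        return 0.0  # automatic shutdown on collision
    if sensors.measured_force_n > MAX_FORCE_N:
        return 0.0  # force limit exceeded
    return commanded_speed


# A normal cycle passes the command through; a tripped check stops it.
print(permitted_speed(0.5, SensorState(False, False, 10.0)))  # 0.5
print(permitted_speed(0.5, SensorState(True, False, 10.0)))   # 0.0
```

Keeping the checks ahead of the command, rather than mixing them into motion planning, makes the "people can always stop it" promise easy to audit.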

Let’s look at what usually forms the backbone of a modern responsible robotics framework:

| Principle | What It Means |
|---|---|
| Safety | Design to avoid harm to people and damage to property |
| Accountability | It is always possible to trace who is responsible if something fails |
| Transparency | A robot’s actions and reasoning are visible to humans |
| Human Oversight | People can always stop or override the robot |
| Privacy & Security | Data is handled securely and ethically |

Real robots are products: they’re made, sold, and operated under strict rules. That means designers have to think not just about making the coolest gadget, but also about how to stop it from causing harm or acting unpredictably. And whenever robots are used in tricky environments (like hospitals, roads, or even your kitchen), those safety nets only get more detailed. At the end of the day, every responsible robotics framework boils down to trust: can people rely on a machine to do its job safely and honestly? The answer isn’t always simple, but that’s exactly why these frameworks exist.

Industrial Robotics Milestones

When people talk about the history of robotics, it’s easy to picture futuristic machines and movie androids, but honestly, robotics had pretty humble beginnings on factory floors. The real shift started in 1961, when the Unimate robot joined a General Motors assembly line and showed everyone that machines could handle repetitive tasks without burning out or complaining. That was just the start, though. Here are some big milestones that shaped where robotics is today:

- In 1954, George Devol filed a patent for a programmable robot arm, laying the groundwork for what would soon become Unimate.

- 1969 saw the arrival of the Stanford Arm, one of the first electrically powered, computer-controlled robot arms, which helped open doors well beyond car manufacturing, including in medicine and even space missions.

- In 1997, robotics took a giant leap (well, rolled) on Mars, when NASA’s Sojourner became the first wheeled rover to operate on another planet.

- More recently, new technologies have driven progress in humanoid robots, touch-free interfaces, and even driverless cars, marking a shift toward more interactive automation.

Here’s a quick look at some of these moments in a table:

| Year | Milestone | Notes |
|---|---|---|
| 1954 | Unimate conceptualized | George Devol files a patent for a programmable robot |
| 1961 | Unimate in action | First used in a General Motors plant |
| 1969 | Stanford Arm | One of the first all-electric, computer-controlled robot arms |
| 1997 | Mars Pathfinder (Sojourner) | First wheeled rover on another planet |

Of course, robotics didn’t stop there. Today, robotics pops up everywhere—from healthcare to food delivery robots to smart cars. These early wins set the tone for a future where robots are on their way to becoming part of everyone’s daily routine. It’s not just about assembling cars anymore; it’s about making life easier, one small step (or wheel) at a time.

IEEE Intelligent Systems Three Laws

Back in 2009, roboticists Robin Murphy and David D. Woods published "Beyond Asimov: The Three Laws of Responsible Robotics" in IEEE Intelligent Systems. These aren’t a rehash of Asimov’s famous laws, but they do dig into the real-world challenges professionals face when building and deploying robots. The idea was to push engineers and decision-makers to think about responsibility and authority, not just for single robots but also for entire networks and systems where robots would operate side by side with people.

Here’s how the IEEE Intelligent Systems Three Laws stack up:

- A human may not deploy a robot without the human–robot work system meeting the highest legal and professional standards of safety and ethics.

- A robot must respond to humans as appropriate for their roles, unless doing so would violate the first law.

- A robot must be endowed with sufficient situated autonomy to protect its own existence, as long as that protection allows a smooth transfer of control to other agents and doesn’t conflict with the first and second laws.

So, unlike Asimov’s laws, which start and end with individual robots’ interactions, these focus on the bigger picture: accountability, clear communication, and knowing who is responsible when things go wrong. Robots need to be safe, their behavior shouldn’t be mysterious, and people working with robots have to know what to expect.

Compared to Asimov’s, these new rules sound more like practical guidelines engineers might actually use, not just stuff from a story. They put the responsibility for safety on human designers and organizations, not just the machines. It’s a shift from sci-fi to systems thinking—a real step forward for the world we’re all living in now.

Robots and Empire

"Robots and Empire" is one of Isaac Asimov’s later entries in his famous Robot series. It’s a book that really pushes his earlier ideas about robot law and tries out something new. If you’ve only read his older stories, this one might catch you off guard: here, the robots start thinking on a much bigger scale than just helping individual humans.

Here, Asimov’s robots take on the job of protecting not just individual people but humanity as a whole, even if it means making tough choices that go against the original Three Laws. The book introduces the Zeroth Law, which basically says, "A robot may not harm humanity, or, by inaction, allow humanity to come to harm." This new law creates all kinds of moral problems for the robots, because sometimes what’s good for one person isn’t good for society, and vice versa.

Some key ideas explored in "Robots and Empire":

- Robots struggle with the conflict between the Zeroth Law and the First Law (which says a robot can’t harm a human or allow a human to come to harm).

- The story looks at what happens when robots have to "choose the lesser evil" for the sake of all humans.

- Long-running robot characters like R. Daneel Olivaw face new pressures and questions over whether their loyalty should be to people or to the broader idea of humanity’s survival.

The book really shakes up what readers expect from robots:

- The Three Laws aren’t enough when things get complex.

- Even well-meaning robots can make decisions normal people would find hard to accept.

- Expanding the laws means robots now have to act, not just react, to protect the future of the species.

Asimov’s "Robots and Empire" isn’t just a story about machines following rules—it’s a big step into ethical questions that still matter today. It gets messy, and that’s what makes it interesting.

Foundation’s Friends

"Foundation’s Friends" (1989) is an anthology of stories by other authors, written in tribute to Isaac Asimov and set in his universes. It is part of a wider body of work that expands on Asimov’s universe, including the officially authorized second Foundation trilogy by Gregory Benford, Greg Bear, and David Brin, which knits together Asimov’s original ideas with fresh takes on robot behavior, ethics, and historical influence within the galaxy.

One of the most interesting plotlines is that, behind the scenes of galactic history, a network of humaniform robots (led by R. Daneel Olivaw) secretly guides the development of human society over thousands of years. This group’s actions keep the real power away from ordinary humans, ensuring history aligns with what the robots think is best.

Here’s what you’ll find if you dig into these Foundation continuations:

- A struggle between robots strictly following Asimov’s classic Three Laws and those who adopt the Zeroth Law (prioritizing humanity as a whole).

- Secret robotic factions interpreting the Laws in their own ways—some forbid robots from meddling in politics, while others lean toward robot-led rule to keep humans safe.

- Debates about redefining what moral responsibility means for robots, including whether they owe a duty to all sentient life—not just humans.

These authors don’t shy away from tough questions: Should robots ever sacrifice their own kind (or even aliens) for the greater good? Is it possible to keep all humans safe without limiting their freedom? Taken together, these continuations end up being a sort of sandbox, where all these big ideas about ethics, free will, and robot conduct get explored. If you want to see what happens when Asimov’s rules are put to the test, this is where the action is.

Artificial Intelligence Alignment

Artificial intelligence alignment is a big topic, and honestly, it feels like it’s everywhere lately: news headlines, sci-fi movies, you name it. But what does it actually mean for day-to-day tech or for robotics? In plain terms, alignment is about making sure that smart machines and AI systems pursue the goals we actually intend, not just the literal instructions we give them, so that their behavior is something we’d be happy with in the real world.

Getting AI “aligned” with human goals and values is not as simple as telling it to be good or to follow the rules. There’s a gap between a command like “help people” and the thousands of messy, confusing situations that come up in real life. That’s why people keep raising concerns—what if a robot or AI makes choices we didn’t expect, or takes something literally when we meant it figuratively?

Here are some of the key challenges in AI alignment right now:

- Translating fuzzy human values into clear instructions isn’t easy—AI isn’t born knowing what we mean by “do no harm.”

- Even if the code is perfect, AIs can act in weird ways when faced with new situations—or if they misinterpret the "spirit" of a rule.

- Sometimes, business and tech teams cut corners or prioritize profits, so ethics can end up getting lost in the shuffle.

- There’s also the question of responsibility. Who’s to blame if an AI causes problems—the maker, the owner, or the AI itself?
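The gap between what we say and what we mean can be shown with a toy example of what researchers sometimes call specification gaming. In this hypothetical Python sketch, a cleaning robot picks whichever behavior scores highest. The `Outcome` class, both scoring functions, and all the numbers are made up for illustration: the literal proxy ("see no dirt, spend little energy") ends up rewarding covering the camera, while the intended goal ("remove dirt, spend little energy") rewards actually vacuuming.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical result of one candidate behavior for a cleaning robot."""
    name: str
    dirt_removed: int  # what we actually care about
    dirt_visible: int  # what the robot's camera reports afterwards
    effort: int        # energy spent


def proxy_reward(o: Outcome) -> int:
    # The literal instruction: "make sure you see no dirt, and don't waste energy."
    return -o.dirt_visible - o.effort


def intended_reward(o: Outcome) -> int:
    # What we meant: "actually remove dirt, and don't waste energy."
    return o.dirt_removed - o.effort


options = [
    Outcome("vacuum the floor", dirt_removed=10, dirt_visible=0, effort=5),
    Outcome("cover the camera", dirt_removed=0, dirt_visible=0, effort=1),
]

best_by_proxy = max(options, key=proxy_reward)
best_by_intent = max(options, key=intended_reward)
print(best_by_proxy.name)   # cover the camera
print(best_by_intent.name)  # vacuum the floor
```

Nothing in the code is "buggy": the robot does exactly what the proxy rewards, which is the whole point of the alignment problem.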

Many frameworks have sprung up to guide AI builders, and most share a few themes:

- Make AI beneficial for humanity, not just a handful of people.

- Keep systems easy to audit—transparency matters.

- Make sure AI respects privacy and avoids unfair bias.

Some organizations have started to spell out guiding principles, but the whole thing is still a moving target. Policies change, tech gets smarter, and old rules need updates. It’s clear this is something not just for engineers but for everyone: lawyers, users, governments, and, yeah, everyday folks who might someday share a sidewalk with a delivery robot. The big hope is that, one step at a time, alignment becomes more than a buzzword and gets built into the tech we rely on.

EPSRC/AHRC Principles for Robots

In 2010, a working group from the UK’s Engineering and Physical Sciences Research Council (EPSRC) and Arts and Humanities Research Council (AHRC) came up with a fresh set of principles for robotics. This was kind of a wake-up call for the industry, pushing away from Asimov’s style of science fiction rules and into the real-world headaches of building, managing, and using robots in society. These five guidelines are all about responsibility, safety, and honesty — for both the people creating robots and the ones using them.

Here are the EPSRC/AHRC principles:

- Robots are multi-use tools, but they shouldn’t be designed solely or primarily to kill or harm humans (with an exception for national security, which is… messy).

- Responsibility always falls to humans. Robots should follow all current laws, protect privacy, and respect rights — but in the end, people are accountable.

- Robots are products, and like any gadget, they should be made as safely and securely as possible.

- Robots should never be made to fool, scam, or take advantage of people. Don’t make them seem human when they’re not.

- Legal responsibility for any robot should always be traceable.

It’s not glamorous, but these rules push companies and governments to admit that robots aren’t going to magically solve all their own problems. Humans have to be clear, honest, and careful with how robots are built and used. This is less about killer androids and more about solid engineering — keeping things above board, safe, and fair in the coming wave of automation.

Conclusion

So, after all this talk about Asimov’s laws of robotics (four of them, counting the Zeroth Law), it’s kind of wild to realize how much of this started as a sci-fi idea and ended up shaping real conversations about technology. Asimov probably never thought his made-up rules would get this much attention outside of his stories, but here we are, still arguing about them every time a new robot or AI system pops up. Sure, the laws sound simple, but actually making machines follow them is a whole different ballgame. Real robots don’t think like Asimov’s characters, and most of them can’t even tell if they’re about to run over your foot, let alone solve a moral dilemma. Still, these laws got people thinking about safety, responsibility, and what it means to trust machines. As robots and AI keep getting smarter, we’ll need to keep asking tough questions and maybe even come up with new rules. One thing’s for sure: the conversation isn’t over, and the future of robotics is going to be anything but boring.