Fundamentals Of Dexterity Robotics Systems

Dexterity robotics is all about giving machines the ability to handle objects and perform tasks with a level of skill that starts to look a lot like what humans can do. Think about picking up a fragile egg without cracking it, or threading a needle – these are the kinds of complex actions that dexterity systems aim to replicate.

Mimicking Human Handling With Advanced Technology

At its core, this field tries to copy how we use our hands and bodies to interact with the world. It’s not just about grabbing and moving; it’s about the subtle adjustments, the feel of an object, and the planning that goes into each action. This blend of sensing, processing, and movement is what sets dexterity robotics apart. For instance, systems use advanced sensors to understand an object’s texture, weight, and shape, then use that information to control their movements precisely. This allows robots to perform tasks that were once thought to be exclusively human, like assembling intricate parts or even performing delicate surgical procedures.

Essential Components For Precise Control

Achieving this level of skill requires a few key ingredients. First, you need smart sensors that can detect changes in the environment and the object being handled. These sensors provide real-time data, like how much pressure is being applied or if an object is slipping. Then there’s adaptive motion control, which uses this sensor data to constantly adjust the robot’s movements, making them smooth and accurate. Think of it like a dancer adjusting their steps based on the music and their partner. Finally, multi-fingered grippers are often used, as they offer more ways to hold and manipulate objects than simple pincers. These grippers, combined with force feedback, allow the robot to sense how much pressure it is applying and ease off before it damages a delicate item.

Advanced AI Techniques Driving Dexterity Robotics Manipulation

So, how do robots get that almost human-like touch when they’re grabbing things? It’s all thanks to some pretty clever AI. Think of it like teaching a kid to ride a bike – they learn by trying, falling, and adjusting. That’s basically what’s happening with these robots.

Machine Learning For Natural Robotic Touch

Machine learning is a big part of this. It lets robots learn from experience, kind of like how we get better at tasks with practice. For instance, the DemoStart framework uses a method called reinforcement learning. This is where a robot tries different ways to grip an object, using feedback from its sensors – its digital eyes and ears – to figure out what works best. It learns to adjust its grip strength and precision, making its touch feel more natural. It’s like the robot is constantly saying, “Okay, that didn’t work, let’s try this instead.” This is how they get good at handling everything from a stiff box to a soft piece of fabric.
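To make the trial-and-error idea concrete, here is a minimal sketch of the simplest kind of reinforcement learning, an epsilon-greedy bandit that learns which grip force works. It is not how DemoStart itself is implemented; the `simulated_grasp` function, the candidate forces, and the reward rule are all made up for illustration.

```python
import random

def simulated_grasp(force, ideal=4.0, tolerance=1.0):
    """Hypothetical stand-in for a real grasp attempt: reward 1.0 when the
    force is close to the ideal, 0.0 otherwise (the object slips or is crushed)."""
    return 1.0 if abs(force - ideal) <= tolerance else 0.0

def learn_grip_force(candidate_forces, episodes=500, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over a fixed set of grip forces."""
    rng = random.Random(seed)
    value = {f: 0.0 for f in candidate_forces}  # running average reward per force
    count = {f: 0 for f in candidate_forces}    # how often each force was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            force = rng.choice(candidate_forces)          # explore: try something random
        else:
            force = max(candidate_forces, key=value.get)  # exploit: use the best so far
        reward = simulated_grasp(force)
        count[force] += 1
        # Incremental mean update: "that didn't work, let's try this instead."
        value[force] += (reward - value[force]) / count[force]
    return max(candidate_forces, key=value.get)

best = learn_grip_force([1.0, 2.0, 4.0, 8.0])
print("learned grip force:", best)
```

A real system learns over continuous actions and rich sensor input rather than four fixed forces, but the loop of try, score, and update is the same basic shape.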

Reinforcement Learning And Imitation Learning

Beyond just learning from trial and error, robots are also learning by watching. This is called imitation learning. A great example is the ALOHA Unleashed system. Instead of just one arm, it uses two arms working together. They learn by watching demonstrations, which really speeds up their learning process. Plus, their grippers, which are like the robot’s hands, can actually change shape to fit different objects. This makes them super adaptable. It’s like having a multi-tool for a hand.
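One common way to learn from demonstrations is behavior cloning, where the robot fits a function that maps what it observed to what the demonstrator did. The sketch below is a deliberately tiny version, assuming a one-dimensional case where the observation is an object's width and the action is how far to open the gripper. The demonstration numbers are invented, and this is not the method ALOHA Unleashed uses.

```python
def fit_policy(demos):
    """Behavior cloning in one dimension: least-squares fit of
    action = slope * observation + intercept from (observation, action) pairs."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in demos)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda observation: slope * observation + intercept

# Hypothetical demonstrations: (object width in cm, gripper opening in cm).
demos = [(2.0, 2.5), (4.0, 4.5), (6.0, 6.5)]
policy = fit_policy(demos)

# The learned policy generalizes to a width it never saw demonstrated.
print(policy(5.0))
```

Real imitation learning systems replace the straight-line fit with a neural network and the single number with camera images and joint positions, but the idea of copying demonstrated input-output pairs carries over.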

Physical AI And Simulation Environments

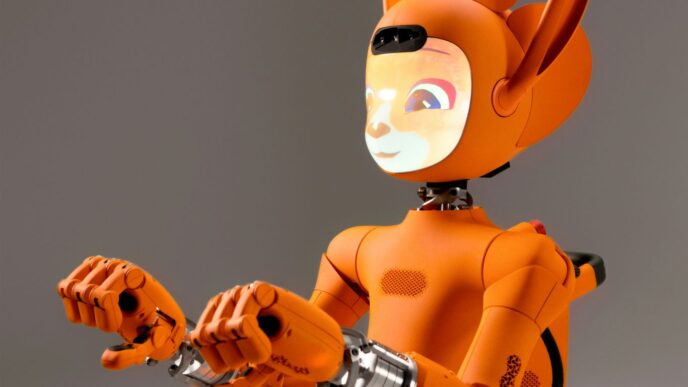

To really nail these complex moves, engineers are also using something called Physical AI. This means the AI is directly connected to the robot’s physical parts, using sensor feedback to make instant adjustments. It’s not just about writing code; it’s about the robot reacting in real-time to what’s happening around it. They also use simulation environments, which are like digital playgrounds. Here, they can test out new ways for robots to grab things without risking any actual robots or objects. This lets them refine things like dynamic grasp planning – figuring out the best way to hold something – in a safe space before trying it out in the real world. This blend of real-world testing and digital practice is key to making robots truly dexterous. It’s how we’re moving towards robots that can handle delicate tasks with surprising skill, much like the advancements seen in human-like robots.

Here’s a quick look at how these AI methods help:

- Reinforcement Learning: Robots learn optimal actions through trial and error, improving grip and manipulation.

- Imitation Learning: Robots learn by observing and mimicking human or expert demonstrations, speeding up skill acquisition.

- Sensor Fusion: Combining data from multiple sensors (like vision and touch) to create a more complete understanding of the environment and the object being handled.

- Simulation Environments: Creating virtual worlds to train and test AI algorithms safely and efficiently before deployment.
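To give a feel for sensor fusion from the list above, here is a small sketch that combines a vision estimate and a touch estimate of the same quantity using inverse-variance weighting, a standard way to merge independent noisy measurements. The sensor values and variances are invented for illustration.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of (value, variance) pairs.
    More certain sensors (smaller variance) get a larger say."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused value and fused variance

# Hypothetical readings of an object's width in mm: (estimate, variance).
vision = (10.0, 4.0)  # camera: noisier
touch = (12.0, 1.0)   # tactile sensor: more precise
fused_value, fused_variance = fuse([vision, touch])
print(fused_value, fused_variance)
```

The fused estimate lands closer to the more reliable sensor, and its variance is smaller than either input, which is the whole point of combining them.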

Industrial Applications Of Dexterity Robotics Systems

Streamlining Manufacturing And Logistics

Dexterity robotics systems are really changing how factories and warehouses operate. Think about unloading shipping containers. Before, this was a slow, manual job, often tough on workers. Now, robots with advanced vision and touch sensors can sort through items, whether they’re stiff boxes or soft bags, with surprising speed and care. This isn’t just about speed, though. It means fewer damaged goods and a more predictable workflow. In manufacturing itself, these robots handle repetitive tasks on assembly lines, like putting small parts together or packaging finished products. They do it with a consistency that’s hard for people to match, day in and day out. This leads to better quality control and less waste.

Precision In Electronics And Pharmaceuticals

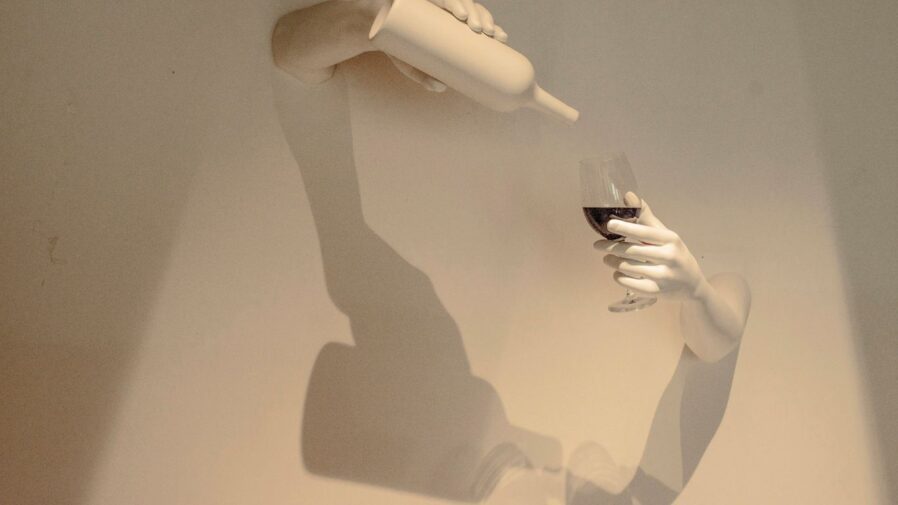

When you’re dealing with tiny electronic components or sensitive pharmaceutical ingredients, precision isn’t just a nice-to-have; it’s a must. Dexterity robots excel here. In electronics assembly, they can pick and place minuscule parts onto circuit boards, a task that requires incredibly steady hands. Even a slight tremor could ruin the whole thing, but these robots are built for that level of detail. For pharmaceuticals, imagine a robot carefully handling vials or dispensing precise amounts of medication. The systems use advanced sensors to detect the weight and texture of materials, allowing them to adjust their grip and movement accordingly. This careful handling is vital for maintaining product integrity and meeting strict industry regulations.

Enhancing Automotive And Service Sectors

In the automotive industry, dexterity robots are taking on more complex assembly jobs. They can now install intricate parts, like dashboard components or even engine parts, with accuracy and speed. This frees up human workers for more specialized tasks that require problem-solving or oversight. Beyond manufacturing, these robots are starting to show up in service roles. Think about maintenance tasks that require reaching into tight spaces or handling tools with a delicate touch. Some systems are even being developed for tasks like cleaning or basic repair work, showing a broad potential for robots to assist in a variety of service-oriented jobs.

Challenges And Research Directions In Dexterity Robotics

Getting robots to do things that seem simple for us humans is still a big hurdle. Think about a robot trying to tie a knot or carefully pick up a very soft piece of fruit. Tasks like these require a close study of kinematics, the geometry of how a robot’s joints and links move. Basically, engineers need to figure out the exact sequence of movements for each joint to copy what we do naturally. It gets really complicated when you have systems with many joints that have to work in places that aren’t set up perfectly.
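For a taste of what the kinematics involves, here is a sketch for the textbook case of a two-link arm moving in a flat plane. Forward kinematics turns joint angles into a fingertip position, and inverse kinematics goes the other way. The link lengths and the target point are arbitrary assumptions, and real dexterous hands have many more joints than this.

```python
import math

def forward(theta1, theta2, l1=1.0, l2=1.0):
    """Fingertip (x, y) of a planar two-link arm from its joint angles (radians)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse(x, y, l1=1.0, l2=1.0):
    """Joint angles that reach (x, y). Returns the solution with theta2 >= 0;
    a mirrored solution with theta2 <= 0 also exists."""
    d = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(d) > 1:
        raise ValueError("target out of reach")
    theta2 = math.acos(d)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2

# Round trip: solve for the angles, then check where the fingertip ends up.
angles = inverse(1.2, 0.5)
reached = forward(*angles)
print(angles, reached)
```

With two joints the answer has a closed form. With many joints there are usually infinitely many solutions, which is part of why the problem gets hard so quickly.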

Researchers are also focusing on something called sensor fusion. This is all about combining information from different sensors to get a clearer picture of what’s going on. Right now, little glitches in sensor data, like background noise, can mess up the real-time feedback needed for smooth actions. At the same time, people are trying out new, tougher materials and smarter joint designs to cut down on mistakes and make robots better at different jobs. They’re also looking at how to make these systems more adaptable, like how wearable technology is trying to adapt to user needs.
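One simple way to tame noisy sensor readings is an exponential moving average, which blends each new reading with the running estimate. This is only a sketch of the general idea, with invented readings and an arbitrary smoothing factor `alpha`. Production systems often use more sophisticated filters.

```python
def smooth(readings, alpha=0.5):
    """Exponential moving average: each output mixes the new reading
    (weight alpha) with the previous estimate (weight 1 - alpha)."""
    smoothed = []
    estimate = None
    for reading in readings:
        if estimate is None:
            estimate = reading  # the first reading seeds the filter
        else:
            estimate = alpha * reading + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed

# Hypothetical pressure readings with a single glitch (the 5.0).
filtered = smooth([1.0, 1.0, 5.0, 1.0])
print(filtered)  # [1.0, 1.0, 3.0, 2.0]
```

The glitch is damped rather than removed, and a smaller `alpha` damps it more at the cost of slower reactions. That trade-off between smoothness and responsiveness is exactly what makes real-time feedback tricky.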

Another big area is testing how reliable these robots are. Researchers put robots through all sorts of tests in different situations to see how well they perform. Engineers are also developing smart ways for robots to decide what to do quickly in tricky, unplanned situations. It’s a bit like giving the robot its own quick thinking skills. With these improvements, robots might soon be able to match the fine movements of human hands. This could really change how precision work gets done in factories and other workplaces.

Future Trends And Investment Landscape In Dexterity Robotics

It’s pretty wild how much money is flowing into robotics that can do fine, human-like tasks these days. We’re seeing big investment rounds, like that $95 million boost led by some major players, pushing total capital well over a quarter of a billion dollars. This isn’t just pocket change; it shows that both investors and large companies are really betting on robots that can handle things with more finesse, especially when it comes to moving stuff around in warehouses and factories.

What’s driving this cash infusion? Well, startups are now building these complete systems that combine advanced robots with smart sensors. Venture capital firms are noticing that when robots get better at manipulating objects, it opens up all sorts of new ways to use them in industry. Think about automated systems that don’t just grab and move containers, but can actually adjust on the fly if something changes on the production line. That’s the kind of future people are investing in.

Beyond just funding, there’s a lot of teamwork happening. Robotics companies are teaming up with big, established industrial companies. This partnership approach helps get new technology out of the lab and into real-world use much faster. We’re going to see more of this collaboration, more smart computer brains (AI) getting integrated, and a move away from robots working alone to systems that are all connected and working together.

Investor Confidence And Corporate Collaborations

Investor confidence is definitely up, and when you mix that with smart partnerships between companies, it’s creating a really dynamic environment for dexterity robotics. This growth is all about making things more precise and efficient across a bunch of different industries.

Smart Container Handling And Industrial Automation

One of the biggest areas getting attention is how robots can handle containers. The idea is to make loading and unloading much smoother and faster. This involves robots that can adapt to different types of containers and environments, making industrial automation much more flexible.

Interconnected Systems And Machine Intelligence

Looking ahead, the trend is towards robots that aren’t just standalone machines. They’ll be part of larger, interconnected systems. Machine intelligence will play a big role, allowing these systems to learn, adapt, and make smarter decisions. This move towards connected intelligence is what will really change how industries operate.

Core Components Of Dexterity Robotics Technologies

So, what actually makes these super-precise robots tick? It’s a mix of clever hardware and smart software working together. Think of it like building a really good chef’s knife – you need the right steel, a good handle, and a sharp edge, all working in harmony.

Smart Sensors And Adaptive Motion Control

First off, you’ve got the sensors. These aren’t just simple on-off switches; they’re like the robot’s senses. They can detect pressure, texture, temperature, and even how an object is moving. This constant stream of information lets the robot adjust its grip and movements in real-time, almost like a human instinctively knows how much pressure to use. Adaptive motion control is the brain that takes this sensor data and tells the robot’s motors exactly what to do. It’s what allows a robot to go from picking up a heavy box to gently placing a tiny screw without missing a beat. This combination is key for handling a wide variety of objects, from soft, squishy things to hard, slippery ones.

Multi-Fingered Grippers And Force Feedback

Forget those clunky, two-fingered claws you might see in old sci-fi movies. Modern dexterity robots often use grippers with multiple fingers, much like our own hands. This allows for a much more nuanced and secure grasp. Different finger configurations can be used depending on the object’s shape and fragility. Coupled with this is force feedback. This is like the robot having a sense of ‘touch’ – it can feel how much pressure it’s applying. If a sensor detects too much force, the system can immediately back off, preventing damage to delicate items. It’s this ability to feel and adapt that really sets these systems apart.
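The back-off behavior described above can be sketched as a single update rule that runs on every control cycle. The force limit, the step size, and the `slipping` flag are assumptions for illustration, and a real controller would work with calibrated sensor units and much faster loops.

```python
def update_grip(command, measured_force, slipping, max_force=5.0, step=0.2):
    """Return the next grip command from one round of force feedback."""
    if measured_force > max_force:
        return max(0.0, command - step)  # too much force: back off
    if slipping:
        return command + step            # object slipping: squeeze a little harder
    return command                       # grip is fine: hold steady

# Hypothetical sequence of (measured force, slipping?) readings.
command = 3.0
for measured_force, slipping in [(2.0, True), (3.0, True), (6.0, False), (4.0, False)]:
    command = update_grip(command, measured_force, slipping)
print(round(command, 2))
```

Checking the force limit before the slip flag is a design choice here: protecting a delicate item takes priority over holding on tighter.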

Machine Vision And Smart Motion Planning

Finally, there’s how the robot ‘sees’ and ‘thinks’ about its movements. Machine vision systems act as the robot’s eyes, identifying objects, their position, and orientation. This visual data is then fed into smart motion planning algorithms. These algorithms figure out the most efficient and safest path for the robot’s arm and gripper to take to complete a task. It’s not just about moving from point A to point B; it’s about avoiding obstacles, coordinating multiple movements, and executing the task with precision. This integration of sight and planning is what allows robots to perform complex assembly, sort items quickly, or even perform intricate maintenance tasks.
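As a rough illustration of motion planning, the sketch below finds the shortest obstacle-free route across a small grid using breadth-first search. Real planners work in the arm's joint space with far richer models, so treat the grid, the start, and the goal here as a toy stand-in.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Breadth-first search on a grid where 1 = obstacle and 0 = free.
    Returns the shortest list of (row, col) cells, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical workspace: a wall blocks the direct route downward.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 0))
print(path)
```

The planner routes around the wall instead of through it, which is the "not just point A to point B" part in miniature.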

Wrapping Up: The Future Of Robot Hands

So, we’ve seen how robots are getting way better at doing things with their hands. It’s not just about lifting heavy stuff anymore; it’s about delicate tasks, like picking up something fragile without breaking it. This progress is happening thanks to smarter sensors, better ways for robots to move, and a lot of AI learning. Companies are investing big money, and partnerships are forming, which means we’ll likely see these advanced robots in more places soon. It’s pretty exciting to think about how this will change factories and other jobs, making things more efficient and maybe even safer. The journey to truly human-like robotic dexterity is still ongoing, but the steps being taken now are really significant.