Ever wonder why your computer feels super fast sometimes and then, out of nowhere, it just crawls? Part of the answer lies in something called the processor cache. Think of it as a super-speedy mini-storage built right into your computer’s brain, the CPU. It holds onto data your computer uses all the time so the CPU doesn’t have to go digging around in slower main memory. This little trick makes a huge difference in how snappy your computer feels. We’re going to break down what processor cache is, how it works, and why it’s such a big deal for everything from gaming to just browsing the web.

Key Takeaways

- Processor cache is a small, fast memory area that helps the CPU get to frequently used data quickly.

- Understanding how cache hits (data found in cache) and cache misses (data not found) work helps explain why some tasks are faster than others.

- There are different levels of processor cache (L1, L2, L3), each with its own speed and size, working together to keep data flowing.

- Processor cache is different from buffers, but both play a part in moving data around efficiently inside your computer.

- A good processor cache setup means less waiting for your CPU, quicker response times for your apps, and a smoother overall computer experience.

The Core Concept of Processor Cache

Defining Processor Cache

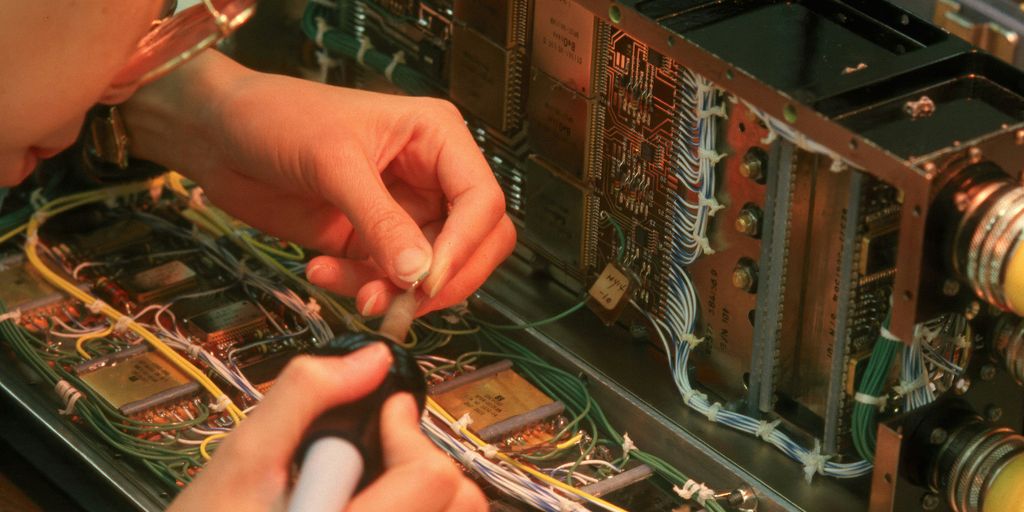

So, what is a processor cache, really? Think of it like this: your CPU needs information to do its job, right? Instead of always grabbing that info from the main memory (which is kinda slow), it checks the cache first: a small, super-fast storage area built onto the CPU chip itself. The cache holds copies of the data and instructions the CPU has used recently or is likely to need soon. It’s like keeping your favorite tools on your workbench instead of in the garage. The resulting performance boost is significant.

The Purpose of Caching in CPUs

The whole point of caching is to speed things up. CPUs are incredibly fast, but they spend a lot of time waiting for data to arrive from memory. Caching cuts down on this waiting time. By storing frequently accessed data closer to the CPU, it reduces the latency involved in data retrieval. Imagine having to walk across the room every time you needed a pen versus having one right on your desk. Caching minimizes CPU idle time and keeps things running smoothly. It’s all about efficiency.

Why Speed is Paramount in Digital Processing

In today’s digital world, speed is everything. We expect instant results, whether we’re gaming, editing videos, or just browsing the web. Slow processing can lead to frustration and lost productivity. Caching plays a vital role in delivering that speed. It allows CPUs to access data quickly, which translates to faster application loading times, smoother multitasking, and an overall more responsive user experience. Without caching, our computers would feel sluggish, because even the fastest CPU would spend much of its time waiting on main memory. (Contrary to a common belief, a fresh restart does not leave the cache primed: the cache starts out empty, or “cold,” after a reboot and fills up again as programs run.)

Understanding Cache Operations

What is a Cache Hit?

Okay, so imagine the CPU needs some data. A cache hit is when the CPU checks the cache and finds exactly what it needs right there. This is a win because it’s super fast. Think of it like finding your keys right where you left them. No time wasted searching! This speeds up processing significantly. The more hits, the better the performance.

What is a Cache Miss?

Now, a cache miss is the opposite. The CPU looks in the cache, but the data isn’t there. Bummer! It then has to fetch the data from somewhere slower: the next cache level down or, in the worst case, main memory (RAM). This causes a delay. It’s like realizing you left your keys at work and having to drive all the way back to get them. Cache misses slow things down, so we want to avoid them as much as possible.
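To make hits and misses concrete, here is a minimal Python sketch of a toy cache that simply counts them. It assumes a tiny, fully associative cache with least-recently-used (LRU) eviction and treats each address as its own entry; real CPU caches work on fixed-size lines, are usually set-associative, and are managed entirely by hardware, so the class `ToyCache` and its methods are hypothetical and purely illustrative.

```python
from collections import OrderedDict


class ToyCache:
    """Hypothetical fully associative cache with LRU eviction (illustration only)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries = OrderedDict()  # keys are addresses, least recently used first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up an address; return True on a cache hit, False on a miss."""
        if address in self.entries:
            self.entries.move_to_end(address)  # mark as most recently used
            self.hits += 1
            return True
        # Miss: a real CPU would now fetch the data from a slower level.
        self.misses += 1
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        self.entries[address] = None
        return False


cache = ToyCache(capacity=2)
results = [cache.access(addr) for addr in [1, 2, 1, 3, 2]]
print(results)                   # [False, False, True, False, False]
print(cache.hits, cache.misses)  # 1 4
```

The last access misses because address 2 was evicted to make room for address 3: with a cache this small, even recently used data gets pushed out quickly.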

Optimizing Cache Efficiency

So, how do we get more cache hits and fewer misses? That’s where optimizing cache efficiency comes in. The hardware decides what actually goes into the cache, but the way a program accesses memory has a big influence on how often those lookups hit. Here are a few things that can help:

- Good Algorithms: Writing code that accesses data in a predictable pattern, such as walking through memory in order, helps the CPU’s hardware prefetcher load the data that will be needed next before it is requested.

- Data Structures: Using data structures that are cache-friendly can improve performance. For example, using arrays instead of linked lists can sometimes be faster, because arrays store data in contiguous memory locations while linked-list nodes can be scattered across memory.

- Cache-Aware Programming: This involves understanding how the cache works and writing code that takes advantage of its features. For example, you can try to keep frequently used data close together in memory to increase the chances of a cache hit. Understanding the cache hierarchy is also important.
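Here is a rough way to see why access order matters. This Python sketch assumes 64-byte cache lines (a common size), 8-byte array elements, and a deliberately tiny LRU cache of 16 lines, then counts misses when the same 1,024-element array is read in order versus in a strided order that jumps a full line between reads. The helper `count_line_misses` is hypothetical, and real hardware adds prefetching and associativity that this model ignores.

```python
from collections import OrderedDict

LINE_SIZE = 64      # bytes per cache line (assumed; a common size)
ELEMENT_SIZE = 8    # bytes per array element, e.g. a 64-bit integer
NUM_ELEMENTS = 1024
CACHE_LINES = 16    # tiny cache so the effect is easy to see


def count_line_misses(byte_addresses, cache_lines=CACHE_LINES):
    """Count misses in a toy LRU cache that stores whole lines, not single bytes."""
    cache = OrderedDict()
    misses = 0
    for addr in byte_addresses:
        line = addr // LINE_SIZE  # every byte in the same 64-byte block shares a line
        if line in cache:
            cache.move_to_end(line)
        else:
            misses += 1
            if len(cache) >= cache_lines:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = None
    return misses


per_line = LINE_SIZE // ELEMENT_SIZE   # 8 elements fit in one line
num_lines = NUM_ELEMENTS // per_line   # the array spans 128 lines

# Sequential: element 0, 1, 2, ... so neighbours share a line that is already loaded.
sequential = [i * ELEMENT_SIZE for i in range(NUM_ELEMENTS)]

# Strided: visit every element, but jump to a different line on every read,
# similar to walking a row-major 2D array column by column.
strided = [
    (line * per_line + offset) * ELEMENT_SIZE
    for offset in range(per_line)
    for line in range(num_lines)
]

print(count_line_misses(sequential))  # 128: one miss per line, then 7 hits
print(count_line_misses(strided))     # 1024: each line is evicted before it is reused
```

Both orders read exactly the same 1,024 elements; only the order changes. Linked-list nodes scattered around memory can behave much like the strided case, which is the reasoning behind the arrays-versus-linked-lists point above.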

Ultimately, optimizing cache efficiency is about minimizing the number of times the CPU has to go to main memory to get data. The more efficient the cache, the faster the computer runs. It’s a key part of making sure your computer is running smoothly.

The Processor Cache Hierarchy: L1, L2, and L3

Processors use a cache hierarchy to manage data access efficiently. This hierarchy typically consists of three levels: L1, L2, and L3, each designed with different priorities in mind. Think of it like a tiered storage system, where the most frequently accessed data is stored closest to the CPU for quick retrieval. Let’s break down each level.

L1 Cache: The Fastest Tier

L1 cache is the fastest and smallest cache memory in the CPU. It’s located directly within the CPU core, allowing for extremely low-latency data access. Because of its speed, L1 cache is used to store the most frequently accessed data, like loop variables or the results of recent calculations.

- Smallest capacity, typically tens of KB per core.

- Lowest latency, providing the quickest access times.

- Stores instructions and data that the CPU is actively using.

L2 Cache: Balancing Speed and Capacity

L2 cache is larger than L1 but slightly slower. It acts as a secondary storage area for data that isn’t accessed quite as often as the data in L1 cache, but still needs to be readily available. L2 cache can be either per-core or shared between cores, depending on the CPU architecture. It’s a compromise between speed and capacity, offering a larger storage space without sacrificing too much performance.

- Larger capacity than L1, usually from a few hundred KB to a couple of MB per core.

- Slightly higher latency than L1, but still faster than main memory.

- Can be either per-core or shared, depending on the CPU design.

L3 Cache: The Shared Resource

L3 cache is the largest and slowest of the three cache levels. It’s typically shared among the CPU cores, providing a common pool of memory for data that might be needed by multiple cores. While it’s slower than L1 and L2, L3 cache is still significantly faster than accessing main system memory (RAM). It stores data that is less frequently used but still important for overall system performance.

- Largest capacity, often several MB to tens of MB, shared by the cores.

- Highest latency among the cache levels, but still faster than RAM.

- Stores data that is shared or less frequently accessed by the cores.

Here’s a table summarizing the key differences:

| Feature | L1 Cache | L2 Cache | L3 Cache |
|---|---|---|---|
| Size | Smallest | Larger | Largest |
| Speed | Fastest | Slower | Slowest |
| Location | Within each CPU core | Per core or shared, depending on design | Shared by the cores |
| Data Stored | Most frequently used | Less frequently used | Shared or less frequently used |
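The lookup order can be sketched as a chain of toy caches. This Python model rests on simplifying assumptions: each level is a small LRU cache of line numbers, a line found in a slower level is copied into the faster levels that missed, and each access is charged only the approximate access time of the level that served it, using the rough figures from the latency table later in this article (1, 3, 10, and 100 ns). The class `CacheLevel`, the function `access`, and the tiny capacities are hypothetical, chosen so the trace is easy to follow; real hierarchies also differ in inclusion policy and associativity.

```python
from collections import OrderedDict

RAM_LATENCY_NS = 100  # rough figure from this article's latency table


class CacheLevel:
    """One toy cache level: a fixed number of lines with LRU eviction."""

    def __init__(self, name, capacity, latency_ns):
        self.name = name
        self.capacity = capacity
        self.latency_ns = latency_ns
        self.lines = OrderedDict()

    def lookup(self, line):
        """Return True if the line is present, refreshing its LRU position."""
        if line in self.lines:
            self.lines.move_to_end(line)
            return True
        return False

    def fill(self, line):
        """Insert a line, evicting the least recently used one if the level is full."""
        if line in self.lines:
            self.lines.move_to_end(line)
            return
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)
        self.lines[line] = None


def access(levels, line):
    """Check L1, then L2, then L3, then RAM; return (source, latency in ns)."""
    for i, level in enumerate(levels):
        if level.lookup(line):
            for faster in levels[:i]:
                faster.fill(line)  # copy into the faster levels that missed
            return level.name, level.latency_ns
    for level in levels:
        level.fill(line)  # data came from RAM; keep a copy at every level
    return "RAM", RAM_LATENCY_NS


levels = [CacheLevel("L1", 2, 1), CacheLevel("L2", 4, 3), CacheLevel("L3", 8, 10)]
trace = [0, 1, 0, 2, 3, 0]
results = [access(levels, line) for line in trace]
for line, result in zip(trace, results):
    print(line, result)
```

In the final access, line 0 has already been pushed out of the two-line L1 but is still in L2, so it is served in about 3 ns instead of requiring a roughly 100 ns trip to RAM.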

Distinguishing Processor Cache from Buffers

Defining CPU Buffers

Okay, so you know how a cache works – it’s all about storing frequently used data for quick access. But what about buffers? Think of CPU buffers as temporary holding zones. They manage the flow of data between different components that operate at different speeds. Imagine a super-fast processor trying to talk to a much slower hard drive. The buffer acts as a middleman, preventing the processor from being bogged down waiting for the hard drive to catch up. It’s like a waiting room for data, ensuring a smoother, more consistent flow.

How Caches and Buffers Complement Each Other

Caches and buffers work together, but they tackle different problems. Caches are about speed – making frequently accessed data readily available. Buffers are about flow control – managing the rate at which data moves between components. They’re both essential for overall system performance, but they play distinct roles. You can think of it like this:

- Caches reduce latency by storing frequently used information.

- Buffers prevent bottlenecks by managing data flow.

- Both contribute to minimizing CPU idle time.
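The difference in behavior shows up clearly in code. Below is a minimal Python sketch of a generic bounded FIFO buffer, not a model of any specific hardware buffer (the class name `BoundedBuffer` is hypothetical): data leaves in the order it arrived, each item is handed on exactly once, and when the buffer is full the producer is told to wait. A cache, by contrast, keeps copies of data around in case they are needed again.

```python
from collections import deque


class BoundedBuffer:
    """Generic fixed-capacity FIFO buffer (illustrative, not a hardware model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()

    def put(self, item):
        """Accept an item if there is room; return False if the producer must wait."""
        if len(self.items) >= self.capacity:
            return False
        self.items.append(item)
        return True

    def get(self):
        """Hand the oldest item to the consumer, or None if the buffer is empty."""
        return self.items.popleft() if self.items else None


buf = BoundedBuffer(capacity=3)
accepted = [buf.put(chunk) for chunk in ["a", "b", "c", "d"]]
print(accepted)      # [True, True, True, False]: "d" has to wait
print(buf.get())     # a (first in, first out)
print(buf.put("d"))  # True: the consumer freed a slot
```

Nothing here is kept for reuse: once the consumer takes an item, it is gone from the buffer. That flow-control role is what separates a buffer from a cache.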


Impact on Data Handling

Without buffers, you’d see a lot more stuttering and delays, especially when dealing with tasks that involve a lot of data transfer, like video editing or large file transfers. The CPU would constantly be waiting for data, leading to significant performance degradation. Caches, on the other hand, ensure that the CPU doesn’t have to constantly retrieve the same data from slower main memory. They work in tandem to keep things running smoothly. For example, in gaming, buffers ensure that player inputs are handled without delay, while caches store critical game data like physics and AI routines for quick access.

The Critical Role of Processor Cache in Performance

Processor cache is super important for how well your computer runs. It’s all about making things faster and smoother. Think of it like this: your CPU is the chef, and the main memory is the pantry. The cache? That’s the spice rack right next to the stove. The closer the spices (data) are, the faster the chef (CPU) can cook (process).

Minimizing CPU Idle Time

CPUs are fast, really fast. But they can spend a lot of time waiting for data. This waiting is often called "idle time" (or stall time), and it’s a huge waste. At a clock speed of 3 GHz, for example, a 100-nanosecond trip to main memory lasts about 300 clock cycles, much of which the CPU may spend stalled. Cache memory cuts this idle time by keeping frequently used data close to the CPU. A good cache setup means less waiting and more doing.

Reducing Data Latency

Latency is just a fancy word for delay. When the CPU needs data, it has to go get it from somewhere. The further away that "somewhere" is, the longer it takes. Cache reduces this distance. It’s like having a shortcut. Instead of going all the way to the main memory (RAM) every time, the CPU checks the cache first. If the data is there (a "cache hit"), it’s much faster. If not (a "cache miss"), it still has to go to RAM, but the cache helps minimize those trips. Here’s a simple comparison:

| Memory Type | Access Time (approx.) |
|---|---|
| L1 Cache | 1 nanosecond |
| L2 Cache | 3 nanoseconds |
| L3 Cache | 10 nanoseconds |
| RAM | 100 nanoseconds |
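One standard way to combine these numbers is average memory access time (AMAT): AMAT = hit time + miss rate × miss penalty, where a level’s miss penalty is the average cost of going to the next level down. The Python sketch below applies that formula level by level using the approximate times from the table. The miss rates (10% for L1, 50% for L2, 30% for L3, each measured among the accesses that reach that level) are made-up, illustrative values, not measurements; real miss rates depend heavily on the workload. The function name `average_access_time` is hypothetical.

```python
def average_access_time(levels, memory_ns):
    """AMAT for a cache hierarchy.

    levels: (hit_time_ns, local_miss_rate) pairs ordered from L1 outward, where
    local_miss_rate is the fraction of accesses *reaching* that level that miss.
    memory_ns: cost of going all the way to main memory.
    """
    cost = memory_ns
    # Work inward from RAM: each level's miss penalty is the cost of the level below.
    for hit_time_ns, miss_rate in reversed(levels):
        cost = hit_time_ns + miss_rate * cost
    return cost


# Access times from the table; the miss rates are hypothetical.
hierarchy = [(1, 0.10), (3, 0.50), (10, 0.30)]
amat = average_access_time(hierarchy, memory_ns=100)
print(f"{amat:.1f} ns with caches vs 100 ns without")  # 3.3 ns with caches vs 100 ns without
```

By hand: an access that reaches L3 costs 10 + 0.3 × 100 = 40 ns on average, one that reaches L2 costs 3 + 0.5 × 40 = 23 ns, and every access starts at L1, costing 1 + 0.1 × 23 = 3.3 ns on average. Under these assumed miss rates, the hierarchy makes the typical access roughly 30 times cheaper than going straight to RAM.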

Enhancing Application Responsiveness

All of this adds up to better application responsiveness. When you click a button, you expect something to happen right away, right? That’s responsiveness. Cache helps make that happen. By reducing idle time and latency, applications feel snappier and more responsive. Think about gaming: a good cache setup can mean the difference between smooth gameplay and annoying lag. Caching layers are critical for modern computing, especially in resource-intensive tasks like gaming, scientific computing, and real-time data processing. Here are some ways cache improves responsiveness:

- Faster loading times for applications.

- Smoother multitasking between different programs.

- Improved performance in data-intensive tasks like video editing.

Real-World Impact of Processor Cache

Gaming Performance and Cache

Okay, so you’re a gamer, right? Ever wonder why some games run smooth as butter while others stutter like crazy? Processor cache is one piece of that puzzle. The cache helps the CPU quickly access frequently used game data, like object positions, physics state, and AI logic (textures and 3D models mostly live in the graphics card’s own memory). Without a decent cache, the CPU has to keep fetching data from slower main memory, which can show up as frame rate drops. Think of it like this: the bigger and faster your cache, the less time your CPU spends waiting around, and the more time it spends processing those sweet headshots.

Scientific Computing and Data Processing

It’s not just about games, though. Scientific computing and heavy-duty data processing also rely heavily on processor cache. Imagine running complex simulations or analyzing massive datasets. These tasks involve tons of calculations and repeated access to the same data. A well-optimized cache can dramatically reduce the time it takes to complete these tasks. For example, in weather forecasting, models need to access frequently used data about temperature, pressure, and wind speed. A good cache setup means those forecasts finish faster. It’s all about keeping that data close at hand for the CPU.
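One common way scientific code keeps data close at hand is loop tiling (also called blocking): processing a large array in small blocks that fit in cache before moving on. The Python sketch below is a toy model, not a benchmark: it counts misses in a hypothetical 32-line LRU cache (64-byte lines, 8-byte values) while transposing a 64×64 matrix stored row by row, once in the plain loop order and once in 8×8 tiles. The matrix size, tile size, cache size, and all function names are assumptions picked to make the effect easy to see.

```python
from collections import OrderedDict

LINE_SIZE = 64    # bytes per cache line (assumed)
ELEM = 8          # bytes per matrix value, e.g. a double
N = 64            # matrix is N x N, stored row by row (row-major)
TILE = 8          # tile edge; 8 doubles fill exactly one 64-byte line
CACHE_LINES = 32  # hypothetical, deliberately tiny cache
A_BASE, B_BASE = 0, N * N * ELEM  # source and destination arrays don't overlap


def misses(addresses):
    """Count misses in a toy LRU cache that holds CACHE_LINES whole lines."""
    cache, count = OrderedDict(), 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)
        else:
            count += 1
            if len(cache) >= CACHE_LINES:
                cache.popitem(last=False)
            cache[line] = None
    return count


def transpose_accesses(i, j):
    """Addresses touched by B[j][i] = A[i][j]: read A, then write B."""
    return [A_BASE + (i * N + j) * ELEM, B_BASE + (j * N + i) * ELEM]


def naive_order():
    for i in range(N):
        for j in range(N):
            yield from transpose_accesses(i, j)


def tiled_order():
    for ii in range(0, N, TILE):
        for jj in range(0, N, TILE):
            # Finish one TILE x TILE block (8 lines of A, 8 lines of B) before moving on.
            for i in range(ii, ii + TILE):
                for j in range(jj, jj + TILE):
                    yield from transpose_accesses(i, j)


print("naive:", misses(naive_order()))  # naive: 4608
print("tiled:", misses(tiled_order()))  # tiled: 1024
```

Both versions perform the same 4,096 reads and 4,096 writes. In the plain order, every write to B lands on a different line and that line is evicted before its neighbouring values are written; in the tiled order, each 8×8 block touches only 16 lines, which all fit in the toy cache at once, so every loaded line is fully used before it is evicted.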

Everyday Computing Efficiency

Even if you’re not a gamer or a scientist, processor cache affects your everyday computing experience. Think about opening your favorite apps, browsing the web, or editing documents. The cache helps your computer quickly load these programs and files, making everything feel snappier and more responsive. A computer with a better cache can handle multiple tasks at once without slowing down as much. It’s like having a super-organized desk – you can find what you need quickly and get things done faster. So, next time your computer feels quick, remember to thank the CPU caching system working behind the scenes.

Wrapping Things Up

So, that’s the deal with processor cache. It’s not some super complicated thing, but it really makes a difference in how fast your computer feels. Think of it like a little helper right next to the main brain of your computer, always ready with the stuff it needs most. When that helper is good at its job, everything just runs smoother. It’s pretty cool how these small, fast memory spots keep things moving along, making sure your computer isn’t just sitting around waiting for data. Knowing a bit about how it works can help you get why some computers just feel snappier than others. It’s all about getting that data where it needs to be, fast.

Frequently Asked Questions

What is a processor’s cache?

A processor’s cache is like a super-fast, small storage area right inside the computer’s main brain, the CPU. It holds information the CPU uses often so it doesn’t have to go all the way to the slower main memory (RAM) every time. Think of it like keeping your favorite snacks right next to you on the couch instead of walking to the kitchen for each one.

Why do computers need cache?

The main reason for cache is speed! Computers need to work really fast. If the CPU always had to wait for data from the main memory, everything would slow down a lot. Cache helps the CPU get to the information it needs almost instantly, making your computer feel much quicker and smoother.

What’s the difference between a cache hit and a cache miss?

A “cache hit” means the CPU found the information it was looking for right there in the cache. This is good because it’s super fast. A “cache miss” means the information wasn’t in the cache, so the CPU has to go to the slower main memory to get it. Too many misses can make your computer feel sluggish.

How many levels of cache are there?

There are usually three levels: L1, L2, and L3. L1 is the smallest and fastest, built into each CPU core. L2 is a bit bigger and slower than L1 but still very fast. L3 is the biggest and slowest of the caches, but it’s usually shared by all the cores. They work together to make sure the CPU always has quick access to data.

Is cache the same as a buffer?

While both help with data, they’re different. Cache stores data that the CPU might need again very soon, like a temporary memory. Buffers are more like waiting areas for data that’s moving from one place to another, helping to smooth out the flow. They both make the computer run better, but in different ways.

How does cache affect how well my computer works?

Cache plays a huge role! For gaming, it can mean smoother gameplay and faster loading times. For big number crunching, it helps the computer process information much quicker. Even for everyday things like browsing the internet or opening apps, a good cache makes your computer feel snappier and more responsive.