Artificial intelligence, or AI, is really changing how doctors diagnose illnesses. It’s not science fiction anymore; it’s here, helping out in hospitals and clinics. AI tools can analyze medical images and patient data much faster than people can, flagging findings that might otherwise be missed. This can lead to quicker and more accurate diagnoses. We’re going to look at some of the top AI tools helping with medical diagnosis today, focusing on what each one does and the benefits it offers doctors and patients.

Key Takeaways

- AI in medical imaging helps doctors find problems faster and more accurately by looking at scans.

- Tools like IBM Watson for Oncology help doctors choose the best treatments for cancer patients by looking at lots of medical information.

- Some AI systems, like Mia for breast cancer, have been shown in studies to help find more cancers than human readers find on their own.

- It’s important that AI tools work well with the systems doctors already use and that patient data stays safe.

- While AI is powerful, doctors still need to use their own judgment to make sure diagnoses are right, especially in tricky cases.

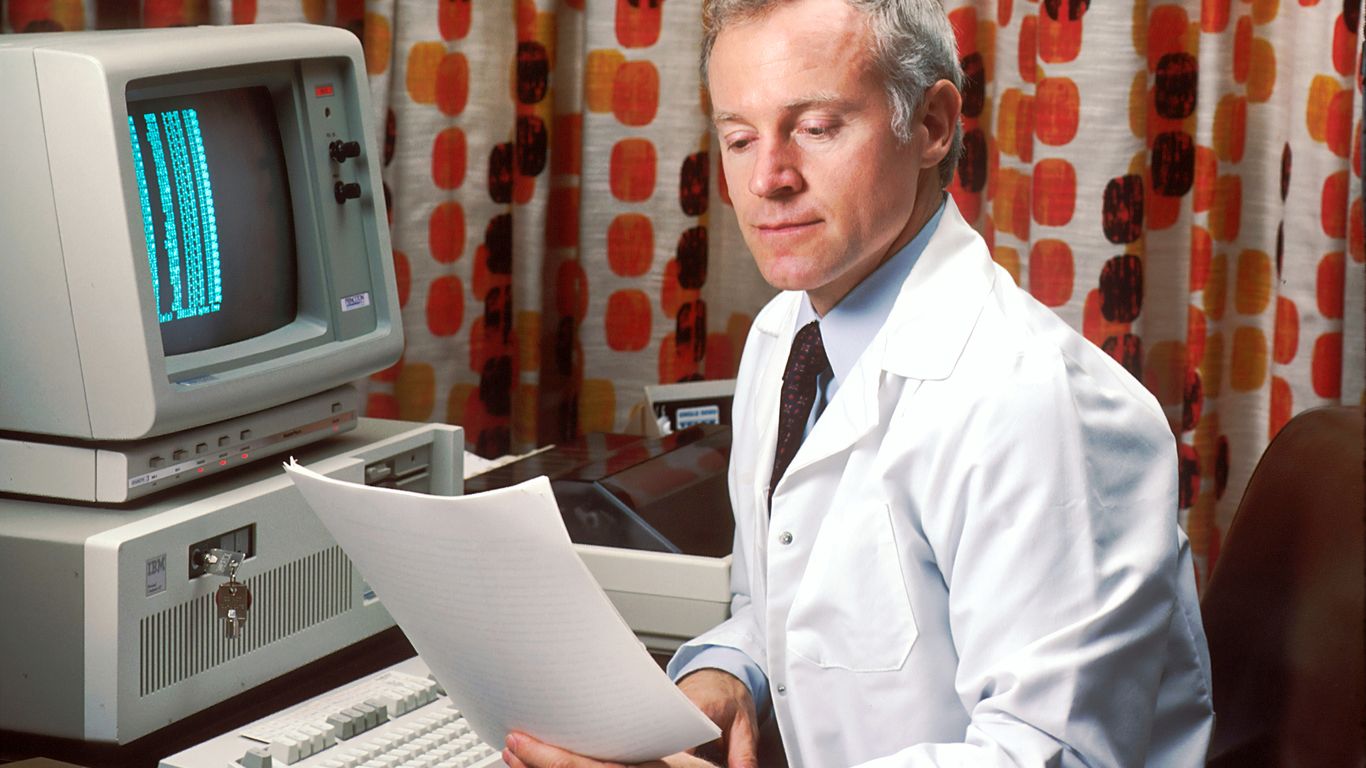

IBM Watson For Oncology

IBM Watson for Oncology is a system designed to help doctors figure out the best treatment plans for cancer patients. It looks at a lot of information, like a patient’s medical history, research papers, and current medical guidelines. Then, it suggests treatment options that are backed by evidence. The idea is to give oncologists another tool to consider, especially when dealing with complex cases. It’s meant to work alongside a doctor’s own knowledge, not replace it.

Watson for Oncology aims to personalize cancer treatment by analyzing vast amounts of data.

Here’s a bit about how it works:

- Data Input: It takes in patient data, including medical records, lab results, and genetic information.

- Information Analysis: It processes this data alongside a huge library of medical literature, clinical trials, and treatment guidelines.

- Recommendation Generation: Based on the analysis, it presents potential treatment options, often with a confidence score and the evidence supporting each choice.
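IBM hasn't published exactly how Watson for Oncology weighed its evidence. The Python sketch below only illustrates the general shape of the recommendation step, under our own assumptions. Each option carries hypothetical evidence items with made-up weights. "Confidence" is simply that option's share of the total weight, which is not IBM's actual method.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    source: str    # hypothetical guideline, trial, or paper identifier
    weight: float  # assumed 0..1 strength of support for the option


@dataclass
class TreatmentOption:
    name: str
    evidence: list = field(default_factory=list)  # list of Evidence items


def rank_options(options):
    """Rank options by summed evidence weight.

    Returns (name, confidence, sources) tuples, best first. "Confidence" is each
    option's share of the total weight: an illustrative choice, not IBM's method.
    """
    totals = {o.name: sum(e.weight for e in o.evidence) for o in options}
    grand_total = sum(totals.values()) or 1.0  # avoid dividing by zero
    ranked = sorted(options, key=lambda o: totals[o.name], reverse=True)
    return [
        (o.name, totals[o.name] / grand_total, [e.source for e in o.evidence])
        for o in ranked
    ]


if __name__ == "__main__":
    options = [
        TreatmentOption("Regimen B", [Evidence("Trial Z", 0.3)]),
        TreatmentOption("Regimen A", [Evidence("Guideline X", 0.6), Evidence("Trial Y", 0.3)]),
    ]
    for name, confidence, sources in rank_options(options):
        print(f"{name}: {confidence:.2f} based on {', '.join(sources)}")
```

Here Regimen A comes out first with a confidence of about 0.75, because its two evidence items carry three quarters of the total weight. A real system would also have to handle conflicting evidence and patient-specific contraindications, which this sketch ignores.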

While it’s a powerful tool, it’s not perfect. Critics have pointed to the need for more independent clinical testing and to potential biases in its recommendations. Integrating it into a busy clinic also raises questions about data privacy and how it fits ethically into patient care.

It’s important to remember that AI like Watson is a tool to assist, not to make decisions independently. The final call always rests with the medical professionals who understand the full context of the patient’s situation.

IDx-DR

IDx-DR is a pretty big deal in the world of AI for eye health. It’s an autonomous system, meaning it can deliver a screening result without a clinician having to interpret the images. In 2018 it became the first autonomous AI diagnostic system authorized by the FDA. Its main job is to spot diabetic retinopathy, a serious eye condition that can lead to blindness if not caught early. This is super important because diabetic retinopathy often causes no symptoms at first, so people might not know they have it until it’s quite advanced.

What IDx-DR does is analyze images of the back of your eye, taken with a special camera. It looks for tiny signs like small bleeds or swelling that indicate the disease. Because it can work without a specialist needing to review every single image, it can really speed things up and make screening more accessible, especially in places where there aren’t many eye doctors around. This could mean more people get checked and treated sooner, potentially saving their sight. It’s a good example of how AI can help manage chronic conditions and improve access to care.

The system has been tested in clinical studies, including a trial in primary care clinics, and performed well at detecting more-than-mild diabetic retinopathy. Getting FDA authorization was a major step, showing that an autonomous AI tool could meet regulatory standards for helping doctors and patients.
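To make "autonomous" concrete, here is a minimal Python sketch of the kind of decision logic such a screening system ends in. The thresholds, the score inputs, and the exact wording of the three outcomes are our assumptions. They are loosely modelled on how IDx-DR's results are commonly described: refer, rescreen in 12 months, or retake the images. This is not the device's actual algorithm.

```python
from enum import Enum


class ScreeningResult(Enum):
    RETAKE = "Image quality insufficient: retake images"
    REFER = "More than mild diabetic retinopathy detected: refer to an eye care professional"
    RESCREEN = "Negative for more than mild diabetic retinopathy: rescreen in 12 months"


# Hypothetical operating points; a cleared device fixes its own during validation.
MIN_IMAGE_QUALITY = 0.5
REFERRAL_THRESHOLD = 0.5


def screen(image_quality_scores, disease_score):
    """Turn model outputs into one autonomous result, with no clinician in the loop.

    image_quality_scores: one assumed 0..1 quality score per retinal photo.
    disease_score: an assumed exam-level 0..1 likelihood of more-than-mild DR.
    """
    # Never grade an exam the model cannot see clearly.
    if not image_quality_scores or min(image_quality_scores) < MIN_IMAGE_QUALITY:
        return ScreeningResult.RETAKE
    if disease_score >= REFERRAL_THRESHOLD:
        return ScreeningResult.REFER
    return ScreeningResult.RESCREEN


if __name__ == "__main__":
    print(screen([0.9, 0.8], 0.72).value)
```

The key design choice is checking image quality first. An autonomous system has no specialist to catch an unreadable photo, so it has to decline to grade rather than guess.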

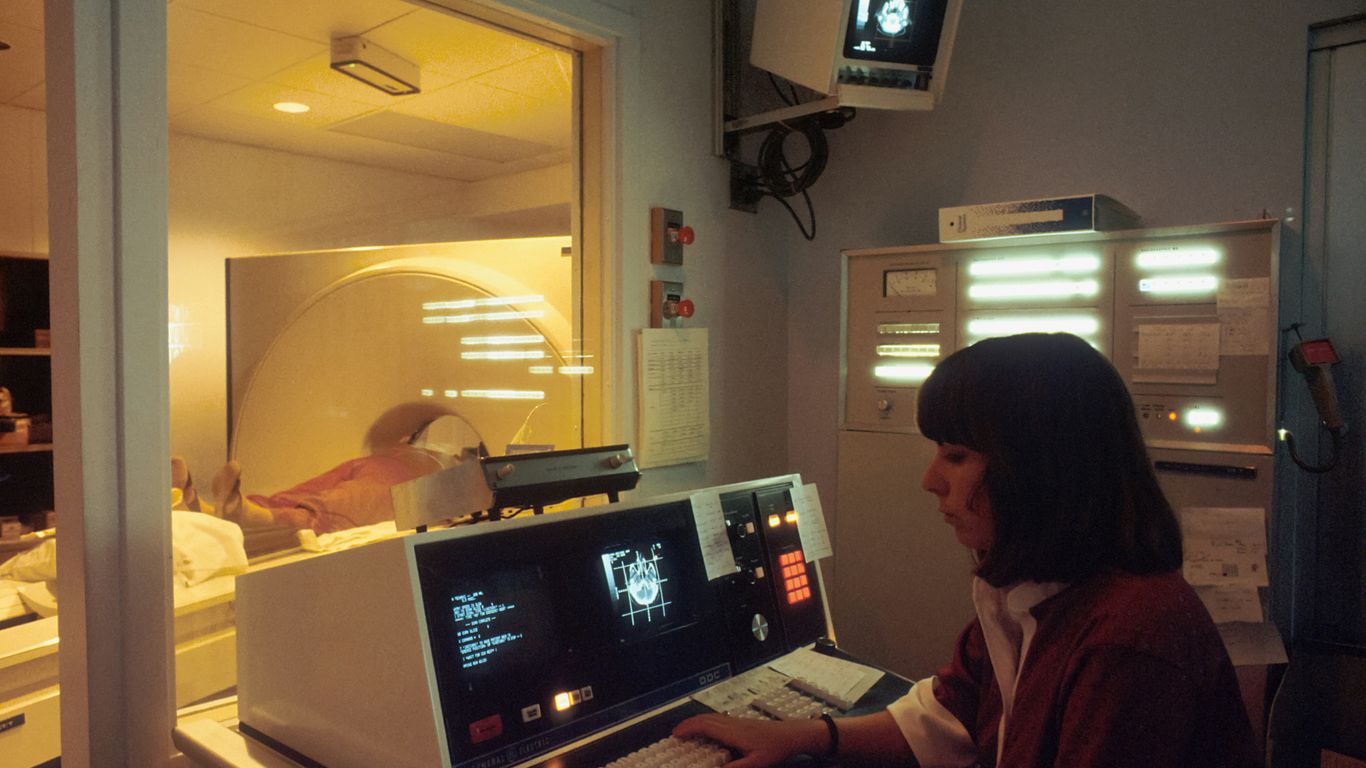

Zebra Medical Vision

Zebra Medical Vision is a company that’s really making waves in how we use AI for looking at medical images. They’ve built a bunch of AI tools that can help out in different areas of medicine, like radiology and even heart health. What’s pretty neat about their stuff is how fast and accurate it is when it comes to checking out X-rays, CT scans, and MRIs. They use deep learning models, loosely inspired by how the brain learns, to spot things that might be wrong. This helps doctors and other medical folks make better decisions about what to do next.

They have tools that can find broken bones, spot heart problems, check out livers, and even look for breast cancer in mammograms. The idea is to catch these things earlier so people can get help sooner, which is always a good thing. It’s like having an extra set of super-powered eyes looking at the scans. They’re really trying to make medical imaging work better for everyone involved.

The goal is to make AI tools that are easy to use and fit right into how doctors already work. This means less hassle and more time for actual patient care.

It’s important to pick the right AI partner, and Zebra Medical Vision emphasizes making sure its tools actually help patients and doctors, not just showcasing the technology. When you’re considering tools like these, look for a company with a good reputation and a track record of working well in healthcare.

Arterys Cardio AI

Arterys Cardio AI, which you might now see under the name Tempus Pixel Cardio, is a really interesting AI tool focused on heart health. It uses advanced artificial intelligence, specifically deep learning, to look at cardiac MRI scans. Think of it as a super-smart assistant that can automatically measure all sorts of important things about your heart, like how well it’s pumping blood and the condition of your heart tissue. This helps doctors get a clearer picture of what’s going on with a patient’s heart much faster than before.

One of the big pluses here is how precise and consistent the measurements are. It means doctors can get a really thorough assessment of heart structure and function without a lot of guesswork. The whole system is cloud-based, which makes it pretty easy to fit into a doctor’s daily routine and allows different specialists to collaborate easily. It’s designed to be user-friendly, so interpreting those complex heart scans becomes a bit more straightforward.

The goal is to provide doctors with better information so they can make quicker, more informed decisions about patient care.

This kind of technology is really changing how we look at diagnosing heart conditions. It’s not about replacing doctors, but giving them better tools to do their jobs. It’s pretty amazing how AI is starting to help out in specialized areas like cardiology, making complex analysis more accessible.

Here’s a quick look at what it does:

- Automates measurement of cardiac parameters.

- Analyzes cardiac MRI scans for function and blood flow.

- Provides precise and reproducible results.

- Integrates into clinical workflows via a cloud platform.
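Tools like this automate measurements that cardiologists would otherwise derive by tracing the heart chamber on each MRI slice. One standard example is left ventricular ejection fraction, EF = (EDV - ESV) / EDV × 100, where EDV and ESV are the end-diastolic and end-systolic volumes. The Python sketch below computes EF from traced slice areas using the summation-of-discs method. The slice areas are made-up numbers. How Arterys or Tempus Pixel Cardio computes its values internally is not described here.

```python
def volume_ml(slice_areas_mm2, slice_thickness_mm):
    """Chamber volume by summation of discs.

    Adds up the cavity area traced on each contiguous short-axis slice and
    multiplies by slice thickness (assumes no gap between slices).
    1 mL = 1000 mm^3.
    """
    return sum(slice_areas_mm2) * slice_thickness_mm / 1000.0


def ejection_fraction(edv_ml, esv_ml):
    """Left ventricular ejection fraction in percent: (EDV - ESV) / EDV * 100."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return (edv_ml - esv_ml) / edv_ml * 100.0


if __name__ == "__main__":
    # Made-up traced areas (mm^2) for six 10 mm slices, base to apex.
    edv = volume_ml([2000, 2200, 2100, 1900, 1500, 800], 10)  # 105.0 mL
    esv = volume_ml([1000, 1100, 1000, 900, 600, 200], 10)    # 48.0 mL
    print(f"EDV {edv:.1f} mL, ESV {esv:.1f} mL, stroke volume {edv - esv:.1f} mL")
    print(f"EF {ejection_fraction(edv, esv):.1f}%")
```

The hard part that AI automates is the tracing itself. Once the areas are segmented consistently, the arithmetic is trivial, which is where the reproducibility benefit comes from.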

ENDEX By Enlitic

ENDEX by Enlitic is a pretty neat AI platform for looking at medical images. It uses advanced deep learning to go through X-rays, CT scans, MRIs, and even ultrasounds. The idea is that it can spot problems and give doctors a clearer picture, faster. This could mean catching diseases earlier and helping figure out the best way to treat people.

What’s cool about ENDEX is that it’s designed to be easy to use. You can upload images, get reports from the AI, and even share them with other doctors. It’s meant to fit right into how hospitals and clinics already work, so it’s not some complicated system that nobody can figure out.

ENDEX aims to make AI analysis accessible, helping healthcare providers get more insights from medical scans without needing a whole new set of specialized skills.

Here’s a quick look at what ENDEX can do:

- Detects abnormalities: It’s trained to find things like tumors, broken bones, or heart issues.

- Analyzes various scans: Works with X-rays, CTs, MRIs, and ultrasounds.

- Improves efficiency: Automates some of the more time-consuming parts of reading scans.

- Provides objective assessments: Offers consistent analysis, reducing human variability.

Mia For Breast Cancer

Mia is an AI tool specifically designed to help in the detection and diagnosis of breast cancer. It works by analyzing medical images, primarily mammograms, to identify potential signs of malignancy that might be missed by the human eye. The goal is to assist radiologists in making more accurate and timely diagnoses, which is super important for effective treatment.

Mia aims to improve the accuracy and efficiency of breast cancer screening.

This AI system uses advanced machine learning algorithms, trained on vast datasets of mammographic images. By learning from thousands of cases, Mia can recognize subtle patterns and anomalies associated with breast cancer. It’s not meant to replace a radiologist, but rather to act as a second pair of eyes, flagging areas of concern for closer review.

Here’s a general idea of how it functions:

- Image Analysis: Mia processes digital mammograms, looking for specific features like masses, calcifications, and architectural distortions.

- Risk Assessment: It can provide a probability score for malignancy for suspicious findings.

- Workflow Integration: The tool is designed to fit into existing radiology workflows, helping to prioritize cases and reduce reading times.

While AI like Mia is showing a lot of promise in medical imaging, it’s still a developing field. The accuracy and effectiveness can depend on the quality of the data it’s trained on and how it’s implemented in a clinical setting. Continuous updates and validation are key to ensuring these tools provide real benefit to patients and doctors alike.
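Validation usually comes down to two numbers at a chosen score threshold. Sensitivity is how many cancers a tool catches, and specificity is how many healthy cases it correctly leaves alone. The Python sketch below computes both from a handful of invented scores and labels. It does not reflect Mia's actual performance or operating point.

```python
def sensitivity_specificity(scores, has_cancer, threshold):
    """Sensitivity and specificity when cases scoring >= threshold are flagged."""
    tp = fp = tn = fn = 0
    for score, positive in zip(scores, has_cancer):
        flagged = score >= threshold
        if flagged and positive:
            tp += 1  # cancer correctly flagged
        elif flagged:
            fp += 1  # healthy case flagged (false alarm)
        elif positive:
            fn += 1  # cancer missed
        else:
            tn += 1  # healthy case correctly left alone
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


if __name__ == "__main__":
    # Invented per-case malignancy scores and ground truth (e.g. from biopsy/follow-up).
    scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.7]
    truth = [True, True, True, False, False, False]
    for t in (0.5, 0.35):
        sens, spec = sensitivity_specificity(scores, truth, t)
        print(f"threshold {t}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

In this toy set, lowering the threshold from 0.5 to 0.35 catches the third cancer without adding a false alarm. On real data, moving the threshold usually trades one metric against the other, which is why operating points need validating on representative screening populations.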

It’s pretty neat how AI can help spot things that are hard to see, potentially leading to earlier detection and better outcomes for people dealing with breast cancer.

Ramsoft PowerServer™

Ramsoft’s PowerServer™ is a pretty interesting platform if you’re looking to integrate AI into your medical imaging setup. It’s designed to work with your existing systems, like PACS and EHRs, which is a big deal because it means less disruption to how your team already works. Think of it as a way to make your current imaging software smarter by adding AI capabilities directly into the workflow. This means radiologists can get AI-driven insights without having to jump between different programs, which can really speed things up.

One of the main points is how it helps with interoperability. It’s built to connect with various systems, allowing for smoother data exchange. This is important for getting information to the right people at the right time, helping with quicker decisions. Plus, it’s set up to be secure and compliant with things like HIPAA, which is obviously a must in healthcare.

The goal here isn’t to replace radiologists, but to give them better tools. AI integrated through platforms like PowerServer™ can help flag potential issues or speed up the analysis of routine scans, freeing up experts to focus on more complex cases or patient interaction. It’s about augmenting what they do.
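One common way AI output speeds up reading is by reordering the worklist so flagged studies are read first. PowerServer™'s actual integration interface isn't covered here, so the Python sketch below is generic. The study IDs and the boolean `ai_flagged` input are hypothetical. A priority queue keeps flagged studies ahead of the rest while preserving arrival order within each group.

```python
import heapq
import itertools


class Worklist:
    """Radiology reading queue where AI-flagged studies are read first.

    Hypothetical sketch: study IDs and the ai_flagged input stand in for
    whatever a real PACS/AI integration would supply.
    """

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker: first in, first out

    def add(self, study_id, ai_flagged):
        priority = 0 if ai_flagged else 1  # lower value is read sooner
        heapq.heappush(self._heap, (priority, next(self._arrival), study_id))

    def next_study(self):
        """Pop the next study to read, or return None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


if __name__ == "__main__":
    wl = Worklist()
    for study, flagged in [("CT-001", False), ("CR-002", True), ("MR-003", False), ("CT-004", True)]:
        wl.add(study, flagged)
    print([wl.next_study() for _ in range(4)])
```

The arrival counter matters. Without it, two studies with equal priority would be compared by ID, and routine cases would no longer be read in the order they arrived.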

Here are some of the benefits you might see:

- Faster analysis: AI can process images more quickly than manual methods.

- Improved accuracy: AI tools can help detect subtle findings that might be missed.

- Streamlined workflows: By integrating AI directly, it reduces the need for extra steps or software.

- Scalability: The platform is designed to grow with your practice, whether you’re a small clinic or a large hospital.

When you’re looking at AI tools, it’s always a good idea to see how they perform in a real-world setting. Ramsoft often suggests trying out demo environments so you can test things like image recognition accuracy and how well it fits with your specific setup before committing. This helps make sure the AI actually helps your team and doesn’t just add another layer of complexity.

Ramsoft OmegaAI®

Ramsoft’s OmegaAI® is another piece of their puzzle for speeding up medical imaging. It works alongside their PowerServer™ platform, aiming to make the whole process from getting an image to understanding what it means much quicker. Think of it as a smart assistant for radiologists, using AI to look at scans and help spot things that might be easy to miss.

It’s built to handle advanced image recognition and processing, which means it can pick out subtle details in scans. This can really help in catching diseases earlier or getting a clearer picture of what’s going on with a patient. The idea is that by using AI like OmegaAI®, you can get more consistent results and reduce the time it takes to get a diagnosis.

The accuracy of AI in medical imaging is getting better all the time, especially when it’s trained on lots of different kinds of data. But it’s still really important that a human expert, like a radiologist, is looking at the results too. AI is there to help, not to take over the decision-making process entirely.

Here’s a quick look at what OmegaAI® aims to do:

- Improve diagnostic speed: By automating parts of the scan analysis, it helps speed things up.

- Enhance accuracy: AI can help catch subtle findings that might be overlooked.

- Streamline workflows: It’s designed to fit into existing systems, making things smoother.

- Support clinical decisions: Provides data-driven insights to help doctors make better choices.

As with PowerServer™, it’s worth trying OmegaAI® in a demo before committing. That shows whether its image recognition and processing speeds suit your practice and how well it works with your current setup.

While AI can be a big help, it’s important to remember that it’s still a tool. It works best when it’s used by skilled professionals who can interpret the results and make the final call. Ramsoft seems to be focused on making sure their AI solutions work with radiologists, not against them.

Explainable AI (XAI)

When we talk about AI in medicine, especially for diagnosis, a big question pops up: how does it actually reach its answers? That’s where Explainable AI, or XAI, comes in. Think of it as the AI showing its work, like a student on a math test, so you can see how it got to a certain conclusion. This transparency is super important for doctors and patients to trust the AI’s suggestions.

Without XAI, an AI might just say, ‘This scan shows cancer.’ But XAI tries to show why it thinks that. It might highlight specific areas on an image that look unusual or point to patterns it recognized from its training data. This helps medical professionals understand the AI’s reasoning, which is key when making real patient decisions.
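One simple XAI technique that produces this kind of highlighting is occlusion sensitivity. You cover part of the image, re-run the model, and see how much its score drops. The Python sketch below applies it to a toy "model" that only looks at the bottom-right corner of a 4×4 image. The model and image are stand-ins. Real tools may use other methods, such as gradient-based saliency maps.

```python
def occlusion_map(image, model, patch=2, fill=0.0):
    """Occlusion sensitivity: how much does the score drop when each patch is hidden?

    image: 2D list of pixel values; model: any callable returning a score.
    Each pixel in the returned map holds the drop for the patch covering it,
    so larger values mark regions the model relied on more.
    """
    base = model(image)
    h, w = len(image), len(image[0])
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - model(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def corner_model(image):
    """Toy stand-in 'model' whose score depends only on the bottom-right 2x2 region."""
    return sum(image[r][c] for r in (2, 3) for c in (2, 3))


if __name__ == "__main__":
    image = [[1.0] * 4 for _ in range(4)]
    for row in occlusion_map(image, corner_model):
        print(row)
```

Only the bottom-right patch lights up in the resulting map. That is exactly the region the toy model depends on, which is the kind of "why" a radiologist would want to see overlaid on a real scan.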

Here’s a bit about why XAI matters:

- Builds Trust: Seeing how an AI reaches a diagnosis makes it easier for doctors to rely on it.

- Identifies Errors: If an AI makes a mistake, XAI can help pinpoint where the reasoning went wrong, allowing for fixes.

- Improves Learning: By understanding the AI’s process, doctors can also learn and refine their own diagnostic skills.

- Meets Regulations: As AI becomes more common, rules will likely require these systems to be understandable.

The goal isn’t to replace doctors, but to give them better tools. XAI helps make AI a helpful assistant rather than a mysterious black box. It’s about making sure the technology works with human expertise, not just alongside it.

Right now, XAI is still developing, but it’s a big deal for making AI a safe and reliable part of healthcare.

Deep Learning And Advanced Neural Networks

When we talk about AI in medicine, deep learning and advanced neural networks are really where a lot of the exciting stuff is happening. These systems are built to learn from massive amounts of data, spotting patterns that humans might miss. Think of it like teaching a computer to see and understand medical images, but on a much grander scale.

What makes deep learning stand out is its ability to automatically figure out the important features in data. Unlike older methods where people had to tell the AI what to look for, deep learning models can discover these characteristics on their own. This is a big deal, especially with medical images like X-rays, CT scans, and MRIs, which are packed with subtle details.

Some of the specific types of networks you’ll hear about include:

- Convolutional Neural Networks (CNNs): These are fantastic for image analysis. They’re good at picking out visual patterns, making them useful for tasks like identifying tumors or other abnormalities in scans.

- Recurrent Neural Networks (RNNs): These are better suited for sequential data, like patient records over time or genetic sequences.

- Graph Convolutional Networks (GCNs): These are used to analyze data that can be represented as a network or graph, like how different parts of an image relate to each other, which can be helpful in complex scans.
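The core CNN operation is easier to picture with numbers. The Python sketch below slides a small filter over a tiny image and then applies ReLU. Deep learning libraries call this sliding step a convolution, although it is technically a cross-correlation. The hand-picked filter responds to a dark-to-bright vertical edge. In a real CNN the filter values are learned from data rather than chosen by hand, and many such layers are stacked.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (strictly, cross-correlation:
    the kernel is not flipped). Output shrinks by kernel size - 1 in each direction."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU nonlinearity: negative responses become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


if __name__ == "__main__":
    # Dark left half (0), bright right half (1): one vertical edge.
    image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]  # hand-picked; a trained CNN learns its kernels
    print(relu(conv2d(image, edge_kernel)))
```

The filter responds strongly only in the middle column, where the image goes from dark to bright. Stacking many such learned filters, each followed by a nonlinearity, gives the layered structure that lets deep networks build up from edges to shapes to findings.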

The power of these networks lies in their layered structure, allowing them to process information in stages, building up a more complex understanding with each layer. This is what allows them to achieve such high accuracy in tasks that were once thought to be exclusively human domains.

It’s not just about identifying diseases, either. These advanced AI models are also being used to predict patient outcomes, help with drug discovery, and even personalize treatment plans. Especially when trained on huge datasets, they often outperform traditional methods. On some narrow, well-defined tasks, they have matched or exceeded human experts.

Wrapping Up: Your AI Toolkit for Medical Diagnosis

So, we’ve looked at some pretty cool AI tools that can help with medical diagnosis. These programs are getting better all the time, helping doctors spot problems faster and more accurately. Think of them as smart assistants, not replacements for your doctor, of course. They can sift through scans and patient data to find things that might be missed, especially when things get busy. While the technology is impressive, remember that human doctors are still key. They bring the experience and understanding that AI can’t quite replicate yet. As these tools keep improving, they’ll likely become even more important in how we approach healthcare, making things quicker and more precise for everyone involved. It’s an exciting time to see how AI continues to shape medical care for the better.